5th June 2008 A question regarding the ability to transfer of data between SAS and R set me off on a spot of investigation a while back and I have always planned to share the results of my labours. Once I managed to locate the required documentation, things became clearer with further inspection. Functions from the foreign package seem to offer the most from the data import and export point of view so they’re what I’ll be featuring in this posting.

I’ll start with importing and using the read.ssd function makes life so much easier for getting SAS data into R. I discovered that the foreign package may not be loaded by default but you can determine this easily by issuing the following command:

search()

If “package:foreign” isn’t in the list, then you need to issue the following function call:

library(foreign)

Of course, if the foreign package isn’t installed, none of this will work. It should live in the library sub-folder of the main R installation directory but if it isn’t there, then downloading the relevant binary package from CRAN is in order. Assuming that all is installed, then a command like the following will perform the needful:

read.ssd("c:/data","data1",sascmd="C:/Program Files/SAS Institute/SAS/V8/sas.exe")

This creates a temporary SAS program that converts the SAS data set into a transport file for reading by another R function that is called in the background, read.xport. Form my experience, it all seems to work fairly seamlessly.

To get data out of R and into SAS is a multi-stage process, even with the foreign package. There are other ways but using the write.foreign seems more useful than most and here’s an example function call:

write.foreign(data1,"C:/test.txt","C:/test.sas",package="SAS",dataname="data1",validvarname="V7")

No SAS data sets are created at this stage but a text file is generated along with a SAS program for converting it into a data set. Running the SAS program is a separate step that follows the creation of the two files. Even if it is less streamlined than read.ssd, write.foreign does make easier to transfer data into SAS than having to write a program from scratch to read in write.table output.

In summary, R can neither read or write SAS data sets by itself so you need SAS installed to really make things happen. SAS gets called by read.ssd and I feel that it would be better if was called by write.foreign also rather than a SAS program generated for execution later on. Even so, it is good to see some custom functionality being provided that makes life easier. There’s also the hmisc package but my experiences while working with that on S-Plus have been such that it compares less favourably with foreign on the reliability front. Saying that, things may have changed since I last tried it.

3rd June 2008 Using hash objects in SAS data step code offers some great advantages from the speed point of view; having a set of data in memory rather than on disk makes things much faster. However, that means that you need to keep more of an eye on the amount of memory that’s being used. The first thing is to work out how much memory is available and it’s not necessarily the total amount installed on the system or, for that matter, the amount of memory per processor on a multi-processor system. What you really need is the number, in bytes, that is stored in the XMRLMEM system option and here’s a piece of code that’ll do just that:

data _null_;

mem=getoption('xmrlmem');

put mem;

run;

The XMRLMEM is itself an option that you can only declare in the system call that starts SAS up in the first place and there are advantages to keeping it under control, particularly on large multi-user servers. However, if your hash objects start to exceed what is available, here’s the sort of thing that you can expect to see:

ERROR: Hash object added 49136 items when memory failure occurred.

FATAL: Insufficient memory to execute data step program. Aborted during the EXECUTION phase.

NOTE: The SAS System stopped processing this step because of insufficient memory.

NOTE: SAS set option OBS=0 and will continue to check statements. This may cause NOTE: No observations in data set.

Those messages are a cue for you to learn to keep those hash objects and to only ever make them as large as your memory settings will allow. Another thing to note is that hash objects are best retained for rather fixed data volumes instead of ones that could outgrow their limits. There’s a certain amount of common sense in operation here but it may be that promoters of hash objects don’t mention their limitations as much as they should. If you want to find out more, SAS have a useful paper on their website and the their Knowledge Base has more on the error messages that you can get.

30th November 2007 I was setting up a key mapping in SAS 8.1 such that the log and output windows are cleared and a SAS program run in the most recently used program editor window. The idea was that debugging would be easier and command was what you see below:

log; clear; output; clear; wpgm; submit

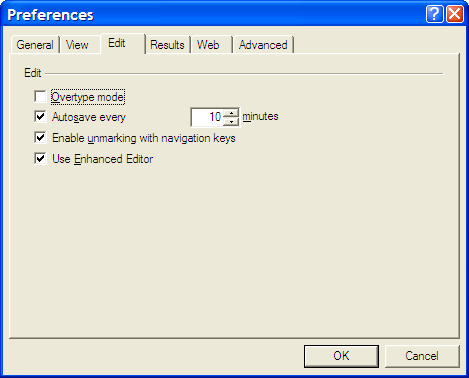

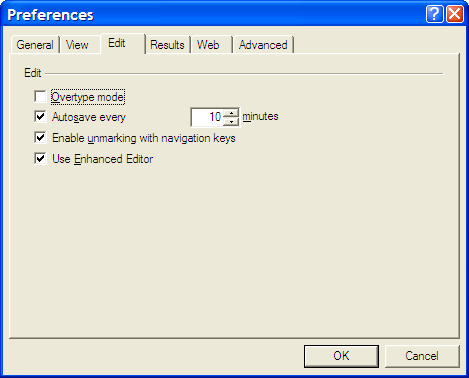

I was having trouble getting SAS to pick up the most recently used Enhanced Editor window and it was opening up an old style Program Editor window in its place. If I had wanted to use that, I would have used pgm and not wpgm. What was conspiring against me was a pesky system option. Pottering over to Tools > Options > Preferences and navigating to the Edit tab brought me to the cause of the problem: the Use Enhanced Editor check box was in the clear and fixing that set me on my way. SAS 9 could also be afflicted by the same irritation and that is where i got the screenshot that you see below where everything is hunky dory.

25th November 2007 SAS can generate many types of output: plain text, XML, PDF, RTF, Excel, etc. With all of these and the SAS procedures like PROC REPORT, PROC TABULATE and so on, it might seem surprising for me to say that I have been generating output with data step PUT and FILE statements. There was, of course, a reason for this: creating text files for loading into a new database-driven software application. At one stage, I also did some data interleaving at the output stage and that’s when I discovered that the default behaviour for SAS FILE statements is to completely overwrite a file unless the MOD option was specified. Adding that switches on APPEND behaviour. The code below adds a header in one step while adding data below it in another. I know that there are slicker ways to achieve this like setting up your data as you want it or using _N_ to ensure that something only appears once but here’s another way. As per the Perl, there’s often more than one way to do something with SAS.

data _null_;

file ds_data;

put "fieldtype;datasetname;datasetlabel;datasetlayout;datasetclass;datasetstandardversion";

run;

data _null_;

set ds_ispec;

file ds_data mod;

line="datasetstandard;"||trim(memname)||";"||trim(memlabel)||";;;"||trim(memver);

put line;

run;

4th October 2007 Recently, I was reviewing a log of a program being run by SAS 9.1.3 on a Solaris system and spotted lines like the following:

MACROGEN(MACRO1): OPTIONS NOMPRINT NOMPRINTNEST

NOTE: PROCEDURE DISPLAY used (Total process time):

real time 0.73 seconds

cpu time 0.50 seconds

MPRINT(MACRO1): SOURCE SOURCE2 NOTES;

The appearance of the word MACROGEN made me wonder if there was another system option that I had missed. A quick search of the SAS website threw up a support note that shed some light on the situation. Apparently, MACROGEN is the SAS v5 forbear of today’s MPRINT, MLOGIC, and SYMBOLGEN options and would seem to be obsolete in these days. Having started programming SAS in the days of version 6, I had missed out on MACROGEN and so use its replacements instead, hence my never coming across the option. Quite what it’s doing showing up in a SAS 9 log is another story: and there I was thinking that SAS 9 was the result of a full rewrite… Now, I am not so sure but at least I know what MACROGEN is if someone ever takes the time to ask me.

23rd July 2007 With SAS 9, SAS Institute have introduced the sort sort of integrity constraints that have been bread and butter for relational database SQL programs but some SAS programmers may find them more restrictive than they might like. The main one that comes to my mind is the following:

proc sql noprint;

create table a as select a.*,b.var from a left join b on a.index=b.index;

quit;

Before SAS 9, that worked merrily with nary a comment but you now will see a warning like this:

WARNING: This CREATE TABLE statement recursively references the target table. A consequence of this is a possible data integrity problem.

In data step, the following still runs without a complaint:

data a;

merge a b(keep=index var);

by index;

run;

On the surface of it this does look inconsistent. From a database programmer’s point of view having to use different source and target datasets is no hardship but seems a little surplus to requirements for a SAS programmer trained to keep down the number of temporary datasets in an effort to reduce I/O and keep things tidy, an academic concept perhaps in these days of high processing power and large disks. Adding UNDO_POLICY=NONE to the PROC SQL line does make everything consistent again but I see this as being anathema to a database programming type. I do admit to indulging in the override for personal quick and dirty purposes but abiding by the constraint is how I do things for formal purposes like inclusion in an application.

20th June 2007 This is a SAS error that can be seen from time to time:

WARNING: The quoted string currently being processed has become more than 262 characters long. You may have unbalanced quotation marks.

In the days prior to SAS version 8, this was something that needed to be immediately corrected. In these days of SAS character variables extending beyond 200 characters in length, it becomes a potential millstone around a SAS programmer’s neck. If you run a piece of code like this:

data _null_;

x="[string with more than 262 characters (putting in an actual string wrecks the appearance of the website)]";

run;

What you get back is the warning message at the heart of the matter. The code is legitimate and works fine but the spurious error is returned because SAS hasn’t found a closing quote by the required position and the 262 character limit is a hard constraint that cannot be extended. There is another way though: the new QUOTELENMAX option in SAS9. Setting it as follows removes the messages in most situations (yes, I did find one where it didn’t play ball):

options noquotelenmax;

This does however beg the question as to how you check for unbalanced quotes in SAS logs these days; clearly, looking for a closing quote is an outmoded approach. Thanks to code highlighting, it is far easier to pick them out before the code gets submitted. The other question that arises is why you would cause this to happen anyway but there are occasions where you assign the value of a macro variable to a data set one and the string is longer than the limit set by SAS. Here’s some example code:

data _null_;

length y $400;

y=repeat("f",400);

call symput("y",y)

run;

data _null_;

x="&y";

run;

My own weakness is where I use PROC SQL to combine strings into a macro variable, a lazy man’s method of combining all distinct values for a variable into a delimited list like this:

proc sql noprint;

select distinct compress(string_var) into :vals separated by " " from dataset;

quit;

Of course, creating a long delimited string using the CATX (new to SAS9) function avoids the whole situation and there are other means but there may be occasions, like the use of system macro variables, where it is unavoidable and NOQUOTELENMAX makes a much better impression when these arise.

8th June 2007 When you open up a SAS macro catalog so that its entries for use by other programs, it has a major impact on the ability to change the library reference used to access the catalog after it has apparently been unlocked.

options mstored sasmstore=bld_v001;

Using the line above will open the catalog for reading but there is no way to close it in order to change the library reference or deassign it until the SAS session is shut down. Even this line will not do the trick:

options nomstored sasmstore='';

What it means in practice is that if you have a standard macro setting up access to a number of standard macro libraries, then that setup macro needs to check for any library references used and not try to reassign them, causing errors in the process.

30th May 2007 The filename statement’s pipe option allows you to direct the output of operating system commands into SAS for further processing. Usefully, the Windows dir command (with its /s switch) and the UNIX and Linux equivalent ls allow you get a file listing into SAS. For example, here’s how you extract the list of files in your UNIX or Linux home directory into SAS:

filename DIRLIST pipe 'ls ~';

data dirlist;

length filename $200;

infile dirlist length=reclen;

input buffer $varying200. reclen;

run;

Using the ftp option on the filename statement allows you to get a list of the files in a directory on a remote server, even one with a different operating system to that used on the client (PC or server), very useful for cases where cross-platform systems are involved. Here’s some example code:

filename dirlist ftp ' ' ls user='user' host='host' prompt;

data _null_;

length filename $200;

infile dirlist length=reclen;

input buffer $varying200. reclen;

run;

The PROMPT option will cause SAS to ask you for a password and the null string is where you would otherwise specify the name of a file.

12th February 2007 Over the weekend, I have been updating the theme on my other blog, HennessyBlog. It has been a task that projected me onto a learning curve with the WordPress 2.1 codebase. I have collected what I encountered so I know that it’s out there on the web for you (and I) to use and peruse. It took some digging to get to know some of what you find below. Any function used to power WordPress takes some finding so I need to find one place on the web where the code for WordPress is fully documented. The sites presenting tutorials on how to use WordPress are more often than not geared towards the non-techie rather than code cutters like myself. Then again, they might be waiting for someone to do it for them…

The changes made are as follows:

Tweaks to the interface

These are subtle with the addition of navigation controls to the sidebar and the change in location of the post metadata being the most obvious enhancements. “Decoration” with solid and dashed lines (using CSS border attributes rather than the deprecated hr tagset) and standards compliance links.

Standards compliance

Adding standards compliance links does mean that you’d better check that all is in order; it was then that I discovered that there was work to be done. There is an issue with the WordPress wpautop function (it lives in the formatting.php file) in that it sometimes doesn’t add closing tags. Finding out that it was this function that is implicated took a trip to the WordPress.org website; a good rummage in the wp-includes folder does a lot but it can’t achieve everything.

Like a lot of things in the WordPress code, the wpautop function isn’t half buried. The the_content function (see template-functions-post.php) used to output blog entries calls get_content function (also in template-functions-post.php) to extract the data from mySQL. The add_filter function (in plugin.php) associates the wpautop function and others with get_the_content function and the p tags get added to the output.

To return to the non-ideal behaviour that caused me to start out on the above quest, an example is where you have an img tag enclosed by div tags. The required substitution involves the use of regular expressions that work most of the time but get confused here. So adding a hack to the wpautop function was needed to change the code so that the p end tag got inserted. I’ll be keeping an eye out for any more scenarios like this that slip through the net and for any side effects. Otherwise, compliance is just making sure that all those img tags have their alt attributes completed.

Tweaks to navigation code

Most of my time has been spent on tweaking of the PHP code supporting the navigation. Different functions were being called in different places and I wanted to harmonise things. To do this, I created new functions in the functions.php for my theme and needed to resolve a number of issues along the way. Not least among these were regular expressions used for subsetting with the preg_match match that weren’t to my eyes Perl-compliant, as would be implied by the choice of function. I have since found that PCRE’s in PHP use a more pragmatic syntax but there remained issues with the expressions that were being used. They seemed to behave OK in their native environment but fell out of favour within the environs of my theme. Being acquainted with Perl, I went for a more familiar expression style and the issue has been resolved.

Along the way, I broke the RSS feed. This was on my off-line test blog so no one, apart from myself, that is, would have noticed. After a bit of searching, I realised that some stray white-space from the end of a PHP file (wp-config.php being a favourite culprit), after the PHP end tag in the script file as it happens, was finding its way into the feed and causing things to fall over. Feed readers don’t take too kindly to the idea of the XML declaration not making an appearance on the first line of the file. The refusal of Firefox to refresh things as it should caused some confusion until I realised that a forced refresh of the feed display was needed -- sometimes, it takes a while for an addled brain to think of these kinds of things.