Upgrading from OpenMediaVault 6.x to OpenMediaVault 7.x

8th March 2024

Having an older PC to upgrade, I decided to install OpenMediaVault on it a few years ago after adding 6 TB and 4 TB hard drives for storage, a Gigabit network card to speed up backups and a new BeQuiet! power supply to make it quieter. It has been working smoothly since then, and the release of OpenMediaVault 7.x had me wondering how to move to it.

Usefully, I enabled an SSH service for remote logins and set up an account for anything that I needed to do. This includes upgrades, taking backups of what is on my NAS drives, and even shutting down the machine when I am done with what I need to do with it.

Using an SSH session, the first step was to switch to the administrator account and issue the following command to ensure that my OpenMediaVault 6.x installation was as up-to-date as it could be:

omv-update

Once that had completed what it needed to do, the next step was to do the upgrade itself with the following command:

omv-release-upgrade

With that complete, I rebooted the system, fired up the web administration interface and applied a kernel update that I spotted there. After another restart, further updates appeared and these were applied too, again through the web interface. The whole thing is now based on Debian 12.x, and I am not complaining since it quietly does exactly what I need of it. There was one slight glitch when doing an update after the changeover, and that was quickly sorted.

Another way to supply the terminal output of one BASH command to another

26th April 2023

My usual way of sending the output of one command to another is to place one command after another, separated by the pipe (|) operator, and to adjust the second command as needed. However, I recently found that this approach does not work well for docker pull commands, and I then uncovered another option.

That is to enclose the input command in $( ) within the output command. Any kind of command can go within the parentheses, including one that uses piping. As long as text is being printed to the terminal, it can be fed to the second command and used as required. Thus, you can have something like the following:

docker pull $([command outputting name of image to download])

This approach has helped with other kinds of automation of docker image and container use and deployment because it is so general. There may be other uses found for the approach yet.
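The reason that piping falls short here is that a pipe feeds text to the standard input of the second command, whereas docker pull expects the image name as a command line argument. What follows is a minimal sketch of the substitution idea, with echo standing in for docker so that it can be tried anywhere. The latest_image function and the image names in it are made up for illustration.

```shell
# Hypothetical helper: prints the name of the image to download.
# The pipeline inside it shows that piping works within $( ) too.
latest_image() {
  printf '%s\n' "nginx:1.24" "nginx:1.25" | sort -r | head -n 1
}

# $( ) runs the helper and places its output on the command line.
pull_cmd="docker pull $(latest_image)"

# echo stands in for actually running docker here.
echo "$pull_cmd"
```

Replacing the echo line with the command itself would be the next step once the printed text looks right.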

Getting Adobe Lightroom Classic to remember the search filters that you have set

23rd April 2023

With Windows 10 support to end in October 2025 and VirtualBox now offering full support for Windows 11, I have moved onto Windows 11 for personal use while retaining Windows 10 for professional work, at least for now. Of course, a lot could happen before 2025: there are rumours of a new Windows version, and the moniker Windows 12 has been mooted, but all that is speculation for now.

As part of the changeover, I moved the Adobe apps that I have in an ongoing subscription (Lightroom Classic and Photoshop are the main ones for me) to the new virtual machine. That meant that some settings from the previous one were lost and needed reinstating.

One of those was the persistence of Library Filters, so I had to find out how to get that sorted. If my memory is not fooling me, this seemed to be a default action in the past and that meant that I was surprised by the change in behaviour.

Nevertheless, I had to go to the File menu, select Library Filters (it is near the bottom of the menu in the current version at the time of writing) and switch on Lock Filters by clicking on it to get a tick mark preceding the text. There is another setting called Remember Each Source’s Filters Separately in the same place that can be set in the same manner if so desired, and I am experimenting with that at the moment, even though I have not bothered with this in the past.

Getting a Windows 11 Guest to run smoothly on VirtualBox

23rd November 2022

In recent days, I have been trying to get Windows 11 to run smoothly within a VirtualBox virtual machine, and there has been a lot of experimentation along the way. This was to eradicate intermittent freezes that escalated CPU usage and necessitated hard restarts. If I were to use Windows 11 as a long-term replacement for Windows 10, these needed to go.

An internet search showed that others faced the same predicament but a range of proposed solutions did nothing for me. The suggestion of enabling 3D graphics capability did nothing but produce a black screen at startup time so that was not a runner. It might have been the combination of underlying graphics hardware and the drivers on my Linux Mint machine that hindered me when it helped others.

In the end, a look at the bug tracker for Windows guest operating systems running on VirtualBox sent me in another direction. The Paravirtualisation Interface setting may also have caused issues with Windows 10 virtual machines in the past, since these were all set to KVM. Doing the same for Windows 11 seems to have stopped the freezing behaviour so far. It meant going to the virtual machine settings, navigating to System > Acceleration and changing the dropdown menu value from Default to KVM before clicking on the OK button.

Before that, I had been blaming the newness of VirtualBox 7 (it is best not to expect too much of a fresh release bringing such major changes) and even the way that I installed Windows 11 using the streamlined installation or licensing issues. Now that things are going better, it may have been a lesson from Windows 10 that I had forgotten. The EFI, Secure Boot and TPM 2.0 requirements of Windows 11 also blindsided me, especially given the long wait for VirtualBox to add such compatibility, but that is behind me at this stage.

Windows 11 is not perfect, but Start11 makes it usable and the October 2025 expiry for Windows 10 also focuses my mind. It is time to move over for the sake of future-proofing if nothing else. In time, we may get a better operating system as Windows 11 matures, and some minds surely are thinking of a “Windows 12”. However things go, it may be that we get to a point where something vintage in the nature of Windows XP, Windows 7 or Windows 10 appears. Those older versions of Windows became like old gold during their lives.

Resolving a clash between Homebrew and Python

22nd November 2022

For reasons that I cannot recall now, I installed the Hugo static website generator on my Linux system and web servers using Homebrew. The only reason that I can suggest is that it might have been a way to get the latest version at the time, since Linux Mint only does major changes every two years, keeping it in line with long-term support editions of Ubuntu.

When Homebrew was installed, it changed the lookup path for command line executables by adding the following line to my .bashrc file:

eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

This executed the following lines:

export HOMEBREW_PREFIX="/home/linuxbrew/.linuxbrew";

export HOMEBREW_CELLAR="/home/linuxbrew/.linuxbrew/Cellar";

export HOMEBREW_REPOSITORY="/home/linuxbrew/.linuxbrew/Homebrew";

export PATH="/home/linuxbrew/.linuxbrew/bin:/home/linuxbrew/.linuxbrew/sbin${PATH+:$PATH}";

export MANPATH="/home/linuxbrew/.linuxbrew/share/man${MANPATH+:$MANPATH}:";

export INFOPATH="/home/linuxbrew/.linuxbrew/share/info:${INFOPATH:-}";

While the result suits Homebrew, it changed the setup of Python and its packages on my system. Eventually, this had undesirable consequences like messing up how Spyder started so I wanted to change this. There are other things that I have automated using Python and these were not working either.

One way that I have seen suggested is to execute the following command but I cannot vouch for this:

brew unlink python

What I did was to comment out the offending line in .bashrc and replace it with the following:

export PATH="$PATH:/home/linuxbrew/.linuxbrew/bin:/home/linuxbrew/.linuxbrew/sbin"

export HOMEBREW_PREFIX="/home/linuxbrew/.linuxbrew";

export HOMEBREW_CELLAR="/home/linuxbrew/.linuxbrew/Cellar";

export HOMEBREW_REPOSITORY="/home/linuxbrew/.linuxbrew/Homebrew";

export MANPATH="/home/linuxbrew/.linuxbrew/share/man${MANPATH+:$MANPATH}:";

export INFOPATH="${INFOPATH:+$INFOPATH:}/home/linuxbrew/.linuxbrew/share/info";

The first of these adds the Homebrew paths to the end of the PATH variable instead of the start of the same as was the case before. This means that system folders get searched for executable files before the Homebrew ones. It also means that Python packages are loaded from my user area and not the Homebrew one as was the case under its own terms. There are other things to remember with Python packages such as not having a version installed at the system level and another at the user one since these will conflict with one another.
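The effect of the search order can be seen with a small experiment that does not touch any real installation. This is only a sketch: the two throwaway directories stand in for a system folder and the Homebrew one, and mytool is a made-up name for an executable that exists in both.

```shell
# Build two throwaway directories, each holding a tool with the same name.
demo=$(mktemp -d)
mkdir -p "$demo/system" "$demo/brew"
printf '#!/bin/sh\necho system\n' > "$demo/system/mytool"
printf '#!/bin/sh\necho brew\n' > "$demo/brew/mytool"
chmod +x "$demo/system/mytool" "$demo/brew/mytool"

# Prepending, as brew shellenv does: the Homebrew copy is found first.
prepended=$(PATH="$demo/brew:$demo/system:$PATH"; mytool)

# Appending, as in the replacement lines: the system copy is found first.
appended=$(PATH="$demo/system:$PATH:$demo/brew"; mytool)

echo "prepended: $prepended, appended: $appended"

# Tidy up the throwaway directories.
rm -rf "$demo"
```

Each PATH change happens inside $( ), so it only lasts for that one lookup and the session itself is left alone.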

So far, the result of the Homebrew changes is not unsatisfactory and I will watch for any rough edges that need addressing. If something comes up, then I will set things up in another way.

Removing redundant kernels from Ubuntu

29th October 2022

Recently, a message appeared on some web servers that I have that exhorted me to upgrade to Ubuntu 22.04.1 using the do-release-upgrade command. In the interests of remaining current, I did just that to get another message, one like the following:

The upgrade needs a total of [amount of space with units] free space on disk `/boot`.

Please free at least an additional [amount of space with units] of disk space on `/boot`.

Empty your trash and remove temporary packages of former installations

using `sudo apt-get clean`.

Using sudo apt-get clean did not resolve the problem so the advice given was of no use. The actual problem was that there were too many old kernels cluttering up /boot and searching around the web provided that wisdom. What also came up was a single command for fixing the problem. However, removing the wrong kernel can trash a system so I took a more cautious approach. First, I listed the kernels to be removed and checked that they did not include the currently running one. This was done with the following command (broken up over several lines for clarity using the backslash character to denote continuation) and running uname -r found the details of the running kernel:

dpkg -l linux-{image,headers}-"[0-9]*" \

| awk '/ii/{print $2}' \

| grep -ve "$(uname -r \

| sed -r 's/-[a-z]+//')"

The dpkg command listed the installed kernels, with awk, grep and sed filtering out unwanted sections of the text. The awk command takes the tabular output from dpkg and turns it into a list of package names. The -v switch on the grep command gets the lines that do not match the search expression created by the sed command, while the -e switch marks what follows as the pattern to look for. The sed command removes the trailing text suffix (such as -generic) from the output of the uname command, where the -r switch produces the kernel release details, to leave only the release number of the current kernel. On being satisfied that nothing untoward would happen, the full command below (also broken up over several lines for clarity using the backslash character to denote continuation) could be executed.

sudo apt purge $(dpkg -l linux-{image,headers}-"[0-9]*" \

| awk '/ii/{print $2}' \

| grep -ve "$(uname -r \

| sed -r 's/-[a-z]+//')")

This used apt to purge the unwanted kernels, thus freeing up enough space for the upgrade to continue. That happened without significant incident, though there were some remediations needed on the PHP side to get the website working smoothly again.
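For anyone wanting to see what each stage of the filtering does before trusting it on a real server, the same awk, grep and sed steps can be run against canned text. The following is a sketch only: the kernel versions and the sample listing are invented, and the running variable stands in for the output of uname -r.

```shell
# Stand-in for $(uname -r); the version numbers here are invented.
running="5.15.0-52-generic"

# Canned text shaped like dpkg -l output (also invented).
sample='ii  linux-image-5.15.0-50-generic  5.15.0-50.56  amd64  Signed kernel image
ii  linux-image-5.15.0-52-generic  5.15.0-52.58  amd64  Signed kernel image
ii  linux-headers-5.15.0-50  5.15.0-50.56  all  Header files
rc  linux-image-5.4.0-100-generic  5.4.0-100.113  amd64  Signed kernel image'

# sed drops the first hyphen-plus-letters group, such as -generic.
current=$(printf '%s\n' "$running" | sed -r 's/-[a-z]+//')

# awk keeps the names of installed (ii) packages; grep -v drops
# anything that matches the running kernel release.
candidates=$(printf '%s\n' "$sample" | awk '/ii/{print $2}' | grep -ve "$current")

echo "Running release: $current"
echo "Would be purged:"
echo "$candidates"
```

Here, the running kernel is absent from the list while the older image and headers remain, which is the check that needs making before anything is purged.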

One way to fix slow CyberGhost VPN connections on Windows 10

31st January 2020

Due to a need to access websites with country blocking, I have decided to give CyberGhost a go, and it also will come in handy when connecting devices to other Wi-Fi connections. What I have got is the three-year subscription package and all went well on the first day of use. However, things became unusable on the second and a reboot did not sort it.

The problem seemed to affect a phone running Android too, and I even got to suspecting my router and broadband provider. Even terminating the subscription came to mind, but it did not come to that. Instead, I did a bit more research and tried changing the maximum transmission unit (MTU) for the connection to 1300 as suggested in a CyberGhost help article. Using the Control Panel meant that it was resetting to 1500 on my Windows 10 machine, so I then turned to a command line based solution.

To do that, I started PowerShell in administrator mode from the context menu produced by right clicking on the Start Menu icon on the taskbar. Then, I entered the following command to see what connections I had and what the MTU settings were:

netsh interface ipv4 show subinterfaces

From looking through the Settings and Control Panel applications, I already had worked out what network interface belonged to the CyberGhost connection. Seeing that the MTU setting was 1500, I then issued a command like the following to change that to 1300.

netsh interface ipv4 set subinterface "<name of ethernet interface>" mtu=1300 store=persistent

Here, <name of ethernet interface> gets replaced by the name of your connection and the string is quoted to avoid spaces in the name causing problems with executing the command. Once that second command had been run, the first one was issued again and the output checked to ensure that the MTU setting was as expected.

This was done when the VPN connection was inactive but it may work also with an active connection. After making the change, I again reconnected to the VPN and all has been as expected since then and I found a better connection for my Android phone too.

Lessons learned on managing Windows Taskbar and Start Menu colouring in VirtualBox virtual machines

9th December 2019

In the last few weeks, I have had a few occasions when the colouration of the Windows 10 taskbar and its Start Menu has departed from my expectations. At times, this happened in VirtualBox virtual machine installations and both the legacy 5.2.x versions and the current 6.x ones have thrown up issues.

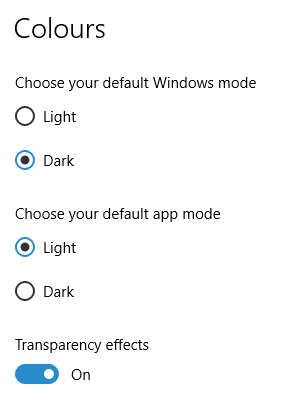

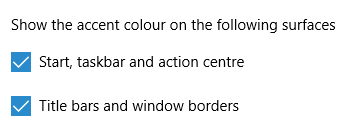

The first one actually happened with a Windows 10 installation in VirtualBox 5.2.x when the taskbar changed colour to light grey and there was no way to get it to pick up the colour of the desktop image to become blue instead. The solution was to change the Windows mode from Light to Dark in order for the desired colouration to be applied, and the settings above are taken from the screen that appears on going to Settings > Personalisation > Colours.

The second issue appeared in a Windows 10 Professional installation in VirtualBox 6.0.x when the taskbar and Start Menu turned transparent after an update. This virtual machine is used to see what is coming in the slow ring of Windows Insider, so some rough edges could be expected. The solution here was to turn off 3D acceleration in the Display pane of the VM settings after shutting it down. Starting it again showed that all was back as expected.

Both resolutions took a share of time to find and there was a deal of experimentation needed too. Once identified, they addressed the issues as desired, so I am recording them here for use by others as much as future reference for myself.

Performing parallel processing in Perl scripting with the Parallel::ForkManager module

30th September 2019

In a previous post, I described how to add Perl modules in Linux Mint while mentioning that I hoped to add another that discusses the use of the Parallel::ForkManager module. This is that second post and I am going to keep things as simple and generic as they can be. There are other articles like one on the Perl Maven website that go into more detail.

The first thing to do is ensure that the Parallel::ForkManager module is called by your script and having the following line near the top will do just that. Without this step, the script will not be able to find the required module by itself and errors will be generated.

use Parallel::ForkManager;

Then, the maximum number of threads needs to be specified (strictly speaking, these are child processes, since the module forks them). While that can be achieved using a simple variable declaration, the following line reads this from the command used to invoke the script. It even tells a forgetful user what they need to do in its own terse manner. Here $0 is the name of the script and N is the number of threads. Not all these threads will get used and processing capacity will limit how many actually are in use, so there is less chance of overwhelming a CPU.

my $forks = shift or die "Usage: $0 N\n";

Once the maximum number of available threads is known, the next step is to instantiate the Parallel::ForkManager object as follows to use these child processes:

my $pm = Parallel::ForkManager->new($forks);

With the Parallel::ForkManager object available, it is now possible to use it as part of a loop. A foreach loop works well though only a single array can be used with hashes being needed when other collections need interrogation. Two extra statements are needed with one to start a child process and another to end it.

foreach my $t (@array) {

my $pid = $pm->start and next;

# Other code to be processed goes here

$pm->finish;

}

Since there often is other processing performed by a script and it is possible to have multiple threaded loops in one, there needs to be a way of getting the parent process to wait until all the child processes have completed before moving from one step to another in the main script, and that is what the following statement does. In short, it adds more control.

$pm->wait_all_children;

To close, there needs to be a comment on the advantages of parallel processing. Modern multi-core processors often get used in single threaded operations and that leaves most of the capacity unused. Utilising this extra power then shortens processing times markedly. To give you an idea of what can be achieved, I had a single script taking around 2.5 minutes to complete in single threaded mode while setting the maximum number of threads to 24 reduced this to just over half a minute while taking up 80% of the processing capacity. This was with an AMD Ryzen 7 2700X CPU with eight cores and a maximum of 16 processor threads. Surprisingly, using 16 as the maximum thread number only used half the processor capacity so it seems to be a matter of performing one’s own measurements when making these decisions.
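The same pattern of capping the number of simultaneous workers and then waiting for all of them can be tried from a shell too. The sketch below is not Parallel::ForkManager but a rough analogue using the -P option of xargs, which the GNU and BSD versions provide. The squaring task and the variable names are placeholders for real work.

```shell
# Maximum number of simultaneous worker processes (the N of the Perl script).
forks=4

# xargs starts up to $forks copies of the worker at once, one input each,
# and only returns when every worker has finished.
results=$(printf '%s\n' 1 2 3 4 5 6 |
  xargs -P "$forks" -n 1 sh -c 'echo $(( $1 * $1 ))' _ |
  sort -n)

# Workers finish in no fixed order, hence the sort before printing.
echo "$results"
```

Varying the value of forks while timing a heavier task is one way of performing the kind of measurement mentioned above.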

Lightening of desktop background images on Linux Mint Debian Edition running in VirtualBox

22nd October 2018

After a recent upgrade to Linux Mint Debian Edition 3 in a VirtualBox virtual machine that I had running its predecessor, I began to notice that background images were being loaded with more washed out or faded colours. This happened at startup, so selecting another background image worked as intended until the same thing happened to that after a system restart.

This problem is not new and has affected the Cinnamon desktop in the main Linux Mint variant (the one that is based on Ubuntu) and issuing the following command in a terminal session is a suggested solution:

gsettings set org.cinnamon.muffin background-transition none

In my case, that solved the problem and desktop background image display is as it should be since I executed the above. All it took was a change to a system setting.