Given that my web-building journey has taken me from Geocities to a website on my own domain hosted by Fasthosts, it should come as no surprise that I have encountered a number of tools and technologies along the way and that my choices and knowledge have evolved too. I’ll muse over the technologies first before moving on to the tools that I use.

Technologies

XHTML

When I started building websites, HTML 4 had not long been in existence, and I devoured most if not all of Elizabeth Castro’s Peachpit Visual QuickStart Guide to the language within a weekend. Having previously used fairly primitive WYSIWYG tools like Netscape Composer and Claris Home Page, I found it an empowering experience, and the first edition (it is now on its third) of Jennifer Niederst Robbins’ Web Design in a Nutshell took things much further, becoming something of a bible for a number of years.

When it first appeared, XHTML 1.0 wasn’t a major change from HTML 4, but its stricter, more XML-compliant syntax was meant to point the way to the future, and semantic markup was at its heart at least as much as it was for HTML 4. XHTML 2.0 is on the horizon and, after the modular approach of XHTML 1.1 (which I have never used), it will be interesting to see how it develops. Nevertheless, there has been a surprising development: some people are musing over the idea of an HTML 5. Let’s hope that the (X)HTML apple cart doesn’t get completely overturned after some years of relative stability. I still bear scars from the browser wars that raged in the 1990s and don’t want to see standards wars supplanting the relative peace that we have now. That said, I don’t mind peaceful progression.

CSS

CSS only seems to have come into its own in the last few years and is truly an amazing technology in spite of the hobbles that MSIE places on our ambitions. CSS Zen Garden has been a major source of ideas; I wouldn’t have been able to customise this blog as much as I have without them. I was an early adopter of the technology and got burnt by inconsistent browser support; Netscape 4 was the proverbial bête noire back then, fulfilling the role that MSIE plays today. In those days, it was the idea of controlling text display and element backgrounds from a single place that appealed. Since then, I have progressed to using CSS to replace table-based layouts and to control element positioning. It can do more…

JavaScript

Having had a JavaScript-powered photo gallery before my current Perl-driven one, I can say that I have definitely sampled this ever-pervasive scripting language. Being client-side rather than server-side, it places you rather at the mercy of the browser makers, and it never ceases to amaze me that there is such a buzz around AJAX given that dependency. In fact, the abundance of cross-browser AJAX function libraries is testimony to the need for browser-specific code. Despite my preference for server-side scripting, I still find a use for JavaScript: these days, I mainly use it to manipulate CSS properties dynamically, for instance to set the height of a page element or to control whether it is shown at all. Apparently, CSS may gain some dynamic capabilities in the future, which would reduce my dependence on JavaScript. In the meantime, Jeremy Keith’s DOM Scripting (friends of ED) will continue to prove as much of an asset as it has been so far.

XML

These days, a lot of the raw data underlying my personal website is stored in XML. When I first scaled its dizzy heights, I did try to transform the XML into something meaningful in the browser using CSS and XSLT, but I soon resorted to other techniques, mainly because of browser support and the complexity of what I required. The new strategy involves two different approaches. The first is to generate PHP/XHTML pages from the source XML offline; this is how I produce the website’s directory pages. The second is to process the XML as text so as to supply an XHTML page dynamically whenever a user visits it; this is how the photo gallery works.
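To make the second approach a little more concrete, here is a minimal sketch of turning gallery XML into an XHTML fragment at request time. It is written in Python rather than the Perl that actually drives the gallery, and it parses the XML with the standard library’s `xml.etree.ElementTree` instead of treating it as plain text. The `<gallery>`/`<photo>` element names, the `file`/`caption` attributes and the `render_gallery` function are all hypothetical, and how the result reaches the browser (CGI or otherwise) is left out.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical gallery data: the post does not give the real element names.
GALLERY_XML = """<gallery title="Hillwalks">
  <photo file="ridge.jpg" caption="Ridge &amp; summit cairn"/>
  <photo file="loch.jpg" caption="Loch at dusk"/>
</gallery>"""


def render_gallery(xml_text: str) -> str:
    """Turn gallery XML into an XHTML fragment when a page is requested."""
    root = ET.fromstring(xml_text)
    title = escape(root.get("title", "Gallery"))
    items = []
    for photo in root.iter("photo"):
        # The parser unescapes &amp; to &, so re-escape attribute values
        # to keep the output valid XHTML.
        src = escape(photo.get("file", ""))
        alt = escape(photo.get("caption", ""))
        items.append(f'    <li><img src="{src}" alt="{alt}" /></li>')
    # Wrap everything in one <div> so the fragment has a single root element.
    return (
        f'<div class="gallery">\n  <h1>{title}</h1>\n  <ul>\n'
        + "\n".join(items)
        + "\n  </ul>\n</div>"
    )


if __name__ == "__main__":
    print(render_gallery(GALLERY_XML))
```

Because the data stays in XML files, adding a photo means editing the XML rather than touching any page markup.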

Perl

Perl still powers the whole of my photo gallery. While thoughts of converting it all to PHP linger, there is a certain something about the language that keeps it there. I suppose it is that PHP code is entangled in the HTML, whereas a Perl script encases the whole business. I am also reasonably familiar with its syntax these days, which is why it still does a lot of the data processing grunt work that I need.

PHP

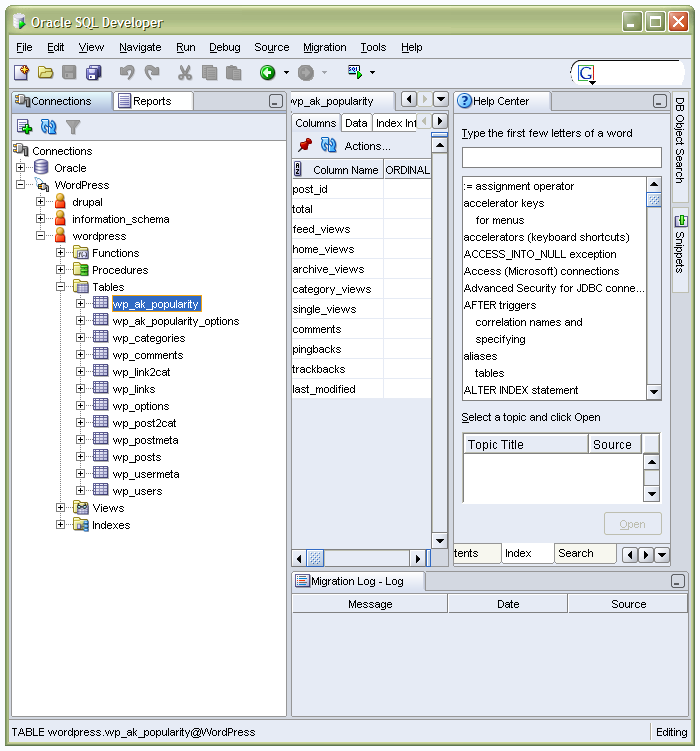

PHP is everywhere these days, though it doesn’t attract quite the level of hype that it once did. It still appears with its sidekick MySQL in many website applications: blogging software such as WordPress and content management systems like Drupal, Mambo and Joomla! wouldn’t exist without the pair. It appears on my website as the glue that holds my visitor directories together and is the processing engine of my WordPress blog. And if I ever add a Drupal element to the site (by no means a foregone conclusion, though I am spending a lot of time with it at the moment), PHP will continue its presence in my website scripting, since it powers that too.

Applications

Macromedia HomeSite

I have a liking for hand coding, so this does most of what I need. When Macromedia (itself since taken over by Adobe, of course) acquired Allaire, HomeSite sadly lost its WYSIWYG capability, but the application still soldiers on even though Dreamweaver offers a lot to code cutters these days. HomeSite does have certain advantages over Dreamweaver, though: it is a fleeter beast to start up, and it colour-codes Perl syntax.

Macromedia Dreamweaver

There was a time when Dreamweaver was solely a tool for visual web page development, but the advent of Dreamweaver UltraDev added server-side development capabilities to the Dreamweaver family. These days, there is only one edition of Dreamweaver, but UltraDev’s capabilities live on in the latest version, and I would not be surprised if they were taken further in these database-driven times.

Nowadays, Dreamweaver isn’t an application in which I spend a great deal of time. In former times, when my site was made up of static HTML pages, I used Dreamweaver a lot, even if its rendering capabilities were a step behind the then-current browser versions. I suppose that its templates didn’t fit the way in which I worked, though its template-driven workflow would have been a boon back then.

My move from a static site to a dynamic one, starting with my photo gallery, has meant that I haven’t used it as much since then. However, now that PHP/MySQL components are appearing on my site, its server-side abilities may well deserve a more thorough investigation.

Altova XMLSpy Professional

Adding MySQL databases to my web hosting costs money: not a lot, but it could be spent on other (more important?) things. Hence, I use XML as the data store for my photo gallery, and for my visitor directories, XML files are pre-processed into XHTML/PHP pages before being uploaded onto the server.

I use XMLSpy to edit and manage my XML files. Its ability to view XML in grid format is a killer feature as far as I am concerned, and XML validation also proves very useful, particularly for ensuring that DTDs and XML files are in step and for checking that XSLT files are coded correctly. There are other features that I have yet to explore, and doing so would take my knowledge of XML further to boot: not at all a bad thing.

Saxon

For processing XML into another file format such as XHTML, you need an XSLT processor, and I use the free version of Saxon to do the needful (Saxonica also offers commercial versions). There is, I believe, an XSLT engine in XMLSpy, but I don’t use it because Saxon’s command-line interface fits better into my workflow. The whole thing is a Perl-driven process: the XML files are read, an XSLT file is built for each one, and each pair is then fed to Saxon to be transformed into an XHTML/PHP file. It all works smoothly, and updating the XML inputs is all that is required.
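The pipeline just described might look something like the following. This is a hedged sketch in Python rather than the Perl the pipeline actually uses; the stylesheet template, the `<entry name="…">` source structure, the `.php` output naming and the `lib/saxon9he.jar` path are all hypothetical. The command line uses the `-s:`, `-xsl:` and `-o:` options of Saxon 9 and later; older releases used a different, positional syntax, so the exact form depends on the version installed.

```python
import subprocess
from pathlib import Path
from typing import List
from xml.sax.saxutils import escape

# Hypothetical path to the Saxon jar; adjust to match the local installation.
SAXON_JAR = Path("lib/saxon9he.jar")


def build_stylesheet(page_title: str) -> str:
    """Return a per-file XSLT 1.0 stylesheet (a hypothetical template).

    It lists the name attribute of every <entry> element in the source XML.
    """
    return f"""<?xml version="1.0"?>
<xsl:stylesheet version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:output method="xml" omit-xml-declaration="yes" indent="yes"/>
  <xsl:template match="/">
    <div class="directory">
      <h1>{escape(page_title)}</h1>
      <ul>
        <xsl:for-each select="//entry">
          <li><xsl:value-of select="@name"/></li>
        </xsl:for-each>
      </ul>
    </div>
  </xsl:template>
</xsl:stylesheet>
"""


def saxon_command(source: Path, stylesheet: Path, output: Path) -> List[str]:
    """Assemble a Saxon 9+ command line for a single transformation."""
    return [
        "java", "-jar", str(SAXON_JAR),
        f"-s:{source}", f"-xsl:{stylesheet}", f"-o:{output}",
    ]


def transform_all(xml_dir: Path, out_dir: Path) -> None:
    """Write one stylesheet per XML file, then hand each pair to Saxon."""
    out_dir.mkdir(parents=True, exist_ok=True)
    for source in sorted(xml_dir.glob("*.xml")):
        stylesheet = source.with_suffix(".xsl")
        title = source.stem.replace("-", " ").title()
        stylesheet.write_text(build_stylesheet(title), encoding="utf-8")
        output = out_dir / f"{source.stem}.php"
        # check=True raises CalledProcessError if Saxon exits with an error.
        subprocess.run(saxon_command(source, stylesheet, output), check=True)
```

Calling `transform_all(Path("directories"), Path("site"))` would then regenerate every directory page from whatever XML files sit in the (hypothetical) `directories` folder, which keeps the "just update the XML" workflow intact.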

AceFTP

If I were choosing an FTP client now, it would be FileZilla, but AceFTP has served me well over the last few years and it looks as if it will continue to do so. It does have some extra features that FileZilla lacks, such as transfers between remote sites and scheduling. I have yet to use either, but they look valuable.

Hutmil

In bygone days, when I had loads of static HTML files, making a change that affected every single file was a bit of a chore; changing the year in the copyright notice in the page footers is one example. Hutmil, which I found on a magazine cover-mounted disc, was a great time saver in those days. Today, I achieve the same thing by putting such information into a single file and getting Perl or PHP to import it when building each page. The same “define once, use anywhere” approach underlies CSS, and scripting very usefully extends it to the XHTML itself.
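As a rough illustration of the “define once, use anywhere” idea, the sketch below keeps the footer in a single definition and fills in the current year whenever a page is built. It is Python rather than the Perl or PHP mentioned above, and the footer wording, the `$year`/`$owner` placeholders and the `DEFAULT_FOOTER`, `load_footer` and `build_page` names are hypothetical.

```python
from datetime import date
from html import escape
from pathlib import Path
from string import Template
from typing import Optional

# Hypothetical shared footer. In practice it would sit in its own file and be
# read with load_footer(), so changing the wording means a single edit.
DEFAULT_FOOTER = Template('<p class="footer">Copyright &#169; $year $owner</p>')


def load_footer(path: Path) -> Template:
    """Read the single footer definition from disk."""
    return Template(path.read_text(encoding="utf-8"))


def build_page(title: str, body: str, owner: str,
               footer: Template = DEFAULT_FOOTER,
               year: Optional[int] = None) -> str:
    """Assemble a page, filling in the footer from the one shared definition."""
    # Default to the current year so the copyright notice never goes stale.
    year = date.today().year if year is None else year
    footer_html = footer.substitute(year=year, owner=escape(owner))
    return (
        f"<html><head><title>{escape(title)}</title></head>"
        f"<body>{body}{footer_html}</body></html>"
    )
```

For example, `build_page("Home", "<p>Hi</p>", "Example Owner", year=2007)` ends with `<p class="footer">Copyright &#169; 2007 Example Owner</p></body></html>`; leaving out `year` picks up the current year automatically, which is exactly the chore Hutmil used to handle.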

Apache

Apache is ubiquitous these days, and both the online and offline versions of my site are powered by it. It does require some configuration, but it is a very powerful piece of kit. The introduction of 2.2.x brought a big change in the way that the configuration is modularised: while most settings were contained in a single httpd.conf file for 2.0.x, in 2.2.x they are broken up into different files, and it can take a while to find things again. Without it on my home PC, I would not be able to develop with Perl, PHP or MySQL offline. Beyond that, I especially like its virtual host capability, which is very useful for offline development.

WordPress

My hosting supplier offers blogs on Blogware, but that didn’t offer the level of configuration that I would have liked; to be fair, the same is probably true of any blog hosting service. I can’t speak for Blogger, but WordPress.com does have its restrictions too. To make my hillwalking blog fit in with the appearance of my photo gallery, I popped over to WordPress.org to download WordPress so that I could host a blog myself and have maximum control over its appearance. WordPress supports themes, so I created my own and got my blog pages looking as if they were part of my website rather than something that had been bolted on. Now that I think of it, what about WordPress.com supporting user-created themes? I suppose that there is the worry of insecure PHP code, but what about it?

MySQL

I am in two minds about whether this is a technology or a tool. SQL is certainly a technology standard, but I am not so clear about what MySQL is. In any case, I have classed it as a tool, and a very useful one at that. It is the linchpin of my WordPress blogs and, if I go for a content management system like Drupal, its role will surely grow. While I do have a lot of experience with SAS SQL, and this helps me to deal with other varieties, there is still a learning curve with MySQL that sends me heading for a good book; Kofler’s The Definitive Guide to MySQL 5 (Apress) seems to perform more than adequately in this endeavour.

Paint Shop Pro

Since I host an online photo gallery, it won’t come as a surprise that I have had exposure to image editors. Despite various other flirtations, Paint Shop Pro has been my tool of choice over the years, but it is now set to be usurped by a member of Adobe’s Photoshop family. Paint Shop Pro does have books devoted to it, but Photoshop seems to get better coverage, and I feel that my image processing needs to be taken up a gear; hence the potential move to Photoshop.