Solving an upgrade hitch en route to Ubuntu 10.04

4th May 2010

After waiting until after a weekend in the Isle of Man, I got to upgrading my main home PC to Ubuntu 10.04. Before the weekend away, I had been updating a 10.04 installation on an old spare PC and that worked fine so the prospects were good for a similar changeover on the main box. That may have been so but breaking a computer hardly is the perfect complement to a getaway.

So as to keep the level of disruption to a minimum, I opted for an in-situ upgrade. The download was left to complete in its own good time and I returned to attend to installation messages asking me if I wished to retain old log files for the likes of Apache. When the system asked for a reboot at the end of the sequence of package downloading, installation and removal, I was ready to let it do the needful.

However, I met with a hitch when the machine restarted: it couldn’t find the root drive. Live CDs were pressed into service to shed light on what had happened. First up was an old disc for 9.10 before one for 10.04 Beta 1 was used. That identified a difference between the two that was to prove to be the cause of what I was seeing. 10.04 uses /dev/hd*# (/dev/hda1 is an example) nomenclature for everything including software RAID arrays (“fakeraid”). 9.10 used the /dev/mapper/sil_**************# convention for two of my drives and I get the impression that the names differ according to the chipset that is used.

During the upgrade process, the one thing that was missed was the changeover from /dev/mapper/sil_**************# to /dev/hd*# in the appropriate places in /boot/grub/menu.lst; look for the lines starting with the word kernel. When I did what the operating system forgot, I was greeted by a screen telling of the progress of checks on one of the system’s disks. That process took a while but a login screen followed and I had my desktop much as before. The only other thing that I had to do was run gconf-editor from the terminal to send the title bar buttons to the right where I am accustomed to having them. Since then, I have been working away as before.
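The manual edit described above can be sketched in shell. This is only a minimal illustration and not what the upgrade tooling itself does: both function names are made up for the example, and the old and new device names passed in are placeholders for whatever your own system reports. The second function prints the edited text rather than changing the file, so the result can be checked first. It also assumes that the device names contain no characters that are special to sed.

```shell
#!/bin/sh
# Sketch: inspect and rewrite the kernel lines of a GRUB legacy menu.lst.
# show_kernel_lines and swap_root_device are hypothetical helper names.

show_kernel_lines() {
    # $1 = path to menu.lst
    # Print the kernel lines with their line numbers for inspection.
    grep -n '^[[:space:]]*kernel' "$1"
}

swap_root_device() {
    # $1 = path to menu.lst, $2 = old device name, $3 = new device name
    # Only kernel lines are edited; output goes to standard output and
    # the file itself is left untouched.
    sed "/^[[:space:]]*kernel/ s|$2|$3|g" "$1"
}
```

Redirecting the output of swap_root_device to a temporary file leaves a candidate menu.lst to review before copying it into place as root.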

Some may decry the lack of change (ImageMagick and UFRaw could do with working together much faster, though) but I’m not complaining; the rough of 9.10 drilled that into me. Nevertheless, I am left wondering how many are getting tripped up by what I encountered, even if it means that Palimpsest (what Ubuntu calls Disk Utility) looks much tidier than it did. Could the same thing be affecting /etc/fstab too? The reason that I don’t know the answer to that question is that I changed all hard disk drive references to UUID a while ago but it’s another place to look if the GRUB change isn’t fixing things for you. If my memory isn’t failing me, I seem to remember seeing /dev/mapper/sil_**************# drive names in there too.
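Checking /etc/fstab for leftover names of the old kind can be sketched as follows. The helper name is an invention for this example, and the pattern only assumes that the old names begin with /dev/mapper/ as described above.

```shell
#!/bin/sh
# Sketch: list uncommented lines in a file that still mention /dev/mapper/ names.
# find_mapper_refs is a hypothetical helper name used only for this illustration.

find_mapper_refs() {
    # $1 = file to inspect, e.g. /etc/fstab
    # Prints matching lines with their line numbers; prints nothing and
    # returns a non-zero status if there are none.
    grep -n '^[^#]*/dev/mapper/' "$1"
}
```

If anything turns up, the blkid command lists the UUID of each partition, which is what would be needed for switching those entries over to UUID references.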

A waiting game

20th August 2011

Having been away every weekend in July, I was looking forward to a quiet one at home to start August. However, there was a problem with one of my websites hosted by Fasthosts that was set to occupy me for the weekend and a few weekday evenings afterwards.

The issue appeared to be slow site response so I followed advice given to me by second line support when this website displayed the same type of behaviour: upgrade from Apache 1.3 to 2.2 using the control panel. Unfortunately for me, that didn’t work smoothly at all and there seemed to be serious file loss as a result. Raising a ticket with the support desk only got me the answer that I had to wait for completion and I now have come to the conclusion that the migration process may have got stuck somewhere along the way. Maybe another ticket is in order.

There were a number of causes of the waiting that gave rise to the title of this post. Firstly, support for low cost hosting isn’t exactly timely and I do wonder if it’s any better for more prominent websites. Restoration of websites by FTP is another activity that takes up plenty of time, as does rebuilding databases and populating them with data. Lastly, there’s changing the DNS details for a website. In hindsight, there may be ways of reducing the time demands of these. For instance, contacting a support team by telephone may be quicker unless there is a massive queue awaiting attention, and there was a wait of several hours one night when a security changeover affected a multitude of Fasthosts users. Of course, it is not a panacea at the best of times, as we have known since all those stories began to do the rounds in the middle of the 1990s. Doing regular backups would help the second, though the ones that I was using for the restoration weren’t too bad at all. Nevertheless, they weren’t complete so there was unfinished business that required resolution later. The last of these is helped along by more regular PC restarts, so that unexpected discovery will remain a lesson for the future though I don’t plan on moving websites around for a while. After all, getting DNS details propagated more quickly really is a big help.

While awaiting a response from Fasthosts, I began to ponder the idea of using an alternative provider. Perusal of the latest digital edition of .Net (I now subscribe to the non-paper edition so as to cut down on the clutter caused by having paper copies about the place) ensued before I decided to investigate the option of using Webfusion. Having decided to stick with shared hosting, I gave their Unlimited Linux option a go. For someone accustomed to monthly billing, it was unusual to see annual, biennial and triennial payment schemes too. The first of these appears to be the default option so a little care and attention is needed if you want something else. In order to encourage you to stay with Webfusion longer, the per month cost is on a sliding scale: the longer the period you buy, the lower the cost of a month’s hosting.

Once the account was set up, I added a database and set to the long process of uploading files from my local development site using FileZilla. Having got a MySQL backup from the Fasthosts site, I used the provided phpMyAdmin interface to upload the data in pieces not exceeding the 8 MB file size limitation. It isn’t possible to connect remotely to the MySQL server using the likes of MySQL Administrator so I bore with this not so smooth process. SSH is another connection option that isn’t available but I never use it much on Fasthosts sites anyway. There were some questions to the support people along the way and the first of these got a timely answer, though later ones took longer. Still, getting advice on the address of the test website was a big help while I was sorting out the DNS changeover.

Speaking of the latter, it took a little doing and not a little poking around Webfusion’s FAQs before I made it happen. First, I tried using name servers that I found listed in one of the articles but this didn’t seem to achieve the end that I needed. Mind you, I would have seen the effects of this change a little earlier if I had rebooted my PC earlier than I did but it didn’t occur to me at the time. In the end, I switched to using my domain provider’s name servers and added the required information to them to get things going. It was then that my website was back online in some fashion so I could tie up any outstanding loose ends.

With the site essentially operating again, it was time to iron out the rough edges. The biggest of these was that mod_rewrite doesn’t seem to work the same on the Webfusion server as it does on the Fasthosts ones. This meant that I needed to use the SCRIPT_URI CGI variable instead of PATH_INFO in order to keep using clean URLs for a PHP-powered photo gallery that I have. It took me a while to figure that out and I felt much better when I managed to get the results that I needed. However, I also took the chance to tidy up site addresses with redirections in my .htaccess file in an attempt to ensure that I lost no regular readers, something that I seem to have achieved with some success because one such visitor later commented on a new entry in the outdoors blog.

Once any remaining missing images were instated or references to them removed, it was then time to do a full backup for the sake of safety. The first of these activities was yet another time consumer while the second didn’t take so long and I need to do this more often in case anything happens. Hopefully though, the relocated site’s performance continues to be as solid as it is now.

The question as to what to do with the Fasthosts webspace remains outstanding. Currently, they are offering free upgrades to existing hosting packages so long as you commit for a year. After my recent experience, I cannot say that I’m so sure about doing that kind of thing. In fact, the observation leaves me wondering if instating that very extension was the cause of breaking my site. Either way, it appears that the migration from Apache 1.3 to 2.2 got stuck for whatever reason. Maybe another ticket should be raised but I am not decided on that yet. All in all, what happened to that Fasthosts website wasn’t the greatest of experiences but the service offered by Webfusion is rock solid thus far. While wondering if the service from Fasthosts isn’t as good as it once was, I’ll keep an open mind and wait to see if my impressions change over time.

Ubuntu upgrades: do a clean installation or use Update Manager?

9th April 2009

Part of some recent “fooling” brought on by the investigation of what turned out to be a duff DVD writer was a fresh installation of Ubuntu 8.10 on my main home PC. It might have brought on a certain amount of upheaval but it was nowhere near as severe as that following the same sort of thing with a Windows system. A few hours was all that was needed but the question remains as to whether it is better to do an upgrade every time a new Ubuntu release is unleashed on the world or to go for a complete virgin installation instead. With Ubuntu 9.04 in the offing, that question takes on a more immediate significance than it otherwise might do.

Various tricks make the whole reinstallation idea more palatable. For instance, many years of Windows usage have taught me the benefits of separating system and user files. The result is that my home directory lives on a different disk to my operating system files. Add to that the experience of being able to reuse that home drive across different Linux distros and even swapping from one distro to another becomes feasible. From various changes to my secondary machine, I can vouch that this works for Ubuntu, Fedora and Debian; the latter is what currently powers the said PC. You might have to use superuser powers to attend to ownership and access issues but the portability is certainly there and it applies to anything kept on other disks too.

Naturally, there’s always the possibility of losing programs that you have had installed but losing the clutter can be liberating too. However, assembling a script made up of one or more apt-get install commands can allow you to get many things back at a stroke. For example, I have a test web server (Apache/MySQL/PHP/Perl) set up so this would be how I’d get everything back in place before beginning further configuration. It might be no bad idea to back up your collection of software sources either; I have yet to add all of the ones that I have been using back into Synaptic. Then there are closed source packages such as VirtualBox (yes, I know that there is an open source edition) and Adobe Reader. After reinstating the former, all my virtual machines were available for me to use again without further ado. Restoring the latter allowed me to grab version 9.1 (probably more secure anyway) and it inveigles itself into Firefox now too so the number of times that I need to go through the download shuffle before seeing the contents of a PDF is much reduced, though not completely eliminated, by the Windows-like ability to see a PDF loaded in a browser tab. Moving from software to hardware for a moment, it looks like any bespoke actions such as my activating an Epson Perfection 4490 Photo scanner need to be repeated but that was all that I needed to do. Getting things back into order is not so bad but you need to allow a modicum of time for this.
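A script of the kind mentioned above might look something like the following sketch. The package names are illustrative guesses for a web server set-up of that sort rather than the actual list used, and the helper name is invented for the example. It prints the command instead of running it so that the list can be checked first.

```shell
#!/bin/sh
# Sketch: assemble one apt-get command from a list of package names.
# PACKAGES is an illustrative list only; adjust it to what you had installed.
PACKAGES="apache2 mysql-server php5 perl"

build_install_command() {
    # Print, rather than run, the command for the package names given as arguments.
    echo "apt-get install $*"
}

# Show the command for review; it would need root privileges to run for real.
build_install_command $PACKAGES
```

Keeping such a script alongside a copy of /etc/apt/sources.list on a separate disk means that both survive a reinstallation.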

What I have discussed so far are what might be categorised as the common or garden aspects of a clean installation but I have seen some behaviours that make me wonder if the usual Ubuntu upgrade path is sufficiently complete in its refresh of your system. The counterpoint to all of this is that I may not have been looking for some of these things before now. That may apply to my noticing that DSLR support seems to be better with my Canon and Pentax cameras both being picked up and mounted for me as soon as they are connected to a PC, the caveat being that they are themselves powered on for this to happen. Another surprise that may be new is that the BBC iPlayer’s Listen Again works without further work from the user, a very useful development. It very clearly wasn’t that way before I carried out the invasive means. My previous tweaking might have prevented the in situ upgrade from doing its thing but I do see the point of not upsetting people’s systems with an overly aggressive update process, even if it means that some advances do not make themselves known.

So what’s my answer regarding which way to go once Ubuntu Jaunty Jackalope appears? For the sake of avoiding initial disruption, I’d be inclined to go down the Update Manager route first while reserving the right to do a fresh installation later on. All in all, I am left with the gut feeling that the jury is still out on this one.

Useful Python packages for working with data

14th October 2021

My response to changes in the technology stack used in clinical research is to develop some familiarity with programming and scripting platforms that complement and compete with SAS, a system with which I have been programming since 2000. One of these has been R but Python is another that has taken up my attention and I now have Julia in my sights as well. There may be others to assess in the fullness of time.

While I first started to explore the Data Science world in the autumn of 2017, it was in the autumn of 2019 that I began to complete LinkedIn training courses on the subject. Good though they were, I find that I need to actually use a tool in order to better understand it. At that time, I did get to hear about Python packages like Pandas, NumPy, SciPy, Scikit-learn, Matplotlib, Seaborn and Beautiful Soup though it took until the spring of this year for me to start gaining some hands-on experience with using any of these.

During the summer of 2020, I attended a BCS webinar on the CodeGrades initiative, a programming mentoring scheme inspired by the way classical musicianship is assessed. In fact, one of the main progenitors is a trained classical musician and teacher of classical music who turned to Python programming when starting a family so as to have a more stable income. The approach is that a student selects a project and works their way through it with mentoring and periodic assessments carried out in a gentle and discursive manner. Of course, the project has to be engaging for the learning experience to stay the course and that point came through in the webinar.

That is one lesson that resonates with me, with subjects as diverse as web server performance and the ongoing pandemic supplying data, and there are other sources of public data to examine as well before looking through my own personal archive gathered over the decades. Some subjects are uplifting while others are more foreboding but the key thing is that they sustain interest and offer opportunities for new learning. Without being able to dream up new things to try, my knowledge of R and Python would not be as extensive as it is and I hope that it will help with learning Julia too.

In the main, my own learning has been a solo effort with consultation of documentation along with web searches that have brought me to the likes of Real Python, Stack Abuse, Data Viz with Python and R and others for longer tutorials as well as threads on Stack Overflow. Usually, the web searching begins when I need a steer on something particular or a way to resolve a particular error or warning message but books always are worth reading even if that is the slower route. Those from the Dummies series or from O’Reilly have proved most useful so far but I do need to read them more completely than I already have; it is all too tempting to go with the “programming and search for solutions as you go” approach instead.

To get going, many choose the Anaconda distribution to get Jupyter notebook functionality but I prefer a more traditional editor so Spyder has been my tool of choice for Python programming and there are others like PyCharm as well. Spyder itself is written in Python so it can be installed using pip from PyPI like other Python packages. It has other dependencies like Pylint for code management activities but these get installed behind the scenes.
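As a minimal sketch of the pip route, the function below creates a virtual environment and installs Spyder into it. The use of a virtual environment is an assumption made for tidiness and not something required by Spyder, and the function name and the directory passed to it are hypothetical.

```shell
#!/bin/sh
# Sketch: install Spyder from PyPI into its own virtual environment.
# install_spyder is a hypothetical helper name; nothing runs until it is called.

install_spyder() {
    # $1 = directory in which to create the virtual environment
    python3 -m venv "$1" &&
    "$1/bin/python" -m pip install spyder
}
```

Calling it with a directory of your choosing should pull in Spyder along with its dependencies.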

The packages that I first met in 2019 may be the mainstays for doing data science but I have discovered others since then. It also seems that there is porosity between the worlds of R and Python so you get some Python packages aping R packages and R has the Reticulate package for executing Python code. There are Python counterparts to such Tidyverse staples as dplyr and ggplot2 in the form of Siuba and Plotnine, respectively. The syntax of these packages is not a direct copy of what is executed in R but it is close enough for there to be enough familiarity for added user friendliness compared to Pandas or Matplotlib. The interoperability does not stop there for there is SQLAlchemy for connecting to MySQL and other databases (PyMySQL is needed as well) and there also is SASPy for interacting with SAS Viya.

Python may not have the speed of Julia but there are plenty of packages for working with larger workloads. Of these, Dask, Modin and RAPIDS all have their uses for dealing with data volumes that make Pandas code crawl. As if to prove that there are plenty of libraries for various forms of data analytics, data science, artificial intelligence and machine learning, there also are the likes of Keras, TensorFlow and NetworkX. These are just a selection of what is available and there is no need not to check out more. It may be tempting to stick with the most popular packages all the time, especially when they do so much, but it never hurts to keep an open mind either.

Thoughts on eBooks

20th August 2016

In recent months, I have been doing a clear out of paper books in case the recent European Union referendum result in the U.K. affects my ability to stay there since I am an Irish citizen. In my two decades here, I have not felt as much uncertainty and lack of belonging as I do now. It is as if life wants to become difficult for a while.

What made the clearance easier was that there was a way of making sure that the books were re-used and eBooks replaced anything that I would have wanted to keep. However, what I had not realised is that demand for eBooks has flatlined, something that only became apparent in a recent PC Pro article penned by Stuart Turton. He had all sorts of suggestions about how to liven up the medium but I have some of my own.

Niall Benvie also broached the subject from the point of view of photographic display in an article for Outdoor Photography because most are looking at photos on their smartphones and that often reduces the quality of what they see. Having a partiality to photo books, it remains the one class of books that I am more likely to have in paper form, even though I have an Apple iPad Pro (the original 12.9 inch version) and am using it to write these very words. There also is the six year old 24 inch Iiyama screen that I use with my home PC.

The two apps with which I have had experience are Google Play Books and Amazon Kindle, both of which I have used on both iOS and Android while I use the Windows app for the latter too. Both apps are simple and work effectively until you end up with something of a collection. Then, shortcomings become apparent.

Search functionality is something that can be hidden away on menus and that is why I missed it for so long. For example, Amazon’s Kindle app puts the search box in a prominent place on iOS but hides the same function in menus on its Android or Windows incarnations. Google Play Books consistently does the latter from what I have seen and it would do no harm to have a search box on the library screen since menus and touchscreen devices do not mix so well. The ability to search within a book is similarly afflicted so this also needs moving to a more prominent place and is really handy for guidebooks or other more technical textbooks.

The ability to organise a collection appears to be another missed opportunity. The closest that I have seen so far are the Cloud and Device screens on Amazon’s Kindle app but even this is not ideal. Having the ability to select some books as favourites would help, as would hiding others from the library screen. Having the ability to re-sell unwanted eBooks would be another worthwhile addition because you can do just that with paper books.

When I started on this piece, I reached the conclusion that eBooks too closely mimicked libraries of paper books. Now, I am not so sure. It appears to me that the format is failing to take full advantage of its digital form and that might have been what Turton was trying to evoke but the examples that he used did not appeal to me. Also, we could do with more organisation functionality in apps and the ability to resell could be another opportunity. Instead, we appear to be getting digital libraries and there are times when a personal collection is best.

All the while, paper books are being packaged in ever more attractive ways and there always will be some that look better in paper form than in digital formats and that still applies to those with glossy appealing photos. Paper books almost feel like gift items these days and you cannot fault the ability to browse them by flicking through the pages with your hands.

More About WordPress

6th December 2010

Having been a WordPress user for over four years, I have collected a number of places on the web that are useful to those who are using this pervasive publishing platform and those who develop for and on the thing. Of course, there are more out there than those that I have collected here, but a list has to start somewhere and I will continue to add to it as I find more.

Documentation and Tutorials

The first stop to check out for answers to any WordPress questions. Please bear in mind that it is a wiki and that makes it a work in progress. While that reality may mean that information could be incomplete (you could always offer to help…), it still remains very useful and it’s my first port of call when I need to know what a specific WordPress function does.

The contributions that you find here may be occasional yet you could find them useful.

An aggregation of news and usage information created by the people behind WordPress.

This topical website covers a whole load of news topics pertaining to WordPress and there have been a good few lessons that I have learned here.

It seems that lawmakers are getting their oar in when it comes to website operation, so this operation does no harm in a world where privacy policies and cookie disclosure appears to be the start of some kind of regulation. Certainly, a bit of website auditing done before Christmas 2014 left me with that impression.

As the name suggests, this is a beginner’s guide to WordPress. We all have to start somewhere and it looks as if this could grow with you along the way too.

A belated goodbye to PC Plus magazine

13th October 2012

Last year, Future Publishing made a loss so something had to be done to address that. Computer magazines such as Linux Format no longer could enclose their cover-mounted discs in elaborate cardboard wallets and moved to simpler sleeves instead. Another casualty has been one of their longest standing titles: PC Plus.

It has been around since 1986 and possibly was one of the publisher’s first titles. It was the late nineties when I first encountered it and, for quite a few years afterwards, it was my primary computer magazine of choice every month. The mix of feature articles, reviews and tutorials covering a variety of aspects of personal computing was enough for me. After a while though, it became a bit stale and I stopped buying it regularly. Then, the collection that I had built up was dispatched to the recycling bin and I turned to other magazines.

In the late nineties, Future had a good number of computing titles on magazine shelves in newsagents and there did seem to be some overlap in content. For instance, we had PC Answers and PC Format alongside PC Plus at one point. Now, only PC Format is staying with us and its market seems to be high-end home computer users such as those interested in PC gaming. .Net, initially a web usage title and now one focussing on website design and development, started from the same era and Linux Format dates from around the turn of the century. Looking back, it looks as if there was a lot of duplication going on in a heady time of expanding computer usage.

That expansion may have killed off PC Plus in the end. For me, it certainly meant that it no longer was a one stop shop like Dennis’s PC Pro. For instance, the programming and web design content that used to come in PC Plus found itself appearing in .Net and in Linux Format. The appearance of the latter certainly meant that there was somewhere else for Linux content; for the record, my first dalliance with SuSE Linux was from a PC Plus cover-mounted disk. The specialisation and division certainly made PC Plus a less essential read than I once thought it.

Of course, we now have an economic downturn and major changes in the world of publishing alongside it. Digital publishing certainly is growing and this isn’t just about websites anymore. That probably explains in part Future’s recent financial performance. When a title like PC Plus is seen as less important, it can cease to exist but I reckon that it’s the earlier expansion that really did for it. If Future had one computing title that contained extensive reviews and plenty of computing tutorials with sections on programming and open source software, who knows what may have happened. Maybe consolidating the other magazines into that single title would have been an alternative but my thinking is that it wouldn’t have been commercially realistic. Either way, the present might have been very different and PC Plus would be a magazine that I’d be reading every month. That isn’t the case of course and it’s sad to see it go from newsstands even if the reality was that it left us quite a while ago.

Getting Adobe Lightroom Classic to remember the search filters that you have set

23rd April 2023

With Windows 10 support to end in October 2025 and VirtualBox now offering full support for Windows 11, I have moved onto Windows 11 for personal use while retaining Windows 10 for professional work, at least for now. Of course, a lot could happen before 2025 with rumours of a new Windows version (the moniker Windows 12 has been mooted) but all that is speculation for now.

As part of the changeover, I moved the Adobe apps that I have in an ongoing subscription (Lightroom Classic and Photoshop are the main ones for me) to the new virtual machine. That meant that some settings from the previous one were lost and needed reinstating.

One of those was the persistence of Library Filters, so I had to find out how to get that sorted. If my memory is not fooling me, this seemed to be a default action in the past and that meant that I was surprised by the change in behaviour.

Nevertheless, I had to go to the File menu, select Library Filters (it is near the bottom of the menu in the current version at the time of writing) and switch on Lock Filters by clicking on it to get a tick mark preceding the text. There is another setting called Remember Each Source’s Filters Separately in the same place that can be set in the same manner if so desired, and I am experimenting with that at the moment, even though I have not bothered with this in the past.

GNOME 3 in Fedora 15: A Case of Acclimatisation and Configuration

29th May 2011

When I gave the beta version of the now finally released Fedora 15 a try, GNOME 3 left me thinking that it was even more dramatic and less desirable a change than Ubuntu’s Unity desktop interface. In fact, I was left with serious questions about its actual usability, even for someone like me. It all felt as if everything was one click further away from me and thoughts of what this could mean for anyone seriously afflicted by RSI started to surface in my mind, especially with big screens like my 24″ Iiyama being commonplace these days. Another missing item was somewhere on the desktop interface for shutting down or restarting a PC; it seemed to be a case of first logging off and then shutting down from the login screen. This was yet another case of adding to the number of steps for doing something between GNOME 2 and GNOME 3 with its GNOME Shell.

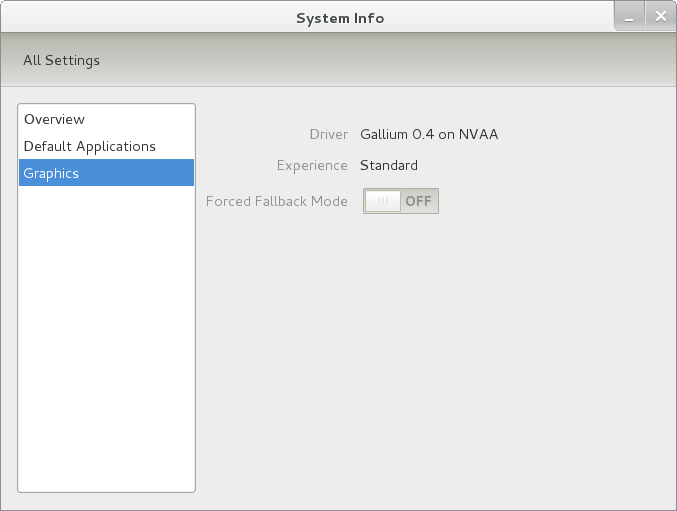

After that less than positive experience with a Live CD, you’d be forgiven for thinking that I’d be giving the GNOME edition of Fedora 15 a wide berth with the LXDE one being chosen in its place. Another alternative approach would have been to turn off GNOME Shell altogether by forcing the fallback mode to run all the time. The way to do this is start up the System Settings application and click on the System Info icon. Once in there, click on Graphics and turn on the Forced Fallback Mode option. With that done, closing down the application, logging off and then back on again will gain you an environment not dissimilar to the GNOME 2 of Fedora 14 and its forbears.

Even after considering the above easy way to get away from and maybe even avoid the world of GNOME Shell, I still decided to give it another go to see if I could make it work in a way that was less alien to me. After looking at the handy Quickstart guide, I ventured into the world of GNOME Shell extensions and very useful these have come to be too. The first of these that I added was the Alternate Status Menu and I ran the following command to do so:

yum install gnome-shell-extensions-alternative-status-menu

The result was that the “me” menu gained the ever useful “Power Off…” entry that I was seeking once I refreshed the desktop by running the command r in the command entry box produced by the ALT + F2 keyboard combination. Next up was the Place Menu and the command used to add that is:

yum install gnome-shell-extensions-place-menu

Again, refreshing the desktop as described for the Alternate Status Menu added the new menu to the (top) panel. Not having an application dock on screen all the time was the next irritation that was obliterated and it helps to get around the lack of a workspace switcher for now too. The GNOME Shell approach to virtual desktops is to have a dynamic number of workspaces with there always being one more than what you are using. It’s an interesting way of working that doesn’t perturb more pragmatic users like me, but those accustomed to tying applications to particular workspaces aren’t so impressed by the change. The other change to workspace handling is that keyboard shortcuts have changed to CTRL-ALT-[Up Arrow] and CTRL-ALT-[Down Arrow] from CTRL-ALT-[Left Arrow] and CTRL-ALT-[Right Arrow].

To add that application dock, I issued the command below and refreshed the desktop to get it showing. Though it stops application windows becoming fully maximised on the screen, that’s not a problem with my widescreen monitor. In fact, it even helps to switch between workspaces using the keyboard because that doesn’t seem to work when you have fully maximised windows.

yum install gnome-shell-extensions-dock

After adding the application dock, I stopped adding extensions though there are more available, such as Alternate Tab Behaviour (restores the ALT-TAB behaviour of GNOME 2), Auto-Move Windows, Drive Menu, Native Window Placement, Theme Selector, User Theme and Window Navigator. Here are the YUM commands for each of these in turn:

yum install gnome-shell-extensions-alternate-tab

yum install gnome-shell-extensions-auto-move-windows

yum install gnome-shell-extensions-drive-menu

yum install gnome-shell-extensions-native-window-placement

yum install gnome-shell-extensions-theme-selector

yum install gnome-shell-extensions-user-theme

yum install gnome-shell-extensions-windowsNavigator
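
Since all of these packages share the same prefix, the separate commands above could be rolled into one. What follows is only a sketch: the build_install_command function is a hypothetical helper of my own naming, and it prints the resulting YUM command rather than running it so that the package list can be checked first.

```shell
#!/bin/bash
# Short names taken from the individual yum commands listed above.
extensions=(
    alternate-tab
    auto-move-windows
    drive-menu
    native-window-placement
    theme-selector
    user-theme
    windowsNavigator
)

# Hypothetical helper: add the common package prefix to each short name
# and print a single yum command without executing it.
build_install_command() {
    local prefix="gnome-shell-extensions-"
    local packages=()
    local name
    for name in "$@"; do
        packages+=("${prefix}${name}")
    done
    echo "yum install ${packages[*]}"
}

# Show the combined command for review.
build_install_command "${extensions[@]}"
```

Once the printed line looks right, it can be pasted into a root shell in the same way as the individual commands.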

One hope that I will retain is that more of these extensions will appear over time, but Ranjith Siji seems to have a good roundup of what is available. Other than these, I also have added the DCONF Editor and GNOME Tweak Tool with the latter restoring buttons for minimising and maximising windows to their title bars for me. As ever, YUM was called to add them using the following commands:

yum install dconf-editor

yum install gnome-tweak-tool

There are other things that can be done with these but I haven’t explored them yet. All YUM commands were run as root and the ones that I used certainly have helped me to make myself at home in what once was a very unfamiliar desktop environment for me. In fact, I am beginning to like what has been done with GNOME 3 though I have doubts as to how attractive it would be to a user coming to Linux from the world of Windows. While everything is solidly crafted, the fact that I needed to make some customisations of my own raises questions about how suitable the default GNOME set-up in Fedora is for a new user though Fedora probably isn’t intended for that user group anyway. Things get more interesting when you consider distros favouring new and less technical users, both of whom need to be served anyway.

Ubuntu has gone its own way with Unity and, having spent time with GNOME 3, I can see why they might have done that. Unity does put a lot more near at hand on the desktop than is the case with GNOME 3 where you find yourself going to the Activities window a lot, either by using your mouse or by keystrokes like the “super” (or Windows) key or ALT-F1. Even so, there are common touches like searching for an application like you would search for a web page in Firefox. In retrospect, it is a pity to see the divergence when something from both camps might have made for a better user experience. Nevertheless, I am reaching the conclusion that the Unity approach feels like a compromise and that GNOME feels that little bit more polished. Saying that, an extra extension or two to put more items nearer to hand in GNOME Shell would be desirable. If I hadn’t found a haven like Linux Mint where big interface changes are avoided, maybe going with the new GNOME desktop mightn’t have been a bad thing to do after all.

Rough?

11th November 2009

Was it because Canonical and friends kept Ubuntu in such a decent state from 8.04 through to 9.04 that things went a little quiet in the blogosphere on the subject of the well-known Linux distribution? If so, 9.10 might be proving more of a talking point and you have to wonder if this is such a good thing with the appearance of Windows 7 on the scene. Looking on the bright side, 10.04 will be an LTS release so there is some chance that any rough edges that are on display now could be resolved by next April. Even so, it might have been better not to see anything so obvious at all.

In truth, Ubuntu always has had its gaps and I have seen a few of their ilk over the last two years. Of these, a few have triggered postings on here. In fact, issues with accessing the BBC iPlayer still bring a goodly number of folk to this website. That may just be a matter of grabbing RealPlayer, now helpfully available as a DEB package, from the requisite place on the web and ensuring that Ubuntu-Restricted-Extras is in place too but you have to know that in the first place. Even so, there are unexpected behaviours like Palimpsest seeing every partition on a disk as a different drive and SIL RAID mappings being seen for hard drives that used to live on the main home PC that bit the dust earlier this year. It only happens on one of the machines that I have running Ubuntu so it may be a hardware thing, and the newly added hard drive uses none of the SIL mapping either. Perhaps more seriously (is it something that a new user should be encountering?), a misfiring variant of Brasero had me moving to K3b. Then UFRaw was sluggish in batch but that’s nothing that having a Debian VM won’t overcome. Rough edges like these do get you asking if 9.10 was ready for the big time while making you reluctant to recommend it to mainstream users like my brother.

The counterpoint to the above is that 9.10 includes a host of under-the-bonnet changes like the introduction of Ext4 hard drive formatting, Xsplash to allow the faster system loading to occur unseen and GNOME 2.28. To someone looking in from outside like me, that looks like a lot of work and might explain the ingress of the annoyances that I have seen. Add to that the fact that we are between Debian releases so things like the optimised packaging of ImageMagick or UFRaw may not be so high up the list of the things to do, especially with the more general speed optimisations that were put in place for 9.10. With 10.04 set to be an LTS release, I’d be hoping that consolidation is the order of the day over the next five or six months but it seems to be the inclusion of new features and other such progress that get magazine reviewers giving higher ratings (Linux Format has given it a mark of 9 out of 10). With the mooted inclusion of GNOME 3 and its dramatically different interface in 10.10, they should get their fill of that. However, I’d like to see some restraint for the sake of a smooth transition from the familiar GNOME 2.x to the new. If GNOME 3 stays very like its alpha builds, then the question as to how users will take to it arises. Of course, there’s some time yet before we see GNOME 3 and, having seen how the Ubuntu developers transformed GNOME 2.28, I wouldn’t be surprised if the impact of any change could be dulled.

In summary, my few weeks with Ubuntu 9.10 as my main OS have thrown up no major roadblocks that would cause me to look at moving elsewhere; Fedora would be tempting if that situation were to arise. The irritations that I have seen are more like signs of a lack of polish and remain peripheral to day-to-day working if you discount CD/DVD burning. To be honest, there always have been roughnesses in Ubuntu but has the lack of sizeable change spoilt us? Whatever about how things feel afterwards, big changes can mean new problems to resolve and inspire blog posts describing any solutions so it’s not all bad. If that’s what Canonical wants to see, they might get it and the year ahead looks as if it is going to be an interesting one after a recent quieter period.