Online favicon.ico creation

21st January 2008

I recently updated the icon that appears beside this blog’s address in the address bar and bookmarks menus of some browsers. I gave it a go in GIMP but I seemed to get no joy. I pottered out on the web to discover what I might have done wrong only to find Dynamic Drive offering online favicon.ico creation. Out of curiosity, I decided to give the thing a whirl and download the result to upload onto my web server. GIFs, JPGs, PNGs and BMPs with a size less than 150 KB are accepted and it did work for me.

More Linux Distributions

21st September 2012

If a certain Richard Stallman had his way, Linux would be called GNU/Linux because he wants GNU to have some of the credit, but we’re lazy creatures and we all call it Linux instead. What still amazes me is the number of Linux distributions that are out there. This list captures those that do not fit into other lists that you can find in the sidebar, so do look at the others as well.

Many fit into the desktop and server computing paradigms while a minority are very distinctive. It is easier to write about the latter than the former, though personal experiences do add to any narrative. It is tempting to think that everything has become static after more than thirty years, yet that may be foolish given the ongoing flux in the world of technology. Only change is ever a constant presence.

More in the Way of Privacy

The controversy about security agencies eavesdropping on internet communications has upset some and here are some distros offering anonymity and privacy. Of course, none of these should be used for unlawful purposes since there are those in less liberal countries who need invisibility to speak their minds.

It is harder and harder to create a Linux distro that is very different from the rest, but this one uses application virtualisation for added security. You can organise your software into different domains so that you work more securely when moving data between applications from different domains.

There is more than a hint of privacy-mindedness in this distro when you look long enough at what it offers. Cinnamon, MATE and Xfce desktop environments are part of the offer and there is added software for extra privacy and security.

This is an option for those who are worried about being tracked online. All internet connections are sent via the Tor network and it is run exclusively as a live distro from CD, DVD or USB stick drive too, so no trace is left on any PC. The basis is Debian and the distro’s name is an acronym: The Amnesiac Incognito Live System. For us living in a democratic country, the effort may seem excessive but that changes in other places where folk are not so fortunate. The use of Tor may not be perfect but it should help in combination with the use of different sessions for different tasks and encrypting any files. There even is an option to make the desktop appear like that of Windows XP for extra discreetness of use.

Most Linux distros that have enhanced security and anonymity as a feature are not installable on a PC, but that exactly is what’s unique about Whonix. It’s based on Debian but all internet connections go via the Tor network. The latter is called Whonix-Gateway with Whonix-Workstation being what you use to work on your system. It may sound like being overly careful but it has me intrigued.

Entertainment

In many ways, these are appliance distros for anyone who just wants an install-it-and-go approach to things. That works better with dedicated devices than with multipurpose machines, so that is one thing that needs to be kept in mind.

The idea behind this offering is what it offers console gamers. Legacy games and peripherals will work and there even is support for Raspberry Pi as well.

The main purpose of this distro is to offer a home for the KODI entertainment centre on PC and Raspberry Pi devices. It follows from the now defunct OpenELEC project, which ran into trouble when developers’ voices were not given a hearing.

The acronym stands for Open-Source Media Centre and there is KODI here too. Though the distro also is based on Debian, one is tempted to wonder why anyone would not just install that and install KODI on top of it. The answer possibly has something to do with added user-friendliness for those who do not need to deal with such things.

Mandriva Offshoots

Mandrake once was a spin of Red Hat with a more user-friendly focus. In the days before the appearance of Ubuntu, it would have been a choice for those not wanting to overcome obstacles such as a level of hardware support that was much less than what we have today. Later, Mandrake became Mandriva following litigation and the acquisition of Conectiva in 2005. The organisation has declined since those heady days and it became defunct during 2015. Its legacy continues though in the form of two spin-off projects, so all the work of forebears has not been lost.

It was the uncertainty surrounding the future of Mandriva that originally caused this project to be started. Beginnings have been promising, so this is one to watch, though you have to wonder if the now community-based OpenMandriva is stealing some of its limelight.

Of the pair that is listed here, it is OpenMandriva which is a continuation of the now-defunct Mandriva. Seeing how things progress for a project with user-friendliness at its heart will be of interest in these days when Debian, Ubuntu and Linux Mint are so pervasive. Even with those, there are KDE options, so there is a challenge in place.

Anything Russian may not be everyone’s choice given the state of world affairs at the time of writing, yet this still is an offshoot of Mandriva so it gets a mention in this list. Desktop environment options include KDE, XFCE and LXQt and there are various use cases covered by a range of solutions.

Others

Not every distro falls into the above categories, and some that you find here may surprise you. There are some better-known names like openSUSE that go their own way.

Aside from the founder’s dislike of ISO disk images for whatever reason, this distro has its own eccentricities. For example, it is container-friendly, runs in memory as root and much more. This is branded as an experimental distro, and it is that in many ways.

This project creates respins of openSUSE for the sake of a more refined experience. For instance, there are live booting ISO images as well as inclusion of media codecs. There is plenty of choice too when it comes to desktop environments.

From what I have seen, this project seems to be supporting the same needs as Arch, albeit with all software needing to be compiled, so there’s more of a DIY approach. The wiki also comes in handy for those users.

Billing itself as a lean independent distribution focussing on QT and KDE, this is built from the ground up without any dependence on other distros. Some tools, like pacman, naturally come from elsewhere in this otherwise standalone offering.

Here is another distro apart from Ubuntu that has an African name, the Zulu for big chief this time around. It came to my notice among the pages of the now defunct Micro Mart magazine and uses MATE, XFCE, Enlightenment and KDE as its desktop environment choices.

SuSE Linux was one of the first Linux distros that I started to explore and I even had it loaded on my home PC as a secondary operating system for quite a while too before my attention went elsewhere. Only for a PC Plus cover-mounted CD, I never might have discovered it and it bested Red Hat, which was as prominent then as Fedora is today. When SuSE fell into Novell’s hands, it became both openSUSE and SuSE Linux Enterprise Edition. The former is the community edition and the latter is what Novell, now itself an Attachmate Group company, offers to business customers. As it happens, I continue to keep an eye on openSUSE and even had it on a secondary PC before font resolution deficiencies had me looking elsewhere. While it’s best known for its KDE variant, there is a GNOME one too and it is this that I have been examining.

There was a time when this was being touted as an Ubuntu killer but it never seems to have made good on that promise. Recent troubles within the project haven’t helped either, especially with a long wait between releases.

This Turkish distro recently got reviewed in Linux Format and they were not satisfied with its documentation. It does not help that the website is not in English, so you need a translation tool of your choosing for this one.

Though there also is a spin using the MATE desktop environment, this distro is perhaps better known as the home for the Budgie desktop environment. All of this is for computing and not its business or enterprise counterpart. There is nothing to say against that and it may make it feel a little more friendly.

The name sounded familiar for some reason and I reckon that’s because Samsung has smartphones running Tizen on sale. The whole point of the project is to power mobile computing platforms with only the mention of netbooks sullying an otherwise non-PC target market that includes tablets and TVs. It’s overseen by the Linux Foundation too.

A useful little device

1st October 2011

Last weekend, I ran into quite a lot of bother with my wired broadband service. Eventually, after a few phone calls to my provider, it was traced to my local telephone exchange and took another few days before it finally got sorted. Before that, a new ADSL filter (from a nearby branch of Maplin as it happened) was needed because the old one didn’t work with my phone. Without that, it wouldn’t have been possible to debug what was happening with the broadband clashing with my phone with the way that I set up things. Resetting the router was next and then there was a password change before the exchange was blamed. After all that, connectivity is back again and I even upgraded in the middle of it all. Downloads are faster and television viewing is a lot, lot smoother too. Having seen fairly decent customer service throughout all this, I am planning to stick with my provider for a while longer too.

Of course, this outage could have left me disconnected from the Internet but for the rise of mobile broadband. Working off dongles is all very fine until coverage lets you down and that seems to be my experience with Vodafone at the moment. Another fly in the ointment was my having a locked down work laptop that didn’t entertain the software installation that is needed for running these things, a not unexpected state of affairs though it is possible to connect over wired and wireless networks using VPN. With my needing to work from home on Monday, I really had to get that computer online. Saturday evening saw me getting my Toshiba laptop online using mobile broadband and then setting up an ad hoc network using Windows 7 to hook up the work laptop. To my relief, that did the trick but the next day saw me come across another option in Argos (the range of computing kit in there still continues to surprise me) that made life even easier.

While seeing if it was possible to connect a wired or wireless router to mobile broadband, I came across devices that both connected via the 3G network and acted as wireless routers too. Vodafone have an interesting option into which you can plug a standard mobile broadband dongle for the required functionality. For a while now, 3 has had its Mifi with the ability to connect to the mobile network and relay Wi-Fi signals too. Though it pioneered this as far as I know, others are following its lead with T-Mobile offering something similar: its Wireless Pointer. Unsurprisingly, Vodafone has its own too though I didn’t find any mention of mobile Wi-Fi on the O2 website.

That trip into Argos resulted in a return home to find out more about the latter device before making a purchase. Having had a broadly positive experience of T-Mobile’s network coverage, I was willing to go with it as long as it didn’t need a dongle. The T-Mobile one that I have seems not to be working properly so I needed to make sure that wasn’t going to be a problem before I spent any money. When I brought home the Wireless Pointer, I swapped the SIM card from the dongle to get going without too much to do. Thankfully, the Wi-Fi is secured using WPA2 and the documentation tells you where to get the entry key. Having things secured like this means that someone cannot fritter away your monthly allowance too and that’s as important for PAYG customers (like me) as it is for those with a contract. Of course, eavesdropping is another possibility that is made more difficult too. So far, I have stuck with using it while plugged in to an electrical socket (USB computer connections are possible as well) but I need to check on the battery life too. Up to five devices can be connected by Wi-Fi and I can vouch that working with two connected devices is more than a possibility. My main PC has acquired a Belkin Wi-Fi dongle in order to use the Wireless Pointer too and that has worked very well too. In fact, I found that connectivity was independent of what operating system I used: Linux Mint, Ubuntu, Windows XP and Windows 7 all connected without any bother. The gadget fits in the palm of my hand too so it hardly can be called large but it does what it sets out to do and I have been glad to have it so far.

All that was needed was a trip to a local shop

5th March 2011

In the end, I did take the plunge and acquired a Sigma 50-200 mm f4-5.6 DC OS HSM lens to fit my ever faithful Pentax K10D. After surveying a few online retailers, I plumped for Park Cameras where the total cost, including delivery, came to something around £125. This was around £50 less than what others were quoting for the same lens with delivery costs yet to be added. Though the price was good at Park Cameras, I was wondering still about how they could manage to do that sort of deal when others don’t. Interestingly, it appears that the original price of the lens was around £300 but that may have been at launch and prices do seem to tumble after that point in the life of many products of an electrical or electronic nature.

Unlike the last lens that I bought from them around two years ago, delivery of this item was a prompt affair with dispatch coming the day after my order and delivery on the morning after that. All in all, that’s the kind of service that I like to get. On opening the box, I was surprised to find that the lens came with a hood but without a cap. However, that was dislodged slightly from my mind when I remembered that I neglected to order a UV or skylight filter to screw into the 55 mm front of it. In the event, it was the lack of a lens cap that needed sorting more than the lack of a filter. The result was that I popped in the local branch of Wildings where I found the requisite lens cap for £3.99 and asked about a filter while I was at it. Much to my satisfaction, there was a UV filter that matched my needs in stock though it was not that cheap at £18.99 and was made by a company of which I hadn’t heard before, Massa. This was another example of good service when the shop attendant juggled two customers, a gentleman looking at buying a DSLR and myself. While I would not have wanted to disturb another sales interaction, I suppose that my wanting to complete a relatively quick purchase was what got me the attention while the other customer was left to look over a camera, something that I am sure he would have wanted to do anyway. After all, who wouldn’t?

With the extras acquired, I attached them to the front of the lens and carried out a short test (with the cap removed, of course). When it was pointed at an easy subject, the autofocus worked quickly and quietly. A misty hillside had the lens hunting so much that turning to manual focussing was needed a few times to work around something understandable. Like the 18-125 mm Sigma lens that I already had, the manual focussing ring is generously proportioned with a hyperfocal scale on it though some might think the action a little loose. In my experience though, it seems no worse than the 18-125 mm so I can live with it. Both lenses share something else in common in the form of the zoom ring having a stiffer action than the focus ring. However, the zoom lock of the 18-125 mm is replaced by an OS (Optical Stabilisation) one on the 50-200 mm and the latter has no macro facility either, another feature of the shorter lens though it remains one that I cannot ever remember using. In summary, first impressions are good but I plan to continue appraising it. Maybe an outing somewhere tomorrow might offer a good opportunity for using it a little more to get more of a feeling for its performance.

Changing file timestamps using Windows PowerShell

29th October 2014

Recently, a timestamp got changed on an otherwise unaltered file on me and I needed to change it back. Luckily, I found an answer on the web that used PowerShell to do what I needed and I am recording it here for future reference. The possible commands are below:

$(Get-Item temp.txt).CreationTime=$(Get-Date "27/10/2014 04:20 pm")

$(Get-Item temp.txt).LastWriteTime=$(Get-Date "27/10/2014 04:20 pm")

$(Get-Item temp.txt).LastAccessTime=$(Get-Date "27/10/2014 04:20 pm")

The first of these did not interest me since I wanted to leave the file creation date as it was. The last write and access times were another matter because these needed altering. The Get-Item commandlet brings up the file, so its properties can be set. Here, these include CreationTime, LastWriteTime and LastAccessTime. The Get-Date commandlet reads in the provided date and time for use in the timestamp assignment; it parses the string according to the current culture, so the day-first format above assumes a locale such as en-GB. While PowerShell itself is case-insensitive, I have opted to show the camel case that is produced when you are tabbing through command options for the sake of clarity.
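For anyone needing the same fix away from PowerShell, here is a rough Python counterpart. It is only a sketch: the set_file_times helper is my own naming, the file is a scratch copy standing in for temp.txt, and the standard os.utime call only touches the access and modification times, so the creation date is left alone in the same way.

```python
import os
import tempfile
from datetime import datetime


def set_file_times(path, when):
    """Set both the last access and last write times of path to when."""
    # A naive datetime is interpreted in the local time zone.
    stamp = when.timestamp()
    # os.utime takes (access time, modification time); creation time is untouched.
    os.utime(path, (stamp, stamp))


if __name__ == "__main__":
    # A throwaway folder is used so that nothing real gets altered.
    with tempfile.TemporaryDirectory() as folder:
        target = os.path.join(folder, "temp.txt")
        open(target, "w").close()
        set_file_times(target, datetime(2014, 10, 27, 16, 20))
        print(datetime.fromtimestamp(os.path.getmtime(target)))
```

Building the date with datetime(...) sidesteps the day-first versus month-first question that arises whenever a date string has to be parsed.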

Get-Item has an alias of gi (Get-Date has no alias by default) and the Get-Alias commandlet will show you a full list while Get-Command (gcm) gives you a list of commandlets. Issuing the following gets you a formatted list that is sent to a text file:

gcm | Format-List > temp2.txt

There is some online help but it is not quite as helpful as it ought to be so I have popped over to Microsoft Learn whenever I needed extra enlightenment. Here is a command that pops the full thing into a text file:

Get-Help Format-List -full > temp3.txt

In fact, getting a book might be the best way to find your way around PowerShell because of all its commandlets and available objects.

For now, other commands that I have found useful include the following:

Get-Service | Format-List

New-Item -Name test.txt -ItemType "file"

The first of these gets you a list of services while the second creates a new blank text file for you and it can create new folders for you too. Other useful commandlets are below:

Get-Location (gl)

Set-Location (sl)

Copy-Item

Remove-Item

Move-Item

Rename-Item

The first of the above is like the cwd or pwd commands that you may have seen elsewhere in that the current directory location is given. Then, the second will change your directory location for you. After that, there are commandlets for copying, deleting, moving and renaming files. These also have aliases so users of the legacy Windows command line or a UNIX or Linux shell can use something that is familiar to them.
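For comparison, the same file housekeeping can be sketched with Python's standard library. This is an illustration and not a replacement for the commandlets: the demo_file_operations function and the file names in it are made up for the purpose, and everything happens inside a throwaway folder.

```python
import os
import shutil
import tempfile
from pathlib import Path


def demo_file_operations(workspace):
    """Create, copy, rename, move and delete files; return what is left behind."""
    workspace = Path(workspace)
    original = workspace / "test.txt"
    original.touch()                               # like New-Item -ItemType "file"
    copy = workspace / "copy.txt"
    shutil.copy(original, copy)                    # like Copy-Item
    renamed = workspace / "renamed.txt"
    copy.rename(renamed)                           # like Rename-Item
    archive = workspace / "archive"
    archive.mkdir()                                # New-Item can make folders too
    shutil.move(str(renamed), str(archive / "renamed.txt"))  # like Move-Item
    original.unlink()                              # like Remove-Item
    return sorted(p.relative_to(workspace).as_posix() for p in workspace.rglob("*"))


if __name__ == "__main__":
    print(os.getcwd())                             # like Get-Location
    with tempfile.TemporaryDirectory() as folder:
        print(demo_file_operations(folder))
```

The counterpart to Set-Location would be os.chdir, which is left out of the run above so that the working directory stays where it was.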

Little fixes like the one with which I started this piece are all very good to know but it is in scripting that PowerShell really is said to show its uses. Having seen the usefulness of such things in the world of Linux and UNIX, I cannot disagree with that and PowerShell has its own IDE too. That may be just as well given how much there is to learn. That especially is the case when you might need to issue the following command in a PowerShell session opened using the Run as Administrator option just to get script execution working as you need it:

Set-ExecutionPolicy RemoteSigned

Issuing Get-ExecutionPolicy will show you if this is needed when the response is: Restricted. A response of RemoteSigned shows you that all is in order, though you need to check that any script you then run has no nasty payload in there, which is why execution is restrictive in the first place. This sort of thing is yet another lesson to be learnt with PowerShell.

Other Website Tools

19th March 2011

Here are a few pieces of open-source software that would fall into the category of website accessories. The list initially was a short one but is growing into a very eclectic collection. More will be added as they come my way.

JavaScript may be an interpreted language, but the concept has been applied here too. More correctly, Babel is a JavaScript code builder that takes code written in its utility language and expands it into standard verbose JavaScript that is easier to read and maintain than if a utility library has been used.

All of these are tools you can use to set up a web forum. Discourse tends to hide away its wares from anyone who fancies downloading it for a look and that cannot be said about the others. Of the rest, phpBB is a standalone option while bbPress is built on WordPress.

This is a Node.js tool that supports simultaneous cross-browser testing, making the process easier and faster.

This is a JavaScript CSS pre-processor that adds functionality to CSS that would not be in place with the base language.

While I have started to evaluate Open Web Analytics, this has been my main self-hosted website traffic analyser. Using Google Analytics and Woopra is all very fine but they are implemented using JavaScript scripts hosted on other parts of the web and that can slow down page loading as well as giving blockers of JavaScript something else to do.

Matomo works well and has an attractive interface but it can be sluggish to load, so much so that I have set it to load its work data in the database instead of the web server file system. For those wanting to stick with older versions for the sake of speed and/or reliability, there even is a repository of these.

While I mainly use Matomo, this is another website traffic analysis tool. What caused me to look at it once I saw a mention in Linux Magazine is the sluggishness of Matomo. The interface may not be as swish but it does load faster so I will keep it under evaluation. It, too, is self-hosted, so there is no need to have JavaScript or PHP enabled connections to other web services.

The point of this tool is to manage advertising. While that means that I may never get to use it, that’s never to say that there aren’t others who might.

There may be a myriad of file storage providers on the web, but that doesn’t mean that there isn’t a place for a self-hosted alternative of your own. After all, some providers may not be as robust as you’d like them to be so it’s always good to feel that a little more control can come your way. That is where ownCloud comes in and it is not dissimilar to other web applications with its dependence on PHP, MySQL and so on. DIY approaches are not dead and gone just yet.

This is not a UI framework but is a set of tools for building up sets of reusable components and templates. There is a chemical/biological metaphor for template components and the tools are installable on development systems. The approach is an interesting one.

There are times when I have wished to override whatever post-processing has been added to CSS on me, so a JavaScript tool like PostCSS looks interesting to me.

Essentially, this is an open-source counterpart to what powers Twitter that you can run yourself on your own system. Whether shared website hosting can support how it works remains to be seen but you can try it out for yourself at identi.ca.

This is an open-source file sharing and synchronisation platform that offers a self-hosted counterpart to the likes of Dropbox, Google Drive and others. With all the discussion about privacy and the eavesdropping revelations of the last few years, this is a pertinent tool in this age of cloud computing.

This is an open-source webmail platform that runs on a web server like many other web applications. It could have its uses for small enterprises.

Another tool that extends CSS, this makes management of CSS easier than if you were coding stylesheets by hand.

A PHP library for re-using RSS feeds in web content.

This is a self-hosted analogue to the now doomed Google Reader. There may be other options in the cloud like Netvibes, but there is some additional security in having an option almost entirely under your own control.

This is a more general Linux and UNIX server administration tool, but web platforms see service too so it gets added to this list. It, too, runs on a web server instance itself and there are a number of modules for things such as cloud computing and virtualisation.

Moves to Hugo

30th November 2022

What amazes me is how things can become more complicated over time. As long as you knew HTML, CSS and JavaScript, building a website was not as onerous as long as web browsers played ball with it. Since then, things have got easier to use but more complex at the same time. One example is WordPress: in the early days, themes were much simpler than they are now. The web also has got more insecure over time, and that adds to complexity as well. It sometimes feels as if there is a choice to make between ease of use and simplicity.

It is against that background that I reassessed the technology that I was using on my public transport and Irish history websites. The former used WordPress, while the latter used Drupal. The irony was that the simpler website was using the more complex platform, so the act of going simpler probably was not before time. Alternatives to WordPress were being surveyed for the first of the pair, but none had quite the flexibility, pervasiveness and ease of use that WordPress offers.

There is another approach that has been gaining notice recently. One part of this is the use of Markdown for web publishing. This is a simple and distraction-free plain text format that can be transformed into something more readable. It sees usage in blogs hosted on GitHub, but also facilitates the generation of static websites. The clutter is absent for those who have no need of the Gutenberg Editor on WordPress.

With the content written in Markdown, it can be fed to a static website generator like Hugo. Using defined templates and fixed assets like CSS together with images and other static files, it can slot the content into HTML files very speedily since it is written in the Go programming language. Once you get acclimatised, there are no folder structures that cannot be used, so you get full flexibility in how you build out your website. Sitemaps and RSS feeds can be built at the same time, both using the same input as the HTML files.

In a nutshell, it automates what once needed manual effort using a code editor or a visual web page editor. The use of HTML snippets and layouts means that there is no necessity for hand-coding content, like there was at the start of the web. It also helps that Bootstrap can be built in using Node, so that gives a basis for any styling. Then, SCSS can take care of things, giving even more automation.

Given that there is no database involved in any of this, the required information has to be stored somewhere, and neither the Markdown content nor the layout files contain all that is needed. The main site configuration is defined in a single TOML file, and you can have a single one of these for every publishing destination; I have development and production servers, which makes this a very handy feature. Otherwise, every Markdown file needs a YAML header where titles, template references, publishing status and other similar information gets defined. The layouts then are linked to their components, and control logic and other advanced functionality can be added too.
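To make the idea of a YAML header more concrete, here is a small Python sketch that separates such a header from the Markdown beneath it. The sample page and its values are invented for illustration, and the parser is deliberately naive: it only copes with flat key and value lines, whereas Hugo itself accepts full YAML.

```python
SAMPLE = """---
title: "A hypothetical page"
layout: single
draft: false
---
Some *Markdown* content.
"""


def split_front_matter(text):
    """Split a Markdown document into (header dict, body string).

    Only flat "key: value" lines are understood; real YAML is far richer.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text  # no header at all
    header = {}
    for index, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            # Everything after the closing delimiter is the page body.
            body = "\n".join(lines[index + 1:]).lstrip("\n")
            return header, body
        if ":" in line:
            key, value = line.split(":", 1)
            header[key.strip()] = value.strip().strip('"')
    return {}, text  # no closing delimiter, so treat it all as body


if __name__ == "__main__":
    header, body = split_front_matter(SAMPLE)
    print(header)
    print(body)
```

All values come back as plain strings here, so a publishing status like draft would still need converting to a true or false value before use.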

Because static files are being created, it does mean that site searching and commenting, or contact pages cannot work like they would on a dynamic web platform. Often, external services are plugged in using JavaScript. One that I use for contact forms is Getform.io. Then, Zapier has had its uses in using the RSS feed to tweet site updates on Twitter when new content gets added. Though I made different choices, Disqus can be used for comments and Algolia for site searching. Generally, though, you can find yourself needing to pay, particularly if you need to remove advertising or gain advanced features.

Some comments service providers offer open source self-hosted options, but I found these difficult to set up and ended up not offering commenting at all. That was after I tried out Cactus Comments only to find that it was not discriminating between pages, so it showed the same comments everywhere. There are numerous alternatives like Remark42, Hyvor Talk, Commento, FastComments, Utterances, Isso, Mouthful, Muut and HyperComments but trying them all out was too time-consuming for what commenting was worth to me. It also explains why some static websites even send readers to Twitter if they have something to say, though I have not followed this way of working.

For searching, I added a JavaScript/JSON self-hosted component to the transport website, and it works well. However, it adds to the size of what a browser needs to download. That is not a major issue for desktop browsers, but the situation with mobile browsers is such that it has a sizeable effect. Testing with PageSpeed and Lighthouse highlighted this, even if I left things as they are. The solution works well in any case.
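The download cost follows from how this kind of search tends to work: the text of every page is gathered into one JSON file that the browser fetches before any searching can happen. The Python sketch below illustrates that general idea only and is not the component that I used; the page data, field names and function names are all hypothetical.

```python
import json

# Hypothetical pages standing in for the content of a static website.
PAGES = [
    {"url": "/routes/", "title": "Bus routes", "body": "Timetables and   route maps."},
    {"url": "/fares/", "title": "Fares", "body": "Ticket prices\nfor buses and trains."},
]


def build_search_index(pages):
    """Return a JSON string holding one searchable entry per page."""
    entries = [
        {
            "url": page["url"],
            "title": page["title"],
            # Collapse runs of whitespace to keep the payload down.
            "text": " ".join(page["body"].split()),
        }
        for page in pages
    ]
    return json.dumps(entries, ensure_ascii=False)


def search(index_json, term):
    """Return the URLs of entries whose title or text contains term."""
    needle = term.lower()
    return [
        entry["url"]
        for entry in json.loads(index_json)
        if needle in entry["title"].lower() or needle in entry["text"].lower()
    ]


if __name__ == "__main__":
    index = build_search_index(PAGES)
    # The byte count is what every visitor would need to download.
    print(len(index.encode("utf-8")), "bytes")
    print(search(index, "bus"))
```

Since the index grows with every page added, trimming what goes into the text field is one obvious lever if mobile performance ever became a concern.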

One thing that I have yet to work out is how to edit or add content while away from home. Editing files using an SSH connection is as much a possibility as setting up a Hugo publishing setup on a laptop. After that, there is the question of using a tablet or phone, since content management systems make everything web based. These are points that I have yet to explore.

As is natural with a code-based solution, there is a learning curve with Hugo. Reading a book provided some orientation, and looking on the web resolved many conundrums. There is good documentation on the project website, while forum discussions turn up on many a web search. Following any research, there was next to nothing that could not be done in some way.

Migration of content takes some forethought and took quite a bit of time, though there was an opportunity to carry out some housekeeping as well. The history website was small, so copying and pasting sufficed. For the transport website, I used Python to convert what was on the database into Markdown files before refining the result. That provided some automation, but left a lot of work to be done afterwards.
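The general shape of such a conversion can be sketched as follows. This is not the script that I used: an in-memory SQLite table named posts with slug, title and content columns stands in for the real database, all of those names are assumptions, and the content is copied across verbatim with no attempt at turning HTML into Markdown.

```python
import sqlite3
import tempfile
from pathlib import Path


def export_posts(connection, output_dir):
    """Write one Markdown file with a YAML header per row of the posts table."""
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    rows = connection.execute("SELECT slug, title, content FROM posts ORDER BY slug")
    for slug, title, content in rows:
        # Escape backslashes and quotes so the quoted YAML string stays valid.
        safe_title = title.replace("\\", "\\\\").replace('"', '\\"')
        lines = [
            "---",
            'title: "' + safe_title + '"',
            "draft: false",
            "---",
            "",
            content.strip(),
            "",
        ]
        target = output_dir / (slug + ".md")
        target.write_text("\n".join(lines), encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    # A made-up table and row, purely for demonstration.
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE posts (slug TEXT, title TEXT, content TEXT)")
    db.execute(
        "INSERT INTO posts VALUES (?, ?, ?)",
        ("timetables", "Bus timetables", "Some text.\n"),
    )
    with tempfile.TemporaryDirectory() as folder:
        for path in export_posts(db, folder):
            print(path.name)
            print(path.read_text(encoding="utf-8"))
```

Anything like shortcodes, embedded HTML or image paths would still need attention afterwards, which is where the manual refining comes in.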

The results were satisfactory, and I like the associated simplicity and efficiency. That Hugo works so fast means that it can handle large websites, so it is scalable. The new Markdown method for content production is not problematical so far apart from the need to make it more portable, and it helps that I found a setup that works for me. This also avoids any potential dealbreakers that continued development of publishing platforms like WordPress or Drupal could bring. For the former, I hope to remain with the Classic Editor indefinitely, but now have another option in case things go too far.

OWASP Top 10 for Large Language Model Applications

21st January 2024

OWASP stands for Open Web Application Security Project, and it is an online community dedicated to web application security. They are well known for their Top 10 Web Application Security Risks and late last year, they added a Top 10 for Large Language Model (LLM) Applications.

Given that large language models made quite a splash last year, this was not before time. ChatGPT gained a lot of attention (OpenAI also has had DALL-E for generation of images for quite a while now), and there are many others, with Anthropic Claude and Perplexity also being mentioned more widely.

Figuring out what to do with any of these is not as easy as one might think. For someone more used to working with computer code, using natural language requests is quite a shift when you no longer have documentation that tells you what can and what cannot be done. It is little wonder that prompt engineering has emerged as a way to deal with this.

Others have been plugging LLM capability into chatbots and other applications, so security concerns have come to light. So far, I have not heard anything about a major security incident, but some are thinking already about how to deal with AI-suggested code that others already are using more and more.

Given all that, here is OWASP’s summary of their Top 10 for LLM Applications. This is a subject that is sure to draw more and more interest with the increasing presence of artificial intelligence in our everyday working and non-working lives.

LLM01: Prompt Injection

This manipulates an LLM through crafty inputs, causing unintended actions by the LLM. Direct injections overwrite system prompts, while indirect ones manipulate inputs from external sources.

LLM02: Insecure Output Handling

This vulnerability occurs when an LLM output is accepted without scrutiny, exposing backend systems. Misuse may lead to severe consequences such as Cross-Site Scripting (XSS), Cross-Site Request Forgery (CSRF), Server-Side Request Forgery (SSRF), privilege escalation, or remote code execution.
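As a minimal sketch of what scrutinising output can mean for the XSS case, the following treats model output as untrusted text and escapes it before it goes into a web page. The function name and the surrounding paragraph tag are assumptions for illustration, and escaping alone does nothing for the other risks listed above.

```python
import html


def render_reply(llm_output: str) -> str:
    """Escape model output so any markup in it is displayed, not executed."""
    # html.escape converts &, <, > and quote characters into HTML entities.
    return f"<p>{html.escape(llm_output)}</p>"
```

With this in place, a response containing a script tag ends up on the page as visible text rather than as running code.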

LLM03: Training Data Poisoning

This occurs when LLM training data is tampered with, introducing vulnerabilities or biases that compromise security, effectiveness, or ethical behaviour. Sources include Common Crawl, WebText, OpenWebText and books.

LLM04: Model Denial of Service

Attackers cause resource-heavy operations on LLMs, leading to service degradation or high costs. The vulnerability is magnified due to the resource-intensive nature of LLMs and the unpredictability of user inputs.
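One common way to blunt this is to cap prompt size and limit how often each user can call the model. The sketch below shows that idea only; the class name and the three limits are made-up values for illustration, and a real service would most likely enforce them at an API gateway rather than in application code.

```python
from collections import defaultdict, deque

MAX_PROMPT_CHARS = 4000   # assumed cap on prompt length
MAX_REQUESTS = 3          # assumed number of requests allowed per window
WINDOW_SECONDS = 60.0     # assumed length of the sliding window


class RequestGate:
    """Reject oversized prompts and users who send too many requests."""

    def __init__(self) -> None:
        self._history = defaultdict(deque)  # user id -> recent request times

    def allow(self, user_id: str, prompt: str, now: float) -> bool:
        if len(prompt) > MAX_PROMPT_CHARS:
            return False
        recent = self._history[user_id]
        # Forget requests that have fallen out of the sliding window.
        while recent and now - recent[0] >= WINDOW_SECONDS:
            recent.popleft()
        if len(recent) >= MAX_REQUESTS:
            return False
        recent.append(now)
        return True
```

Passing the current time in as `now` (for example, from `time.monotonic()`) keeps the class easy to test.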

LLM05: Supply Chain Vulnerabilities

The LLM application lifecycle can be compromised by vulnerable components or services, leading to security attacks. Using third-party datasets, pre-trained models, and plugins can add vulnerabilities.

LLM06: Sensitive Information Disclosure

LLMs may inadvertently reveal confidential data in their responses, leading to unauthorized data access, privacy violations, and security breaches. It’s crucial to implement data sanitization and strict user policies to mitigate this.
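Data sanitization can take many forms. As one small and deliberately simplistic sketch, the following masks anything that looks like an email address before text is sent to a model or shown to a user. The pattern and the placeholder text are assumptions, and real sanitization would need to cover far more than email addresses.

```python
import re

# A rough pattern for email addresses; it is not a full RFC 5322 matcher.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything resembling an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[redacted email]", text)
```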

LLM07: Insecure Plugin Design

LLM plugins can have insecure inputs and insufficient access control. This lack of application control makes them easier to exploit and can result in consequences such as remote code execution.

LLM08: Excessive Agency

LLM-based systems may undertake actions leading to unintended consequences. The issue arises from excessive functionality, permissions, or autonomy granted to the LLM-based systems.

LLM09: Overreliance

Systems or people overly depending on LLMs without oversight may face misinformation, miscommunication, legal issues, and security vulnerabilities due to incorrect or inappropriate content generated by LLMs.

LLM10: Model Theft

This involves unauthorized access, copying, or exfiltration of proprietary LLM models. The impact includes economic losses, compromised competitive advantage, and potential access to sensitive information.

EVF or OVF?

22nd December 2019

In photography, some developments are passing fads while others bring longer lasting changes. In their own way, special effects filters and high dynamic range techniques caused their share of excitement before that passed and their usage became more sensible. In fact, the same might be said for most forms of image processing because tastefulness eventually gets things in order. Equally, there are others that mark bigger shifts.

The biggest example of the latter is the move away from film photography to digital image capture. There still are film photographers but they largely depend on older cameras since very few are made any more. My own transition came later than others but I hardly use film any more and a lack of replacement parts for cameras that are more than fifteen years old only helps to keep things that way. Another truth is that digital photography makes me look at my images more critically and that helps for some continued improvement.

Also, mobile phone cameras have become so capable that the compact camera market has shrunk dramatically. In fact, I gave away my Canon PowerShot G11 earlier this year because there was little justification in hanging onto it. After all, it dated back to 2010 and a phone would do now what it once did though the G11 did more for me than I might have expected. Until 2017, my only photos of Swedish locations were made with that camera. If I ever was emotional at its departure and I doubt that I was, that is not felt now.

If you read photography magazines, you get the sense that mirrorless cameras have captured a lot of the limelight and that especially is the case with the introduction of full frame models. Some writers even are writing off the chances of SLR’s remaining in production though available model ranges remain extensive in spite of the new interlopers. Whatever about the departure of film, the possible loss of SLR’s with their bright optical viewfinders (OVF’s) does make me a little emotional since they were the cameras that so many like me aspired to owning during my younger years and the type has served me well over the decades.

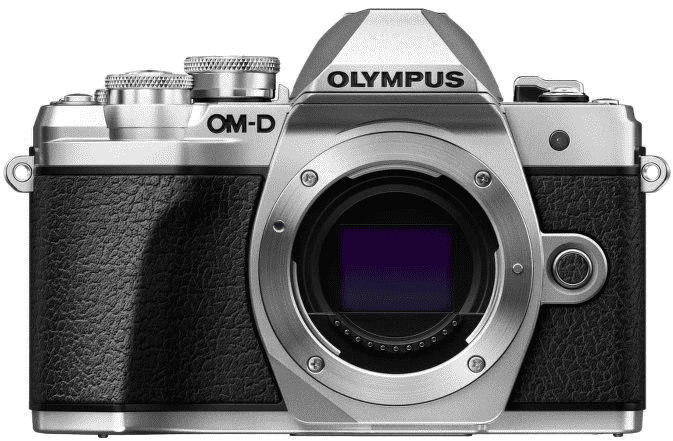

Even so, I too have used mirrorless cameras and an Olympus PEN E-PL5 came into my possession in 2013. However, I found that using the screen on the back of a camera was not to my liking and the quality of mobile phone cameras is such that I no longer need any added portability. That said, it needs to be remembered that using a Tamron 14 to 150 mm zoom lens with the body cannot have helped either. Wishing to sample a counterpart with an electronic viewfinder, I replaced it with an Olympus OM-D E-M10 Mark III earlier this year and have been getting on fine with that.

The body certainly is a compact one but the handling is very like an SLR and I have turned off the automatic switching between viewfinder and screen since I found it distracting; manually switching between the two is my preference. As it happens, using the EVF took a little acclimatisation but being able to add a spirit level overlay proved to be as useful as it was instructive. The resulting images may be strong in the green and blue ends of the visible spectrum but that suits a user that is partial to both colours anyway. It also helps that the 16.1 megapixel sensor creates compact images that are quick to upload to a backup service. There have been no issues working with my Tamron lens and keeping that was a deciding factor in my remaining with Olympus in spite of a shutter failure with the older camera. That was fixed efficiently and at a reasonable cost too.

As good as the new Olympus has been, it has not displaced my existing Canon EOS 5D Mark II and Pentax K5 II SLR’s. The frame size is much smaller anyway and January saw me acquire a new Sigma 24 to 105 mm zoom lens for the former after an older lens developed an irreparable fault. The new lens is working as expected and the sharpness of any resulting images is impressive. However, the full frame combination is weighty even if I do use it handheld so that means that the Pentax remains my choice for overseas trips. There also is an added brightness in the viewfinders of both cameras that I appreciate so the OM-D complements the others rather than replacing them.

While I can get on with EVF’s if SLR’s ever get totally superseded, I am planning to stick mainly with SLR’s for now. Interestingly, Canon has launched a new enthusiast model so there must be some continuing interest in them. Also, it seems that Canon foresees a hybrid approach where live viewing using the screen on the back of the camera may add faster autofocus or other kinds of functionality while the OVF allows more traditional working. That of itself makes me wonder if we might see cameras that can switch between EVF and OVF modes within the same viewfinder. The thought may be as far-fetched as it is intriguing, yet there may be other possibilities that have not been foreseen. One thing is clear though: we are in an age of accelerating change.

Restoration of service

27th March 2008

Unfortunately, due to a spot of hosting trouble, this blog was offline for a few days while I was getting things sorted out. Along the way, I learned a few lessons about web hosting that I’ll share soon. In the meantime, I’ll continue to set in place the last few bits and pieces that made the site’s predecessor what it was.