Improving Font Display in Fedora 15

30th May 2011

When I first started to poke around Fedora 15 after upgrading my Fedora machine, the definition of the font display was far from acceptable to me. Thankfully, it was something that I could resolve, and the letters of the words that I am writing now are being shown in a way that I find acceptable. The main thing that I did to achieve this was to add a file named 99-autohinter-only.conf in the folder /etc/fonts/conf.d. The file contains the following:

```xml
<?xml version="1.0"?>
<!DOCTYPE fontconfig SYSTEM "fonts.dtd">
<fontconfig>
  <match target="font">
    <edit name="autohint" mode="assign">
      <bool>true</bool>
    </edit>
  </match>
</fontconfig>
```

This forces autohinting for all fonts so that text looks better than it otherwise would. Apparently, Fedora 15 now has the TrueType bytecode interpreter (BCI) enabled in FreeType, since the relevant patents have expired. To my eyes, this has worsened font rendering, so I adopted the above from a post by Kevin Kofler, and it seems to have done the trick for me. However, I also have added the GNOME Tweak Tool, which allows you to alter the hinting settings too, so it may be a combination of the two actions that has helped. Anything that improves the rendering of letters like k can only be a good thing. Another Linux distribution whose font rendering has not satisfied me is openSUSE, and I am now left wondering if the same approach would help there too, albeit without the GNOME Tweak Tool until a GNOME 3 version of that distro comes to fruition.
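For anyone who would rather script the change than create the file by hand, here is a minimal Python sketch that writes the same fontconfig fragment. It uses only the standard library; the `write_autohint_conf` function and its directory argument are illustrative assumptions, and writing into /etc/fonts/conf.d itself would need root privileges. The fragment is parsed before it is written, so a slip such as curly quotes copied from a web page is caught early.

```python
import os
import xml.etree.ElementTree as ET

# The same fontconfig fragment as above: force autohinting for every font.
AUTOHINT_CONF = """<?xml version="1.0"?>
<!DOCTYPE fontconfig SYSTEM "fonts.dtd">
<fontconfig>
  <match target="font">
    <edit name="autohint" mode="assign">
      <bool>true</bool>
    </edit>
  </match>
</fontconfig>
"""


def write_autohint_conf(conf_dir, filename="99-autohinter-only.conf"):
    """Write the autohint fragment into conf_dir and return the file path.

    On Fedora, conf_dir would be /etc/fonts/conf.d (root required); any
    writable directory will do for trying it out.
    """
    # Parse first so that malformed XML is rejected before anything is written.
    ET.fromstring(AUTOHINT_CONF)
    path = os.path.join(conf_dir, filename)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(AUTOHINT_CONF)
    return path
```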

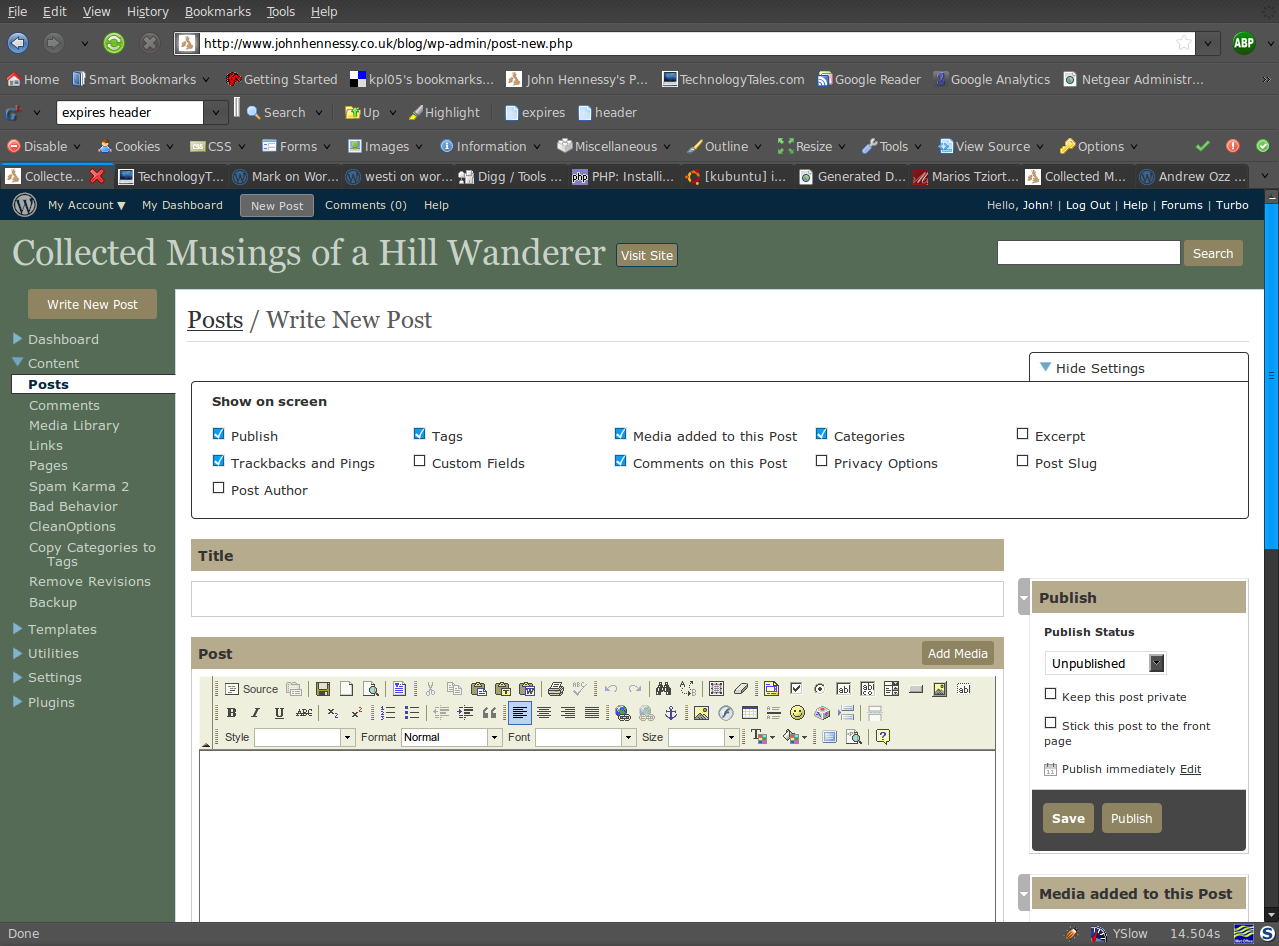

A radical new look on the way for WordPress’ administration area

31st August 2008

One thing that you can never say about Automattic is that it stands still for very long. That may generate adverse commentary from some, but it’s bad to let things stagnate too. In fact, resting on laurels also generates flak, so you can’t please everyone all the time.

Earlier on this year, the WordPress administration screens went through something of an overhaul for version 2.5. In hindsight, it wasn’t terribly dramatic, but the prospect had me checking out what was happening with the development version and contributing to the project in my own small way. Now, it seems that a bigger upheaval is in prospect, with wireframes and whatnot being brought into play on the design side.

The first change that anyone will notice is that the navigation has moved from the top to the side and that some things have been moved around and renamed. Another thing that you’ll see soon enough is that there is a QuickPress section added to the dashboard screen for those really quick and short postings. Sticking with the subject of content creation, alterations to post and page editing screens and the link creation screen are obvious too. What really comes to mind here is the level of customisation on offer so you can make yourself feel right at home: some of the screen furniture can be moved about and you can remove what you feel is nothing but useless clutter. Content generates comments, so the addition of keyboard shortcuts for comment moderation should be a boon for those with very active readers.

Some functionality currently added by plugins is getting incorporated in the main application. An example is automatic upgrades of WordPress itself. In the development version, it installs the latest nightly build but I am certain that it can be made to point to the latest stable release. That makes it more convenient for keeping a backseat eye on things rather than getting stuck into the hurly-burly of checking on what’s in Subversion.

There are some pieces that remain incompletely functional at present, such as the Inbox and the “Media added to this post” sections, but I have not seen anything that used to work become broken. So, while the development version of WordPress is more of a work in progress than I have seen for a while, it will do what you need it to do. You always need to be cautious, and I’d advise you to keep away until it’s ready for the big time unless you have the knowledge to put things back should the undesirable befall your blog. I had an automatic WordPress update put my blog into maintenance mode without taking it out again. Nothing happened to the database, so a manual re-installation was all that was required to restore order. Otherwise, the development installation remains relatively stable, though there are display problems in IE6 that do not afflict Firefox, Opera or even IE8 Beta 2.

All in all, these changes will make the next major WordPress release a very big one, and I have little doubt that the new administration interface will attract many comments. I must admit that I wasn’t too taken with it when I first glimpsed it in the crazyhorse branch, but it now seems to be growing on me. The biggest change on the aesthetic front is that there’s a lot less whitespace about, which may or may not float your boat. In any event, you can always change the colours as I have to make things more amenable. Even so, I reckon that WordPress 2.7 will be a major step forward when it comes, and I think that I could like it. That said, I reckon that the release date is a good while away yet, and the fact that 2.6.2 is being readied for release is telling in itself. Leaving plenty of time to remove any roughness is never a bad thing, especially when you see the scale of the changes being made. In the meantime, I am certain that most people can wait.

A Look at a Compact System Camera

4th September 2013

During August, I acquired an Olympus Pen E-PL5, and it is a camera to which I am still becoming accustomed; it looks as if that is set to continue for a while. The main reason that it appealed to me was the idea of having a camera with much of the functionality of an SLR but with dimensions closer to those of a compact camera. In that way, it was a step up from my Canon PowerShot G11 without my having to carry around something that was too bulky.

Before I settled on the E-PL5, I had been looking at Canon’s EOS M and got to hear about its sluggish autofocus. That it had no mode dial on its top plate was another consideration, though it does pack in an APS-C sized sensor (with Canon’s tendency to overexpose finding a little favour with me too on inspection of images from a well-aged Canon EOS 10D) at a not so unappealing price of around £399. Seeing a group test of it and similar cameras in Practical Photography was enough to land that particular issue in my possession, and the testers liked the similarly priced Olympus Pen E-PM2 more than the Canon. Though it was a Panasonic that won top honours in that test, I was intrigued enough by the Olympus option to have a further look. Unlike the E-PM2 and the EOS M, the E-PL5 does have a mode dial on its top plate and an extra grip, so it got my vote even if it meant paying a little extra for it. Olympus Pen models have attracted my attention before now because of sale prices, but this investment goes beyond that opportunism.

The E-PL5 comes in three colours: black, silver and white. Though I have a tendency to go for black when buying cameras, it was the silver option that took my fancy this time around for the sake of a spot of variety. The body itself is a very compact affair so it is the lens that takes up the most of the bulk. The standard 14-42 mm zoom ensures that this is not a camera for a shirt pocket and I got a black Lowepro Apex 100 AW case for it; the case fits snugly around the camera, so much so that I was left wondering if I should have gone for a bigger one but it’s been working out fine anyway. The other accessory that I added was a 37 mm Hoya HMC UV filter so that the lens doesn’t get too knocked about while I have the camera with me on an outing of one sort or another, especially when its plastic construction protrudes a lot further than I was expecting and doesn’t retract fully into its housing like some Sigma lenses that I use.

When I first gave the camera a test run, I had to work out how best to hold it. After all, the powered zoom and autofocus on my Canon PowerShot G11 made that camera more intuitive to hold, and it has been similar for any SLR that I have used. Having to work a zoom lens while holding a dinky body was fiddly at first until I worked out how to use my right thumb to keep the body steady (the thumb grip on the back of the camera is curved to hold a thumb in a vertical position) while the left hand adjusted the lens freely. Having an electronic viewfinder instead of using the screen would have made life a little easier, but they are not cheap and I had already spent enough money.

The next task after working out how to hold the camera was to acclimatise myself to its exposure characteristics. In my experience so far, it appears to err on the side of overexposure. Because I had set it to store images as raw (ORF) files, this could be sorted out later, but I prefer to have a greater sense of control at the photo capture stage. So far, I have not found a spot or partial metering button like the one that I would have on an SLR or my G11. That has meant either using exposure compensation alongside my preferred choice of aperture priority mode or going with fully manual exposure. Other modes are available, and they should be familiar to any SLR user (shutter priority, program, automatic, etc.). Currently, I am using bracketing while finding my feet, having set the ISO to 400, increased the brightness of the screen and added histograms to the playback views. With my hold on the camera growing more secure, I have been using the dial to change exposure settings such as aperture (f/16 remains a favourite of mine in spite of what others may think, given the size of a Micro Four Thirds sensor) and compensation while keeping the scene exactly the same, to see how the results respond to each change.

While I am still finding my feet, I am seeing some pleasing results so far that encourage me to keep going; some remind me of my Pentax K10D. The E-PL5 certainly is slower to use than the G11, but that often can be a good thing when it comes to photography. That it forces a little relaxation in this often hectic world is another advantage. The G11 is having a quieter time at the moment, and any episodes of sunshine offer useful opportunities for further experimentation and acclimatisation too. So far, my entry into the world of compact system cameras has revealed them to be of a very different form to compact fixed-lens cameras or SLRs. Neither is truly replaced; instead, another type of camera has emerged.

Moves to Hugo

30th November 2022

What amazes me is how things can become more complicated over time. As long as you knew HTML, CSS and JavaScript, building a website was not especially onerous, provided that web browsers played ball with it. Since then, things have become easier to use but more complex at the same time. One example is WordPress: in the early days, themes were much simpler than they are now. The web also has become more insecure over time, and that adds to the complexity as well. It sometimes feels as if there is a choice to be made between ease of use and simplicity.

It is against that background that I reassessed the technology that I was using on my public transport and Irish history websites. The former used WordPress, while the latter used Drupal. The irony was that the simpler website was using the more complex platform, so the act of going simpler probably was not before time. Alternatives to WordPress were being surveyed for the first of the pair, but none had quite the flexibility, pervasiveness and ease of use that WordPress offers.

There is another approach that has been gaining notice recently. One part of this is the use of Markdown for web publishing. This is a simple and distraction-free plain text format that can be transformed into something more readable. It sees usage in blogs hosted on GitHub, but also facilitates the generation of static websites. The clutter is absent for those who have no need of the Gutenberg Editor on WordPress.

With the content written in Markdown, it can be fed to a static website generator like Hugo. Using defined templates and fixed assets like CSS together with images and other static files, it can slot the content into HTML files very speedily since it is written in the Go programming language. Once you get acclimatised, there are no folder structures that cannot be used, so you get full flexibility in how you build out your website. Sitemaps and RSS feeds can be built at the same time, both using the same input as the HTML files.
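Hugo does all of this itself, so nothing like the following is needed in practice. Purely to illustrate the idea of producing several outputs from one set of inputs, here is a minimal Python sketch that slots hypothetical page records into a layout and builds a sitemap from the same list. The page structure, the base URL and the layout string are assumptions for illustration (Hugo uses Go templates, not Python’s `string.Template`), while the sitemap uses the sitemaps.org 0.9 namespace.

```python
from string import Template
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# A hypothetical layout; the body is assumed to be HTML already.
LAYOUT = Template("<html><head><title>$title</title></head><body>$body</body></html>")


def build_site(pages, base_url):
    """Render each page into the layout and build a sitemap from the same input.

    pages is a list of dicts with 'slug', 'title' and 'body' keys (an assumed
    structure). Returns ({output path: html}, sitemap XML string).
    """
    html_files = {}
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        # One HTML file per page, in a folder named after its slug.
        path = f"{page['slug']}/index.html"
        html_files[path] = LAYOUT.substitute(title=page["title"], body=page["body"])
        # The same record also yields the page's sitemap entry.
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = f"{base_url.rstrip('/')}/{page['slug']}/"
    return html_files, ET.tostring(urlset, encoding="unicode")
```

Generating both outputs in the same loop is the point of the exercise: the HTML and the sitemap cannot drift apart because they come from one list.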

In a nutshell, it automates what once needed manual effort using a code editor or a visual web page editor. The use of HTML snippets and layouts means that there is no need to hand-code content, as there was at the start of the web. It also helps that Bootstrap can be built in using Node, which gives a basis for any styling. Then, SCSS can take care of things, giving even more automation.

Given that there is no database involved in any of this, the required information has to be stored somewhere, and neither the Markdown content nor the layout files contain all that is needed. The main site configuration is defined in a single TOML file, and you can have one of these for every publishing destination; I have development and production servers, which makes this a very handy feature. Otherwise, every Markdown file needs a YAML header (front matter) where the title, template references, publishing status and other similar information get defined. The layouts then are linked to their components, and control logic and other advanced functionality can be added too.
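To make the split between front matter and content a little more concrete, here is a deliberately simplified Python sketch that separates a YAML header from the Markdown body beneath it. It only understands flat `key: value` lines between `---` delimiters, so a real YAML parser would be needed for lists or nested values, and the fields in the usage example (`title`, `layout`, `draft`) are illustrative choices rather than a copy of any real site’s headers.

```python
def split_front_matter(text):
    """Split a Markdown document into (front matter dict, body).

    Only flat 'key: value' lines between two '---' delimiters are handled;
    this illustrates the idea and is not a full YAML parser.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text  # no front matter present
    try:
        end = next(i for i in range(1, len(lines)) if lines[i].strip() == "---")
    except StopIteration:
        raise ValueError("front matter is not closed with '---'") from None
    meta = {}
    for line in lines[1:end]:
        if not line.strip() or line.lstrip().startswith("#"):
            continue  # skip blank lines and YAML comments
        key, _, value = line.partition(":")
        value = value.strip().strip('"').strip("'")
        # Interpret YAML-style booleans so that 'draft: true' becomes True.
        if value.lower() in ("true", "false"):
            value = value.lower() == "true"
        meta[key.strip()] = value
    body = "\n".join(lines[end + 1:])
    return meta, body
```

For example, a file starting with `---`, `title: "Moves to Hugo"`, `draft: false` and a closing `---` yields the metadata as a dictionary and the remaining lines as the Markdown body to be rendered into the layout.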

Because static files are being created, it does mean that site search, commenting and contact pages cannot work as they would on a dynamic web platform. Often, external services are plugged in using JavaScript. One that I use for contact forms is Getform.io. Then, Zapier has had its uses in taking the RSS feed and tweeting site updates on Twitter when new content gets added. Though I made different choices, Disqus can be used for comments and Algolia for site searching. Generally, though, you can find yourself needing to pay, particularly if you need to remove advertising or gain advanced features.

Some comments service providers offer open source self-hosted options, but I found these difficult to set up and ended up not offering commenting at all. That was after I tried out Cactus Comments only to find that it was not discriminating between pages, so it showed the same comments everywhere. There are numerous alternatives like Remark42, Hyvor Talk, Commento, FastComments, Utterances, Isso, Mouthful, Muut and HyperComments but trying them all out was too time-consuming for what commenting was worth to me. It also explains why some static websites even send readers to Twitter if they have something to say, though I have not followed this way of working.

For searching, I added a JavaScript/JSON self-hosted component to the transport website, and it works well. However, it adds to the size of what a browser needs to download. That is not a major issue for desktop browsers, but on mobile browsers it has a sizeable effect. Testing with PageSpeed and Lighthouse highlighted this, though I have left things as they are, since the solution works well in any case.
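Since the search component is not named here, the following is only a sketch of the general technique behind self-hosted JSON search, written in Python for illustration. A build step serialises page titles, URLs and summaries into a JSON file, and client-side code (JavaScript in practice) downloads it and filters it; the record fields, the `max_chars` cap and the plain substring match are all assumptions. It also shows where the download cost comes from: the whole index is fetched by every visitor, so it grows with the site.

```python
import json


def build_search_index(pages, max_chars=200):
    """Serialise page records into the JSON that a client-side search would fetch.

    Each record keeps the title, URL and a truncated summary; max_chars caps
    the text stored per page, since every visitor downloads the whole file.
    """
    records = [
        {"title": p["title"], "url": p["url"], "summary": p["text"][:max_chars]}
        for p in pages
    ]
    return json.dumps(records, ensure_ascii=False)


def search(index_json, query):
    """Case-insensitive substring search over the index, as browser code might do."""
    query = query.lower()
    return [
        r["url"]
        for r in json.loads(index_json)
        if query in r["title"].lower() or query in r["summary"].lower()
    ]
```

Lowering `max_chars` is one way of trading search coverage for a smaller download on mobile connections.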

One thing that I have yet to work out is how to edit or add content while away from home. Editing files using an SSH connection is as much a possibility as setting up a Hugo publishing setup on a laptop. After that, there is the question of using a tablet or phone, since content management systems make everything web based. These are points that I have yet to explore.

As is natural with a code-based solution, there is a learning curve with Hugo. Reading a book provided some orientation, and looking on the web resolved many conundrums. There is good documentation on the project website, while forum discussions turn up on many a web search. Following any research, there was next to nothing that could not be done in some way.

Migration of content takes some forethought and took quite a bit of time, though there was an opportunity to carry out some housekeeping as well. The history website was small, so copying and pasting sufficed. For the transport website, I used Python to convert what was in the database into Markdown files before refining the result. That provided some automation, but left a lot of work to be done afterwards.
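The conversion script itself is not shown, and its details would depend on the source database, so what follows is a minimal Python sketch under stated assumptions: a hypothetical `posts` table with `title`, `slug`, `body` and `published` columns, read here through the standard `sqlite3` module (a WordPress database would normally be MySQL or MariaDB with a different schema). Each row becomes a Markdown file with a small YAML header, and unpublished rows are marked as drafts. Any HTML in the body is passed through untouched, which is exactly the sort of thing that would need refining afterwards.

```python
import os
import sqlite3


def export_posts(conn, out_dir):
    """Write each row of a hypothetical 'posts' table to <slug>.md with YAML front matter."""
    os.makedirs(out_dir, exist_ok=True)
    written = []
    rows = conn.execute("SELECT title, slug, body, published FROM posts ORDER BY slug")
    for title, slug, body, published in rows:
        # Escape backslashes and double quotes so the title stays a valid YAML string.
        safe_title = title.replace("\\", "\\\\").replace('"', '\\"')
        front_matter = (
            "---\n"
            f'title: "{safe_title}"\n'
            f"draft: {'false' if published else 'true'}\n"
            "---\n"
        )
        path = os.path.join(out_dir, f"{slug}.md")
        with open(path, "w", encoding="utf-8") as handle:
            handle.write(front_matter + body.strip() + "\n")
        written.append(path)
    return written
```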

The results were satisfactory, and I like the associated simplicity and efficiency. That Hugo works so fast means that it can handle large websites, so it is scalable. The new Markdown method for content production is not problematical so far apart from the need to make it more portable, and it helps that I found a setup that works for me. This also avoids any potential dealbreakers that continued development of publishing platforms like WordPress or Drupal could bring. For the former, I hope to remain with the Classic Editor indefinitely, but now have another option in case things go too far.

A penchant for strange decisions?

14th June 2007

WordPress.com has retired its Feed Stats feature. While there might have been problems with it for some, I do find it a strange decision not to spend some time fixing it. After all, given the existence of Google Reader and its kind, I wouldn’t be surprised to learn that more people read blogs with RSS readers than by visiting the sites themselves. In fact, I peruse blogs more often with Google Reader than by visiting their websites. It’s enough to make me wonder if I could use FeedBurner with this blog.

Following on from this, I am beginning to wonder if Automattic, the company behind WordPress.com, is a quirky one that makes decisions that its customers question. After all, they did remove the post preview functionality from blog post editing screens, and that has generated a good deal of comment. On self-hosted WordPress, you can add a plug-in to correct this, but that option is not open to WordPress.com users. The answer that I got to a theme change request earlier this year adds to the impression, as does seeing a company whose staff apparently work from home all over the world.

Automattic seems an unconventional beast all right; could that lead to its undoing? It is king of the hill in the blogging world for now, but there is nothing to say that will last forever.

CSS Control of Text Wrapping

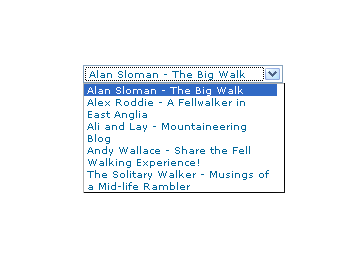

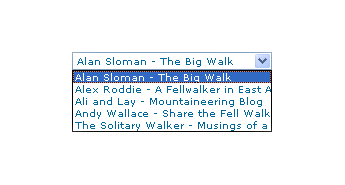

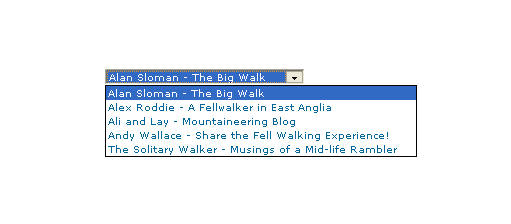

11th September 2007

I recently spotted a request for a drop-down list like the one shown in the screenshots. I managed to create it using the following CSS, but it only worked in Firefox, so I couldn’t suggest it to the requester.

```css
form select, form select option {width: 185px; white-space: normal;}
form select {height: 16px; width: 200px; white-space: normal;}
form {margin: 300px auto 0 auto; width: 300px;}
```

Here’s how it looks in Firefox 2:

And in IE6:

And in Opera 9:

It would be nice if the white-space property gave the same result in all three, but hey ho… As it happens, the W3C is working on other possible ways of controlling text wrapping in (X)HTML elements, but that’s for the future, and I’ll be expecting it when I see it.

For menus with wrapped entries, using DHTML menus and DOM scripting seems the best course for now. I suppose that you could always make the entries shorter which is exactly what I tend to do; I am pragmatic like that. Nevertheless, there’s never any harm in attempting to push the boundaries. You just have to come away from the cutting edge at the first sign of bleeding…

Of course, if anyone has other ideas, please let me know.

Dean’s FCKEditor for WordPress plugin, a wish list

8th September 2008

I must admit that I prefer FCKEditor to what comes as standard with WordPress, and the FCKEditor for WordPress plugin has been catering for that preference for a while now. However, its most recent release dates from last April, and its integration with WordPress 2.6.x has been leaving a lot to be desired. In that vein, I have decided to collect a few of its shortcomings here:

- Automatic saves: the idea behind this feature of WordPress is that you aren’t hitting the save button that often at all. In fact, given that hitting save creates a revision and an extra record in your database, it really isn’t something that you should be doing very often anyway. Unless you don’t mind a bloated database, it’s probably best to avoid the habit of saving every few minutes like I do when using Word.

- Word count: this doesn’t update unless a revision is saved, whereas it should update periodically in the same way as automatic saves.

- Insertion of media such as images: this is just broken and it takes away the possibility of having galleries and captions without manual work.

What I have above are the major inconsistencies, but there have always been annoyances like the adding of unwanted entities all over the place, probably a habit of FCKEditor itself anyway. Nevertheless, it’s the integration work that really shows the lack of attention. Maybe it’s time to move Dean Lee’s labour of love over to a fully community-maintained course of development. I know that it’s hard to see your “baby” leave you and take flight, but I am inclined to think that it’s the best way forward when you consider how rapidly WordPress has been changing over the last year. Some moves have been made towards this, but they really do need to go further.

GNOME 3 in Fedora 15: A Case of Acclimatisation and Configuration

29th May 2011

When I gave the beta version of the now released Fedora 15 a try, GNOME 3 left me thinking that it was an even more dramatic and less desirable change than Ubuntu’s Unity desktop interface. In fact, I was left with serious questions about its actual usability, even for someone like me. It all felt as if everything was one click further away, and thoughts of what this could mean for anyone seriously afflicted by RSI started to surface in my mind, especially with big screens like my 24″ Iiyama being commonplace these days. Another missing item was somewhere on the desktop interface for shutting down or restarting a PC; it seemed to be a case of first logging off and then shutting down from the login screen. This was yet another case of GNOME 3, with its GNOME Shell, adding to the number of steps needed to do something compared with GNOME 2.

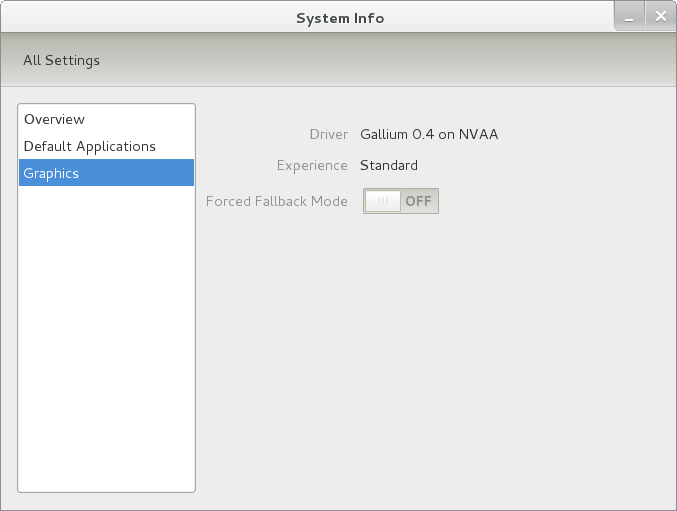

After that less than positive experience with a Live CD, you’d be forgiven for thinking that I’d be giving the GNOME edition of Fedora 15 a wide berth, with the LXDE one chosen in its place. Another approach would have been to turn off GNOME Shell altogether by forcing the fallback mode to run all the time. The way to do this is to start up the System Settings application and click on the System Info icon. Once in there, click on Graphics and turn on the Forced Fallback Mode option. With that done, closing down the application, logging off and then back on again will gain you an environment not dissimilar to the GNOME 2 of Fedora 14 and its forebears.

Even after considering the above easy way to get away from and maybe even avoid the world of GNOME Shell, I still decided to give it another go to see if I could make it work in a way that was less alien to me. After looking at the handy Quickstart guide, I ventured into the world of GNOME Shell extensions and very useful these have come to be too. The first of these that I added was the Alternate Status Menu and I ran the following command to do so:

```shell
yum install gnome-shell-extensions-alternative-status-menu
```

The result was that the “me” menu gained the ever useful “Power Off…” entry that I was seeking once I refreshed the desktop by running the command r in the command entry box produced by the ALT + F2 keyboard combination. Next up was the Place Menu and the command used to add that is:

```shell
yum install gnome-shell-extensions-place-menu
```

Again, refreshing the desktop as described for the Alternate Status Menu added the new menu to the (top) panel. Not having an application dock on screen all the time was the next irritation to be obliterated, and a dock helps to get around the lack of a workspace switcher for now too. The GNOME Shell approach to virtual desktops is to have a dynamic number of workspaces, with there always being one more than you are using. It’s an interesting way of working that doesn’t perturb more pragmatic users like me, but those accustomed to tying applications to particular workspaces aren’t so impressed by the change. The other change to workspace handling is that the keyboard shortcuts have changed to CTRL-ALT-[Up Arrow] and CTRL-ALT-[Down Arrow] from CTRL-ALT-[Left Arrow] and CTRL-ALT-[Right Arrow].

To add that application dock, I issued the command below and refreshed the desktop to get it showing. Though it stops application windows becoming fully maximised on the screen, that’s not a problem with my widescreen monitor. In fact, it even helps to switch between workspaces using the keyboard because that doesn’t seem to work when you have fully maximised windows.

```shell
yum install gnome-shell-extensions-dock
```

After adding the application dock, I stopped adding extensions, though there are more available, such as Alternate Tab Behaviour (restores the ALT-TAB behaviour of GNOME 2), Auto-Move Windows, Drive Menu, Native Window Placement, Theme Selector, User Theme and Window Navigator. Here are the YUM commands for each of these in turn:

```shell
yum install gnome-shell-extensions-alternate-tab
yum install gnome-shell-extensions-auto-move-windows
yum install gnome-shell-extensions-drive-menu
yum install gnome-shell-extensions-native-window-placement
yum install gnome-shell-extensions-theme-selector
yum install gnome-shell-extensions-user-theme
yum install gnome-shell-extensions-windowsNavigator
```

I hope that more of these extensions will appear over time, and Ranjith Siji seems to have a good round-up of what is available. Other than these, I also have added the dconf Editor and the GNOME Tweak Tool, with the latter restoring the minimise and maximise buttons to window title bars for me. As ever, YUM was called to add them using the following commands:

```shell
yum install dconf-editor
yum install gnome-tweak-tool
```

There are other things that can be done with these but I haven’t explored them yet. All YUM commands were run as root and the ones that I used certainly have helped me to make myself at home in what once was a very unfamiliar desktop environment for me. In fact, I am beginning to like what has been done with GNOME 3 though I have doubts as to how attractive it would be to a user coming to Linux from the world of Windows. While everything is solidly crafted, the fact that I needed to make some customisations of my own raises questions about how suitable the default GNOME set-up in Fedora is for a new user though Fedora probably isn’t intended for that user group anyway. Things get more interesting when you consider distros favouring new and less technical users, both of whom need to be served anyway.

Ubuntu has gone its own way with Unity and, having spent time with GNOME 3, I can see why they might have done that. Unity does put a lot more near at hand on the desktop than is the case with GNOME 3, where you find yourself going to the Activities window a lot, either by using your mouse or by keystrokes like the “super” (or Windows) key or ALT-F1. Even so, there are common touches like searching for an application as you would search for a web page in Firefox. In retrospect, it is a pity to see the divergence when something from both camps might have made for a better user experience. Nevertheless, I am reaching the conclusion that the Unity approach feels like a compromise and that GNOME feels that little bit more polished. That said, an extra extension or two to put more items nearer to hand in GNOME Shell would be desirable. If I hadn’t found a haven like Linux Mint, where big interface changes are avoided, maybe going with the new GNOME desktop mightn’t have been a bad thing to do after all.

Changing the working directory in a SAS session

12th August 2014

It appears that PROC SGPLOT and other statistical graphics procedures create image files even if you are creating RTF or PDF files. By default, these are PNG files, but there are other possibilities. When working with PC SAS, I have seen them written to the current working directory, and that could clutter up your folder structure, especially if they are unwanted.

Being unable to track down a setting that controls this behaviour, I resolved to find a way around it by sending the files to the SAS work directory so they are removed when a SAS session is ended. One option is to set the session’s working directory to be the SAS work one and that can be done in SAS code without needing to use the user interface. As a result, you get some automation.

The method is indirect, though, in that you need to use an X statement to tell the operating system to change folder for you. Here is the line of code that I have used:

```sas
x "cd %sysfunc(pathname(work))";
```

The X statement passes commands to the operating system’s command line, and they are enclosed in quotes. %sysfunc then is a macro function that allows certain data step functions or call routines, as well as some SCL functions, to be executed. An example of the latter is pathname, which resolves library or file references; here, it interrogates the location of the SAS work library so that it can be passed to the operating system’s cd (change directory) command for processing. This method works on Windows and UNIX, so Linux should be covered too. It offers a certain amount of automation: because the folder name of the SAS work library changes from session to session, you would otherwise have to specify its location afresh every time.
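The underlying technique, pointing the working directory at a scratch location that disappears when the session ends, is not specific to SAS. As an analogue only (this is Python, not SAS code), the sketch below uses `tempfile.TemporaryDirectory` in the role of the WORK library and `os.chdir` in the role of the `cd` command issued through the X statement; the `scratch_working_directory` name is hypothetical.

```python
import os
import tempfile
from contextlib import contextmanager


@contextmanager
def scratch_working_directory():
    """Temporarily make a throwaway directory the working directory.

    Files written with relative paths inside the block land in the scratch
    directory and are deleted with it, much as SAS removes WORK at the end
    of a session.
    """
    original = os.getcwd()
    with tempfile.TemporaryDirectory() as scratch:
        os.chdir(scratch)
        try:
            yield scratch
        finally:
            # Change back before the directory is removed; Windows cannot
            # delete a directory that is some process's working directory.
            os.chdir(original)
```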

Of course, if someone were to tell me of another way to declare the location of the generated PNG files that works with RTF and PDF ODS destinations, then I would be all ears. Direct output without any image file creation would be better still. Until then, though, the above will do nicely.

Rendering Markdown into HTML using PHP

3rd December 2022

One of the good things about using virtual private servers for hosting websites in preference to shared hosting or a web application service like WordPress.com or Tumblr is that you get added control and flexibility. There was a time when HTML, CSS and client-side scripting were all that was available from the shared hosting providers that I was using. Then, static websites were my lot until it became possible to use Perl for server-side scripting. PHP predominates now, but Python or Ruby cannot be discounted either.

Being able to install whatever you want is a bonus as well, though it means that you also are responsible for the security of the containers that you use. There will be infrastructure security, but that of your own machine will be your own concern. Added power always means added responsibility, as many might say.

The reason that these thoughts emerge here is that getting PHP to render Markdown as HTML needs Composer to be installed. Without that, you cannot use the CommonMark package to do the required back-work. All the commands that you see here will work on Ubuntu 22.04. First, you need to download the Composer installer, and executing the following command will accomplish this:

```shell
curl https://getcomposer.org/installer -o /tmp/composer-setup.php
```

Before the installation, it does no harm to ensure that all is well with the script. That means that capturing the signature for the script using the following command is wise:

```shell
HASH="$(curl https://composer.github.io/installer.sig)"
```

Once you have the script signature, then you can check its integrity using this command:

```shell
php -r "if (hash_file('sha384', '/tmp/composer-setup.php') === '$HASH') { echo 'Installer verified'; } else { echo 'Installer corrupt'; unlink('/tmp/composer-setup.php'); } echo PHP_EOL;"
```
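If you would rather not embed the check in a `php -r` one-liner, the same SHA-384 comparison can be sketched in Python with `hashlib`. This is an alternative illustration, not part of Composer’s documented procedure; the function name is hypothetical, and you would pass it the path of the downloaded installer and the hash captured from installer.sig.

```python
import hashlib


def verify_sha384(path, expected_hex):
    """Return True if the SHA-384 digest of the file at path matches expected_hex."""
    digest = hashlib.sha384()
    with open(path, "rb") as handle:
        # Read in chunks so that large files need not fit in memory at once.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    # Strip any trailing newline from the .sig download and ignore case.
    return digest.hexdigest() == expected_hex.strip().lower()
```

Called as `verify_sha384("/tmp/composer-setup.php", expected_hash)`, it returns True only when the digests match, in which case the installation can proceed.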

The result that you want is “Installer verified”, in which case you can execute the installation command; if not, you have some investigating to do.

```shell
sudo php /tmp/composer-setup.php --install-dir=/usr/local/bin --filename=composer
```

With Composer installed, the next step is to run the following command in the folder where your web server expects files to be stored (its document root). That is important because the PHP script looks for the package there.

```shell
composer require league/commonmark
```

Then, you can use it in a PHP script like so:

```php
define("ROOT_LOC", $_SERVER['DOCUMENT_ROOT']);
require ROOT_LOC . '/vendor/autoload.php';
use League\CommonMark\CommonMarkConverter;
$converter = new CommonMarkConverter();
echo $converter->convertToHtml(file_get_contents(ROOT_LOC . '<location of markdown file>'));
```

The first line stores the absolute location of your web server’s document root in a constant, which is then used when defining the locations of the autoload script and the required Markdown file. The third line then imports the CommonMark converter class, while the fourth sets up a new object for the desired transformation. The last line converts the input to HTML and outputs the result.

If you need to render more than one Markdown file, then repeating the last line from the preceding block with a different file location is all you need to do. The converter object persists and can be reused like any other variable, without needing to be recreated every time.

The idea of building a website using PHP to render Markdown has come to mind, but I will leave it at custom web pages for now. If an opportunity comes, then I can examine the idea again. Before, I had to edit HTML, but Markdown is friendlier to edit, so that is a small advance for now.