30th November 2022 What amazes me is how things can become more complicated over time. As long as you knew HTML, CSS and JavaScript, building a website was not as onerous as long as web browsers played ball with it. Since then, things have got easier to use but more complex at the same time. One example is WordPress: in the early days, themes were much simpler than they are now. The web also has got more insecure over time, and that adds to complexity as well. It sometimes feels as if there is a choice to make between ease of use and simplicity.

It is against that background that I reassessed the technology that I was using on my public transport and Irish history websites. The former used WordPress, while the latter used Drupal. The irony was that the simpler website was using the more complex platform, so the act of going simpler probably was not before time. Alternatives to WordPress were being surveyed for the first of the pair, but none had quite the flexibility, pervasiveness and ease of use that WordPress offers.

There is another approach that has been gaining notice recently. One part of this is the use of Markdown for web publishing. This is a simple and distraction-free plain text format that can be transformed into something more readable. It sees usage in blogs hosted on GitHub, but also facilitates the generation of static websites. The clutter is absent for those who have no need of the Gutenberg Editor on WordPress.

With the content written in Markdown, it can be fed to a static website generator like Hugo. Using defined templates and fixed assets like CSS together with images and other static files, it can slot the content into HTML files very speedily since it is written in the Go programming language. Once you get acclimatised, there are no folder structures that cannot be used, so you get full flexibility in how you build out your website. Sitemaps and RSS feeds can be built at the same time, both using the same input as the HTML files.

In a nutshell, it automates what once needed manual effort used a code editor or a visual web page editor. The use of HTML snippets and layouts means that there is no necessity for hand-coding content, like there was at the start of the web. It also helps that Bootstrap can be built in using Node, so that gives a basis for any styling. Then, SCSS can take care of things, giving even more automation.

Given that there is no database involved in any of this, the required information has to be stored somewhere, and neither the Markdown content nor the layout files contain all that is needed. The main site configuration is defined in a single TOML file, and you can have a single one of these for every publishing destination; I have development and production servers, which makes this a very handy feature. Otherwise, every Markdown file needs a YAML header where titles, template references, publishing status and other similar information gets defined. The layouts then are linked to their components, and control logic and other advanced functionality can be added too.

Because static files are being created, it does mean that site searching and commenting, or contact pages cannot work like they would on a dynamic web platform. Often, external services are plugged in using JavaScript. One that I use for contact forms is Getform.io. Then, Zapier has had its uses in using the RSS feed to tweet site updates on Twitter when new content gets added. Though I made different choices, Disqus can be used for comments and Algolia for site searching. Generally, though, you can find yourself needing to pay, particularly if you need to remove advertising or gain advanced features.

Some comments service providers offer open source self-hosted options, but I found these difficult to set up and ended up not offering commenting at all. That was after I tried out Cactus Comments only to find that it was not discriminating between pages, so it showed the same comments everywhere. There are numerous alternatives like Remark42, Hyvor Talk, Commento, FastComments, Utterances, Isso, Mouthful, Muut and HyperComments but trying them all out was too time-consuming for what commenting was worth to me. It also explains why some static websites even send readers to Twitter if they have something to say, though I have not followed this way of working.

For searching, I added a JavaScript/JSON self-hosted component to the transport website, and it works well. However, it adds to the size of what a browser needs to download. That is not a major issue for desktop browsers, but the situation with mobile browsers is such that it has a sizeable effect. Testing with PageSpeed and Lighthouse highlighted this, even if I left things as they are. The solution works well in any case.

One thing that I have yet to work out is how to edit or add content while away from home. Editing files using an SSH connection is as much a possibility as setting up a Hugo publishing setup on a laptop. After that, there is the question of using a tablet or phone, since content management systems make everything web based. These are points that I have yet to explore.

As is natural with a code-based solution, there is a learning curve with Hugo. Reading a book provided some orientation, and looking on the web resolved many conundrums. There is good documentation on the project website, while forum discussions turn up on many a web search. Following any research, there was next to nothing that could not be done in some way.

Migration of content takes some forethought and took quite a bit of time, though there was an opportunity to carry some housekeeping as well. The history website was small, so copying and pasting sufficed. For the transport website, I used Python to convert what was on the database into Markdown files before refining the result. That provided some automation, but left a lot of work to be done afterwards.

The results were satisfactory, and I like the associated simplicity and efficiency. That Hugo works so fast means that it can handle large websites, so it is scalable. The new Markdown method for content production is not problematical so far apart from the need to make it more portable, and it helps that I found a setup that works for me. This also avoids any potential dealbreakers that continued development of publishing platforms like WordPress or Drupal could bring. For the former, I hope to remain with the Classic Editor indefinitely, but now have another option in case things go too far.

5th November 2022 Since I created a bespoke theme for this site, I have been tweaking things as I go. The basis came from the WordPress Theme Developer Handbook, which gave me a simpler starting point shorn of all sorts of complexity that is encountered with other themes. Naturally, this means that there are little rough edges that need tidying over time.

One of these is dealing with errors on the site like when content is not found. This could be a wrong address or a search query that finds no matching posts. When that happens, there is a redirection to the home page using some simple JavaScript within the loop fallback code enclosed within script start and end tags (including the whole code triggers the action from this post so it cannot be shown here):

location.href="[blog home page ]";

The bloginfo function can be used with the url keyword to find the home page so this does not get hard coded. For now, this works so long as JavaScript is enabled but a more robust approach may come in time. It is not possible to do a PHP redirect because of the nature of HTTP: when headers have been sent, it is not possible to do server redirects. At this stage, things become client side so using JavaScript is one way to go instead.

26th December 2019 Recently, I needed to inactivate blocks of code in a Perl script while doing some testing. This is something that I often do in other computing languages so I sought the same in Perl. To do that, I need to use the POD methodology. This meant enclosing the code as follows.

=start

<< Code to be inactivated by inclusion in a comment >>

=cut

The =start line could use any word after the equality sign but it seems that =cut is needed to close the multi-line comment. If this was actual programming documentation, then the comment block should include some meaningful text for use with perldoc but that was not a concern here since the commenting statements would be removed afterwards anyway and it is good practice not to leave commented code in a production script or program to avoid any later confusion.

In my case, this facility allowed me to isolate the code that I needed to alter and test before putting everything back as needed. It also saved time since I did not need to individually comment out every executable line because multiple lines could be inactivated at a time.

23rd April 2018 On another website, I have had a contact form but it was missing some functionality. For instance, it stored the input in files on a web server instead of emailing them. That was fixed more easily than expected using the PHP mail function. Even so, it remains useful to survey corresponding documentation on the w3schools website.

The other changes affected the way the form looked to a visitor. There was a reset button and that was removed on finding that such things are out of favour these days. Thinking again, there hardly was any need for it any way.

Newer additions that came with HTML5 had their place too. Including user hints using the placeholder attribute should add some user friendliness although I have avoided experimenting with browser-powered input validation for now. Use of the required attribute has its uses for tell a visitor that they have forgotten something but I need to check how that is handled in CSS more thoroughly before I go with that since there are new :required, :optional, :valid and :invalid pseudoclasses that can be used to help.

It seems that there is much more to learn about setting up forms since I last checked. This is perhaps a hint that a few books need reading as part of catching with how things are done these days. There also is something new to learn.

15th September 2011 By default in the DMS, Base SAS opens datasets from its Explorer using VIEWTABLE and with variable labels in the column headings and not variable names. Because I have been fortunate to use systems with SAS/FSP both installed and licensed, I have taken to using FSVIEW for browsing SAS datasets as a workaround and, though the interface may look old to some, it proves to be a very flexible tool that still has a few things to teach newer ones. With SAS Enterprise Guide, the dataset viewing functionality is different to both VIEWTABLE and FSVIEW but I have been to make it work for me. The SAS EG dataset viewing tool may appear like the former of these but it has a few tricks to teach its forbear.

Now that I find myself working again with the traditional SAS DMS interface and without SAS/FSP, I decided to see if there was a way to get VIEWTABLE to display variable names instead of variable labels by default. As it happened, the answer was found in an internet forum discussion. From the SAS command line, you can achieve the result issuing a command like the following:

VT SASHELP.VCOLUMN COLHEADING=NAMES

VT is the VIEWTABLE shortcut but it is the COLHEADING=NAMES option on the line that gets variable names shown in column headings. Taking it further, you can set this as the default setting for datasets opened using a mouse from Explorer panes using the following procedure:

- Click in or on the Explorer pane to highlight the the Explorer window.

- Select Tools->Options->Explorer in the menus.

- Select the Members tab.

- Double click on the TABLE icon.

- Double click on the &Open action.

- Set the Action command to: VIEWTABLE %8b.’%s’.DATA COLHEADING=NAMES.

- Click on the Set Default button.

- Save changes and close the Explorer Options window.

Because the DMS looks similar across versions 8.0 through to 9.2, the above instructions should be relevant to all of those. While I have yet to get the opportunity to use SAS 9.3, I would be surprised to find that the traditional SAS interface has changed there too, even though much else has changed about SAS. In fact, the latest version of SAS has brought quite a few new interesting features for programmers so it seems that you can do more through a familiar interface, not entirely a bad thing. It looks as if this VIEWTABLE tweak could be useful for a while yet.

22nd October 2008 While you can use <br /> tags, there is another way to achieve similar results: the or non-breaking space entity. Put one of them between two words and you stop them getting separated by a line break; I have been using this in the latest design tweaks that I made to my online photo gallery. Turning this on its head, if you see two words together acting without regard to normal wrapping conventions, then you can suspect that a non-breaking space could be a cause. There might be CSS options too but their effectiveness in different browsers may limit their usefulness.

1st July 2008 Recently, I changed the engine of my online photo gallery to a speedier PHP/MySQL-based affair from its PHP/Perl/XML-powered predecessor. On the server side, all was well, but a peculiar display issue turned up in Internet Explorer (6, 7 & 8 were afflicted by this behaviour) where photo caption text on the thumbnail gallery pages was being displayed erratically.

As far as I can gather, the trigger for the behaviour was that the thumbnail block was placed within a DIV floated using CSS that touched another DIV that cleared the floating behaviour. I use a table to hold the images and their associated captions in place. Furthermore, each caption was also a hyperlink nested within a set of P tags.

The remedy was to set the CSS Display property for the affected XHTML tag to a value of “inline-block”. Within a DIV, TABLE, TR, TD, P and A tag hierarchy, finding the right tag where the CSS property in question has the desired effect took some doing. As it happened, it was the tag set, that for the hyperlink, at the bottom of the stack that needed the fix.

Of course, it’s all very fine fixing something for one browser but it’s worthless if it breaks the presentation in other browsers. In that vein, I did some testing in Opera, Firefox, Seamonkey and Safari to check if all was well and it was. There may be older browsers, like versions of IE prior to 6, where things don’t appear as intended but I get the impression from my visitor statistics that the newer variants hold sway anyway. All in all, it was a useful lesson learnt and that’s never a bad thing.

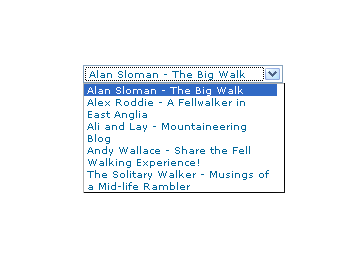

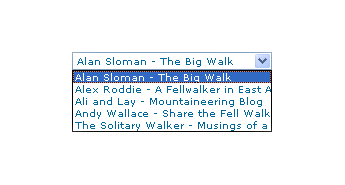

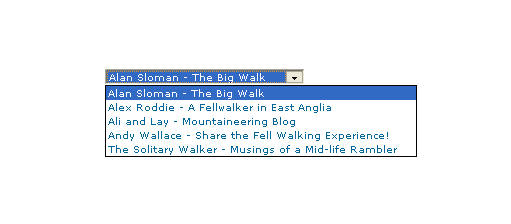

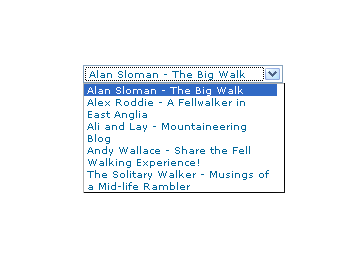

11th September 2007 I recently spotted a request for a drop down list like that which you see below. I managed to create it using the CSS but it only worked for Firefox so I couldn’t suggest it to the requester.

form select, form select option {width: 185px; white-space: normal;}

form select {height: 16px; width: 200px; white-space: normal;}

form {margin: 300px auto 0 auto; width: 300px;}

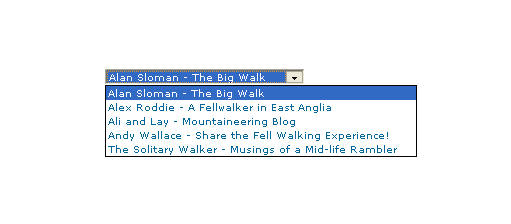

Here’s how it looks in Firefox 2:

And in IE6:

And in Opera 9:

It would be nice if the white-space attribute gave the same result in all three but hey ho… As it happens, the W3C are working up other possible ways of controlling text wrapping in (X)HTML elements but that’s for the future and I’ll be expecting it when I see it.

For menus with wrapped entries, using DHTML menus and DOM scripting seems the best course for now. I suppose that you could always make the entries shorter which is exactly what I tend to do; I am pragmatic like that. Nevertheless, there’s never any harm in attempting to push the boundaries. You just have to come away from the cutting edge at the first sign of bleeding…

Of course, if anyone had other ideas, please let me know.

12th February 2007 Over the weekend, I have been updating the theme on my other blog, HennessyBlog. It has been a task that projected me onto a learning curve with the WordPress 2.1 codebase. I have collected what I encountered so I know that it’s out there on the web for you (and I) to use and peruse. It took some digging to get to know some of what you find below. Any function used to power WordPress takes some finding so I need to find one place on the web where the code for WordPress is fully documented. The sites presenting tutorials on how to use WordPress are more often than not geared towards the non-techie rather than code cutters like myself. Then again, they might be waiting for someone to do it for them…

The changes made are as follows:

Tweaks to the interface

These are subtle with the addition of navigation controls to the sidebar and the change in location of the post metadata being the most obvious enhancements. “Decoration” with solid and dashed lines (using CSS border attributes rather than the deprecated hr tagset) and standards compliance links.

Standards compliance

Adding standards compliance links does mean that you’d better check that all is in order; it was then that I discovered that there was work to be done. There is an issue with the WordPress wpautop function (it lives in the formatting.php file) in that it sometimes doesn’t add closing tags. Finding out that it was this function that is implicated took a trip to the WordPress.org website; a good rummage in the wp-includes folder does a lot but it can’t achieve everything.

Like a lot of things in the WordPress code, the wpautop function isn’t half buried. The the_content function (see template-functions-post.php) used to output blog entries calls get_content function (also in template-functions-post.php) to extract the data from mySQL. The add_filter function (in plugin.php) associates the wpautop function and others with get_the_content function and the p tags get added to the output.

To return to the non-ideal behaviour that caused me to start out on the above quest, an example is where you have an img tag enclosed by div tags. The required substitution involves the use of regular expressions that work most of the time but get confused here. So adding a hack to the wpautop function was needed to change the code so that the p end tag got inserted. I’ll be keeping an eye out for any more scenarios like this that slip through the net and for any side effects. Otherwise, compliance is just making sure that all those img tags have their alt attributes completed.

Tweaks to navigation code

Most of my time has been spent on tweaking of the PHP code supporting the navigation. Different functions were being called in different places and I wanted to harmonise things. To do this, I created new functions in the functions.php for my theme and needed to resolve a number of issues along the way. Not least among these were regular expressions used for subsetting with the preg_match match that weren’t to my eyes Perl-compliant, as would be implied by the choice of function. I have since found that PCRE’s in PHP use a more pragmatic syntax but there remained issues with the expressions that were being used. They seemed to behave OK in their native environment but fell out of favour within the environs of my theme. Being acquainted with Perl, I went for a more familiar expression style and the issue has been resolved.

Along the way, I broke the RSS feed. This was on my off-line test blog so no one, apart from myself, that is, would have noticed. After a bit of searching, I realised that some stray white-space from the end of a PHP file (wp-config.php being a favourite culprit), after the PHP end tag in the script file as it happens, was finding its way into the feed and causing things to fall over. Feed readers don’t take too kindly to the idea of the XML declaration not making an appearance on the first line of the file. The refusal of Firefox to refresh things as it should caused some confusion until I realised that a forced refresh of the feed display was needed -- sometimes, it takes a while for an addled brain to think of these kinds of things.