Consolidation

19th November 2009

For a while, the Windows computing side of my life has been spread across far too many versions of the pervasive operating system, with the list including 2000 (desktop and server), XP, 2003 Server, Vista and 7; 9x hasn’t been part of my life for what feels like an age. At home, XP has been the mainstay for my Windows computing needs, with Vista Home Premium loaded on my Toshiba laptop. The latter variant came in for more use during that period of home computing “homelessness” and, despite a cacophony of complaints from some, it seemed to work well enough. Since the start of the year, 7 has also been in my sights, with beta and release candidate instances in virtual machines leaving me impressed enough to go popping the final version onto both the laptop and a VM on my main PC. Microsoft finally have got around to checking product keys over the net so that meant a licence purchase for each installation, though both used the same downloaded 32-bit ISO image. 7 is still doing well by me so I am beginning to wonder whether having an XP VM is becoming pointless. The reason for that train of thought is that 7 is becoming the only version that I really need for anything that takes me into the world of Windows.

Work is a different matter, with a recent move away from Windows 2000 to Vista heavily reducing my exposure to the venerable old stager (businesses usually take longer to migrate and any good IT manager usually delays any migration by a year anyway). 2000 is sufficiently outmoded by now that even my brother was considering a move to 7 for his work because of all the Office 2007 files that have been coming his way. He may be no technical user but the bad press gained by Vista hasn’t passed him by so a certain wariness is understandable. Saying that, my experiences with Vista haven’t been unpleasant: it always worked well on the laptop and the same can be said for its corporate desktop counterpart. Much of the noise centred on issues of hardware and software compatibility and that certainly is apparent at work, where I have some creases left to straighten.

With all of this general forward heaving, you might think that IE6 would be shuffling off its mortal coil by now but a recent check on visitor statistics for this website places it at about 13% share, tantalisingly close to oblivion but still too large to ignore completely. All in all, it is lingering like that earlier blight of web design, Netscape 4.x. If I was planning a big change to the site design, setting up a Win2K VM would be in order so as not to completely put off those labouring with the old curmudgeon. For smaller changes, the temptation is not to bother checking but that is questionable when XP is set to live on for a while yet. XP came with IE6 and there must be users who have never moved on from it, which is ironic with IE8 being available for XP SP2 since its original launch a while back. Where all this is leading me is towards the idea of waiting for IE6 share to decrease further before tackling any major site changes. After all, I can wait given the general downward trend in market share; there has to be a point when its awkwardness makes it no longer viable to support the thing. That would be a happy day.

Rough?

11th November 2009

Was it because Canonical and friends kept Ubuntu in such a decent state from 8.04 through to 9.04 that things went a little quiet in the blogosphere on the subject of the well-known Linux distribution? If so, 9.10 might be proving more of a talking point and you have to wonder if this is such a good thing with the appearance of Windows 7 on the scene. Looking on the bright side, 10.04 will be an LTS release so there is some chance that any rough edges that are on display now could be resolved by next April. Even so, it might have been better not to see anything so obvious at all.

In truth, Ubuntu always has had its gaps and I have seen a few of their ilk over the last two years. Of these, a few have triggered postings on here. In fact, issues with accessing the BBC iPlayer still bring a goodly number of folk to this website. That may just be a matter of grabbing RealPlayer, now helpfully available as a DEB package, from the requisite place on the web and ensuring that Ubuntu-Restricted-Extras is in place too but you have to know that in the first place. Even so, there have been unexpected behaviours like Palimpsest seeing every partition on a disk as a different drive and SIL RAID mappings being seen for hard drives that used to live in the main home PC that bit the dust earlier this year; it only happens on one of the machines that I have running Ubuntu so it may be a hardware thing, and the newly added hard drive uses none of the SIL mapping either. Perhaps more seriously (is it something that a new user should be encountering?), a misfiring variant of Brasero had me moving to K3b. Then UFRaw was sluggish in batch mode but that’s nothing that having a Debian VM won’t overcome. Rough edges like these do get you asking if 9.10 was ready for the big time while making you reluctant to recommend it to mainstream users like my brother.

The counterpoint to the above is that 9.10 includes a host of under the bonnet changes like the introduction of Ext4 hard drive formatting, Xsplash to allow the faster system loading to occur unseen and GNOME 2.28. To someone looking in from outside like me, that looks like a lot of work and might explain the ingress of the annoyances that I have seen. Add to that the fact that we are between Debian releases so things like the optimised packaging of ImageMagick or UFRaw may not be so high up the list of the things to do, especially with the more general speed optimisations that were put in place for 9.10. With 10.04 set to be an LTS release, I’d be hoping that consolidation is the order of the day over the next five or six months but it seems to be the inclusion of new features and other such progress that gets magazine reviewers giving higher ratings (Linux Format has given it a mark of 9 out of 10). With the mooted inclusion of GNOME 3 and its dramatically different interface in 10.10, they should get their fill of that. However, I’d like to see some restraint for the sake of a smooth transition from the familiar GNOME 2.x to the new. If GNOME 3 stays very like its alpha builds, then the question as to how users will take to it arises. Of course, there’s some time yet before we see GNOME 3 and, having seen how the Ubuntu developers transformed GNOME 2.28, I wouldn’t be surprised if the impact of any change could be dulled.

In summary, my few weeks with Ubuntu 9.10 as my main OS have thrown up no major roadblocks that would cause me to look at moving elsewhere; Fedora would be tempting if that situation were to arise. The irritations that I have seen are more like signs of a lack of polish and remain peripheral to day-to-day working if you discount CD/DVD burning. To be honest, there always have been roughnesses in Ubuntu but has the lack of sizeable change spoilt us? Whatever about how things feel afterwards, big changes can mean new problems to resolve and inspire blog posts describing any solutions so it’s not all bad. If that’s what Canonical wants to see, they might get it and the year ahead looks as if it is going to be an interesting one after a recent quieter period.

Evoluent Vertical Mouse 3 Configuration in Ubuntu 9.10

31st October 2009

On popping Ubuntu 9.10 onto a newly built PC, I noticed that the button mappings weren’t as I had expected them to be. The button just below the wheel no longer acted like a right mouse button on a conventional mouse and it really was throwing me. The cause was found to be in a file named evoluent-verticalmouse3.fdi that is found either in /usr/share/hal/fdi/policy/20thirdparty/ or /etc/hal/fdi/policy/.

So, to get things back as I wanted, I changed the following line:

<merge key="input.x11_options.ButtonMapping" type="string">1 2 2 4 5 6 7 3 8</merge>

to:

<merge key="input.x11_options.ButtonMapping" type="string">1 2 3 4 5 6 7 3 8</merge>
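For anyone who would rather script that one-line change than edit the file by hand, here is a minimal sketch. The function name is my own invention and it assumes that the file on disk uses plain straight quotes and the exact mapping shown above; it only prints the amended copy so that you can review it before putting it in place with superuser rights.

```shell
# Print a copy of an FDI file with the third button mapped to 3 instead of 2.
# Only lines that set input.x11_options.ButtonMapping are touched.
# (remap_evoluent_buttons is a hypothetical helper name, not part of HAL.)
remap_evoluent_buttons() {
    sed '/input\.x11_options\.ButtonMapping/ s/>1 2 2 4 5 6 7 3 8</>1 2 3 4 5 6 7 3 8</' "$1"
}

# Example use; the path is one of the two locations mentioned in this post,
# so check which one applies on your system:
#   remap_evoluent_buttons /etc/hal/fdi/policy/evoluent-verticalmouse3.fdi > /tmp/evoluent-verticalmouse3.fdi
```

Writing to a temporary file first keeps the original intact until you are happy with the result.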

If there is no sign of the file on your system, then create one named evoluent-verticalmouse3.fdi in /etc/hal/fdi/policy/ with the following content and you should be away. All that’s needed to set things to rights is to disconnect the mouse and reconnect it again in both cases.

<?xml version="1.0" encoding="ISO-8859-1"?>
<deviceinfo version="0.2">
  <device>
    <match key="info.capabilities" contains="input.mouse">
      <match key="input.product" string="Kingsis Peripherals Evoluent VerticalMouse 3">
        <merge key="input.x11_driver" type="string">evdev</merge>
        <merge key="input.x11_options.Emulate3Buttons" type="string">no</merge>
        <merge key="input.x11_options.EmulateWheelButton" type="string">0</merge>
        <merge key="input.x11_options.ZAxisMapping" type="string">4 5</merge>
        <merge key="input.x11_options.ButtonMapping" type="string">1 2 3 4 5 6 7 3 8</merge>
      </match>
    </match>
  </device>
</deviceinfo>

While I may not have appreciated the sudden change, it does show how you remap buttons on these mice and that can be no bad thing. Saying that, hardware settings can be personal things so it’s best not to go changing defaults based on one person’s preferences. It just goes to show how valuable discussions like that on Launchpad about this matter can be. For one, I am glad to know what happened and how to make things the way that I want them to be, though I realise that it may not suit everyone; that makes me reticent about asking for such things to be made the standard settings.

Still able to build PC systems

25th October 2009

This weekend has been something of a success for me on the PC hardware front. Earlier this year, a series of mishaps rendered my former main home PC unusable; it was a power failure that finished it off for good. My remedy was a rebuild using my then usual recipe of a Gigabyte motherboard, AMD CPU and Crucial memory. However, assembling the said pieces never returned the thing to life and I ended up in no man’s land for a while, dependent on my backup machine and laptop. That wouldn’t have been so bad but for the need to access data from the old behemoth’s hard drives, though an external drive housing set that in order. Nevertheless, there is something unfinished about working with machines that have a series of external drives hanging off them. That appearance of disarray was set to rights by the arrival of a bare bones system from Novatech in July with any assembly work restricted to the kitchen table. There was a certain pleasure in seeing a system come to life after my developing a fear that I had lost all of my PC building prowess.

That restoration of order still left finding out why those components bought earlier in the year didn’t work together well enough to give me a screen display on start-up. Having electronics testing equipment and the knowledge of its correct use would make any troubleshooting far easier but I haven’t got these. There is a place near to me where I could go for this but you are left wondering what might be said to a PC build gone wrong. Of course, the last thing that you want to be doing is embarking on a series of purchases that do not fix the problem, especially in the current economic climate.

One thing to suspect when all doesn’t turn out as hoped is the motherboard and, for whatever reason, I always suspect it last. It now looks as if that needs to change after I discovered that it was the Gigabyte motherboard that was at fault. Whether it was faulty from the outset or it came a cropper with a rogue power supply or carelessness with static protection is something that I’ll never know. An Asus motherboard did go rogue on me in the past and it might be that it ruined CPUs and even a hard drive before I laid it to rest. Its eventual replacement put a stop to a year of computing misfortune and kick-started my reliance on Gigabyte. That faith is under question now but the 2009 computing hardware mishap seems to be behind me; any PC rebuilds will be done on tables and motherboards will be suspected earlier when anything goes awry.

Returning to the present, my acquisition of an ASRock K10N78 and subsequent building activities have brought a new system using an AMD Phenom X4 CPU and 4 GB of memory into use. In fact, I am writing these very words using the thing. It’s all in a new TrendSonic case too (placing an elderly behemoth into retirement) and with a SATA hard drive and DVD writer. The new motherboard has onboard audio and graphics so external cards are not needed unless you are an audiophile and/or a gamer; for the record, I am neither. Those additional facilities make for easier building and fault-finding should the undesirable happen.

The new box is running the release candidate of Ubuntu 9.10 and it seems to be working without a hitch too. Earlier builds of 9.10 broke in their VirtualBox VM so you should understand the level of concern that this aroused in my mind; the last thing that you want to be doing is reinstalling an operating system because its booting capability breaks every other day. Thankfully, the RC seems to have none of these rough edges so I can upgrade the Novatech box, still my main machine and likely to remain so for now, with peace of mind when the time comes.

A new phone

7th August 2009

For someone with a more than passing interest in technology, it may come as a surprise to you to learn that mobile telephony isn’t one of my strong points at all. That’s all the more marked when you cast your eye back over the developments in mobile telephone technology in recent years. Admittedly, until I subscribed to RSS feeds from the likes of TechRadar, the computing side of the area didn’t pass my way very much at all. That act has alerted me to the now unmissable fact that mobile phones have become portable small computers, regardless of whether it is an offering from Apple or not. After the last few years, no one can say that things haven’t got really interesting.

In contrast to all the excitement, I only got my first phone in 2000 and have stuck with it since, and that was despite its scuffs and scratches along with its battery life troubles. Part of the reason for this is a certain blindness induced by having the thing on a monthly contract. As if that were not sufficient to hide away the option of buying a phone on its own, then there’s the whole pay as you go arena too. The level of choice is such that packages such as those mentioned gain more prominence and potentially stop things in their tracks but I surmounted the perceived obstacles to buy a Nokia 1661 online from the Carphone Warehouse and collect it from the nearest store. The new replacement for my old Motorola is nothing flashy. Other phones may have nice stuff like an on-board camera or web access but I went down the route of sticking with basic functionality, albeit in a modern package with a colour screen. Still, for around £35, I got something that adds niceties like an alarm clock and a radio to the more bread and butter operations like making and taking phone calls and text messaging. Pay as you go may have got me the phone for less but I didn’t need a new phone number since I planned to slot in my old SIM card anyway; incidentally, the latter operation was a doddle once I got my brain into gear.

Now that I have replaced my mobile handset like I would for my land-line phone, I am left wondering why I dallied over the task for as long as I have. It may be that the combination of massive choice and a myriad of packages that didn’t appeal to me stalled things. With an increased awareness of the technology and options like buying a SIM card on its own, I can buy with a little more confidence now. Those fancier phones may tempt but I’ll be treating them as nice to have rather than essential purchases. Saying all of this, the old handset isn’t going into the bin just yet. It may be worn and worthless but its tri-band capabilities (I cannot vouch for the Nokia on this front) may make it a useful back up for international travel. The upgrade has given me added confidence for trying again when needs must but there is no rush and the probability of my developing an enthusiasm for fancy handsets is no higher.

A spot of roughness with VirtualBox 3.0.2 on Ubuntu

20th July 2009

Among the various things that I needed to do on Saturday, I got to looking at why VirtualBox Windows guests could not shut down, leaving their processes to be killed. Though it wasn’t clear at the outset, my suspicions began to centre on the sound hardware emulation and how it interacted with the host’s sound capabilities. A look at the VirtualBox log sent me that way after a spot of experimentation with reinstalling Windows 7 and adding the Guest Additions along with removal and reinstatement of the same for a Windows XP guest that makes my life easier. It also seems that the same problem blighted the start up of Linux guests too. Either removing virtual sound hardware or using the null sound driver seems to allow things to run smoothly. That may not sound ideal but it doesn’t bother me with the host providing all that I need. Also, it’s a moot point as to whether I have come across a bug in VirtualBox or whether using Ubuntu on a hardware configuration on which it wasn’t originally installed is the cause but I have found a way forward that suits me. Saying that, if I find that the issue disappears in a future release, that would be even better.
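The null sound driver can be chosen in the VM settings dialogue but, for the record, here is a rough sketch of the command line equivalent. It assumes the VBoxManage syntax of the 3.x series, where the modifyvm subcommand takes an --audio option; later releases may spell this differently so check the manual for your version. The helper name and the VM name are placeholders of mine, and the function only prints the command so that you can look it over before running it with the VM powered off.

```shell
# Print the VBoxManage command that switches a VM to the null audio driver.
# Assumes the VirtualBox 3.x option name (--audio); verify against your version.
# (null_audio_command is a hypothetical helper name.)
null_audio_command() {
    printf 'VBoxManage modifyvm "%s" --audio null\n' "$1"
}

# "Windows XP" is a placeholder for whatever your VM is called.
null_audio_command "Windows XP"
```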

A case of the reverse Midas touch?

18th May 2009

Last week, a power cut put my main home PC out of action. It may have been recoverable if that silly accident of a few weeks back hadn’t happened but a rebuild is in progress. That hasn’t been going so well but I somehow am managing to remain hopeful that an avenue of exploration will yield some fruit. Thoughts of throwing in the towel and calling in the professionals rather than throwing good money after bad are gathering. The saga is causing me to question the sense of self building in place of buying something ready built. Saying that, they can have their off days too.

In the meantime, I have been displaced onto the spare desktop PC and the laptop. In other words, my home computing needs are being fulfilled to a point though the feeling of frustrated displacement and partial disconnection from my data remains; I have been able to extricate most of my digital photos and my web building so things are far from being hopeless. With every disappointment, there remains an opportunity or two. The spare desktop runs Debian so I have been spending some time seeing if I can bend that to my will and it can be done, sometimes after a fashion.

A few posts should result from this period, not least regarding working with Debian. On the subject of hardware, I’ll not elaborate until the matter comes to a more permanent resolution. From past attempts (all were successful in the end), I know that the business of PC building can feel like a dark art: you are left there wondering why none of your efforts summon a working system to life and then it all comes together in the blink of an eye and you wonder why all the effort was expended. The best analogy that I can offer is awaiting a bus or train; it often seems that the waiting takes longer than the journey. Restoring my home computing to what it was before is a mere triviality compared to what some people have to suffer but resolution of a problem always puts a spring in my step.

Ubuntu upgrades: do a clean installation or use Update Manager?

9th April 2009

Part of some recent “fooling” brought on by the investigation of what turned out to be a duff DVD writer was a fresh installation of Ubuntu 8.10 on my main home PC. It might have brought on a certain amount of upheaval but it was nowhere near as severe as that following the same sort of thing with a Windows system. A few hours was all that was needed but it raises the question as to whether it is better to do an upgrade every time a new Ubuntu release is unleashed on the world or to go for a complete virgin installation instead. With Ubuntu 9.04 in the offing, that question takes on a more immediate significance than it otherwise might do.

Various tricks make the whole reinstallation idea more palatable. For instance, many years of Windows usage have taught me the benefits of separating system and user files. The result is that my home directory lives on a different disk to my operating system files. Add to that the experience of being able to reuse that home drive across different Linux distros and even swapping from one distro to another becomes feasible. From various changes to my secondary machine, I can vouch that this works for Ubuntu, Fedora and Debian; the latter is what currently powers the said PC. You might have to use superuser powers to attend to ownership and access issues but the portability is certainly there and it applies to anything kept on other disks too.

Naturally, there’s always the possibility of losing programs that you have had installed but losing the clutter can be liberating too. However, assembling a script made up of one or more apt-get install commands can allow you to get many things back at a stroke. For example, I have a test web server (Apache/MySQL/PHP/Perl) set up so this would be how I’d get everything back in place before beginning further configuration. It might be no bad idea to back up your collection of software sources either; I have yet to add all of the ones that I have been using back into Synaptic. Then there are closed source packages such as VirtualBox (yes, I know that there is an open source edition) and Adobe Reader. After reinstating the former, all my virtual machines were available for me to use again without further ado. Restoring the latter allowed me to grab version 9.1 (probably more secure anyway) and it inveigles itself into Firefox now too so the number of times that I need to go through the download shuffle before seeing the contents of a PDF is much reduced, though not completely eliminated, by the Windows-like ability to see a PDF loaded in a browser tab. Moving from software to hardware for a moment, it looks like any bespoke actions such as my activating an Epson Perfection 4490 Photo scanner need to be repeated but that was all that I needed to do. Getting things back into order is not so bad but you need to allow a modicum of time for this.
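To give an idea of what such a script might look like, here is a small sketch that turns a plain text list of package names into a single apt-get command. The function name, the list file and the package names in the example are all illustrative assumptions rather than a record of what I have installed; the command is printed rather than run so that it can be reviewed first.

```shell
# Turn a package list (one name per line, '#' starts a comment) into a
# single apt-get install command and print it for review.
# (build_install_command is a hypothetical helper name.)
build_install_command() {
    # Strip comments and blank lines, then let xargs join the names.
    sed -e 's/#.*//' -e '/^[[:space:]]*$/d' "$1" |
        xargs echo sudo apt-get install -y
}

# Example, assuming a file called packages.txt that lists apache2,
# mysql-server, php5 and perl on separate lines:
#   build_install_command packages.txt
```

Keeping the list in a file of its own means that it can live in the home directory and so survive a reinstallation along with everything else there.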

What I have discussed so far are what might be categorised as the common or garden aspects of a clean installation but I have seen some behaviours that make me wonder if the usual Ubuntu upgrade path is sufficiently complete in its refresh of your system. The counterpoint to all of this is that I may not have been looking for some of these things before now. That may apply to my noticing that DSLR support seems to be better with my Canon and Pentax cameras both being picked up and mounted for me as soon as they are connected to a PC, the caveat being that they are themselves powered on for this to happen. Another surprise that may be new is that the BBC iPlayer’s Listen Again works without further work from the user, a very useful development. It very clearly wasn’t that way before I carried out the invasive means. My previous tweaking might have prevented the in situ upgrade from doing its thing but I do see the point of not upsetting people’s systems with an overly aggressive update process, even if it means that some advances do not make themselves known.

So what’s my answer regarding which way to go once Ubuntu Jaunty Jackalope appears? For the sake of avoiding initial disruption, I’d be inclined to go down the Update Manager route first while reserving the right to do a fresh installation later on. All in all, I am left with the gut feeling that the jury is still out on this one.

Adding a new hard drive to Ubuntu

19th January 2009

This is a subject that I thought that I had discussed on this blog before but I can’t seem to find any reference to it now. I have discussed the subject of adding hard drives to Windows machines a while back so that might explain why I was under that impression. Of course, there’s always the possibility that I can’t find things on my own blog but I’ll go through the process.

What has brought all of this about was the rate at which digital images were filling my hard disks. Even with some housekeeping, I could only foresee the collection growing so I went and ordered a 1TB Western Digital Caviar Green Power from Misco. City Link did the honours with the delivery and I can credit their customer service with regard to organising delivery without my needing to get to the depot to collect the thing; it was a refreshing experience that left me pleasantly surprised.

For most of the time, hard drives that I have had generally got on with the job but there was one experience that has left me wary. Assured by good reviews, I went and got myself an IBM DeskStar and its reliability didn’t fill me with confidence so I will not touch their Hitachi equivalents because of it (IBM sold their hard drive business to Hitachi). This was a period in time when I had hardware faltering on me, with an Asus motherboard putting me off that brand around the same time as well (I now blame it for going through a succession of AMD Athlon CPUs). The result is that I have a tendency to go for brands that I can trust from personal experience and Western Digital falls into this category (as does Gigabyte for motherboards), hence my going for a WD this time around. That’s not to say that other hard drive makers wouldn’t satisfy my needs since I have had no problems with disks from Maxtor or Samsung but I’ll stick with those makers that I know until they let me down, something that I hope never happens.

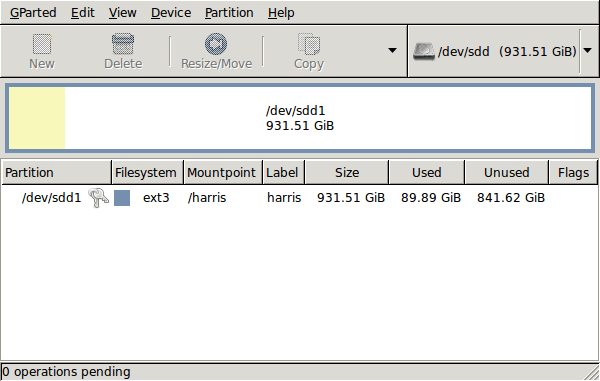

GParted running on Ubuntu

Anyway, let’s get back to installing the hard drive. The physical side of the business was the usual shuffle within the PC to add the SATA drive before starting up Ubuntu. From there, it was a matter of firing up GParted (System -> Administration -> Partition Editor on the menus if you already have it installed). The next step was to find the new empty drive and create a partition table on it. At this point, I selected msdos from the menu before proceeding to set up a single ext3 partition on the drive. You need to select Edit -> Apply All Operations from the menus to set things into motion before sitting back and waiting for GParted to do its thing.

After the GParted activities, the next task is to set up automounting for the drive so that it is available every time that Ubuntu starts up. The first thing to be done is to create the folder that will be the mount point for your new drive, /newdrive in this example. The next involves editing /etc/fstab with superuser access to add a line like the following with the correct UUID for your situation:

UUID=32cf775f-9d3d-4c66-b943-bad96049da53 /newdrive ext3 defaults,noatime,errors=remount-ro

You can also add a comment like “# /dev/sdd1” above that so that you know what’s what in the future. To get the actual UUID that you need to add to fstab, issue a command like one of those below, changing /dev/sdd1 to what is right for you:

sudo vol_id /dev/sdd1 | grep "UUID="    # Older Ubuntu versions

sudo blkid /dev/sdd1 | grep "UUID="    # Newer Ubuntu versions

This is the sort of thing that you get back from vol_id (blkid lays its output out a little differently, with the value in quotes) and the part beyond the “=” is what you need:

ID_FS_UUID=32cf775f-9d3d-4c66-b943-bad96049da53
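If you would rather not copy and paste the UUID by hand, the fstab entry can be assembled with a few lines of shell. This is only a sketch: the function name is made up for the purpose, the options are simply the ones used in this post, and the example assumes a blkid that supports the -s and -o value options for printing a bare value. The result is printed so that it can be checked before being added to /etc/fstab.

```shell
# Build an fstab entry from a UUID, a mount point and a filesystem type,
# using the mount options from this post.
# (make_fstab_line is a hypothetical helper name.)
make_fstab_line() {
    printf 'UUID=%s %s %s defaults,noatime,errors=remount-ro\n' "$1" "$2" "$3"
}

# Example, assuming /dev/sdd1 is the new partition:
#   uuid=$(sudo blkid -s UUID -o value /dev/sdd1)
#   make_fstab_line "$uuid" /newdrive ext3
```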

Once all of this has been done, a reboot is in order and you then need to set up folder permissions as required before you can use the drive. This part gets me firing up Nautilus using gksu and adding myself to the user group in the Permissions tab of the Properties dialogue for the mount point (/newdrive, for example). After that, I issued something akin to the following command to set global permissions:

chmod 775 /newdrive

With that, I had completed what I needed to do to get the WD drive going under Ubuntu. After that IBM DeskStar experience, the new drive remains on probation but moving some non-essential things on there has allowed me to free some space elsewhere and carry out a reorganisation. Further consolidation will follow but I hope that the new 931.51 GiB (binary gigabytes of 1024*1024*1024 bytes rather than the decimal gigabytes of 1,000,000,000 bytes preferred by hard disk manufacturers) will keep me going for a good while before I need to add extra space again.
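The arithmetic behind that figure is easy enough to check with awk. In the sketch below, the function name is mine and the second byte count is an assumption: it is a capacity commonly reported by drives sold as 1 TB rather than a number that I read off this particular disk.

```shell
# Convert a byte count to binary gigabytes (GiB), rounded to two decimals.
# (bytes_to_gib is a hypothetical helper name.)
bytes_to_gib() {
    awk -v bytes="$1" 'BEGIN { printf "%.2f\n", bytes / (1024 * 1024 * 1024) }'
}

bytes_to_gib 1000000000000   # a nominal 1 TB of 10^12 bytes: prints 931.32
bytes_to_gib 1000204886016   # assumed actual capacity: prints 931.51
```

The small surplus over a round 10^12 bytes would account for a reported size of 931.51 GiB rather than 931.32 GiB.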

Whither Fedora?

10th January 2009

There is a reason why things have got a little quieter on this blog: my main inspiration for many posts that make their way on here, Ubuntu, is just working away without much complaint. I have to say that BBC iPlayer isn’t working so well for me at the moment so I need to take a look at my setup. Otherwise, everything is continuing quietly. In some respects, that’s no bad thing and allows me to spend my time doing other things like engaging in hill walking, photography and other such things. I suppose that the calm is also a reflection of the fact that Ubuntu has matured but there is a sense that some changes may be on the horizon. For one thing, there are the opinions of a certain Mark Shuttleworth but the competition is progressing too.

That latter point brings me to Linux Format’s recently published verdict that Fedora has overtaken Ubuntu. I do have a machine with Fedora on there and it performs what I ask of it without any trouble. However, I have never been on it trying all of the sorts of things that I ask of Ubuntu so my impressions are not in-depth ones. Going deeper into the subject mightn’t be such a bad use of a few hours. What I am not planning to do is convert my main Ubuntu machine to Fedora. I moved from Windows because of constant upheavals and I have no intention to bring those upon me without good reason and that’s just not there at the moment.

Speaking of upheavals, one thought that is entering my mind is that of upgrading that main machine. Its last rebuild was over three years ago and computer technology has moved on a bit since then with dual and quad core CPUs from Intel and AMD coming into the fray. Of course, the cost of all of this needs to be considered too and that is never more true than in these troubled economic times. If you had asked me about the prospect of a system upgrade a few weeks ago, I would have ruled it out of hand. What has got me wondering is my continued use of virtualisation and the resources that it needs. I am getting mad notions like the idea of running more than one VM at once and I do need to admit that it has its uses, even if it puts CPUs and memory through their paces. Another attractive idea would be getting a new and bigger screen, particularly with what you can get for around £100 these days. However, my 17" Iiyama is doing very well so this is one for the wish list more than anything else. None of the changes that I have described are imminent but I have noticed how fast I am filling disks up with digital images so an expansion of hard disk capacity has come much higher up the to do list.

If I ever get to doing a full system rebuild with a new CPU, memory and motherboard (I am not so sure about graphics since I am no gamer), the idea of moving into the world of 64-bit computing comes about. The maximum amount of memory usable by 32-bit software is 4 GB so 64-bit is a must if I decide to go beyond this limit. That all sounds very fine but for the possibility of problems arising with support for legacy hardware. It sounds like another bridge to be assessed before its crossing, even if two upheavals can be made into one.
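The 4 GB figure falls straight out of the size of a 32-bit address: 2 to the power of 32 bytes is exactly 4 GiB. A quick sketch with awk shows the sum; the function name is just something that I have made up for illustration.

```shell
# Work out how many GiB can be addressed with a pointer of the given width.
# (addressable_gib is a hypothetical helper name.)
addressable_gib() {
    awk -v bits="$1" 'BEGIN { printf "%d\n", (2 ^ bits) / (1024 * 1024 * 1024) }'
}

addressable_gib 32   # prints 4
```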

Aside from system breakages, the sort of hardware and software changes over which I have been musing here are optional and can be done in my own time. That’s probably just as well for a very good reason that I have mentioned earlier. Being careful with money becomes more important at times like these and it’s good that free software not only offers freedom of choice and usage but also a way to leave the closed commercial software acquisition treadmill with all of its cost implications, leaving money for much more important things.