Using .htaccess to control hotlinking

10th October 2020There are times when blogs cease to exist and the only place to find the content is on the Wayback Machine. Even then, it is in danger of being lost completely. One such example is the subject of this post.

Though this website makes use of the facilities of Cloudflare for various functions that include the blocking of image hotlinking, the same outcome can be achieved using .htaccess files on Apache web servers. It may work on Nginx to a point too but there are other configuration files that ought to be updated instead of using a .htaccess when some frown upon the approach. In any case, the lines that need adding to .htaccess are listed below though the web address needs to include your own domain in place of the dummy example provided:

RewriteEngine on

RewriteCond %{HTTP_REFERER} !^$

RewriteCond %{HTTP_REFERER} !^http://(www\.)?yourdomain.com(/)?.*$ [NC]

RewriteRule .*\.(gif|jpe?g|png|bmp)$ [F,NC]

The first line turns on the mod_rewrite engine and you may have that done anyway. Of course, the module needs enabling in your Apache configuration for this to work and you have to be allowed to perform the required action as well. This means changing the Apache configuration files. The next pair of lines look at the HTTP referer strings and the third one only allows images to be served from your own web domain and not others. To add more, you need to copy the third line and change the web address accordingly. Any new lines need to precede the last line that defines the file extensions that are to be blocked to other web addresses.

RewriteEngine on

RewriteCond %{HTTP_REFERER} !^$

RewriteCond %{HTTP_REFERER} !^http://(www\.)?yourdomain.com(/)?.*$ [NC]

RewriteRule \.(gif|jpe?g|png|bmp)$ /images/image.gif [L,NC]

Another variant of the previous code involves changing the last line to display a default image showing others what is happening. That may not reduce the bandwidth usage as much as complete blocking but it may be useful for telling others what is happening.

Moving a website from shared hosting to a virtual private server

24th November 2018This year has seen some optimisation being applied to my web presences guided by the results of GTMetrix scans. It was then that I realised how slow things were, so server loads were reduced. Anything that slowed response times, such as WordPress plugins, got removed. Usage of Matomo also was curtailed in favour of Google Analytics while HTML, CSS and JS minification followed. What had yet to happen was a search for a faster server. Now, another website has been moved onto a virtual private server (VPS) to see how that would go.

Speed was not the only consideration since security was a factor too. After all, a VPS is more locked away from other users than a folder on a shared server. There also is the added sense of control, so Let’s Encrypt SSL certificates can be added using the Electronic Frontier Foundation’s Certbot. That avoids the expense of using an SSL certificate provided through my shared hosting provider and a successful transition for my travel website may mean that this one undergoes the same move.

For the VPS, I chose Ubuntu 18.04 as its operating system and it came with the LAMP stack already in place. Have offload development websites, the mix of Apache, MySQL and PHP is more familiar to me than anything using Nginx or Python. It also means that .htaccess files become more useful than they were on my previous Nginx-based platform. Having full access to the operating system by means of SSH helps too and should mean that I have fewer calls on technical support since I can do more for myself. Any extra tinkering should not affect others either, since this type of setup is well known to me and having an offline counterpart means that anything riskier is tried there beforehand.

Naturally, there were niggles to overcome with the move. The first to fix was to make the MySQL instance accept calls from outside the server so that I could migrate data there from elsewhere and I even got my shared hosting setup to start using the new database to see what performance boost it might give. To make all this happen, I first found the location of the relevant my.cnf configuration file using the following command:

find / -name my.cnf

Once I had the right file, I commented out the following line that it contained and restarted the database service afterwards using another command to stop the appearance of any error 111 messages:

bind-address 127.0.0.1

service mysql restart

After that, things worked as required and I moved onto another matter: uploading the requisite files. That meant installing an FTP server so I chose proftpd since I knew that well from previous tinkering. Once that was in place, file transfer commenced.

When that was done, I could do some testing to see if I had an active web server that loaded the website. Along the way, I also instated some Apache modules like mod-rewrite using the a2enmod command, restarting Apache each time I enabled another module.

Then, I discovered that Textpattern needed php-7.2-xml installed, so the following command was executed to do this:

apt install php7.2-xml

Then, the following line was uncommented in the correct php.ini configuration file that I found using the same method as that described already for the my.cnf configuration and that was followed by yet another Apache restart:

extension=php_xmlrpc.dll

Addressing the above issues yielded enough success for me to change the IP address in my Cloudflare dashboard so it pointed at the VPS and not the shared server. The changeover happened seamlessly without having to await DNS updates as once would have been the case. It had the added advantage of making both WordPress and Textpattern work fully.

With everything working to my satisfaction, I then followed the instructions on Certbot to set up my new Let’s Encrypt SSL certificate. Aside from a tweak to a configuration file and another Apache restart, the process was more automated than I had expected so I was ready to embark on some fine-tuning to embed the new security arrangements. That meant updating .htaccess files and Textpattern has its own, so the following addition was needed there:

RewriteCond %{HTTPS} !=on

RewriteRule ^ https://%{HTTP_HOST}%{REQUEST_URI} [R=301,L]

This complemented what was already in the main .htaccess file and WordPress allows you to include http(s) in the address it uses, so that was another task completed. The general .htaccess only needed the following lines to be added:

RewriteCond %{SERVER_PORT} 80

RewriteRule ^(.*)$ https://www.assortedexplorations.com/$1 [R,L]

What all these achieve is to redirect insecure connections to secure ones for every visitor to the website. After that, internal hyperlinks without https needed updating along with any forms so that a padlock sign could be shown for all pages.

With the main work completed, it was time to sort out a lingering niggle regarding the appearance of an FTP login page every time a WordPress installation or update was requested. The main solution was to make the web server account the owner of the files and directories, but the following line was added to wp-config.php as part of the fix even if it probably is not necessary:

define('FS_METHOD', 'direct');

There also was the non-operation of WP Cron and that was addressed using WP-CLI and a script from Bjorn Johansen. To make double sure of its effectiveness, the following was added to wp-config.php to turn off the usual WP-Cron behaviour:

define('DISABLE_WP_CRON', true);

Intriguingly, WP-CLI offers a long list of possible commands that are worth investigating. A few have been examined but more await attention.

Before those, I still need to get my new VPS to send emails. So far, sendmail has been installed, the hostname changed from localhost and the server restarted. More investigations are needed but what I have not is faster than what was there before, so the effort has been rewarded already.

Reloading .bashrc within a BASH terminal session

3rd July 2016BASH is a command-line interpretor that is commonly used by Linux and UNIX operating systems. Chances are that you will find find yourself in a BASH session if you start up a terminal emulator in many of these though there are others like KSH and SSH too.

BASH comes with its own configuration files and one of these is located in your own home directory, .bashrc. Among other things, it can become a place to store command shortcuts or aliases. Here is an example:

alias us=’sudo apt-get update && sudo apt-get upgrade’

Such a definition needs there to be no spaces around the equals sign and the actual command to be declared in single quotes. Doing anything other than this will not work as I have found. Also, there are times when you want to update or add one of these and use it without shutting down a terminal emulator and restarting it.

To reload the .bashrc file to use the updates contained in there, one of the following commands can be issued:

source ~/.bashrc

. ~/.bashrc

Both will read the file and execute its contents so you get those updates made available so you can continue what you are doing. There appears to be a tendency for this kind of thing in the world of Linux and UNIX because it also applies to remounting drives after a change to /etc/fstab and restarting system services like Apache, MySQL or Nginx. The command for the former is below:

sudo mount -a

Often, the means for applying the sorts of in-situ changes that you make are simple ones too and anything that avoids system reboots has to be good since you have less work interruptions.

On Upgrading to Linux Mint 11

31st May 2011For a Linux distribution that focuses on user-friendliness, it does surprise me that Linux Mint offers no seamless upgrade path. In fact, the underlying philosophy is that upgrading an operating system is a risky business. However, I have been doing in-situ upgrades with both Ubuntu and Fedora for a few years without any real calamities. A mishap with a hard drive that resulted in lost data in the days when I mainly was a Windows user places this into sharp relief. These days, I am far more careful but thought nothing of sticking a Fedora DVD into a drive to move my Fedora machine from 14 to 15 recently. Apart from a few rough edges and the need to get used to GNOME 3 together with making a better fit for me, there was no problem to report. The same sort of outcome used to apply to those online Ubuntu upgrades that I was accustomed to doing.

The recommended approach for Linux Mint is to back up your package lists and your data before the upgrade. Doing the former is a boon because it automates adding the extras that a standard CD or DVD installation doesn’t do. While I did do a little backing up of data, it wasn’t total because I know how to identify my drives and take my time over things. Apache settings and the contents of MySQL databases were my main concern because of where these are stored.

When I was ready to do so, I popped a DVD in the drive and carried out a fresh installation into the partition where my operating system files are kept. Being a Live DVD, I was able to set up any drive and partition mappings with reference to Mint’s Disk Utility. What didn’t go so well was the GRUB installation, and it was due to the choice that I made on one of the installation screens. Despite doing an installation of version 10 just over a month ago, I had overlooked an intricacy of the task and placed GRUB on the operating system files partition rather than at the top level for the disk where it is located. Instead of trying to address this manually, I took the easier and more time-consuming step of repeating the installation like I did the last time. If there was a graphical tool for addressing GRUB problems, I might have gone for that instead, but am left wondering at why there isn’t one included at all. Maybe it’s something that the people behind GRUB should consider creating unless there is one out there already about which I know nothing.

With the booting problem sorted, I tried logging in only to find a problem with my desktop that made the system next to unusable. It was back to the DVD and I moved many of the configuration files and folders (the ones with names beginning with a “.”) from my home directory in the belief that there might have been an incompatibility. That action gained me a fully usable desktop environment but I now think that the cause of my problem may have been different to what I initially suspected. Later I discovered that ownership of files in my home area elsewhere wasn’t associated with my user ID though there was no change to it during the installation. As it happened, a few minutes with the chown command were enough to sort out the permissions issue.

The restoration of the extra software that I had added beyond what standardly gets installed was took its share of time but the use of a previously prepared list made things so much easier. That it didn’t work smoothly because some packages couldn’t be found the first time around, so another one was needed. Nevertheless, that is nothing compared to the effort needed to do the same thing by issuing an installation command at a time. Once the usual distribution software updates were in place, all that was left was to update VirtualBox to the latest version, install a Citrix client and add a PHP plugin to NetBeans. Then, next to everything was in place for me.

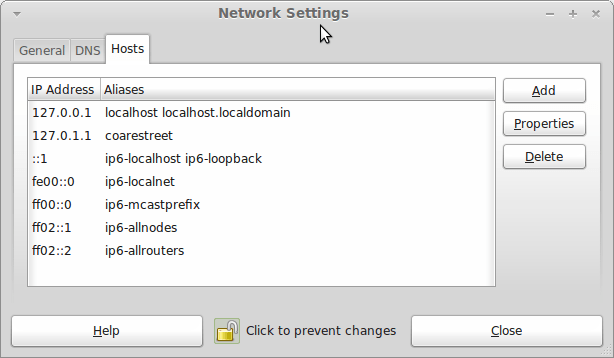

Next, Apache settings were restored as were the databases that I used for offline web development. That nearly was all that was needed to get offline websites working but for the need to add an alias for localhost.localdomain. That required installation of the Network Settings tool so that I could add the alias in its Hosts tab. With that out of the way, the system had been settled in and was ready for real work.

In light of some of the glitches that I saw, I can understand the level of caution regarding a more automated upgrade process on the part of the Linux Mint team. Even so, I still wonder if the more manual alternative that they have pursued brings its own problems in the form of those that I met. The fact that the whole process took a few hours in comparison to the single hour taken by the in-situ upgrades that I mentioned earlier is another consideration that makes you wonder if it is all worth it every six months or so. Saying that, there is something to letting a user decide when to upgrade rather than luring one along to a new version, a point that is more than pertinent in light of the recent changes made to Ubuntu and Fedora. Whichever approach you care to choose, there are arguments in favour as well as counterarguments too.