5th December 2007

As of the time of writing, Amazon MP3 is only available to customers in the U.S. of A., so any enthusiasm for its provision of DRM-free digital music has to be tempered by that limitation on its availability. Apple’s iTunes store offers some DRM-free tracks, but locked-down ones are its mainstay. Amazon’s restrictions aren’t the first in the digital audio world and they are unlikely to be the last either. Pandora has done the same in the world of internet radio, and I seem to remember that iTunes did so too in its time.

There may be other reasons, but licensing and royalties might need to be negotiated country by country, slowing the rollout of new products across the world. The iPhone faced a similar situation, though there the negotiations involved mobile network operators. Commercial considerations play their part too, and I suppose that a worldwide launch of the iPhone might have been too complex a feat for Apple to manage; they probably wanted to nurture a sense of anticipation among customers in any case.

It seems that things are still following a pattern that at least used to be endemic in the motion picture industry: the U.S. gets to see a film first and everywhere else gets it thereafter. The studios’ advantage from staggered releases has been the ability to reuse the film reels first shown in American cinemas. Because cinema releases have been staggered, video and DVD releases were staggered too, so it’s both intriguing and frustrating to see American companies using a similar launch strategy in wholly different market sectors. It’s amazing how old habits die hard…

31st January 2020

Needing to access websites that block visitors from some countries, I decided to give CyberGhost a go; it also will come in handy when connecting devices to other Wi-Fi networks. I took the three-year subscription package and all went well on the first day of use. However, things became unusable on the second day and a reboot did not sort it.

The problem seemed to affect a phone running Android too, and I even got to suspecting my router and broadband provider. Even terminating the subscription came to mind, but it did not come to that. Instead, I did a bit more research and tried changing the maximum transmission unit (MTU) for the connection to 1300, as suggested in a CyberGhost help article. Changes made through the Control Panel kept getting reset to 1500 on my Windows 10 machine, so I then turned to a command-line solution.

To do that, I started PowerShell in administrator mode from the context menu produced by right-clicking the Start button on the taskbar. Then, I entered the following command to see what connections I had and what their MTU settings were:

```powershell
netsh interface ipv4 show subinterfaces
```

From looking through the Settings and Control Panel applications, I had already worked out which network interface belonged to the CyberGhost connection. Seeing that its MTU setting was 1500, I then issued a command like the following to change that to 1300:

```powershell
netsh interface ipv4 set subinterface "<name of ethernet interface>" mtu=1300 store=persistent
```

Here, `<name of ethernet interface>` gets replaced by the name of your connection, and the name is quoted so that any spaces in it do not break the command. Once that second command had been run, the first one was issued again and its output checked to ensure that the MTU setting was as expected.
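Checking that output by eye works well enough, but for anyone wanting to script the check, here is a minimal Perl sketch that parses the table. It assumes the output has the columns MTU, MediaSenseState, Bytes In, Bytes Out and Interface in that order; the sample text, the interface name `CyberGhost VPN` and the helper `parse_subinterfaces` are all made up for illustration.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Turn the table printed by "netsh interface ipv4 show subinterfaces" into a
# hash of interface name => MTU. Assumes the column order
# MTU, MediaSenseState, Bytes In, Bytes Out, Interface.
sub parse_subinterfaces {
    my ($text) = @_;
    my %mtu_for;
    for my $line (split /\r?\n/, $text) {
        # Data rows begin with four numbers; the header and dashed rows do not.
        next unless $line =~ /^\s*(\d+)\s+\d+\s+\d+\s+\d+\s+(.+?)\s*$/;
        $mtu_for{$2} = $1;   # names may contain spaces, so the rest is taken whole
    }
    return \%mtu_for;
}

# Illustrative sample only: the interface names and byte counts are invented.
my $sample = <<'END';

   MTU  MediaSenseState   Bytes In  Bytes Out  Interface
------  ---------------  ---------  ---------  -------------
4294967295                1          0      36192  Loopback Pseudo-Interface 1
  1500                1  812345678   45678901  Ethernet
  1500                1          0          0  CyberGhost VPN
END

my $vpn  = 'CyberGhost VPN';   # hypothetical name: substitute your own interface
my $mtus = parse_subinterfaces($sample);
if (!exists $mtus->{$vpn}) {
    print "No interface called '$vpn' was found\n";
} elsif ($mtus->{$vpn} != 1300) {
    print "$vpn MTU is $mtus->{$vpn}, not 1300\n";
} else {
    print "$vpn MTU is 1300 as expected\n";
}
```

On a real machine with Perl installed, the sample could be swapped for live output captured with `qx(netsh interface ipv4 show subinterfaces)`. Matching on four leading numbers rather than fixed column widths is a deliberate choice, since the widths shift with the size of the figures.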

I made the change while the VPN connection was inactive, though it may also work with an active connection. Afterwards, I reconnected to the VPN and all has been as expected since then; I found a better connection for my Android phone too.

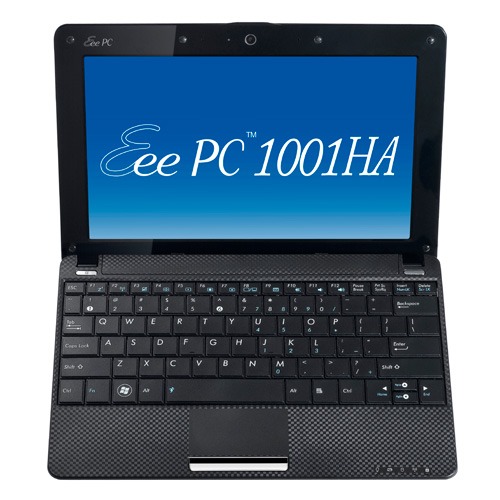

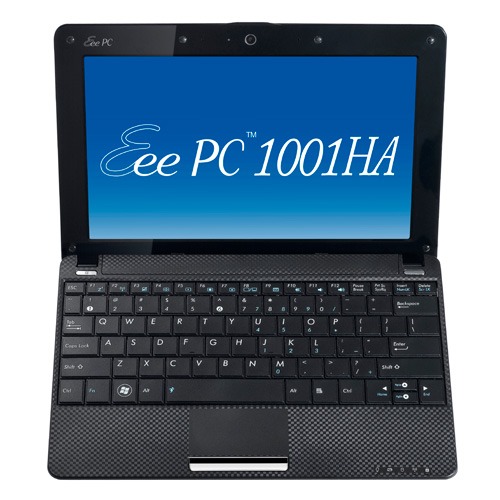

7th October 2010

Having had an Asus Eee PC 1001 HA for a few weeks now, I thought that it might be opportune to share a few words about the thing on here. The first thing that struck me when I got it was the size of the box in which it came. Being accustomed to things coming in large boxes meant the relatively diminutive size of the package was hard not to notice. Within that small box was the netbook itself along with the requisite power cable and not much else apart from warranty and quickstart guides; so that’s how they kept things small.

Though I was well aware of the size of a netbook from previous bouts of window shopping, just how small something with a 10″ screen is hadn’t embedded itself into my consciousness. Despite that small size, it comes with more of the trappings of desktop computing than might be expected. First, there’s a 160 GB hard disk and 1 GB of memory, neither of which is disgraceful, and the memory module sits behind a panel opened by loosening a screw, so I am left wondering about adding more. Sockets for network and VGA cables are included along with three USB ports and sockets for a set of headphones and for a microphone. Portability starts to come to the fore with the inclusion of an Intel Atom CPU and a slot for an SD card. Unusual inclusions come in the form of an onboard webcam and microphone, both of which I plan on leaving off for the sake of privacy. Wi-Fi is another networking option, so you’re not short of features. The keyboard is not too compromised either and the trackpad is the sort of thing that you’d find on full-size laptops. With the latter, you can use gestures too, so I need to learn which ones are available.

The operating system that comes with the machine is Windows XP and there are some extras bundled too. These include a trial of Trend Micro as an initial security software option as well as Microsoft Works and a trial of Microsoft Office 2007. Then, there are some Asus utilities too, though they are not so useful to me. All in all, none of these burden the processing power too much, and IE8 comes installed too. Being a tinkerer, I have added some of the sorts of things that I’d have on a full-size PC. Examples include Mozilla Firefox, Google Chrome, Adobe Reader and Adobe Digital Editions. Pushing the boat out further, I used Wubi to get Ubuntu 10.04 on there in the same way as I have done with my 15″ Toshiba laptop. So far, nothing seems to overwhelm the available processing power, though I am left wondering about battery life.

The mention of battery life brings me to mulling over how well the machine operates. So far, I am finding that the battery lasts around three hours, much longer than on my Toshiba but nothing startling either. Nevertheless, it does conserve power by going into sleep mode when you leave it unattended for long enough. Still, I’d be inclined to find a socket if I were undertaking a long train journey.

According to the specifications, it is supposed to weigh around 1.4 kg; that has not been a burden to carry so far, and the smaller size makes it easy to pop into any bag. It also seems sufficiently robust to be carried by bicycle, though I wouldn’t be inclined to carry it over too many rough roads. In fact, the manufacturer advises against carrying it anywhere (by bike or otherwise) without switching it off first, but that’s a common-sense precaution.

Start-up times are respectable, though you feel the time going by when you’re on a forty-minute bus journey, and shutdown needs some time set aside near the end. Screen resolution tops out at 1024×600 and the shallowness of the screen is noticeable, reminding you that you are using a portable machine. Because of that, there have been times when I hit the F11 key to get a full-screen web browser session. Coupled with the Vodafone mobile broadband dongle that I have, it has done some useful things for me while on the move so long as there is sufficient signal strength (seeing the type of connection change between 3G, EDGE and GPRS is instructive). All in all, it’s not a chore to use so long as Internet connections aren’t temperamental.

11th January 2013

As of today, Jessops has ceased trading. It is the third specialist purveyor of photographic equipment to go this way. Jacobs, another Leicester-headquartered competitor, met the same fate, as did the Wildings chain in the northwest of England. These were smaller operations than Jessops, which may have overreached itself during the boom years and certainly had its share of financial troubles in recent times, the latest of which has put an end to the operation.

Many are pondering what is happening and the temptation is to blame the rise of e-commerce and the economic situation for all of this. In addition, I have seen poor service blamed. However, where do we go from here? Has photography become such a specialised market that you need a diversified business to stick with it? After all, independent retailers have been taking a hammering too and some have gone out of business like the chains that I have mentioned here.

It does raise the question as to where folk making a photographic purchase are going to go for advice now; is the web sufficiently beginner-friendly? There seemingly are going to be fewer bricks-and-mortar shops out there now, so finding the kind of one-to-one advice that once was readily available is looking harder. Photographic magazines will help and the web has a big role to play too. It certainly informed some of my previous purchases, but I have been that little bit more serious about my photography for a while now.

It might be that photography is becoming more specialist again after a period when the advent of digital cameras caused an explosion in interest. Cameras on mobile phones are becoming ever more capable and are cannibalising the compact camera market for those only interested in point-and-shoot machinery. Maybe that is where things are going: given the speed of technological advance, mass-market photography doesn’t offer the future that it once might have done. The present and the future are as interesting as they have become utterly uncertain.

Thinking over the last ten years or so, there has been a lot of change and that seems set to continue, even if I am left wondering whether photography has shot its bolt by now. My first SLR came from a Stockport branch of Jessops and was a film camera, a Canon EOS SLR. It certainly got me going and was later exchanged for a Canon EOS 30 from Ffordes in an internet transaction, during which the phone system around Manchester and Cheshire went on the blink. That outage may have exposed a frailty of our networked world, but there has been no fire to melt cables in a tunnel since then. Further items from Jessops came via the same channel, such as a Manfrotto 055 tripod and my Pentax K10D. A Canon-fit 28-135 mm Sigma lens came from Jessops’ then Manchester Deansgate store, and another Canon-fit Sigma lens, a 70-300 mm telephoto affair, came from another branch of the chain. That was not the Macclesfield branch, since it had yet to be established, and there’s no photographic store left in the town now after the Jessops and Wildings closures.

Those purchases have become history just like the photographic retail chain from which they were sourced. These days, I am more than comfortable in making dealings over the web, but the concern that I expressed earlier about those starting out remains. How that plays out will be interesting to see. Might it limit the take-up of photography on a more serious basis? That is a question that could get a very interesting answer as we continue into ever more uncertain times.

12th March 2012

Recent exposure to both the HTC Wildfire S and the HTC Desire S has me wondering if we need mobile broadband dongles anymore. The reason for my asking this is that both Android devices can act as mobile Wi-Fi hubs, and they work very well in that role too. Even the dedicated T-Mobile mobile Wi-Fi hub that I picked up in the closing months of 2011 now looks a little obsolete, though it retains a cost advantage in its favour.

In the case of both HTC phones, it thankfully is possible to use high-security encryption and a passkey too. However, it is best to change the default key before activating the Wi-Fi hotspot, if only to ensure that you don’t end up with a very nasty bill. The Wi-Fi Hotspot App has all the settings that you need, and up to five connections can be supported at a time, just like the T-Mobile hub that I mentioned earlier.

That hub replaced a defunct dongle from the same company, whose USB connection had been ailing for a while before it appeared to fail altogether. Now, the more transparent option of using an actual mobile phone might be usurping the hub as well, especially as I have been wondering why the phone has been doing so well with internet connectivity while on the move. This voyage into the world of what smartphones and similar devices can do is throwing up its share of surprises as I go along.

3rd August 2007

In the times when all software was bought boxed, there were fewer issues with finding serial numbers, activation codes and the like. If you were tidy and retained the packaging and documentation while knowing where to find them, you were away. However, in these days of software distribution over the web, things are a little less clear-cut. The said codes tend to reside in emails sent following the purchase and, if you are like me, those emails tend to be scattered around the place; that is not a good thing when you need to reinstall your software after a system meltdown, which is what I am needing to do now. Another trap is that expensive software could disappear all of a sudden if your hard drive crashes, not an enticing thought. Backing up both the installers and the product keys seems very much in order.

20th October 2013

GNOME 3.10 came out last month, but it took until its inclusion in the Arch and Antergos repositories for me to see it in the flesh. Apart from the risk of instability, this is the sort of thing at which rolling distributions excel: they can give you a chance to see the latest software before it is included anywhere else. For the GNOME desktop environment, the alternative might have meant awaiting the next release of Fedora in order to glimpse what is coming. Waiting is not always a bad thing, and Ubuntu GNOME seems to be sticking with a release one behind the latest version. Since many GNOME Shell extension writers do not update their extensions until Fedora has caught up with the latest GNOME release in a stable release of its own, this is no bad thing; it means that a version of the desktop environment has been well bedded in by the time it reaches the world of Ubuntu too. Debian takes this even further by using a stable version from a few years ago, and there is an argument in favour of that from a solidity perspective.

Being in the habit of kitting out GNOME Shell with extensions, I have a special interest in seeing which ones still work, which could work with a little tweaking and which have fallen from favour. In the top panel, the major change has been to replace the sound and user menus with a single aggregate menu. The user menu in particular had received a lot of attention from extension writers, and their efforts now need either reworking or dropping after the latest development. The GNOME project seems to have picked up an annoying habit from WordPress in that the GNOME Shell API keeps changing and breaking extensions (plugins in the case of WordPress). There is one WordPress habit that needs copying, though, and that is documentation, especially of that API, for it is hardly anywhere to be found.

GNOME Shell theme developers don’t escape either: a large border appeared around the panel when I used Elementary Luna 3.4, so I turned to XGnome Enhanced (found via GNOME-Look.org) instead. The former no longer is being maintained since its developer no longer uses GNOME Shell and has not got the same itch to scratch; maybe someone else could take it over, because it worked well enough up to 3.8. So far, the new theme works for me, so that will be an option should there be a move to GNOME 3.10 on one of my PCs at some point in the future.

Returning to the subject of extensions, I had a go at seeing how the included Applications Menu extension works now, since it wasn’t the most stable of items before. That has improved and it looks very usable too, so I am not awaiting the updating of the Frippery equivalent. That the GNOME Shell backstage view has not moved on that much from how it was in 3.8 could be seen as a disappointment, but the workaround will do just fine. Aside from the Frippery Applications Menu, there are other extensions that I use heavily that have yet to be updated for GNOME Shell 3.10. After a spot of success ahead of a possible upgrade to Ubuntu GNOME 13.10 and GNOME Shell 3.8 (though I remain with version 13.04 for now), I decided to see if I could port a number of these to the latest version of the user interface. Below, you’ll find the results of my labours, so feel free to make use of these updated items if you need them before they are updated on the GNOME Shell Extensions website:

- Frippery Bottom Panel

- Frippery Move Clock

- Remove App Menu

- Show Desktop

GNOME 3.10 brings more changes than those to GNOME Shell, which essentially is a JavaScript construction. The consolidation of application title bars in GNOME applications continues, and a big exit button has appeared in the affected applications that wasn’t there before. Also, the previously shared modifications to Nautilus (also known as Files) can still be applied, and a number of these usefully extend themselves to other applications such as Gedit. Speaking of Gedit, this gains a very useful x of y numbering for its string search, with x being the number of the current occurrence of a certain piece of text in a file and y being its total number of occurrences. GNOME Tweak Tool has had an overhaul too and has lost the setting that makes a path entry box appear in Nautilus instead of the path bar; opening Dconf-Editor, going to org > gnome > nautilus > preferences and ticking the box for always-use-location-entry will do the needful.

Essentially, the GNOME project is continuing along the path on which it set out a few years ago. Though I would rather that GNOME Shell were more mature, invasive changes are still coming, and it leaves me wondering if or when this might stop. Maybe that is the consequence of mounting a controversial experiment when users were happy with what was there in GNOME 2. The arrival of Fedora 20 should bring with it an increase in the number of GNOME Shell extensions that have been updated. So long as it remains stable, Antergos is a good way to have a look at the latest version of GNOME for now, and Cinnamon fans may be pleased that Cinnamon 2.0 is another desktop option for the Arch-based distribution. An opportunity to say more about that may arrive yet once the Antergos installer stops failing at a troublesome package download; a separate VM is being set aside for a look at Cinnamon because it destabilised GNOME during a previous look.

28th March 2008

Putting this blog back on its feet after a spot of web hosting bother caused me to learn a bit more about web hosting than I otherwise might have done. Here’s a selection of lessons, in no particular order:

- Store your passwords securely and where you can find them, because you never know when a foul-up of your own making can strike. For example, a faux pas with a configuration file is all that’s needed to cause havoc for a database site such as a WordPress blog. After all, nobody’s perfect and your hosting provider may not get you out of trouble as quickly as you might like.

- Get a MySQL database or equivalent as part of your package rather than buying one separately. If your provider allows a trial period, then changing from one package to another could be cheaper and easier than if you bought a separate database and needed to jettison it because you changed from, say, a Windows package to a Linux one or vice versa.

- It might be an idea to avoid a reseller unless the service being offered is something special. Going for the sake of lower cost can be a false economy and it might be better to cut out the middleman altogether and go direct to their provider. Being able to distinguish a reseller from a real web host would be nice but I don’t see that ever becoming a reality; it is hardly in resellers’ interests, after all.

- Should you stick with a provider that takes several days to resolve a serious outage? The previous host of this blog had a major MySQL server outage that lasted for up to three days and seeing that was one of the factors that made me turn tail to go to a more trusted provider that I have used for a number of years. The smoothness of the account creation process might be another point worthy of consideration.

- Sluggish system support really can frustrate, especially if there is no telephone support provided and the online ticketing system seems to take forever to deliver solutions. I would strongly advise that a host who offers a helpline is a much better option than one who doesn’t. Having said all of that, I think that it’s best to be patient, though when your website is offline that might not be as easy as you’d hope.

- Setting up hosting or changing from one provider to another can take a number of days because of all that needs doing. So, it’s best to allow for this and plan ahead. Account creation can be very quick but setting up the website can take time while domain name transfer can take up to 24 hours.

- It might not take the same amount of time to set up Windows hosting as its Linux equivalent. I don’t know if my experience was typical but I have found that the same provider set up Linux hosting far quicker (within 30 minutes) than it did for a Windows-based package (several hours).

- Be careful which package you select; it can be easy to pick the wrong one depending on how your host’s site is laid out and what they are promoting at the time.

- You can have a Perl/PHP/MySQL site working on Windows, even with IIS used in place of Apache. The Linux/Apache/Perl/PHP/MySQL approach might still be better, though.

- The Windows option allows for ASP, .NET and other such Microsoft technologies to be used. I have to say that my experience and preference is for open source technologies, so Linux is my mainstay, but learning about the other side can never hurt from a career point of view. After all, I am writing this on a Windows Vista-powered laptop to see how the other half live as much as anything else.

- Domains serviced by hosting resellers can be visible to the systems of those from whom they buy their wholesale hosting. This frustrated my initial attempts to move this blog over: I couldn’t get an account set up for technologytales.com because a reseller already had it on the same system. It was only when I got the reseller to delete the account with them that things began to run more smoothly.

- If things are not going as you would like them, getting your account deleted might be easier than you think so don’t procrastinate because you think it a hard thing to do. Of course, it goes without saying that you should back things up beforehand.

15th February 2007

There are things in the Vista EULA that gave me the heebie-jeebies when I first saw them. In fact, one provision set off something of a storm across the web in the latter part of 2006. Microsoft in its wisdom went and made everything more explicit and raised Cain in doing so. It was its clarification of the one machine, one licence understanding that was at the heart of the whole furore. The new wording made it crystal clear that you were allowed to move your licence to another machine once and once only. After howls of protest, the XP wording reappeared and things calmed down again.

Around the same time, Paul Thurrott published his take on the Vista EULA on his Windows SuperSite. He takes the view that the new EULA only clarified what was in the XP one and that enthusiast PC builders are but a small proportion of the software market. Another interesting point that he makes is that there is no need to license the home user editions of Vista for use in virtual machines because those users would not be doing that kind of thing. The logical conclusion of this argument is that only technical business users and enthusiasts would ever want to do such a thing; I am both. On the same site, Koroush Ghazi of TweakGuides.com offers an alternative view, at Thurrott’s invitation, from the enthusiast’s side. That view takes note of the restrictions of both the licensing and all of the DRM technology that Microsoft has piled into Vista. Another point made is that enthusiasts add a lot to the coffers of both hardware and software producers.

Bit-tech.net got the Microsoft view on the number of activations possible with a copy of retail Vista before further action is required. The number comes in at 10, and that seems a little low. However, Vista will differ from XP in that it thankfully will not need reactivation as often. In fact, it will take changing a hard drive and one other component to trigger it. That’s less stringent than needing reactivation after changing three components from a wider list within a set period, as is the case with XP. I cannot remember the exact duration of the period in question but 60 days seems to ring a bell.

OEM Vista is more restrictive than this: one reactivation and no more. I learned that from the current issue of PC Plus, the trigger of my concern regarding Windows licensing. Nevertheless, so long as no hard drive changes go on, you should be fine. That said, I do wonder what happens if you add or remove an external hard drive. On this basis at least, it seems OEM is not such a bargain then and Microsoft will not support you anyway.

However, there are cracks appearing in the whole licensing edifice and the whole thing is beginning to look a bit of a mess. Brian Livingston of Windows Secrets has pointed out that you could do a clean install using only the upgrade edition(s) of Vista by installing it twice. The Vista upgrade will upgrade over itself, allowing you access to the activation process. Of course, he recommends that you only do this when you are already in possession of an XP licence, and it does mean that your XP licence isn’t put out of its misery, apparently a surprising consequence of the upgrade process if I have understood it correctly.

However, this is not all. Jeff Atwood has shared on his blog, Coding Horror, that the 30-day activation grace period can be extended in three increments to 120 days. Another revelation was that all Windows editions are on the DVD and it is only the licence key in your possession that determines the version that you install. In fact, you can install any version for 30 days without entering a licence key at all. Therefore, you can try 32-bit or 64-bit versions and any of the Home Basic, Home Premium, Business or Ultimate editions. The only catch is that once the grace period is up, you have to license the version that is installed at that point in time.

None of the above requires any cracking (a quick Google search digs up loads of references to cracking of the Windows activation process). It sounds surprising but it is none other than Microsoft itself who has made these possibilities available, albeit in an undocumented fashion. And the reason apparently is not commercial benevolence but the need to keep its technical support costs under control.

That said, an unintended consequence of the activation period extensibility is that PC hardware enthusiasts, the types who rebuild their machines every few months, would never have to activate their copies of Vista, thus avoiding any issues with the 1 or 10 activation limit: an interesting workaround for the limitations in the first place. (In contrast, I regard my main PC as a workhorse and I have no wish to cause undue disruption to my life with this sort of behaviour, but each to their own; it’s not as if they are doing anyone else any harm.) And all of this is available without using a fake Windows activation server (illegally, no doubt), as has been reported.

With all of these back doors inserted into the activation process by Microsoft itself, it makes some of the scarier provisions look not only over the top but also plain silly: a bit like using a sledgehammer to crack a nut. For instance, there is a provision that Microsoft could kill your Windows licence if it deems that you breached its terms. It looks as if it’s meant to cover the loss in functionality at the end of the activation grace period, but it does rather give the appearance that your £370 Vista Ultimate is as ephemeral as a puff of smoke: overdoing that reminder is an almost guaranteed method of encouraging power users to jump ship to Linux or another UNIX. And the idea of Windows Genuine Advantage continually phoning home doesn’t provide any great reassurance either. However, it does seem that Microsoft has reactivated XP licences over the phone when reasonable grounds are given: irredeemable loss of a system, for example. That ease and cost of technical support returns again. There is a corollary to this: make life easy for Microsoft and they won’t bother you very much if at all. Incidentally, if they ever did do a remote kill of your system, the whole action would be akin to skating on legal thin ice. And I suspect that they may not like making trouble for themselves.

I think I’ll let the dust settle and stay on my XP planet while in a Vista universe. As it happens, Paul Thurrott has a good article on that subject too.

30th September 2019

In a previous post, I described how to add Perl modules in Linux Mint while mentioning that I hoped to add another that discusses the use of the Parallel::ForkManager module. This is that second post and I am going to keep things as simple and generic as they can be. There are other articles, like one on the Perl Maven website, that go into more detail.

The first thing to do is ensure that the Parallel::ForkManager module is loaded by your script, and having the following line near the top will do just that. Without this step, the script will not be able to find the module by itself and errors will be generated.

```perl
use Parallel::ForkManager;
```

Then, the maximum number of child processes needs to be specified (Parallel::ForkManager forks separate processes rather than creating threads, though I loosely call them threads here). While that can be achieved using a simple variable declaration, the following line reads it from the command line used to invoke the script. It even tells a forgetful user what they need to do in its own terse manner. Here, $0 is the name of the script and N is the maximum number of child processes. This is a ceiling rather than a target: no more than N children will run at any one time, and how much of the processor they actually use depends on the work being done, so choosing a sensible limit lessens the chance of overwhelming a CPU.

```perl
my $forks = shift or die "Usage: $0 N\n";
```

Once the maximum number of child processes is known, the next step is to instantiate the Parallel::ForkManager object that will manage them:

```perl
my $pm = Parallel::ForkManager->new($forks);
```

With the Parallel::ForkManager object available, it is now possible to use it as part of a loop. A foreach loop works well, though it only walks through a single array; hashes are needed when other collections have to be consulted. Two extra statements are needed: one to start a child process and another to end it.

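```perl
foreach my $t (@array) {
    # In the parent, start() returns the child's PID, so "and next" skips ahead;
    # in the child it returns 0, so execution carries on into the loop body.
    my $pid = $pm->start and next;

    # ... other code to be processed for $t goes here ...

    $pm->finish;    # the child process exits here
}
```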

Since there often is other processing performed by a script, and it is possible to have multiple parallel loops in one, there needs to be a way of getting the parent process to wait until all the child processes have completed before moving from one step to another in the main script, and that is what the following statement does. In short, it adds more control.

```perl
$pm->wait_all_children;
```
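Putting those pieces together, here is a minimal, self-contained sketch of a complete script. It is not the script behind my own timings: `process_item` and `run_parallel` are hypothetical names, the work is a toy summation, and results are passed back to the parent using the module’s `run_on_finish` callback together with the data reference that `finish` accepts, which needs a reasonably recent Parallel::ForkManager installed as described in the previous post.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Parallel::ForkManager;

# Hypothetical stand-in for the real per-item work: add up the integers 1..$n.
sub process_item {
    my ($n) = @_;
    my $total = 0;
    $total += $_ for 1 .. $n;
    return $total;
}

# Run process_item over @items with at most $max_procs children at once and
# return a hash of item => result gathered back in the parent.
sub run_parallel {
    my ($max_procs, @items) = @_;
    my %results;
    my $pm = Parallel::ForkManager->new($max_procs);

    # Called in the parent as each child exits; $data is a reference to
    # whatever the child handed to finish().
    $pm->run_on_finish(sub {
        my ($pid, $exit_code, $ident, $exit_signal, $core_dump, $data) = @_;
        $results{$ident} = $$data if defined $data;
    });

    foreach my $item (@items) {
        $pm->start($item) and next;        # parent: on to the next item
        my $value = process_item($item);   # child: do the work
        $pm->finish(0, \$value);           # child: exit, passing $value back
    }
    $pm->wait_all_children;                # parent: wait for every child
    return %results;
}

# As in the post, N comes from the command line; unlike the post, this
# sketch falls back to 4 when no argument is given.
my $forks = @ARGV ? shift @ARGV : 4;
die "Usage: $0 N (a positive whole number)\n" unless $forks =~ /^[1-9]\d*$/;

my %totals = run_parallel($forks, 10, 100, 1000);
printf "%d => %d\n", $_, $totals{$_} for sort { $a <=> $b } keys %totals;
```

Because each child is a separate process, it cannot simply write into a variable held by the parent; that is why the results travel back through `finish` and `run_on_finish` rather than being stored directly inside the loop.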

To close, there needs to be a comment on the advantages of parallel processing. Modern multi-core processors often get used for single-threaded operations, and that leaves most of their capacity unused. Utilising this extra power then can shorten processing times markedly. To give you an idea of what can be achieved, I had a single script taking around 2.5 minutes to complete in single-threaded mode, while setting the maximum number of threads to 24 reduced this to just over half a minute and took up 80% of the processing capacity. This was with an AMD Ryzen 7 2700X CPU with eight cores and a maximum of 16 processor threads. Surprisingly, using 16 as the maximum thread number only used half the processor capacity, so it seems to be a matter of performing one’s own measurements when making these decisions.
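Since the right limit seems to depend on the machine and the workload, a small timing harness can help with those measurements. This is a sketch under stated assumptions: `busy_work` and `time_run` are hypothetical names, the CPU-bound job is a toy, and the job size, job count and list of limits tried are arbitrary choices to be adjusted for your own system. It uses Time::HiRes for sub-second timing and reports each run’s speed-up relative to a single child process.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Time::HiRes qw(time);
use Parallel::ForkManager;

# Hypothetical CPU-bound job standing in for the real per-item work.
sub busy_work {
    my ($n) = @_;
    my $x = 0;
    $x += sqrt($_) for 1 .. $n;
    return $x;
}

# Wall-clock seconds for one pass over @jobs with at most $max_procs children.
sub time_run {
    my ($max_procs, @jobs) = @_;
    my $pm = Parallel::ForkManager->new($max_procs);
    my $t0 = time();
    foreach my $n (@jobs) {
        $pm->start and next;   # parent moves on; child falls through
        busy_work($n);
        $pm->finish;           # child exits here
    }
    $pm->wait_all_children;    # parent waits for any stragglers
    return time() - $t0;
}

# 32 equal-sized jobs (an arbitrary choice); tune these for your own machine.
my @jobs = (500_000) x 32;
my $baseline = time_run(1, @jobs);
printf "%2d process(es): %6.2f s\n", 1, $baseline;
for my $procs (4, 8, 16, 24) {
    my $elapsed = time_run($procs, @jobs);
    printf "%2d process(es): %6.2f s (speed-up %.1fx)\n",
        $procs, $elapsed, $baseline / $elapsed;
}
```

Using a single child process as the baseline, rather than a plain loop with no forking, keeps the cost of forking on both sides of each comparison, so the speed-up figures reflect the parallelism alone.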