10th July 2013 One thing that I like to do is peruse the installed applications on any computer system. In most cases, this is simple enough to do but there are some who appear to believe in doing away with that in favour of text box searching. It also seems that the GNOME team has fallen into that trap with version 3.8 of GNOME Shell. You could add the Applications Menu extension that is formally part of the GNOME Shell Classic interface and I have done this too. However, that has been known to freeze the desktop session so I am not that big a fan of it.

However, there is a setting that brings back those application categories in the overview screen and it can be set using dconf-editor. After opening up the application, navigate to org > gnome > shell using the tree in the left hand panel of the tool. Editing the app-folder-categories entry in the right hand panel is what adds the categories back for you. The default is ['Utilities', 'Sundry'] and this needs to be changed to ['Utilities', 'Games', 'Sundry', 'Office', 'Network', 'Internet', 'Graphics', 'Multimedia', 'System', 'Development', 'Accessories', 'System Settings', 'Other'].
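For anyone who prefers a terminal to dconf-editor, the same change can probably be made with the gsettings tool. What follows is only a minimal sketch: it assumes that the key is exposed under the org.gnome.shell schema (which is what the dconf path above suggests) and that gsettings is installed. The variable name is just for illustration.

```shell
#!/usr/bin/env bash
# Sketch: set the overview categories from a terminal instead of dconf-editor.
# Assumption: the app-folder-categories key lives in the org.gnome.shell schema.

# The list of categories quoted in the text above, in GVariant array syntax.
categories="['Utilities', 'Games', 'Sundry', 'Office', 'Network', 'Internet', 'Graphics', 'Multimedia', 'System', 'Development', 'Accessories', 'System Settings', 'Other']"

if command -v gsettings >/dev/null 2>&1; then
    # Print the current value first so that it can be restored later if needed.
    gsettings get org.gnome.shell app-folder-categories
    gsettings set org.gnome.shell app-folder-categories "$categories"
else
    # Without gsettings, only report what would have been set.
    echo "gsettings not found; the value to set would be: $categories"
fi
```

Should the result not be to your liking, running gsettings reset org.gnome.shell app-folder-categories ought to bring back the default.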

Once the above has been completed, a change is noticeable in the application overview screen: application icons sit in the middle with a list of categories down the right hand side. Clicking on a category brings up a new panel that contains the applications within it and this can be closed again. Cycling through the categories is a process of opening and closing the different panels. The behaviour may have changed but the functionality remains and I have heard that this will be polished further in release 3.10 of GNOME Shell.

For those wanting to exit all of this and get something like the old GNOME 2, it is possible to add the Classic Session. In Fedora 19, it’s a matter of issuing something like the following command:

sudo yum -y install gnome-classic-session

In reality, this is a case of adding a number of extensions and changing the panel colour from black to grey but it works without needing the category tweak that I described above. The Applications Menu extension does need more stability hardening before I’d trust it completely though. There’s no point having a nicer interface if it’s going to freeze up on you too often.

6th April 2015 Last week, I kept getting a multitude of messages from Ubuntu’s crash reporting tool, Apport. So many would appear at once on reaching the desktop session during system start-up that I actually downloaded an installation ISO disk image with the intention of performing a fresh installation to rid myself of the problem. In the end, it never came to that because another remedy produced the result that I needed.

Emptying /var/crash, where Apport keeps its crash reports, was a start but it did not do what I needed so I disabled Apport altogether. This meant editing its configuration file, which is named apport and is found in /etc/default/. The following command should open it up in Gedit on supplying your password:

gksudo gedit /etc/default/apport

With the file opened, look for the line with enabled=1 and change this to enabled=0. Once that is done, restart Apport as follows:

sudo restart apport

This will need your account password to be supplied before it will act and no more messages should appear thereafter. Of course, I would not have done this if there was a real system problem but my Ubuntu GNOME installation was and is working smoothly so it is the remedy that I needed. The idea behind the tool is that Ubuntu developers get information on any application crashes but I find that it directs me to the Ubuntu Launchpad bug reporting website and that requires a user name and password for the information to be processed. For some reason, that is enough to stall me and I wonder if there could be a way of getting developers what they need without adding that extra manual step. Then, more information gets supplied and we get a more stable operating system in return.
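The change to /etc/default/apport described above could also be made without opening an editor. Here is a minimal sketch using sed; the function name and the scratch file are only there for illustration, and the demonstration works on a temporary copy so that nothing under /etc gets touched.

```shell
#!/usr/bin/env bash
# Sketch: switch Apport off by rewriting the enabled= line in its defaults file.

disable_apport() {
    local config_file="$1"
    # Rewrite "enabled=1" to "enabled=0"; every other line is left alone.
    sed 's/^enabled=1$/enabled=0/' "$config_file" > "${config_file}.new" &&
        mv "${config_file}.new" "$config_file"
}

# Demonstration on a scratch file that mimics the real one.
demo_file="$(mktemp)"
printf '# set this to 0 to disable apport\nenabled=1\n' > "$demo_file"
disable_apport "$demo_file"
cat "$demo_file"
```

On a real system, something like sudo sed -i 's/^enabled=1$/enabled=0/' /etc/default/apport should have the same effect while leaving the file’s ownership and permissions alone, after which Apport can be restarted as before.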

18th September 2016 It was a change of job in 2010 that got me interested in using devices with internet connectivity on the go. Until then, the attraction of smartphones had not been strong, but I got myself a Blackberry on a pay as you go contract. However, the entry device was painfully slow and the connectivity was 2G. It was a very sluggish start.

It was supplemented by an Asus Eee PC that I connected to the internet using broadband dongles and a Wi-Fi hub. This cumbersome arrangement did not work well on short journeys and the variability of mobile network reception even meant that longer journeys were not all that successful either. Usage in hotels and guest houses though went better and that has meant that the miniature laptop came with me on many a journey.

In time, I moved away from broadband dongles to using smartphones as Wi-Fi hubs and that largely is how I work with laptops and tablets away from home unless there is hotel Wi-Fi available. Even trips overseas have seen me operate in much the same manner.

One feature is that we seem to carry quite a number of different gadgets with us at a time and that can cause inconvenience when going through airport security since they want to screen each device separately. When you are carrying a laptop, a tablet, a phone and a camera, it does take time to organise yourself and you can meet impatient staff, as I found recently when returning from Oslo. Checking in whatever you can as hold luggage helps to get around at least some of the nuisance and it might be time for the use of better machinery to cut down on having to screen everything separately.

When you come away after an embarrassing episode as I once did, the attractions of consolidating devices start to become plain. In fact, most probably could get by with having just their phone. It is when you take activities like photography more seriously that the gadget count increases. After all, the main reason a laptop comes on trips beyond Britain and Ireland at all is to back up photos from my camera in case an SD card fails.

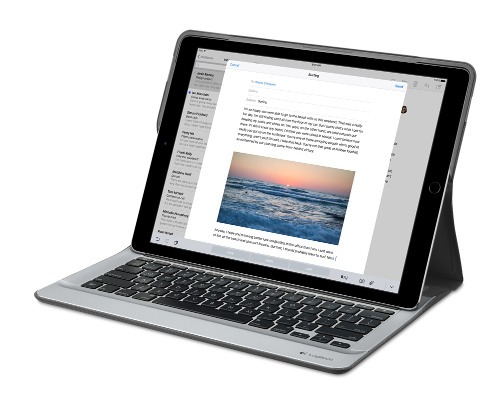

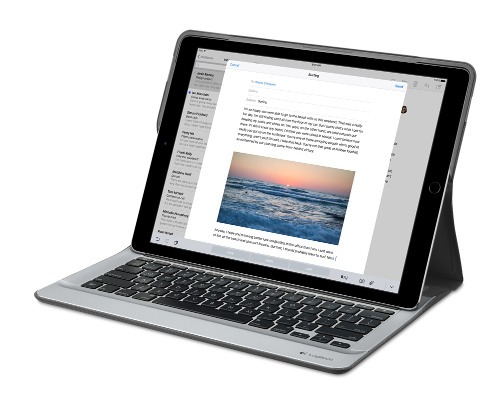

Parking that thought for a while, let’s go back to March this year when temptation overcame what should have been a period of personal restraint. The result was that a 32 GB 12.9″ Apple iPad Pro came into my possession along with an Apple Pencil and a Logitech CREATE Backlit Keyboard Case. It should have done so, but the size of the screen did not strike me until I got it home from the Apple Store. That was one of the main attractions because maps can be shown with a greater field of view in a variety of apps, a big selling point for a hiker with a liking for maps, who wants more than what is on offer from Apple, Google or even Bing. The precision of the Pencil is another boon that makes surfing the web so much easier and the solid connection between the case and the iPad means that keyboard usage is less fiddly than it would be if it used Bluetooth. Having tried them with the BBC iPlayer app, I can confirm that the sound from the speakers is better than that from any other mobile device that I have used.

Already, it has come with me on trips around England and Scotland. These weekend trips saw me leave the Asus Eee PC at home when it normally might have come with me and taking just a single device along with a camera or two had its uses too. The screen is large for reading on a train but I find that it works just as well so long as you have enough space. Otherwise, combining use of a suite of apps with recourse to the web does much of the information seeking needed while on a trip away and I was not found wanting. Battery life is good too, which helps.

Those trips allowed for a little light hotel room blog post editing too and the iPad Pro did what was needed, though the ergonomics of reaching for the screen with the Pencil meant that my arm was held aloft more than was ideal. Another thing that raised questions in my mind is the appearance of word suggestions at the bottom of the screen as if this were a mobile phone since I wondered if these were more of a hindrance than a help given that I just fancied typing and not pointing at the screen to complete words. Copying and pasting works too but I have found the screen-based version a little clunky so I must see if the keyboard one works just as well, though the keyboard set up is typical of a Mac so that affects word selection. You need to use the OPTION key in the keyboard shortcut that you use for this and not COMMAND or CONTROL as you might do on a PC.

Even with these eccentricities, I was left wondering if it had any utility when it came to backing up photos from digital cameras and there is an SD card adapter that makes this possible. A failure of foresight on my part meant that the 32 GB capacity now is an obvious limitation but I think I might have hit on a possible solution that does not need to upload to an iCloud account. It involves clearing off the photos onto a 128 GB Transcend JetDrive Go 300 so they do not clog up the iPad Pro’s storage. That the device has both Lightning and USB connectivity means that you can plug it into a laptop or desktop PC afterwards too. If that were to work as I would hope, then the laptop/tablet combination that I have been using for all overseas trips could be replaced to allow a weight reduction as well as cutting the hassle at airport security.

Trips to Ireland still may see me sticking with a tried and tested combination though because I often have needed to do some printing while over there. While I have been able to print a test document from an iPad Mini on my home network-connected printer, not every model supports this and support for NFC or AirPrint is not universal either. If this were not an obstacle, apps like Pages, Numbers and Keynote could have their uses for business-related work and there are web-based offerings from Google, Microsoft and others too.

In conclusion, I have found that my iPad Pro does so much of what I need on a trip away that retiring the laptop/tablet combination for most of these is not as outrageous as it once would have seemed. In some ways, iOS has a way to go yet before it could take over from macOS but it remains in development so it will be interesting to see what happens next. All the while, hybrid devices running Windows 10 are becoming more pervasive and that might provide Apple with the encouragement that it needs.

21st January 2024 OWASP stands for Open Web Application Security Project, and it is an online community dedicated to web application security. They are well known for their Top 10 Web Application Security Risks and late last year, they added a Top 10 for Large Language Model (LLM) Applications.

Given that large language models made quite a splash last year, this was not before time. While ChatGPT gained a lot of attention (OpenAI also has had DALL-E for generation of images for quite a while now), there are many others, with Anthropic Claude and Perplexity also being mentioned more widely.

Figuring out what to do with any of these is not as easy as one might think. For someone more used to working with computer code, using natural language requests is quite a shift when you no longer have documentation that tells you what can and what cannot be done. It is little wonder that prompt engineering has emerged as a way to deal with this.

Others have been plugging LLM capability into chatbots and other applications, so security concerns have come to light. So far, I have not heard anything about a major security incident, but some are thinking already about how to deal with AI-suggested code that others already are using more and more.

Given all that, here is OWASP’s summary of their Top 10 for LLM Applications. This is a subject that is sure to draw more and more interest with the increasing presence of artificial intelligence in our everyday working and non-working lives.

LLM01: Prompt Injection

This manipulates an LLM through crafty inputs, causing unintended actions by the LLM. Direct injections overwrite system prompts, while indirect ones manipulate inputs from external sources.

LLM02: Insecure Output Handling

This vulnerability occurs when an LLM output is accepted without scrutiny, exposing backend systems. Misuse may lead to severe consequences such as Cross-Site Scripting (XSS), Cross-Site Request Forgery (CSRF), Server-Side Request Forgery (SSRF), privilege escalation, or remote code execution.

LLM03: Training Data Poisoning

This occurs when LLM training data is tampered with, introducing vulnerabilities or biases that compromise security, effectiveness, or ethical behaviour. Sources include Common Crawl, WebText, OpenWebText and books.

LLM04: Model Denial of Service

Attackers cause resource-heavy operations on LLMs, leading to service degradation or high costs. The vulnerability is magnified due to the resource-intensive nature of LLMs and the unpredictability of user inputs.

LLM05: Supply Chain Vulnerabilities

The LLM application lifecycle can be compromised by vulnerable components or services, leading to security attacks. Using third-party datasets, pre-trained models, and plugins can add vulnerabilities.

LLM06: Sensitive Information Disclosure

LLMs may inadvertently reveal confidential data in their responses, leading to unauthorized data access, privacy violations, and security breaches. It’s crucial to implement data sanitization and strict user policies to mitigate this.

LLM07: Insecure Plugin Design

LLM plugins can have insecure inputs and insufficient access control. This lack of application control makes them easier to exploit and can result in consequences such as remote code execution.

LLM08: Excessive Agency

LLM-based systems may undertake actions leading to unintended consequences. The issue arises from excessive functionality, permissions, or autonomy granted to the LLM-based systems.

LLM09: Overreliance

Systems or people overly depending on LLMs without oversight may face misinformation, miscommunication, legal issues, and security vulnerabilities due to incorrect or inappropriate content generated by LLMs.

LLM10: Model Theft

This involves unauthorized access, copying, or exfiltration of proprietary LLM models. The impact includes economic losses, compromised competitive advantage, and potential access to sensitive information.

28th January 2012 The computer on which I am writing these words is running Linux Mint with the Cinnamon desktop environment, a fork of GNOME Shell. This looks as if it is going to be the default face of GNOME 3 in the next version of Linux Mint with the MGSE dressing up of GNOME Shell looking more and more like an interim measure until something more consistent was available. Some complained that what was delivered in version 12 of the distribution was a sort of greatest hits selection but I reckon that bets were being hedged by the project team.

Impressions of what’s coming

By default, you get a single panel at the bottom of your screen with everything you need in there. However, it is possible to change the layout so that the panel is at the top or there are two panels, one at the top and the other at the bottom. So far, there is no means of configuring which panel applet goes where as was the case in Linux Mint 11 and its predecessors. However, the default placements are very sensible so I have no cause for complaint at this point.

Just because you cannot place applets doesn’t mean that there is no configurability though. Cinnamon is extensible and you can change the way that time is displayed in the clock as well as enabling additional applets. It also is possible to control visual effects such as the way new application windows pop up on a screen.

GNOME 3 is there underneath all of this though there’s no sign of the application dashboard of GNOME Shell. The continually expanding number of slots in the workspace launcher is one sign as is the enabling of a hotspot at the top right hand corner by default. This brings up an overview screen showing what application windows are open in a workspace. The new Mint menu even gets the ability to search through installed applications together with the ability to browse through what’s available.

In summary, Cinnamon already looks good though a little polish and extra configuration options wouldn’t go amiss. An example of the former is the placement of desktop numbers in the workspace switcher and I already have discussed the latter. It does appear that the Linux Mint approach to desktop environments is taking shape with a far more conventional feel than the likes of Unity or GNOME Shell. Just as Cinnamon has become available in openSUSE, I can see it gracing LMDE too whenever Debian gets to moving over to GNOME 3 as must be inevitable now unless they take another approach such as MATE.

In comparison with revolution

While Linux Mint are choosing convention and streamlining GNOME to their own designs, it seems that Ubuntu’s Unity is getting ever more experimental as the time when Ubuntu simply evolved from one release to the next becomes an increasingly distant memory. The latest development is the announcement that application menus could get replaced by a heads up display (HUD). That would be yet another change made by what increasingly looks like a top down leadership reminiscent of what exists at Apple. While it is good to have innovation, you have to ask where users fit in all of this but Linux Mint already has gained from what has been done so far and may gain more again. Still, seeing what happens to Ubuntu sounds like an interesting pastime though I’m not sure that I’d be depending on the default spin of this distro as my sole operating system right now. Also, changing the interface every few months wouldn’t work in a corporate environment at all so you have to wonder where Mark Shuttleworth is driving all this though Microsoft is engaging in a bit of experimentation of its own. We are living in interesting times for the computer desktop and it’s just as well that there are safe havens like Linux Mint too. Watching from afar sounds safer.

28th March 2008 Putting this blog back on its feet after a spot of web hosting bother caused me to learn a bit more about web hosting than I otherwise might have done. Here’s a selection of those lessons and they are in no particular order:

- Store your passwords securely and where you can find them because you never know how a foul up of your own making can strike. For example, a faux pas with a configuration file is all that’s needed to cause havoc for a database site such as a WordPress blog. After all, nobody’s perfect and your hosting provider may not get you out of trouble as quickly as you might like.

- Get a MySQL database or equivalent as part of your package rather than buying one separately. If your provider allows a trial period, then changing from one package to another could be cheaper and easier than if you bought a separate database and needed to jettison it because you changed from, say, a Windows package to a Linux one or vice versa.

- It might be an idea to avoid a reseller unless the service being offered is something special. Going for the sake of lower cost can be a false economy and it might be better to cut out the middleman altogether and go direct to their provider. Being able to distinguish a reseller from a real web host would be nice but I don’t see that ever becoming a reality; it is hardly in resellers’ interests, after all.

- Should you stick with a provider that takes several days to resolve a serious outage? The previous host of this blog had a major MySQL server outage that lasted for up to three days and seeing that was one of the factors that made me turn tail to go to a more trusted provider that I have used for a number of years. The smoothness of the account creation process might be another point worthy of consideration.

- Sluggish system support really can frustrate, especially if there is no telephone support provided and the online ticketing system seems to take forever to deliver solutions. I would advise strongly that a host who offers a helpline is a much better option than someone who doesn’t. Saying all of that, I think that it’s best to be patient and, when your website is offline, that might not be as easy as you’d hope it to be.

- Setting up hosting or changing from one provider to another can take a number of days because of all that needs doing. So, it’s best to allow for this and plan ahead. Account creation can be very quick but setting up the website can take time while domain name transfer can take up to 24 hours.

- It might not take the same amount of time to set up Windows hosting as its Linux equivalent. I don’t know if my experience was typical but I have found that the same provider set up Linux hosting far quicker (within 30 minutes) than it did for a Windows-based package (several hours).

- Be careful what package you select; it can be easy to pick the wrong one depending on how your host’s site is laid out and what they are promoting at the time.

- You can have a Perl/PHP/MySQL site working on Windows, even with IIS being used in place of Apache. The Linux/Apache/Perl/PHP/MySQL approach might still be better, though.

- The Windows option allows for ASP, .Net and other such Microsoft technologies to be used. I have to say that my experience and preference is for open source technologies so Linux is my mainstay but learning about the other side can never hurt from a career point of view. After all, I am writing this on a Windows Vista powered laptop to see how the other half live as much as anything else.

- Domains serviced by hosting resellers can be visible to the systems of those from whom they buy their wholesale hosting. This frustrated my initial attempts to move this blog over because I couldn’t get an account set up for technologytales.com because a reseller had it already on the same system. It was only when I got the reseller to delete the account with them that things began to run more smoothly.

- If things are not going as you would like them, getting your account deleted might be easier than you think so don’t procrastinate because you think it a hard thing to do. Of course, it goes without saying that you should back things up beforehand.

12th March 2008 An idea recently popped into my head for my hillwalking website: collecting a listing of bus services of use and interest to hillwalkers. Being rural, these services may not get the publicity that they deserve. In addition, they are generally subsidised so any increase in their patronage can only help maintain their survival.

Currently, the list lives on a page in the blog but another thought has come to mind: using WordPress to host the list as a series of log entries, a sort of blog if you like. Effectively, that would involve having two blogs on the same website and it can be done. One way is to set up two instances of WordPress and they could work from the same database; the facility for this is allowed by the ability to use different table prefixes for the different blogs so that there are no collisions. There’s nothing to stop you having two databases but your hosting provider may charge extra for this. This set up will work but there is a caveat: you now have two blogs to maintain and, with regular WordPress releases, that means an extra overhead. Apart from that, it’s a workable approach.
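To illustrate the table prefix idea, each WordPress instance carries a $table_prefix line in its wp-config.php file, with wp_ being the usual default. The following is only a rough sketch: the directory names, the bus_ prefix and the helper function are all hypothetical, and it works on scratch files and not on a live installation.

```shell
#!/usr/bin/env bash
# Sketch: give a second WordPress instance its own table prefix so that both
# blogs can share one database without their tables colliding.

set_table_prefix() {
    local config_file="$1" prefix="$2"   # prefix: letters, digits and underscores only
    # Replace the existing $table_prefix line with one using the new prefix.
    sed "s/^\\\$table_prefix *=.*/\$table_prefix = '${prefix}';/" "$config_file" \
        > "${config_file}.new" && mv "${config_file}.new" "$config_file"
}

# Two stand-in installations, each starting with the default wp_ prefix.
demo_dir="$(mktemp -d)"
for blog in main buslog; do
    mkdir -p "${demo_dir}/${blog}"
    printf "%s\n" "\$table_prefix = 'wp_';" > "${demo_dir}/${blog}/wp-config.php"
done

# Only the second blog gets a different prefix (bus_ is a made-up example).
set_table_prefix "${demo_dir}/buslog/wp-config.php" "bus_"
grep -H 'table_prefix' "${demo_dir}"/*/wp-config.php
```

In practice, the prefix would be chosen before running the installer for the second blog, since changing it afterwards means renaming the tables already in the database as well.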

Another option is to use WordPress MU. That would cut down on the maintenance but there are costs here too. Its need for virtual hosts is a big one. If my experience is any guide, you probably need a dedicated server to go down this route and they aren’t that cheap. I needed to do a spot of Apache configuration and some editing of my hosts file to get my own installation off the ground; I don’t reckon that would be an option with shared hosting. Once I sorted out the hosts with a something.something.else address, set up was very quick and easy.

Apart from a tab named Site Admin, the administration dashboard isn’t all that different from a standard WordPress 2.3.x arrangement. In the extra tab, you can create blogs and users, control blogs and themes as well as upgrade everything in a single step. Themes and plugins largely work as usual from an administration point of view. With plugins, you have just to try them and see what happens; one adding FCKEditor threw an error while the editor window was loading but it otherwise worked OK. I had no trouble at all with themes so all looks very well on that front.

Importing and editing posts worked as usual but for two perhaps irritating behaviours: tags are, not unreasonably, removed from titles and inline style and class declarations are removed from tags in the body of a blog entry. Both could be resolved by post processing in the blog’s theme but the Sniplets plugin allows a better way out for the latter and I have been putting it to good use.

In summary, WordPress MU worked well and looks a very good option for multi-blog sites. However, the need for a dedicated server and the quirks that I have seen when it comes to handling post contents keep me away from using it for production blogs for now. Even so, I’ll be retaining it as a test system anyway. As regards the country bus log, I think that I’ll be sticking with the blog page for the moment.

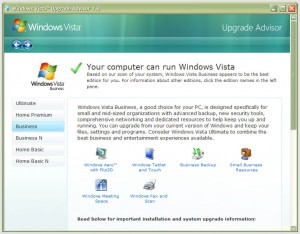

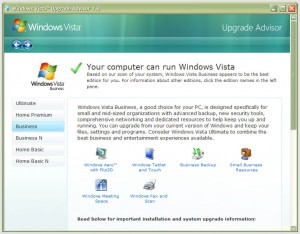

27th January 2007 Following the arrival of Vista, some are probably planning to upgrade straight away; I think that I’ll wait a while. As it happens, we are using Windows 2000 where I work and the ending of Microsoft’s support for this now elderly workhorse is driving a deployment of Windows Vista across the company that is due to start in the summer, a very quick turnaround in IT terms. Given that it wants people to upgrade in order to keep its coffers full, Microsoft has made a tool available to test for Vista readiness. Oddly, you have to install it after download. I would have thought that a tool like this should run without installation but there you go. Running it tells you the best version of Vista for you and any actions needed on your part. Vista Business edition was suggested as best for me and the deficiencies included: hard disk space on my Windows drive, a pair of incompatible devices and a number of applications whose compatibility could not be guaranteed. Curiously, some Microsoft packages turned up on the last list. As regards hardware, my sound card and scanner are the offending items. Sound cards are cheap if that needs to be replaced but I have onboard sound capability on my motherboard that can be instated if so required. Throwing away a perfectly good Canon scanner isn’t my idea of sustainable living so I have been on a trip to the Canon website in order to find out more. The good news is that a driver update sets everything in order though there are caveats for Vista 64 bit. All in all, a Vista upgrade is a goer.

20th January 2007 This morning, I got up to find my main computer powered off after I left it on overnight for a spyware scan by Webroot Spy Sweeper. After satisfying myself that it was dead, I tried popping a new fuse in the plug. What I saw next was far from being a pretty sight: shorting in the PSU. The fact that it took out a new 5 A fuse was neither here nor there (they are 20p apiece where I replenished my supply: they may be cheaper elsewhere but what’s 20p these days?); thoughts of fried PC hardware are far from pleasant, especially the vision of losing data and expensive software purchases because a hard drive got fried by a shorting PSU. A whole new bare bones system from the likes of Novatech was appearing very ominously on my horizon.

There was only one thing for it: try another PSU and see if everything works. So, it was off to a nearby branch of PC World for a replacement. I know that there were other options but I preferred to get this problem sorted out pronto to put my mind at ease, if at all possible. The old PSU got taken out and the new one plugged in as part of pre-installation testing. Thankfully, I saw the Windows start up screen and the omens were good; it later turned out that my data were safe too. Initial problems with keyboard and mouse recognition were resolved by a reboot, as was an IP address conflict that had resulted because my back up machine was on throughout all of this. All in all, things turned out well after a solid lesson in backing up data outside of the PC on which it resides. Maybe an online service such as Diino could be very useful.

I do seem to have an issue with PSUs giving up the ghost; maybe it’s the fact that I run them overnight a lot. This incident caused me to upgrade from a 450 W Jeantech unit to a 500 W one. The PSU that I had before the 450 W unit was higher rated but it couldn’t cope with the power demands of the machine it was powering up. The result was that it cut out a lot on start up, an annoying habit that I tolerated for longer than I really should. I’ll be keeping an eye on things as I go…

22nd March 2015 One thing that I notice with Firefox installations in both Ubuntu and Linux Mint is that a dialogue box appears when closing down the web browser asking whether to save the open session or if you want to have a fresh session the next time that you start it up. Initially, I was always in the latter camp but there are times when I took advantage of that session saving feature for retaining any extra tabs containing websites to which I want to return or editor sessions for any blog posts that I still am writing; sometimes, composing the latter can take a while.

To see where this setting is located, you need to open a new tab and type about:config in the browser’s address bar. This leads to advanced browser settings so you need to click OK in answer to a warning message before proceeding. Then, start looking for browser.showQuitWarning using the Search bar; it acts like a dynamic filter on screen entries until you get what you need. On Ubuntu and Linux Mint, the value is set to true but false is the default elsewhere; unlike Opera, Firefox generally does not save sessions by default unless you tell it to do that (at least, that has been my experience anyway). Changing true to false or vice versa will control the appearance or non-appearance of the dialogue box at browser session closure time.
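For those who want the preference to stick across several machines, it also could be set from a user.js file in the Firefox profile folder, which Firefox reads at start-up. The following is a minimal sketch; the function name is my own and the demonstration writes to a scratch folder, since the location of a real profile folder varies from one installation to another.

```shell
#!/usr/bin/env bash
# Sketch: pin browser.showQuitWarning through a profile's user.js file.

set_quit_warning() {
    local profile_dir="$1" value="$2"   # value: true or false
    # Append a user_pref line; Firefox applies user.js entries at each start-up.
    printf 'user_pref("browser.showQuitWarning", %s);\n' "$value" \
        >> "${profile_dir}/user.js"
}

# Demonstration against a scratch folder standing in for a profile folder.
demo_profile="$(mktemp -d)"
set_quit_warning "$demo_profile" true
cat "${demo_profile}/user.js"
```

Because user.js entries are reapplied every time Firefox starts, any later change made in about:config gets overridden until the line is removed from the file.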