TOPIC: MICROSOFT

On keyboards

17th April 2009

While there cannot be too many Linux users who go out and partner a Microsoft keyboard with their system, my recent cable-induced mishap has resulted in exactly that outcome. Keyboards are such standard items that it is hard to generate any excitement about them, apart from RSI-related concerns. While I wasn't about to go for something cheap and nasty that would do me an injury, going for something too elaborate wasn't part of the plan either, even if examples of that ilk from Microsoft and Logitech were sorely tempting.

Shopping in a bricks and mortar store, as I was, has its pluses and its minuses. The main plus is that you see and feel what you are buying, while the main drawback is that the selection on offer isn't likely to be as extensive as you'd find on the web, even though I was in a superstore. Despite that, there was still a good deal available. Though there were PS/2 keyboards for anyone needing them, USB ones seemed to be the main offer, with wireless examples showcased too. Strangely, the wireless ones were only available as kits with mice included, further adding to the cost of an already none too cheap item. The result was that I wasn't lured away from the wired option.

While I didn't emerge with what would have been my first choice because that was out of stock, that's not to say that what I have doesn't do the job for me. The key action is soft and cushioned, which is a change from that to which I am accustomed; some keyboards feel like they belong on a laptop, but not this one. There are other bells and whistles too, with a surprising number of them working. The calculator and email buttons number among these along with the play/pause, back and forward ones for a media player; I am not so convinced about the volume controls though an on-screen indicator does pop up. You'd expect a Microsoft item to be more Windows specific than others, yet mine works as well as anything else in the Ubuntu world and I have no reason to suspect that other Linux distros would spurn it either. Keyboards tend to be one of those "buy-it-and-forget-it" items, and the new arrival should be no different.

Running Windows 7 within VirtualBox

12th January 2009

With all the fanfare that surrounded the public beta release of Windows 7, I suppose that the opportunity to give it a whirl was too good to miss. Admittedly, Microsoft bodged the roll-out by underestimating the level of interest and corralling everyone into a 24-hour time slot, with one exacerbating the other. In the event, they did eventually get their act together and even removed the 2.5 million licence limit. I suppose that they really needed to get 7 right after the unloved offering that was Vista, so they probably worked out that the more testers they get, the better. After all, a cynic might observe that the era of making people pay to "test" your products may be behind us and that users just want things to work well, if not entirely faultlessly, these days.

After several abortive raids, I eventually managed to snag myself a licence and started downloading the behemoth using the supplied download manager. I foresaw it taking a long time and so stuck with the 32-bit variant so that this part of the process would not use up any more of my time than necessary. As it happened, the download did take quite a few hours to complete, but it passed without any incident or fuss.

Once the DVD image was downloaded, it was onto the familiar process of building myself a VirtualBox VM as a sandbox to explore the forthcoming incarnation of Windows. After setting up the ISO file as a virtual DVD, installation itself was an uneventful process, yet subsequent activities weren't without their blemishes. The biggest hurdle to be overcome was to get the virtual network adapter set up and recognised by Windows 7. The trick is to update the driver using the VirtualBox virtual CD as the source because Windows 7 will not recognise it using its own driver repository. Installing the other VirtualBox tools is a matter of going to the Compatibility page in the Properties for the relevant executable, the one with x86 in the file name in my case, and setting XP as the Windows version (though Vista apparently works just as well, I played safe and depended on my own experience). While I was at it, I allowed the file to run under the administrator account, too. Right-clicking on executable files will bring you to the compatibility troubleshooter that achieves much the same ends but by a different route. With the Tools installed, all was workable rather than completely satisfactory. Shared folders have not worked for me, but that might need a new version of the VirtualBox software or getting to know any changes to networking that come with Windows 7. I plan to stick with using USB drives for file transfer for the moment. Though stretching the screen to fit the VirtualBox window was another thing that would not happen, that's a much more minor irritation.

With those matters out of the way, I added security software from the list offered by Windows with AVG, Norton and Kaspersky being the options on offer. I initially chose the last of these but changed my mind after seeing the screen becoming so corrupted as to make it unusable. That set me to rebuilding the VM and choosing Norton 360 after the second Windows installation had finished. That is working much better, and I plan to continue my tinkering beyond this. I have noticed the inclusion of PowerShell and an IDE for the same, so that could be something that beckons. All in all, there is a certain solidity about Windows 7, though I am not so convinced of the claim of speedy startups at this stage. Time will tell and, being a beta release, it's bound to be full of debugging code that will not make it into the final version that is unleashed on the wider public.

Error: User does not have appropriate authorization level for library xxxx

25th June 2008

In a world where write access to a folder or directory is controlled by permission settings at the operating system level, a ready answer for when you get the above message in your log when creating a SAS data set would be to check your access. However, if you are working on Windows and your access seems fine, then SAS' generation of an access error message seems all the more perplexing.

Unlike the more black-and-white world of UNIX and Linux, Windows has other ways to change access that could throw things off from the straight and narrow. One of them, it would appear, is to right-click on the file listing pane in Windows Explorer and select "Customize this folder..." to change how it appears. The strange upshot of this is that a perpetual read-only flag is set for the folder in question, and that flag triggers SAS authorisation errors. The behaviour is very strange and unexpected when you find it, and the quickest and easiest solution sounds drastic. This involves deleting the folder and creating a new one in its place, saving anything that you want to retain in another temporary location. An alternative approach uses the attrib command and is less invasive.
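The attrib route can be scripted if there are several folders to fix. What follows is only a minimal Python sketch: the function names are hypothetical, the folder path in the usage note is a placeholder, and the choice of clearing both the read-only (-r) and system (-s) attributes is my assumption about which flags matter here, so check it against SAS' and Microsoft's own notes before relying on it.

```python
import subprocess
import sys
from typing import List


def attrib_clear_command(folder: str) -> List[str]:
    """Build an attrib call that clears the read-only and system attributes.

    Hypothetical helper: -r and -s are standard attrib switches, but whether
    both need clearing for the SAS error is an assumption.
    """
    return ["attrib", "-r", "-s", folder]


def clear_folder_flags(folder: str) -> None:
    """Run attrib against the folder; the command only exists on Windows."""
    if sys.platform != "win32":
        raise OSError("attrib is a Windows command")
    subprocess.run(attrib_clear_command(folder), check=True)
```

On a Windows machine, something like `clear_folder_flags(r"C:\sasdata")` would then run the command against that (made-up) folder, leaving its contents alone.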

It raises the question of why Microsoft is re-appropriating a flag used for access purposes to determine whether the HTML components of a folder display have been changed or not. This is very strange stuff and does not look like good software design at all. With all the other problems Microsoft creates for itself, I am not holding my breath until it's fixed, either. There seem to be other things like this waiting to catch you out when using Windows SAS, and a good place to start is SAS' own description of the problem that I have just shared.

System error codes for Windows

9th May 2008

Windows system error codes can be indecipherable, so it's useful to have a list. Microsoft has one on its Microsoft Learn website that may help. The decodes may not be as explicit as I would like, but they're better than nothing when you don't get anything other than the number.
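For the handful of codes that crop up most often, a small lookup saves a trip to the list. The Python sketch below is an illustration rather than anything official: the table holds only a few well-known entries, and the function name is my own. On Windows, it falls back on `ctypes.FormatError` for codes not in the table; elsewhere, it just points back to the list.

```python
import sys

# A tiny excerpt of well-known codes; the full list lives on Microsoft Learn.
KNOWN_CODES = {
    0: ("ERROR_SUCCESS", "The operation completed successfully."),
    2: ("ERROR_FILE_NOT_FOUND", "The system cannot find the file specified."),
    3: ("ERROR_PATH_NOT_FOUND", "The system cannot find the path specified."),
    5: ("ERROR_ACCESS_DENIED", "Access is denied."),
    87: ("ERROR_INVALID_PARAMETER", "The parameter is incorrect."),
}


def describe_error(code: int) -> str:
    """Return a readable description for a Windows system error code."""
    if code in KNOWN_CODES:
        name, message = KNOWN_CODES[code]
        return f"{code} ({name}): {message}"
    if sys.platform == "win32":
        # Ask Windows itself for the message text (Windows-only function)
        import ctypes
        return f"{code}: {ctypes.FormatError(code)}"
    return f"{code}: not in the local table, check the Microsoft Learn list"


if __name__ == "__main__":
    print(describe_error(5))
```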

Running Photoshop Elements 5 using WINE on Ubuntu and openSUSE

23rd January 2008

When you buy a piece of software and get accustomed to its ways of working, it is natural to want to continue using it. That applied to a number of applications when I moved over to Linux in the latter half of last year, and one of these was Adobe's Photoshop Elements 5.0, a purchase made earlier in the year. My way forward was to hang on to Windows by way of VMware. However, Elements fails to edit or save files in the Linux file system accessed through VMware's shared folders feature. I have yet to work out what's happening, but the idea of using a more conventional networking arrangement has come to mind.

Another idea that intrigued me was that of using WINE, the Windows API compatibility layer for Linux (strictly speaking, it is not an emulator). You can get it in the Ubuntu and openSUSE software repositories, but the WINE website has more to say on the subject. That's only the first stage, though, as you might see from WINE's Wiki page on Photoshop and its like. However, their advice is a spot incomplete, so I'll make it more explicit here. You need to run Winetricks from its online home as follows:

wget kegel.com/wine/winetricks; sh winetricks fakeie6

wget kegel.com/wine/winetricks; sh winetricks mdac28

wget kegel.com/wine/winetricks; sh winetricks jet40

The first line flicks a switch to fool Microsoft components into installing as though they were going onto a Windows system with IE on board. Without this, the rest will not happen. The second installs Microsoft's native ODBC drivers; Elements will not function at all without these if my experience is any guide. The last step is to add JET support so that Elements' Organiser can get going. With all of these in place, having a working Photoshop Elements instance under Linux should be a goer. Apart from the odd crash, things seem to be working OK on Ubuntu, and openSUSE seems hospitable too. Further experimentation may reveal more.

Update: The WINE Wiki has now been updated (and links back here!). As per dank's comment, the above lines can be condensed into what you see below:

wget kegel.com/wine/winetricks; sh winetricks fakeie6 mdac28 jet40

When desktop.ini files appear on the Windows Vista desktop...

14th January 2008

Being an experienced computer user, I set Windows Explorer to display hidden files when using a Windows PC. However, on my Vista-empowered laptop, that causes two desktop.ini files to appear on the desktop, one for all users and one for my user account. Moreover, displaying hidden files does not seem to be something that you can do on a folder-by-folder basis. With XP, this did not cause hidden files to appear on your desktop like this, so the behaviour could be seen as a step backwards.

A spot of googling exposed me to some trite suggestions regarding re-hiding the files, but deleting them seems to be the only way out. Despite the dire warnings being issued, there didn't seem to be any untoward problems caused by my actions. For now, I'll see if they stay away, yet episodes like this do make me wonder if it is time for Microsoft to stop treating us like idiots and give us things that work the way in which we want them to function. Well, I'm glad that Linux is the linchpin of my home computing world...

Onto Norton 360…

20th October 2007

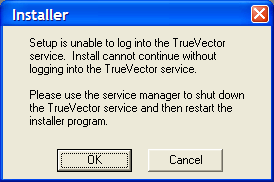

ZoneAlarm cut off VMware's access to the internet, so it was time to reinstall it. However, I messed up the reinstallation and now there seems no way to reinstate things like they were without tampering with my Windows XP installation status, and I have no intention of doing that. The thing seems to think that it can start a TrueVector service that does not exist.

Since I have to have some security software on board, I made a return to the Symantec fold with my purchase of Norton 360. That does sound extreme, but I have been curious about the software for a while now. You get the usual firewall, antivirus and antispam functions with PC tuning, anti-phishing and backup features available as well. It is supposed to be unobtrusive, so we'll see how it goes from here.

Update:

PC Pro rates the software highly, while Tech.co.uk accuses it of being bloatware. Nevertheless, the only issue that I am having with it is its insistence on having Microsoft Update turned on. For now, I am sticking with Shavlik's NetChk Protect, especially seeing what Microsoft has been doing with its update service. Have a look at Windows Secrets.com to see what I mean. Other than that, it seems to be working away in the background without intruding at all.

How to map network drives using the Windows command line

5th September 2007

Mapping network drives on Windows usually involves shuffling through Explorer menus. There is another way that I consider to be neater: using the Windows command line ("DOS" to some). The basic command for creating a mapping goes like this:

net use w: \\yourserver.address

To ensure persistence of the mapping across different Windows sessions, use this:

net use w: \\yourserver.address /persistent:yes

Here's how to set up a mapping that logs in as a different user:

net use w: \\yourserver.address password /user:you

The above can include domain information as well, and in a number of different forms: domain\username is one.

To delete a mapping, try this:

net use w: /delete

List all existing mappings:

net use

This is a flavour of what is available, and Microsoft does provide documentation. Issuing the following command will bring up some of that on the command line:

net help use
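For anyone wanting to script these mappings, the commands above can be assembled programmatically. What follows is a minimal Python sketch and nothing more: the function names and parameters are hypothetical, the server address is the same placeholder used above, and I am assuming that the password goes straight after the share name as in the earlier example. Building the argument list works anywhere, but running it needs Windows.

```python
import subprocess
import sys
from typing import List, Optional


def net_use_command(
    drive: str,
    share: Optional[str] = None,
    user: Optional[str] = None,
    password: Optional[str] = None,
    persistent: Optional[bool] = None,
    delete: bool = False,
) -> List[str]:
    """Build a `net use` argument list (hypothetical helper, not a Microsoft API)."""
    command = ["net", "use", drive]
    if delete:
        # Equivalent to: net use w: /delete
        return command + ["/delete"]
    if share is None:
        raise ValueError("a network path is needed to create a mapping")
    command.append(share)
    if password is not None:
        # The password is positional and follows the network path
        command.append(password)
    if user is not None:
        # May also carry a domain, e.g. domain\username
        command.append(f"/user:{user}")
    if persistent is not None:
        command.append("/persistent:yes" if persistent else "/persistent:no")
    return command


def run(command: List[str]) -> None:
    """Execute the command; `net use` only exists on Windows."""
    if sys.platform != "win32":
        raise OSError("net use is a Windows command")
    subprocess.run(command, check=True)
```

Passing a list to `subprocess.run` rather than a single string avoids having to worry about quoting passwords or paths that contain spaces.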

Troubleshooting missing HAL.DLL and boot configuration issues in Windows XP

2nd August 2007

My PC is very poorly at the moment and Windows XP re-installation is the prescribed course of action. However, I have been getting errors reporting a missing or damaged HAL.DLL at the first reboot of the system during installation. Because I thought that there might be hard disk confusion, I unplugged all but the Windows boot drive. That only gave me an error about hard drives not being set up properly. Thankfully, a quick outing on Google turned up a few ideas. However, I should really have started with Microsoft, since they have an article on the problem. About.com has also got something to offer on the subject and seems to be a good resource on installing XP to boot: I had forgotten how to do a repair installation and couldn't find the place in the installation menus. In any event, a complete refresh should be a good thing in the long run, even if it will be a very disruptive process. While I did consider moving to Vista at that point, bringing XP back online seemed the quickest route to getting things back together again. Strangely, I feel like a fish out of water right now, but that'll soon change...

Update: It was, in fact, my boot.ini that was causing this and replacement of the existing contents with defaults resolved the problem...
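For reference, the sketch below renders what a default boot.ini typically looks like for a machine with a single disk and XP on its first partition. It is an illustration under assumptions and not a copy of my own file: the function name is hypothetical, and the disk and partition numbers, the timeout and the description string all vary from one machine to another.

```python
def default_boot_ini(
    disk: int = 0,
    partition: int = 1,
    description: str = "Microsoft Windows XP Professional",
    timeout: int = 30,
) -> str:
    """Render a typical single-OS boot.ini (assumed layout; adjust to your disks)."""
    # ARC path naming the disk and partition that hold the WINDOWS folder
    arc_path = f"multi(0)disk(0)rdisk({disk})partition({partition})\\WINDOWS"
    lines = [
        "[boot loader]",
        f"timeout={timeout}",
        f"default={arc_path}",
        "[operating systems]",
        f'{arc_path}="{description}" /fastdetect',
    ]
    # boot.ini is a DOS-style text file, hence the CRLF line endings
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    print(default_boot_ini())
```

If Windows lives on a different disk or partition, the numbers in the ARC path need changing to match, which is exactly the kind of mismatch that can produce the HAL.DLL error.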

Ditching PC Plus?

28th June 2007

When I start to lose interest in the features in a magazine that I regularly buy, then it's a matter of time before I stop buying the magazine altogether. Such a predicament is facing PC Plus, a magazine that I have been buying every month over the last ten years. The same fate has already befallen titles like Web Designer, Amateur Photographer and Trail, all of which I now buy sporadically.

Returning to PC Plus, I get the impression that it feels more of a lightweight these days. Over the last decade, Future Publishing has been adding titles to its portfolio that actually take away from its long-established stalwart. Linux Format and .Net are two that come to mind, while there are titles covering Windows Vista and computer music as well. In short, there may be sense in having just a single title for all things computing.

Being a sucker for punishment, I did pick up this month's PC Plus, only for the issue to be as good an example of the malaise as any. Reviews, once a mainstay of the title, are now less prominent than they were. In place of comparison tests, we now find discussions of topics like hardware acceleration, with some reviews mixed in. Topics such as robotics and artificial intelligence do rear their heads in feature articles, though I cannot say that I have a great deal of time for such futurology. The section containing tutorials remains, even if it has been hived off into a separate mini-magazine, and seemingly fails to escape the lightweight revolution.

All this is leading me to dump PC Plus in favour of PC Pro from Dennis Publishing. This feels reassuringly more heavyweight and, while the basic format has remained unchanged over the years, it still manages to remain fresh. Reviews, of both software and hardware, are very much in evidence while it manages to have those value-adding feature articles; this month, digital photography and rip-off Britain come under the spotlight. Add the Real World Computing section, and it all makes a good read in these times of behemoths like Microsoft, Apple and Adobe delivering new things on the technology front. While I don't know if I have changed, PC Pro does seem better than PC Plus these days.