TOPIC: LIVE USB

What I learned from manually upgrading to Linux Mint 11

31st May 2011

For a Linux distribution that focuses on user-friendliness, it does surprise me that Linux Mint offers no seamless upgrade path. In fact, the underlying philosophy is that upgrading an operating system is a risky business. However, I have been doing in-situ upgrades with both Ubuntu and Fedora for a few years without any real calamities. A mishap with a hard drive that resulted in lost data, in the days when I was mainly a Windows user, throws this into sharp relief. These days, I am far more careful, yet I thought nothing of sticking a Fedora DVD into a drive to move my Fedora machine from 14 to 15 recently. Apart from a few rough edges and the need to get used to GNOME 3 while making it a better fit for me, there was no problem to report. The same sort of outcome used to apply to those online Ubuntu upgrades that I was accustomed to doing.

The recommended approach for Linux Mint is to back up your package lists and your data before the upgrade. Doing the former is a boon because it automates adding the extras that a standard CD or DVD installation doesn't include. While I did back up some data, the backup wasn't total because I know how to identify my drives and I take my time over things. Apache settings and the contents of MySQL databases were my main concern because of where these are stored.

When I was ready to do so, I popped a DVD in the drive and carried out a fresh installation into the partition where my operating system files are kept. Since it was a Live DVD, I was able to set up any drive and partition mappings by referring to Mint's Disk Utility. One thing that didn't go so well was the GRUB installation, and that was due to a choice that I made on one of the installation screens. Despite doing an installation of version 10 just over a month ago, I had overlooked an intricacy of the task and placed GRUB on the operating system files partition rather than at the top level of the disk where it belongs. Instead of trying to address this manually, I took the easier, if more time-consuming, step of repeating the installation like I did the last time. If there were a graphical tool for addressing GRUB problems, I might have gone for that instead, but I am left wondering why one isn't included. Maybe it's something that the people behind GRUB should consider creating, unless there is one out there already about which I know nothing.

With the booting problem sorted, I tried logging in, only to find a problem with my desktop that made the system next to unusable. It was back to the DVD, and I moved many of the configuration files and folders (the ones with names beginning with a ".") out of my home directory in the belief that there might have been an incompatibility. That action gained me a fully usable desktop environment, but I now think that the cause of my problem may have been different to what I initially suspected. Later, I discovered that files elsewhere in my home area were not associated with my user ID, even though I had not changed it during the installation. As it happened, a few minutes with the chown command were enough to sort out the ownership issue.
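As an illustration only, a short Python sketch can list the files that a chown would need to fix. The function name `find_foreign_files` is hypothetical and the approach is an assumption rather than what was done on the day; it only reports paths and changes nothing.

```python
import os


def find_foreign_files(root, expected_uid):
    """Return paths below root whose owner is not expected_uid.

    Hypothetical helper for illustration; the root directory itself
    is not checked, only what lies beneath it.
    """
    mismatched = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            # lstat looks at a symlink itself rather than its target
            if os.lstat(path).st_uid != expected_uid:
                mismatched.append(path)
    return mismatched
```

Calling `find_foreign_files(os.path.expanduser("~"), os.getuid())` from a normal login session would show whatever is left for chown to correct.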

The restoration of the extra software that I had added beyond what standardly gets installed took its share of time, but the use of a previously prepared list made things so much easier. It didn't work entirely smoothly because some packages couldn't be found the first time around, so a second pass was needed. Nevertheless, that is nothing compared to the effort needed to do the same thing by issuing one installation command at a time. Once the usual distribution software updates were in place, all that was left was to update VirtualBox to the latest version, install a Citrix client and add a PHP plugin to NetBeans. Then, nearly everything was in place for me.
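To see what a second pass still has to pick up, the saved list can be compared with what is currently installed. The sketch below assumes both lists are in the two-column format printed by `dpkg --get-selections` (package name, then a state such as `install`); whether Mint's own backup tool writes exactly that format is an assumption, and the function names are hypothetical.

```python
def parse_selections(text):
    """Collect package names marked 'install' from dpkg-style selections text."""
    packages = set()
    for line in text.splitlines():
        fields = line.split()
        # Expect exactly two columns: package name and selection state
        if len(fields) == 2 and fields[1] == "install":
            packages.add(fields[0])
    return packages


def missing_packages(saved_text, current_text):
    """Return a sorted list of packages in the saved list but not installed now."""
    return sorted(parse_selections(saved_text) - parse_selections(current_text))
```

Feeding it the contents of the backed-up list and the output of `dpkg --get-selections` on the new installation gives the names that remain to be installed.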

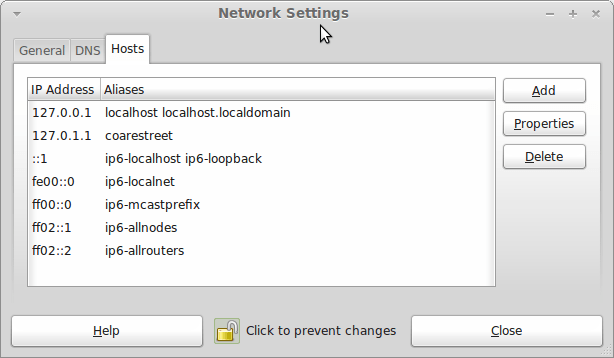

Next, Apache settings were restored, as were the databases that I used for offline web development. That was nearly all that was needed to get offline websites working, apart from adding an alias for localhost.localdomain. That required installation of the Network Settings tool so that I could add the alias in its Hosts tab. With that out of the way, the system had been settled in and was ready for real work.
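The Hosts tab is, in effect, an editor for entries like those in /etc/hosts. As a rough sketch of the same change made on text, the hypothetical function below appends an alias to the first entry for a given address. Attaching the alias to 127.0.0.1 is an assumption, since the address is not stated above, and the function only returns new text rather than writing any file.

```python
def add_host_alias(hosts_text, address, alias):
    """Return hosts_text with alias added to the first entry for address."""
    lines = hosts_text.splitlines()
    first_match = None
    for i, line in enumerate(lines):
        # Ignore anything after a '#' when reading the entry
        fields = line.split("#", 1)[0].split()
        if fields and fields[0] == address:
            if alias in fields[1:]:
                return hosts_text  # alias already present, nothing to do
            if first_match is None:
                first_match = i
    if first_match is None:
        # No entry for this address yet, so add a fresh one
        lines.append(f"{address}\t{alias}")
    else:
        entry, sep, comment = lines[first_match].partition("#")
        new_entry = entry.rstrip() + " " + alias
        lines[first_match] = new_entry + (" " + sep + comment if sep else "")
    return "\n".join(lines) + "\n"
```

For example, `add_host_alias(text, "127.0.0.1", "localhost.localdomain")` leaves every other line of `text` as it was.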

Given the glitches I encountered, I can understand the Linux Mint team's caution regarding a more automated upgrade process. Even so, I still wonder if the more manual alternative that they have pursued brings its own problems in the form of those that I met. The fact that the whole process took a few hours, in comparison to the single hour taken by the in-situ upgrades that I mentioned earlier, is another consideration that makes you wonder if it is all worth it every six months or so. That said, there is something to letting a user decide when to upgrade rather than luring one along to a new version, a point that is more than pertinent in light of the recent changes made to Ubuntu and Fedora. Whichever approach you care to choose, there are arguments in favour as well as counterarguments.

Do we need to pay for disk partitioning tools anymore?

29th November 2010

My early explorations of dual-booting Windows and Linux led me into the world of disk partitioning. It also served another use: at one point, none of my Windows 9x installations (that dates things a bit...) tended to last longer than six months, and putting the data on another partition meant that a fresh Windows installation didn't jeopardise any data that I had should a mishap occur.

Then, Partition Magic was the favoured tool, and it wasn't free of charge, though it wasn't extortionately priced either. For those operations that couldn't be done with Windows running, you could create bootable floppy disks to get the system going to perform those. Thinking about it now, it all worked well enough, and the usual caveats about taking care with your data applied as much then as they do now.

For the last few years, many Linux distributions have come in the form of CDs or DVDs from which you can boot into a full operating system session, complete with near enough the same GUI as an installed version. When a PC is poorly, this is a godsend that makes me wonder how we managed without it; having that visual way of saving data sounds all too necessary now. For me, the answer is that I misspent too many hours blundering blindly with the very limited Windows command line to get myself out of a fix. Looking back on it now, it all feels very dark compared to today.

Another good aspect of these Live Distribution Disks is that they come with hard disk partitioning tools, such as the effective GParted. They are needed to configure hard drives during the actual installation process, but they serve another purpose too: they can be used in place of the old proprietary software disks that were in use not so long ago. Being able to deal with the hard disk sizes available today is a good thing, as is coping with NTFS partitions along with the usual Linux options. While the operations may be time-consuming, they have seemed reliable so far, and I hope that it stays that way despite any warning that gets issued before you make any changes. Last weekend, I got to see a lot of what that means when I was setting up my Toshiba Equium laptop for Windows/Ubuntu dual booting.

With the capability that is available both free of charge and free of limitations, you cannot justify paying for disk partitioning software nowadays, and that's handy when you consider the state of the economy. It also shows how things have changed over the last decade. Being able to load up a complete operating system from a DVD also serves to calm any nerves when a system goes down on you, especially when you surf the web to find a solution for the malady that's causing the downtime.

Booting from external drives

16th September 2009

Sticking with older hardware may mean that you miss out on the possibilities offered by later kit, and being able to boot from external optical and hard disk drives was something of which I learned only recently. As with many things, it takes a compatible motherboard, and my enforced summer upgrade means that I now have one with the requisite capabilities.

There is usually an external DVD drive attached to my main PC, so that allowed the prospect of a test. A bit of poking around in the BIOS settings for the Foxconn motherboard was sufficient to get it looking at the external drive at boot time. Popping in a CrunchBang Linux live DVD was all that was needed to prove that booting from a USB drive was a goer. That CrunchBang is a minimalist variant of Ubuntu helped to keep speed acceptable at system startup and afterwards.

Since I lived off them while in home PC limbo, the temptation to test out the idea of installing an operating system on an external HD and booting from that is definitely there, though I think that I'll be keeping mine as backup drives for now. Still, there's nothing to stop me installing an operating system onto one of them and giving that a whirl sometime. Of course, speed constraints mean that any use of such an arrangement would be occasional but, in the event of an emergency, such a setup could have its uses and tide you over for longer than a Live CD or DVD. Having the chance to poke around with an alternative operating system as it might exist on a real PC has its appeal too, and avoids the need for any partitioning and other chores that dual booting would require. After all, there's only so much testing that can be done in a virtual machine.