TOPIC: ARTIFICIAL INTELLIGENCE

Advance your Data Science, AI and Computer Science skills using these online learning opportunities

25th July 2025

The landscape of online education has transformed dramatically over the past decade, creating unprecedented access to high-quality learning resources across multiple disciplines. This comprehensive examination explores the diverse array of courses available for aspiring data scientists, analysts, and computer science professionals, spanning from foundational programming concepts to cutting-edge artificial intelligence applications.

Data Analysis with R Programming

R programming has established itself as a cornerstone language for statistical analysis and data visualisation, making it an essential skill for modern data professionals. DataCamp's Data Analyst with R programme represents a comprehensive 77-hour journey through the fundamentals of data analysis, encompassing 21 distinct courses that progressively build expertise. Students begin with core programming concepts including data structures, conditional statements, and loops before advancing to sophisticated data manipulation and visualisation techniques using tools such as dplyr and ggplot2. The curriculum extends beyond basic programming to include R Markdown for reproducible research, data manipulation with data.table, and essential database skills through SQL integration.

For those seeking more advanced statistical expertise, DataCamp's Statistician with R career track provides an extensive 108-hour programme spanning 27 courses. This comprehensive pathway develops essential skills for professional statistician roles, progressing from fundamental concepts of data collection and analysis to advanced statistical methodology. Students explore random variables, distributions, and conditioning through practical examples before advancing to linear and logistic regression techniques. The curriculum encompasses sophisticated topics including binomial and Poisson regression models, sampling methodologies, hypothesis testing, experimental design, and A/B testing frameworks. Advanced modules cover missing data handling, survey design principles, survival analysis, Bayesian data analysis, and factor analysis, making this track particularly suitable for those with existing R programming knowledge who seek to specialise in statistical practice.

The Google Data Analytics Professional Certificate programme, developed by Google and hosted on Coursera with US and UK versions, offers a structured six-month pathway for those seeking industry-recognised credentials. Students progress through eight carefully designed courses, beginning with foundational concepts in "Foundations: Data, Data, Everywhere" and culminating in a practical capstone project. The curriculum emphasises real-world applications, teaching students to formulate data-driven questions, prepare datasets for analysis, and communicate findings effectively to stakeholders.

Udacity's Data Analysis with R course presents a unique proposition as a completely free resource spanning two months of study. This programme focuses intensively on exploratory data analysis techniques, providing students with hands-on experience using RStudio and essential R packages. The course structure emphasises practical application through projects, including an in-depth exploration of diamond pricing data that demonstrates predictive modelling techniques.

Advanced Statistical Learning and Specialised Applications

Duke University's Statistics with R Specialisation elevates statistical understanding through a comprehensive seven-month programme that has earned a 4.6-star rating from participants. This five-course sequence delves deep into statistical theory and application, beginning with probability and data fundamentals before progressing through inferential statistics, linear regression, and Bayesian analysis. The programme distinguishes itself by emphasising both theoretical understanding and practical implementation, making it particularly valuable for those seeking to master statistical concepts rather than merely apply them.

The R Programming: Advanced Analytics course on Udemy, led by instructor Kirill, provides focused training in advanced R techniques within a compact six-hour format. This course addresses specific challenges that working analysts face, including data preparation workflows, handling missing data through median imputation, and working with complex date-time formats. The curriculum emphasises efficiency techniques such as using apply functions instead of traditional loops, making it particularly valuable for professionals seeking to optimise their analytical workflows.

Complementing this practical approach, the Applied Statistical Modelling for Data Analysis in R course on Udemy offers a more comprehensive 9.5-hour exploration of statistical methodology. The curriculum covers linear modelling implementation, advanced regression analysis techniques, and multivariate analysis methods. With its emphasis on statistical theory and application, this course serves those who already possess foundational R and RStudio knowledge but seek to deepen their understanding of statistical modelling approaches.

Imperial College London's Statistical Analysis with R for Public Health Specialisation brings academic rigour to practical health applications through a four-month programme. This specialisation addresses real-world public health challenges, using datasets that examine fruit and vegetable consumption patterns, diabetes risk factors, and cardiac outcomes. Students develop expertise in linear and logistic regression while gaining exposure to survival analysis techniques, making this programme particularly relevant for those interested in healthcare analytics.

Visualisation and Data Communication

Johns Hopkins University's Data Visualisation & Dashboarding with R Specialisation represents the pinnacle of visual analytics education, achieving an exceptional 4.9-star rating across its four-month curriculum. This five-course programme begins with fundamental visualisation principles before progressing through advanced ggplot2 techniques and interactive dashboard development. Students learn to create compelling visual narratives using Shiny applications and flexdashboard frameworks, skills that are increasingly essential in today's data-driven business environment.

The programme's emphasis on publication-ready visualisations and interactive dashboards addresses the growing demand for data professionals who can not only analyse data but also communicate insights effectively to diverse audiences. The curriculum balances technical skill development with design principles, ensuring graduates can create both statistically accurate and visually compelling presentations.

Professional Certification Pathways

DataCamp's certification programmes offer accelerated pathways to professional recognition, with each certification designed to be completed within 30 days. The Data Analyst Certification combines timed examinations with practical assessments to evaluate real-world competency. Candidates must demonstrate proficiency in data extraction, quality assessment, cleaning procedures, and metric calculation, reflecting the core responsibilities of working data analysts.

The Data Scientist Certification expands these requirements to include machine learning and artificial intelligence applications, requiring candidates to collect and interpret large datasets whilst effectively communicating results to business stakeholders. Similarly, the Data Engineer Certification focuses on data infrastructure and preprocessing capabilities, essential skills as organisations increasingly rely on automated data pipelines and real-time analytics.

The SQL Associate Certification addresses the universal need for database querying skills across all data roles. This certification validates both theoretical knowledge through timed examinations and practical application through hands-on database challenges, ensuring graduates can confidently extract and manipulate data from various database systems.

Emerging Technologies and Artificial Intelligence

The rapid advancement of artificial intelligence has created new educational opportunities that bridge traditional data science with cutting-edge generative technologies. DataCamp's Understanding Artificial Intelligence course provides a foundation for those new to AI concepts, requiring no programming background whilst covering machine learning, deep learning, and generative model fundamentals. This accessibility makes it valuable for business professionals seeking to understand AI's implications without becoming technical practitioners.

The Generative AI Concepts course builds upon this foundation to explore the specific technologies driving current AI innovation. Students examine how large language models function, consider ethical implications of AI deployment, and learn to maximise the effectiveness of AI tools in professional contexts. This programme addresses the growing need for AI literacy across various industries and roles.

DataCamp's Large Language Model Concepts course provides intermediate-level exploration of the technologies underlying systems like ChatGPT. The curriculum covers natural language processing fundamentals, fine-tuning techniques, and various learning approaches including zero-shot and few-shot learning. This technical depth makes it particularly valuable for professionals seeking to implement or customise language models within their organisations.

The ChatGPT Prompt Engineering for Developers course addresses the developing field of prompt engineering, a skill that has gained significant commercial value. Students learn to craft effective prompts that consistently produce desired outputs from language models, a capability that combines technical understanding with creative problem-solving. This expertise has become increasingly valuable as organisations integrate AI tools into their workflows.

Working with OpenAI API provides practical implementation skills for those seeking to build AI-powered applications. The course covers text generation, sentiment analysis, and chatbot development, giving students hands-on experience with the tools that are reshaping how businesses interact with customers and process information.

Computer Science Foundations

Stanford University's Computer Science 101 offers an accessible introduction to computing concepts without requiring prior programming experience. This course addresses fundamental questions about computational capabilities and limitations whilst exploring hardware architecture, software development, and internet infrastructure. The curriculum includes essential topics such as computer security, making it valuable for anyone seeking to understand the digital systems that underpin modern society.

The University of Leeds' Introduction to Logic for Computer Science provides focused training in logical reasoning, a skill that underlies algorithm design and problem-solving approaches. This compact course covers propositional logic and logical modelling techniques that form the foundation for more advanced computer science concepts.

Harvard's CS50 course, taught by Professor David Malan, has gained worldwide recognition for its engaging approach to computer science education. The programme combines theoretical concepts with practical projects, teaching algorithmic thinking alongside multiple programming languages including Python, SQL, HTML, CSS, and JavaScript. This breadth of coverage makes it particularly valuable for those seeking a comprehensive introduction to software development.

MIT's Introduction to Computer Science and Programming Using Python focuses specifically on computational thinking and Python programming. The curriculum emphasises problem-solving methodologies, testing and debugging strategies, and algorithmic complexity analysis. This foundation proves essential for those planning to specialise in data science or software development.

MIT's The Missing Semester course addresses practical tools that traditional computer science curricula often overlook. Students learn command-line environments, version control with Git, debugging techniques, and security practices. These skills prove essential for professional software development but are rarely taught systematically in traditional academic settings.

Accessible Learning Resources and Community Support

The democratisation of education extends beyond formal courses to include diverse learning resources that support different learning styles and schedules. YouTube channels such as Programming with Mosh, freeCodeCamp, Alex the Analyst, Tina Huang, and Ken Lee provide free, high-quality content that complements formal education programmes. These resources offer everything from comprehensive programming tutorials to career guidance and project-based learning opportunities.

The 365 Data Science platform contributes to this ecosystem through flashcard decks that reinforce learning of essential terminology and concepts across Excel, SQL, Python, and emerging technologies like ChatGPT. Their statistics calculators provide interactive tools that help students understand the mechanics behind statistical calculations, bridging the gap between theoretical knowledge and practical application.

Udemy's marketplace model supports this diversity by hosting over 100,000 courses, including many free options that allow instructors to share expertise with global audiences. The platform's filtering capabilities enable learners to identify resources that match their specific needs and learning preferences.

Industry Integration and Career Development

Major technology companies have recognised the value of contributing to global education initiatives, with Google, Microsoft and Amazon offering professional-grade courses at no cost. Google's Data Analytics Professional Certificate exemplifies this trend, providing industry-recognised credentials that directly align with employment requirements at leading technology firms.

These industry partnerships ensure that course content remains current with rapidly evolving technological landscapes, whilst providing students with credentials that carry weight in hiring decisions. The integration of real-world projects and case studies helps bridge the gap between academic learning and professional application.

The comprehensive nature of these educational opportunities reflects the complex requirements of modern data and technology roles. Successful professionals must combine technical proficiency with communication skills, statistical understanding with programming capability, and theoretical knowledge with practical application. The diversity of available courses enables learners to develop these multifaceted skill sets according to their career goals and learning preferences.

As technology continues to reshape industries and create new professional opportunities, access to high-quality education becomes increasingly critical. These courses represent more than mere skill development; they provide pathways for career transformation and professional advancement that transcend traditional educational barriers. Whether pursuing data analysis, software development, or artificial intelligence applications, learners can now access world-class education that was previously available only through expensive university programmes or exclusive corporate training initiatives.

The future of professional development lies in this combination of accessibility, quality, and relevance that characterises the modern online education landscape. These resources enable individuals to build expertise that matches industry demands whilst maintaining the flexibility to learn at their own pace and according to their specific circumstances and goals.

Synthetic Data: The key to unlocking AI's potential in healthcare

18th July 2025

The integration of artificial intelligence into healthcare is being hindered by challenges such as data scarcity, privacy concerns and regulatory constraints. Healthcare organisations face difficulties in obtaining sufficient volumes of high-quality, real-world data to train AI models that can accurately predict outcomes or assist in decision-making.

Synthetic data, defined as algorithmically generated data that mimics real-world data, is emerging as a solution to these challenges. This artificially generated data mirrors the statistical properties of real-world data without containing any sensitive or identifiable information, allowing organisations to sidestep privacy issues and adhere to regulatory requirements.

By generating datasets that preserve statistical relationships and distributions found in real data, synthetic data enables healthcare organisations to train AI models with rich datasets while ensuring sensitive information remains secure. The use of synthetic data can also help address bias and ensure fairness in AI systems by enabling the creation of balanced training sets and allowing for the evaluation of model outputs across different demographic groups.
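As a rough illustration of what "preserving statistical relationships and distributions" can mean, the minimal sketch below fits the means, spreads and correlation of two numeric columns and then samples new rows from a bivariate normal distribution with those properties. The records, column meanings and function names are all hypothetical, and the normal model is an assumption made for brevity. Production synthetic data generators are considerably more sophisticated, and this sketch on its own offers no privacy guarantee.

```python
import math
import random
import statistics


def fit_bivariate_normal(rows):
    """Estimate means, standard deviations and correlation of two numeric columns."""
    xs = [r[0] for r in rows]
    ys = [r[1] for r in rows]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sd_x, sd_y = statistics.stdev(xs), statistics.stdev(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows) / (len(rows) - 1)
    rho = max(-1.0, min(1.0, cov / (sd_x * sd_y)))  # clamp against rounding error
    return mean_x, mean_y, sd_x, sd_y, rho


def sample_synthetic(params, n, seed=0):
    """Draw n synthetic rows sharing the fitted means, spreads and correlation."""
    mean_x, mean_y, sd_x, sd_y, rho = params
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mean_x + sd_x * z1
        # Mixing z1 into y gives x and y a correlation of approximately rho.
        y = mean_y + sd_y * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        rows.append((x, y))
    return rows


# Hypothetical "real" records: (age in years, systolic blood pressure in mmHg).
real = [(34, 121), (45, 118), (52, 135), (61, 132),
        (29, 110), (70, 150), (48, 124), (57, 141)]
params = fit_bivariate_normal(real)
synthetic = sample_synthetic(params, n=5000)
```

None of the synthetic rows is copied from the hypothetical source records, yet refitting the same statistics on the synthetic set should give values close to those of the original.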

Furthermore, synthetic data can be generated programmatically, reducing the time spent on data collection and processing and enabling organisations to scale their AI initiatives more efficiently. Ultimately, synthetic data is becoming a critical asset in the development of AI in healthcare, enabling faster development cycles, improving outcomes and driving innovation while maintaining trust and security.

What SAS Innovate 2025 revealed about the future of enterprise analytics

21st June 2025

SAS Innovate 2025 comprised a global event in Orlando (6th-9th May) followed by regional editions on tour. This document provides observations from both the global event and the London stop (3rd-4th June), covering technical content, platform developments and thematic emphasis across the two occasions. The global event featured extensive recorded content covering platform capabilities, migration approaches and practical applications, whilst the London event incorporated these themes with additional local perspectives and a particular focus on governance and life sciences applications.

Global Event

Platform Expansion and New Capabilities

The global SAS Innovate 2025 event included content on SAS Clinical Acceleration, positioned as a SAS Viya equivalent to SAS LSAF. Whilst much appeared familiar from the predecessor platform, performance improvements and additional capabilities represented meaningful enhancements.

Two presentations, likely restricted to in-person attendees based on their absence from certain schedules, covered AI-powered SAS code generation. Shionogi presented on using AI for clinical studies and real-world evidence generation, with the significant detail that the AI capability existed within SAS Viya rather than depending on external large language models. Another session addressed interrogating and generating study protocols using SAS Viya, including functionality intended to support study planning in ways that could improve success probability.

These sessions collectively indicated a directional shift. The scope extends beyond conventional expectations of "SAS in clinical" contexts, moving into upstream and adjacent activities, including protocol development and more integrated automation.

Architectural Approaches and Data Movement

A significant theme across multiple sessions addressed fundamental shifts in data architecture. The traditional approach of moving massive datasets from various sources into a single centralised analytics engine is being challenged by a new paradigm: bringing analytics to the data. The integration of SAS Viya with SingleStore exemplifies this approach, where analytics processing occurs directly within the source database rather than requiring data extraction and loading. This architectural change can reduce infrastructure requirements for specific workloads by as much as 50 per cent, whilst eliminating the complexity and cost associated with constant data movement and duplication.
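The contrast between the two approaches can be sketched in a few lines. The example below is not the SAS Viya and SingleStore integration itself: it uses an in-memory SQLite database as a stand-in for the source system, and the table, column and function names are hypothetical. It only shows the difference between extracting every row to aggregate elsewhere and asking the database to do the aggregation where the data already lives.

```python
import sqlite3

# In-memory SQLite stands in for the source database (an assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (site TEXT, value REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?)",
    [("north", 10.0), ("north", 14.0), ("south", 7.0), ("south", 9.0), ("south", 11.0)],
)


def mean_by_site_extract(conn):
    """Traditional approach: pull every row out, then aggregate in the client."""
    totals = {}
    for site, value in conn.execute("SELECT site, value FROM readings"):
        count, total = totals.get(site, (0, 0.0))
        totals[site] = (count + 1, total + value)
    return {site: total / count for site, (count, total) in totals.items()}


def mean_by_site_pushdown(conn):
    """Analytics to the data: the database aggregates and returns one row per site."""
    query = "SELECT site, AVG(value) FROM readings GROUP BY site"
    return dict(conn.execute(query))


extract = mean_by_site_extract(conn)
pushdown = mean_by_site_pushdown(conn)
```

Both functions return the same answer, but the first transfers every row whilst the second transfers one row per site, which is where the savings in data movement come from as tables grow.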

Trustworthy AI and Organisational Reflection

Keynote presentations addressed the relationship between AI systems and organisational practices. SAS Vice President of Data Ethics Practice Reggie Townsend articulated a perspective that reframes common concerns about AI bias. When AI produces biased results, the issue is not primarily technical failure, but rather a reflection of biases already embedded within cultural and organisational practices. This view positions AI as a diagnostic tool that surfaces systemic issues requiring organisational attention rather than merely technical remediation.

The focus on trustworthy AI extended beyond bias to encompass governance frameworks, transparency requirements and the persistent challenge that poor data quality leads to ineffective AI regardless of model sophistication. These considerations hold particular significance in probabilistic AI contexts, especially where SAS aims to incorporate deterministic elements into aspects of its AI offering.

Natural Language Interfaces and Accessibility

Content addressing SAS Viya Copilot demonstrated the platform's natural language capabilities, enabling users to interact with analytics through conversational queries rather than requiring technical syntax. This approach aims to democratise data access by allowing users with limited technical knowledge to directly engage with complex datasets. The Copilot functionality, built on Microsoft Azure OpenAI Service, supports code generation, model development assistance and natural language explanations of analytical outputs.

Cloud Migration and Infrastructure Considerations

A presentation on transforming clinical programming using SAS Clinical Acceleration was scheduled but not accessible at the global event. The closing session featured the CIO of Parexel discussing their transition to SAS managed cloud services. Characterised as a modernisation initiative, reported outcomes included reduced outage frequency. This aligns with observations from other multi-tenant systems, where maintaining stability and availability represents a fundamental requirement that often proves more complex than external perspectives might suggest.

Content addressing cloud-native strategies emphasised a fundamental psychological shift in resource management. Rather than the traditional capital expenditure mindset where physical servers run continuously, cloud environments enable strategic use of the capability to create and destroy computing resources on demand. Approaches include spinning up analytics environments at the start of the working day and shutting them down at the end, with more sophisticated implementations that automatically save and shut down environments after periods of inactivity. This dynamic approach ensures organisations pay only for actively used resources.
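The two policies described above, a working-day schedule and an inactivity timeout, reduce to a small decision rule. The sketch below is a hypothetical illustration only: the function name, the 30-minute limit and the 08:00 to 18:00 working day are assumptions, and a real deployment would call the cloud provider's own tooling to save and stop the environment.

```python
from datetime import datetime, timedelta


def should_shut_down(last_activity, now, idle_limit=timedelta(minutes=30),
                     day_start=8, day_end=18):
    """Return True when an environment should be saved and stopped."""
    outside_working_day = not (day_start <= now.hour < day_end)
    idle_too_long = now - last_activity >= idle_limit
    return outside_working_day or idle_too_long


now = datetime(2025, 5, 7, 14, 0)
recently_used = should_shut_down(datetime(2025, 5, 7, 13, 50), now)
left_idle = should_shut_down(datetime(2025, 5, 7, 13, 0), now)
after_hours = should_shut_down(datetime(2025, 5, 7, 19, 55), datetime(2025, 5, 7, 20, 0))
```

A scheduler running this check every few minutes would leave the first environment alone and stop the other two.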

Presentations on organisational change management accompanying technical migrations emphasised that successful technology projects require attention to human factors alongside technical implementation. Strategies discussed included formal launch events to mark transitions, structured support mechanisms such as office hours for technical questions and community-building activities designed to foster relationships and maintain engagement during periods of change.

Platform Integration and Practical Applications

Content on SAS Viya Workbench covered availability through Azure and AWS, Python integration, R compatibility and interfacing with SAS Enterprise Guide, with demonstrations of several features. As SAS expands support for open-source languages, the presentation illustrated how these capabilities can provide a unified platform for different technical communities.

A presentation on retrieval augmented generation with unstructured data (such as system manuals), combined with agentic AI for diagnosing manufacturing system problems, offered a concrete use case. Given the tendency for these subjects to become abstract, the connected example provided practical insight into how components can function together in operational settings.
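For readers unfamiliar with the retrieval step, the following minimal sketch shows the general idea: score passages from a manual against a question, then place the best match into the prompt sent to a language model. It is not the approach shown in the presentation. The manual text, function names and prompt wording are hypothetical, and simple word-count cosine similarity stands in for the embedding models that real systems typically use.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lower-case word counts for a piece of text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, passages, k=1):
    """Return the k passages most similar to the question."""
    q = tokens(question)
    ranked = sorted(passages, key=lambda p: cosine(q, tokens(p)), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Combine the retrieved context with the question for a language model."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical extracts from a system manual.
manual = [
    "If the conveyor motor overheats, check the cooling fan and reduce belt load.",
    "Replace the hydraulic filter every 500 operating hours.",
    "Error E42 indicates a jammed feed roller; clear the jam and reset the controller.",
]
question = "What does error E42 mean?"
prompt = build_prompt(question, manual)
```

An agentic layer would sit on top of this, deciding which questions to ask and which follow-up actions to take, but the grounding in retrieved text is what keeps the answer tied to the manual.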

Digital Twins and Immersive Simulation

A notable announcement at the global event involved the partnership between SAS and Epic Games to create enhanced digital twins using Unreal Engine. This collaboration applies the same photorealistic 3D rendering technology used in Fortnite to industrial applications. Georgia-Pacific piloted this technology at its Savannah River Mill, which manufactures napkins, paper towels and toilet tissue. The facility was captured using RealityScan, Epic's mobile application, to create photorealistic renderings imported into Unreal Engine.

The application focused on optimising automated guided vehicle deployment and routing strategies. Rather than testing scenarios in the physical environment with associated costs and safety risks, the digital twin enables simulation of complex factory floor operations including AGV navigation, proximity alerts, obstacles and rare adverse events. SAS CTO Bryan Harris emphasised that digital twins should not only function like the real world but also look like it, enabling more accessible decision-making for frontline workers, engineers and machine operators beyond traditional data scientist roles.

The collaboration extends beyond visual fidelity. SAS developed a plugin connecting Unreal Engine to SAS Viya, enabling real-time data from simulated environments to fuel AI models that analyse, optimise and test industrial operations. This approach allows organisations to explore "what-if" scenarios virtually before implementing changes in physical facilities, potentially delivering cost savings whilst improving safety and operational efficiency.

Marketing Intelligence and Customer Respect

Content on SAS Customer Intelligence 360 addressed the platform's marketing decisioning capabilities, including next-best-offer functionality and real-time personalisation across channels. A notable emphasis concerned contact policies and rules that enable marketers to limit communication frequency, reflecting a strategic choice to respect customer attention rather than maximise message volume. This approach recognises that in environments characterised by notification saturation, demonstrating restraint can build trust and ensure greater engagement when communications do occur.
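A contact policy of this kind amounts to a frequency cap. The sketch below is a hypothetical illustration rather than the SAS Customer Intelligence 360 implementation: the class name, the cap of two messages and the seven-day rolling window are all assumptions chosen to keep the example short.

```python
from datetime import datetime, timedelta


class ContactPolicy:
    """Allow at most max_contacts messages per customer within a rolling window."""

    def __init__(self, max_contacts, window):
        self.max_contacts = max_contacts
        self.window = window
        self.history = {}  # customer id -> list of recent send times

    def try_send(self, customer_id, now):
        """Record and allow the send if the customer is under the cap, else refuse."""
        recent = [t for t in self.history.get(customer_id, []) if now - t < self.window]
        allowed = len(recent) < self.max_contacts
        if allowed:
            recent.append(now)
        self.history[customer_id] = recent
        return allowed


policy = ContactPolicy(max_contacts=2, window=timedelta(days=7))
start = datetime(2025, 6, 1, 9, 0)
# Attempt a message on days 0, 1, 2 and 8 for the same hypothetical customer.
results = [policy.try_send("c1", start + timedelta(days=d)) for d in (0, 1, 2, 8)]
```

Here the third attempt is refused because two messages have already gone out within the week, whilst the fourth is allowed once the earlier sends have aged out of the window.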

Financial Crime and Integrated Analytics

Presentations on financial crime addressed the value of integrated platforms that connect traditionally siloed functions such as fraud detection, anti-money laundering and sanctions screening. Network analytics capabilities enable identification of patterns and relationships across these domains that might otherwise remain hidden. Examples illustrated how seemingly routine alerts, when analysed within a comprehensive view of connected data, can reveal connections to significant criminal networks, transforming tactical operational issues into sources of strategic intelligence.
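One simple form of network analytics is grouping alerts that share an identifier, whether directly or through a chain of other alerts. The sketch below does this with a union-find structure. The alert names and identifiers are invented for illustration, and commercial platforms apply far richer entity resolution and graph scoring than this.

```python
from collections import defaultdict


def link_alerts(alerts):
    """Group alerts that share any identifier, directly or through a chain of alerts."""
    parent = {alert_id: alert_id for alert_id in alerts}

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving keeps lookups short
            a = parent[a]
        return a

    first_seen = {}  # identifier -> first alert that mentioned it
    for alert_id, identifiers in alerts.items():
        for identifier in identifiers:
            if identifier in first_seen:
                parent[find(alert_id)] = find(first_seen[identifier])
            else:
                first_seen[identifier] = alert_id

    groups = defaultdict(set)
    for alert_id in alerts:
        groups[find(alert_id)].add(alert_id)
    return sorted((sorted(g) for g in groups.values()), key=lambda g: g[0])


# Hypothetical alerts from three separate functions.
alerts = {
    "fraud-1": {"acct:111", "phone:555-0101"},
    "aml-7": {"acct:222", "phone:555-0101"},
    "sanctions-3": {"acct:222", "name:ACME LTD"},
    "fraud-9": {"acct:999"},
}
networks = link_alerts(alerts)
```

The fraud and sanctions alerts share no identifier with each other, yet they end up in the same group because the anti-money laundering alert connects them, which is the kind of link that siloed systems would miss.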

Data Lineage and Transformation Planning

Content on data lineage reframed this capability from a purely technical concern to a strategic tool for transformation planning. For large-scale modernisation initiatives, comprehensive mapping of data flows, transformations and dependencies provides the foundation for accurate effort estimation, budgetary planning and risk assessment. This visibility enables organisations to proceed with complex changes whilst maintaining confidence that critical downstream processes will not be inadvertently affected.
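At its core, this kind of impact assessment is a graph traversal: start from the asset being changed and follow every dependency downstream. The sketch below assumes lineage has already been captured as a simple mapping from each dataset to the datasets it feeds. The dataset names and the function are hypothetical.

```python
from collections import deque


def downstream_of(lineage, changed):
    """Return every dataset that depends, directly or indirectly, on `changed`."""
    affected, queue = set(), deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in lineage.get(current, []):
            if consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    return affected


# Hypothetical lineage map: each key feeds the datasets listed against it.
lineage = {
    "raw_orders": ["clean_orders"],
    "raw_customers": ["clean_customers"],
    "clean_orders": ["sales_mart", "finance_report"],
    "clean_customers": ["sales_mart"],
    "sales_mart": ["exec_dashboard"],
}
impact = downstream_of(lineage, "raw_orders")
```

The size of the affected set gives a first, crude input to effort estimation, and any critical report appearing in it flags a risk to review before the change proceeds.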

Development Practices and Migration Approaches

Sessions included content on using Bitbucket with SAS Viya to support continuous integration and continuous deployment pipelines for SAS code. Git formed the foundation of the approach, with supporting tools such as jq. Given the current state of manual validation processes, this content addressed a genuine need for more robust validation methods for SAS macros used across clinical portfolios, where these activities can require several weeks and efficiency improvements would represent substantial value.

Another session provided detailed coverage of migrating from SAS 9 to SAS Viya, focusing on assessment methods for determining what requires migration and techniques for locating existing assets. The content reflected the reality that the discovery phase often constitutes the primary work effort rather than a preliminary step.

A presentation on implementing SAS Viya on-premises under restrictive security requirements described a solution requiring sustained collaboration with SAS over multiple years to achieve necessary modifications. This illustrated how certain deployments are defined primarily by governance, controls and assurance requirements rather than by product features.

Technical Fundamentals and Persistent Challenges

A hands-on session on data-driven output programming with SAS macros provided practical content with life sciences examples. Control tables and CALL EXECUTE represented familiar approaches, whilst the data step RESOLVE function offered new functionality worth exploring, particularly given its capability to work with macro expressions rather than being limited to macro variables in the manner of SYMGET.

A recurring theme across multiple contexts emphasised that poor data quality leads to ineffective AI and consequently to flawed decision-making. The technological environment evolves, but fundamental challenges persist. This consideration holds particular significance in probabilistic AI contexts, especially where SAS aims to incorporate deterministic elements into aspects of its AI offering.

London Event

Overview and Core Themes

The London edition of SAS Innovate 2025 on Tour demonstrated the pervasive influence of AI across the programme. The event concluded with Michael Wooldridge from the University of Oxford providing an overview of different categories of AI, offering conceptual grounding for a day when terminology and ambition frequently extended beyond current practical adoption.

The opening session presented SAS' recent offerings, maintaining consistency with content from the global event in Orlando whilst incorporating local perspectives. Trustworthiness, responsibility and governance emerged as prominent themes, particularly relevant given the current industry emphasis on innovation. A panel discussion included a brief exchange regarding the term "digital workforce", reflecting an awareness of the human implications that can be absent from wider industry discussion.

Life Sciences Stream Content

The Life Sciences stream focused heavily on AI, with presentations from AWS, AstraZeneca and IQVIA addressing the subject, followed by a panel discussion continuing this direction. The scale of technological change represents a tangible shift affecting all parts of the ecosystem. A presentation from a healthcare professional provided context regarding the operational environment within which pharmaceutical companies function. SAS CTO Bryan Harris expressed appreciation for pharmaceutical research and development work, an acknowledgement that appeared both substantive and appropriate to the setting.

From mathematical insights to practical applications: Two perspectives on AI

19th April 2025

As AI continues to transform our technological landscape, two recent books offer distinct yet complementary perspectives on understanding and working with these powerful tools. Stephen Wolfram's technical deep dive and Ethan Mollick's practical guide approach the subject from different angles, but both provide valuable insights for navigating our AI-integrated future.

- What is ChatGPT Doing?: Wolfram's Technical Lens

Stephen Wolfram's exploration of large language models is characteristically thorough and mathematically oriented. While dense in parts, his analysis reveals fascinating insights about both AI and human cognition.

Perhaps most intriguing is Wolfram's observation that generative AI unexpectedly teaches us about human language production. These systems, in modelling our linguistic patterns with such accuracy, hold up a mirror to our own cognitive processes, perhaps revealing structures and patterns we had not fully appreciated before.

Wolfram does not shy away from highlighting limitations, particularly regarding computational capabilities. As sophisticated as next-word prediction has become through multi-billion parameter neural networks, these systems fundamentally lack true mathematical reasoning. However, his proposal of integrating language models with computational tools like WolframAlpha presents an elegant solution, combining the conversational fluency of AI with precise computational power.
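The idea of pairing a language model with a computational tool can be illustrated with a minimal Python sketch. This is not Wolfram's implementation and it does not call WolframAlpha: `fake_language_model`, `evaluate_arithmetic` and `answer` are hypothetical names introduced here, and the "model" is a stub that merely echoes its prompt. The point is only the routing: anything that parses as plain arithmetic goes to an exact evaluator, while everything else goes to the text generator.

```python
import ast
import operator

# Arithmetic operators that the evaluator is allowed to apply.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def evaluate_arithmetic(expression: str):
    """Evaluate a plain arithmetic expression without using eval()."""

    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](walk(node.operand))
        raise ValueError("not a plain arithmetic expression")

    return walk(ast.parse(expression, mode="eval").body)


def fake_language_model(prompt: str) -> str:
    """Hypothetical stand-in for a text generator; it computes nothing."""
    return f"[generated text about: {prompt}]"


def answer(prompt: str) -> str:
    """Send arithmetic to the exact tool and everything else to the model."""
    try:
        return str(evaluate_arithmetic(prompt))
    except (ValueError, SyntaxError):
        return fake_language_model(prompt)


print(answer("12345 * 6789"))           # 83810205
print(answer("Explain prime numbers"))  # handled by the stub model
```

A real integration would let the model itself decide when to call the tool; the fixed try-then-fall-back routing here is a simplification chosen to keep the sketch short.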

- Co-intelligence: Mollick's Practical Framework

Ethan Mollick takes a decidedly more accessible approach in "Co-intelligence," offering strategies for effective human-AI collaboration across various contexts. His framework includes several practical principles:

- Invite AI to the table as a collaborator rather than merely a tool

- Maintain human oversight and decision-making authority

- Communicate with AI systems as if they were people with specific roles

- Assume current AI represents the lowest capability level you will work with going forward

What makes Mollick's work particularly valuable is its contextual applications. Drawing from his background as a business professor, he methodically examines how these principles apply across different collaborative scenarios: from personal assistant to creative partner, coworker, tutor, coach, and beyond. With a technology that, even now, retains some of the quality of a solution looking for a problem, these grounded suggestions act as a counterpoint to the torrent of hype that deluges our working lives, especially if you frequent LinkedIn a lot, as I am doing at this time while searching for new freelance work.

- Complementary Perspectives

Though differing significantly in their technical depth and intended audience, both books contribute meaningfully to our understanding of AI. Wolfram's mathematical rigour provides theoretical grounding, while Mollick's practical frameworks offer immediate actionable insights. For general readers looking to productively integrate AI into their work and life, Mollick's accessible approach serves as an excellent entry point. Those seeking deeper technical understanding will find Wolfram's analysis challenging but rewarding.

As we navigate this rapidly evolving landscape, perspectives from both technical innovators and practical implementers will be essential in helping us maximise the benefits of AI while mitigating potential drawbacks. As ever, the hype outpaces practical experience, leaving us to suffer the marketing output while awaiting real experiences to be shared. It is the latter that is more tangible and that will allow us to make use of game-changing technical advances.

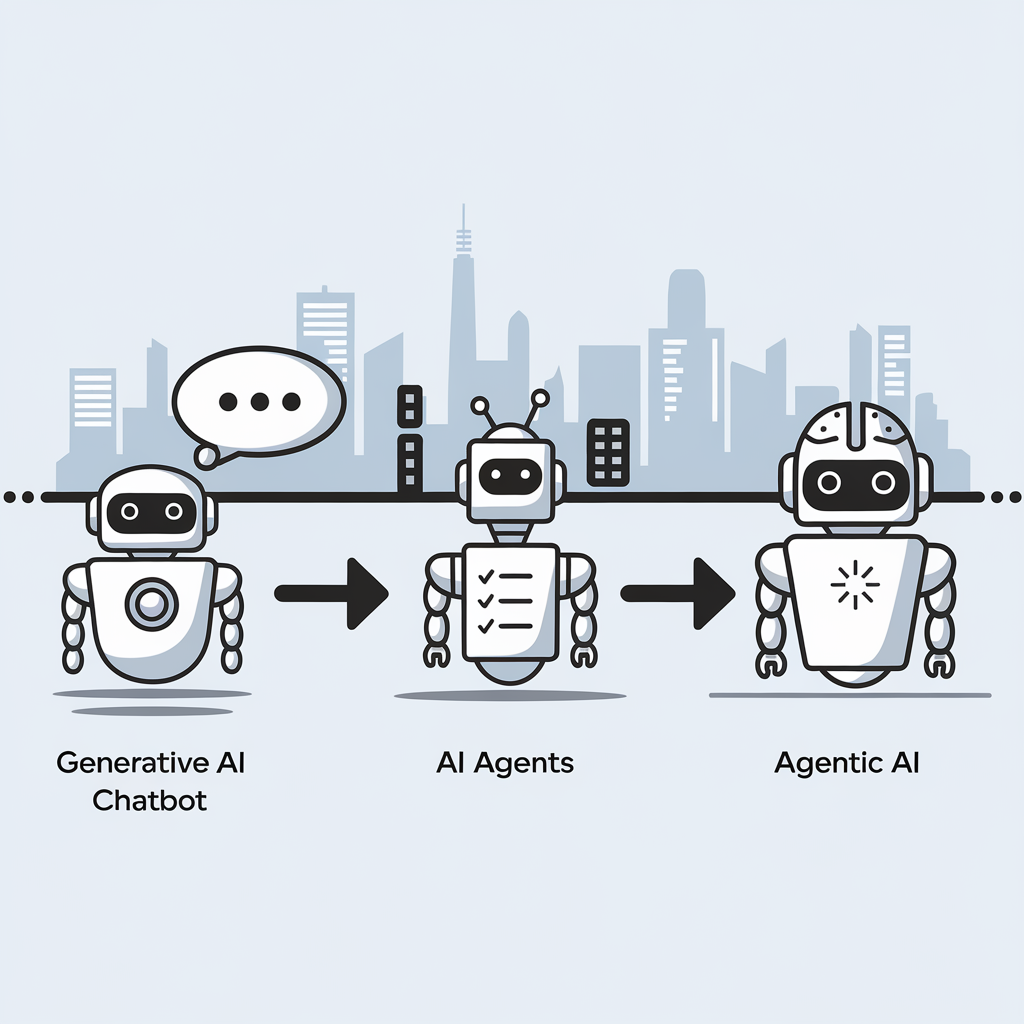

The critical differences between Generative AI, AI Agents, and Agentic Systems

9th April 2025

The distinction between three key artificial intelligence concepts can be explained without technical jargon. Here then are the descriptions:

- Generative AI functions as a responsive assistant that creates content when prompted but lacks initiative, memory or goals. Examples include ChatGPT, Claude and GitHub Copilot.

- AI Agents represent a step forward, actively completing tasks by planning, using tools, interacting with APIs and working through processes independently with minimal supervision, similar to a junior colleague.

- Agentic AI represents the most sophisticated approach, possessing goals and memory while adapting to changing circumstances; it operates as a thinking system rather than a simple chatbot, capable of collaboration, self-improvement and autonomous operation.

This evolution marks a significant shift from building applications to designing autonomous workflows, with various frameworks currently being developed in this rapidly advancing field.
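The three descriptions above can be contrasted in a toy Python sketch. Everything in it is hypothetical: `generate`, `run_agent`, `AgenticSystem` and the two lambda "tools" are names invented for illustration, and no real model or framework is involved. It only shows the structural difference: a single stateless call, a fixed plan executed with tools, and a loop that keeps memory and stops once its goal is met.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools; real ones would call APIs or run programs.
TOOLS = {
    "search": lambda task: f"notes on {task}",
    "summarise": lambda task: f"summary of {task}",
}


def generate(prompt: str) -> str:
    """Generative AI: one prompt in, one response out, nothing remembered."""
    return f"draft for '{prompt}'"


def run_agent(task: str, tools: dict, plan: list[str]) -> list[str]:
    """AI agent: works through a given plan, calling one tool per step."""
    return [tools[step](task) for step in plan]


@dataclass
class AgenticSystem:
    """Agentic AI: holds a goal and memory, and decides when it is finished."""

    goal: str
    tools: dict
    memory: list[tuple[str, str]] = field(default_factory=list)

    def pursue(self, is_done: Callable[[list], bool], max_rounds: int = 5) -> list:
        for _ in range(max_rounds):
            if is_done(self.memory):
                break
            # Use memory to avoid repeating a tool that was already tried.
            tried = {name for name, _ in self.memory}
            remaining = [name for name in self.tools if name not in tried]
            if not remaining:
                break
            name = remaining[0]
            self.memory.append((name, self.tools[name](self.goal)))
        return self.memory


print(generate("title"))
print(run_agent("report", TOOLS, ["search", "summarise"]))
system = AgenticSystem(goal="report", tools=TOOLS)
print(system.pursue(lambda mem: any(r.startswith("summary") for _, r in mem)))
```

The tool-selection rule (take the first untried tool) is deliberately naive; it stands in for whatever planning a real system would do.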

Claude Projects: Reusing your favourite AI prompts

28th March 2025

Some things that I do with Anthropic Claude, I end up repeating. Generating titles for pieces of text or rewriting text to make it read better are activities that happen a lot. Others would include the generation of single-word previews for a piece or creating a summary.

Python or R scripts come in handy for summarisation, either for a social media post or for introduction into other content. In fact, this is how I work much of the time. Nevertheless, I found another option: using Projects in the Claude web interface.

These allow you to store a prompt that you reuse a lot in the Project Knowledge panel. Beyond that, you need to supply a title and a description for the project. Once that is completed, you just add your text in there for the AI to do the rest. Title generation and text rewriting are already set up like this, and keywords could follow. It is a great way to reuse and refine prompts that you use a lot.
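For anyone who prefers the scripted route, the same store-once, reuse-often pattern can be sketched in a few lines of Python. This is plain string handling and not Claude's API: `STORED_PROMPTS` and `build_request` are hypothetical names, and the prompt wording is invented for illustration rather than taken from any actual project.

```python
# Hypothetical stored prompts, playing the role of Project Knowledge entries.
STORED_PROMPTS = {
    "title": "Suggest a concise title for the following text.",
    "rewrite": "Rewrite the following text so that it reads better.",
    "keywords": "List keywords for the following text.",
}


def build_request(project: str, text: str) -> str:
    """Combine a stored prompt with new text, ready to paste or send."""
    if project not in STORED_PROMPTS:
        raise KeyError(f"no stored prompt named {project!r}")
    return f"{STORED_PROMPTS[project]}\n\n{text.strip()}"


print(build_request("title", "  Some draft paragraph that needs a heading.  "))
```

Keeping the prompts in one dictionary means that refining a prompt is a single edit, which is much the same benefit that a Project offers in the web interface.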

Finding human balance in an age of AI code generation

12th March 2025

Recently, I was asked about how I felt about AI. Given that the other person was not an enthusiast, I picked on something that happened to me, not so long ago. It involved both Perplexity and Google Gemini when I was trying to debug something: both produced too much code. The experience almost inspired a LinkedIn post, only for some of the thinking to go online here for now. A spot of brainstorming using an LLM sounds like a useful exercise.

Going back to the original question, it happened during a meeting about potential freelance work. Thus, I tapped into experiences with code generators over several decades. The first one involved a metadata-driven tool that I developed; users reported that there was too much imperfect code to debug with the added complexity that dealing with clinical study data brings. That challenge resurfaced with another bespoke tool that someone else developed, and I opted to make things simpler: produce some boilerplate code and let users take things from there. Later, someone else again decided to have another go, seemingly with more success.

It is even more challenging when you are insufficiently familiar with the code that is being produced. That happened to me with shell scripting code from Google Gemini that was peppered with some Awk code. There was no alternative but to learn a bit more about the language from Tutorials Point and seek out an online book elsewhere. That did get me up to speed, and I will return to these when I am in need again.

Then, there was the time when I was trying to get a Julia script to deal with Google Drive needing permissions to be set. This set Google Gemini to adding more and more error-checking code with try-catch blocks. Since I did not have the issue at that point, I opted to halt and wait for its recurrence. When it did, I opted for a simpler approach, especially with the gdrive CLI tool starting up a web server for completing the process of reactivation. While there are times when shell scripting is better than Julia for these things, I added extra robustness and user-friendliness anyway.

During that second task, I was using VS Code with the GitHub Copilot plugin. There is a need to be careful, yet that can save time when it adds suggestions for you to include or reject. The latter may apply when it adds conditional logic that needs more checking, while simple code outputting useful text to the console can be approved. While that certainly is how I approach things for now, it brings up an increasingly relevant question for me.

How do we deal with all this code production? In an environment with myriads of unit tests and a great deal of automation, there may be more capacity for handling the output than mere human inspection and review, which can overwhelm the limitations of a human context window. A quick search revealed that there are automated tools for just this purpose, possibly with their own learning curves; otherwise, manual working could be a better option in some cases.

After all, we need to do our own thinking too. That was brought home to me during the Julia script editing. To come up with a solution, I had to step away from LLM output and think creatively to come up with something simpler. There was a tension between the two needs during the exercise, which highlighted how important it is to learn not to be distracted by all the new technology. Being an introvert in the first place, I need that solo space, only to have to step away from technology to get that when it was a refuge in the first place.

Anyone with a programming hobby has to limit all this input to avoid being overwhelmed; learning a programming language could involve stripping out AI extensions from a code editor, for instance. LLM output has its place, yet it has to be at a human scale too. That perhaps is the genius of a chat interface, and we now have Agentic AI too. It is as if the technology curve never slackens, at least not until the current boom ends, possibly when things break because they go too far beyond us. All this acceleration is fine until we need to catch up with what is happening.

Little helpers

22nd September 2024

This could have been a piece that appeared on my outdoors blog until I got second thoughts. One reason why I might have done so is that I am making more use of Perplexity for searching the web and gaining more value from its output. However, that is proving more useful in writing what you find on here. Knowing the sources for a dynamically generated article adds more confidence when fact checking, and it is remarkable what comes up that you would find quickly with Google. There is added value with this one.

A better candidate would have been Anthropic's Claude. That has come in handy when writing trip reports. Being able to use a stub to prototype a blog entry really has its uses. The reality is that everything gets rewritten before anything gets published; these tools are never so good as to feature everything that you want to mention, even if they do a good job of mimicking your writing tone and style. Nevertheless, being able to work with the content beyond doing a brain dump from one's memory is an undeniable advance.

Sometimes, there are occasions when using Bing's access to OpenAI through Copilot helps with production of images. In reality, I do have an extensive personal library of images, so they possibly should suffice in many ways. However, curiosity about the technology overrides the effort that photo processing requires.

While there may be some level of controversy surrounding the use of AI tools in content creation, using such tooling for proofing content should not raise too much ire. Grammarly comes up a lot, though it is LanguageTool that I use to avoid excessive butting into my writing style. That has changed to comply with rules that had passed me without my noticing, but there are other things that need to be turned off. Configuring the proof tools in other ways might be better, so that is something to explore, or we could end up with too much standardisation of writing; there needs to be room for human creativity at all times.

All of these are just a sample of what is available. Just checking in with The Rundown AI will reveal that there is an onslaught of innovation right now. Hype also is a problem, yet we need to learn to use these tools. The changeover is equivalent to the explosive increase in availability of personal computing a generation ago. That brought its own share of challenges (some were on the curve while others were not) until everything settled down, and it will be the same with what is happening now.

Automating writing using R and Claude

16th April 2024

Automation of writing using AI has become prominent recently, especially since GPT came to everyone's notice. It goes beyond automation of proofreading to producing the content itself, as Mark Hinkle and Luke Matthews can testify. Figuring out how to use Generative AI needs more than one-line prompts, so knowing what multi-line ones will work is what is earning six-digit annual salaries for some.

Recently, I gave this a go when writing a post that used content from a Reddit post thread. The first step was to extract the content from the thread, and I found that I could use R to do this. That meant installing the RedditExtractoR package using the following command:

install.packages("RedditExtractoR")

Then, I created a short script containing the following lines of code with placeholders added in place of the actual locations:

library("RedditExtractoR")

write.csv(get_thread_content("<URL for Reddit post thread>"), "<location of text file>")

The first line above loads the RedditExtractoR package so that its get_thread_content function can be used to scrape the thread's textual content. This was then fed to write.csv for writing out a text file with the content.

Once I had the text file, I could upload it to Anthropic's Claude for the next steps. Firstly, I got it to give me a summary of the thread discussion before I asked it to give me the suggested solutions to the issue. Impressively, it capably provided me with the latter.

Now armed with these answers, I set to crafting the post from them. Claude did not do all the work for me, but it certainly helped with the writing. This is something that I fancy exploring further, especially given how business computing is likely to proceed in the next few years.

A little bit of abstraction: The quiet utility of generated imagery

21st August 2021

Data science has remained in my awareness since 2017 though my work is more on its fringes in clinical research. In fact, I have been involved more in the standardisation and automation of more traditional data reporting than in the needs of data modelling such as data engineering or other similar disciplines. Much of this effort has meant the use of SAS, with which I have programmed since 2000 and for which I have a licence (an expensive commodity, it has to be said), but other technologies are being explored with R, Python and Julia being among them.

Though the change in technological scope does bring an element of excitement and new interest, there is also some sadness when tried and trusted technologies meet with newer competition and valued skills are no longer as career securing as they once were. Still, there is plenty of online training out there, and I already have collected some of my thoughts on this. The learning continues and the need for repositioning is also clear.

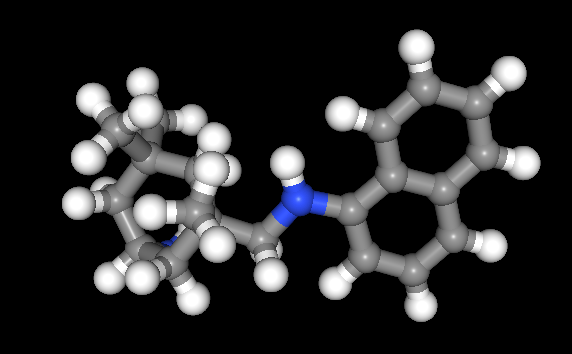

The journey also brought some curios to my notice. One of these is This Person Does Not Exist, a website building photos of non-existent faces using machine learning. Recently, I learned of others like it such as This Artwork Does Not Exist, This Cat Does Not Exist, This Horse Does Not Exist, and This Chemical Does Not Exist. The last of these probably should be entitled "This Molecule Does Not Exist (Yet)" since it is a fictitious molecular structure that has been created and what you get is an actual moving image that spins it around in three-dimensional space. The one with dynamically generated abstract art is the main inspiration for this piece and is of more interest to me, while the other two are more explanatory, though the horse website is not so successful in its execution and one can ask why we need more cat pictures.

To some, the idea of creating fake pictures may feel a little foreboding, and that especially applies to photos of people and the livelihoods of any content creators. Nevertheless, these sources of imagery have their legitimate uses, such as decorating websites or brochures, which is where my interest is piqued. After all, there are some subjects where pictures can be scarce, so any form of decoration that enlivens an article has to have some use. While technology websites like this one can feature images too, with screenshots and device photos being commonplace, they can all look like each other, hence the need for a little more variety. Having pictures often increases the choice of website themes as well, since so many need images to make them work or stand out. As ever, being sparing with any innovations remains in order, which is how I approach this matter as well.