TOPIC: GENERATIVE ARTIFICIAL INTELLIGENCE

Latest developments in the AI landscape: Consolidation, implementation and governance

22nd November 2025

Artificial intelligence is moving through another moment of consolidation and capability gain. New ways to connect models to everyday tools now sit alongside aggressive platform plays from the largest providers, a steady cadence of model upgrades, and a more defined conversation about risk and regulation. For companies trying to turn all this into practical value, the story is becoming less about chasing the latest benchmark and more about choosing a platform, building the right connective tissue, and governing data use with care. The coming year looks set to reward those who simplify the user experience, embed AI directly into work and adopt proportionate controls rather than blanket bans.

I. Market Structure and Competitive Dynamics

Platform Consolidation and Lock-In

Enterprise AI appears to be settling into a two-platform market. Analysts describe a landscape defined more by integration and distribution than raw model capability, evoking the cloud computing wars. On one side sit Microsoft and OpenAI, on the other Google and Gemini. Recent signals include the pricing of Gemini 3 Pro at around two dollars per million tokens, which undercuts much of the market, Alphabet's share price strength, and large enterprise deals for Gemini integrated with Google's wider software suite. Google is also promoting Antigravity, an agent-first development environment with browser control, asynchronous execution and multi-agent support, an attempt to replicate the pull of VS Code within an AI-native toolchain.

The implication for buyers is higher switching costs over time. Few expect true multi-cloud parity for AI, and regional splits will remain. Guidance from industry commentators is to prioritise integration across the existing estate rather than incremental model wins, since platform choices now look like decade-long commitments. Events lined up for next year are already pointing to that platform view.

Enterprise Infrastructure Alignment

A wider shift in software development is also taking shape. Forecasts for 2026 emphasise parallel, multi-agent systems where a planning agent orchestrates a set of execution agents, and harnesses tune themselves as they learn from context. There is growing adoption of a mix-of-models approach in which expensive frontier models handle planning, and cheaper models do the bulk of execution, bringing near-frontier quality for less money and with lower latency. Team structures are changing as a result, with more value placed on people who combine product sense with engineering craft and less on narrow specialisms.
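The mix-of-models pattern can be sketched in a few lines of PHP. This is only an illustration under stated assumptions: the two closures stand in for real model calls, the names `$frontierPlanner`, `$cheapExecutor` and `run_mix_of_models` are hypothetical, and the tasks run one after another here, whereas a production system would likely run them in parallel.

```php
<?php
// Stand-ins for model calls; a real system would call provider APIs here.
// Both closures and their names are hypothetical.
$frontierPlanner = function (string $goal): array {
    // A real planner would ask an expensive frontier model to break the goal down.
    return ["Outline: {$goal}", "Draft: {$goal}", "Review: {$goal}"];
};

$cheapExecutor = function (string $task): string {
    // A real executor would send each task to a cheaper, faster model.
    return "done({$task})";
};

/**
 * Orchestrate one planning call followed by one execution call per task.
 */
function run_mix_of_models(string $goal, callable $planner, callable $executor): array
{
    $tasks = $planner($goal);             // One expensive call for planning.
    return array_map($executor, $tasks);  // One cheap call per planned task.
}

$results = run_mix_of_models('weekly report', $frontierPlanner, $cheapExecutor);
print_r($results);
```

The point of the split is that the expensive model is called once per goal, while the number of cheap calls scales with the number of tasks.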

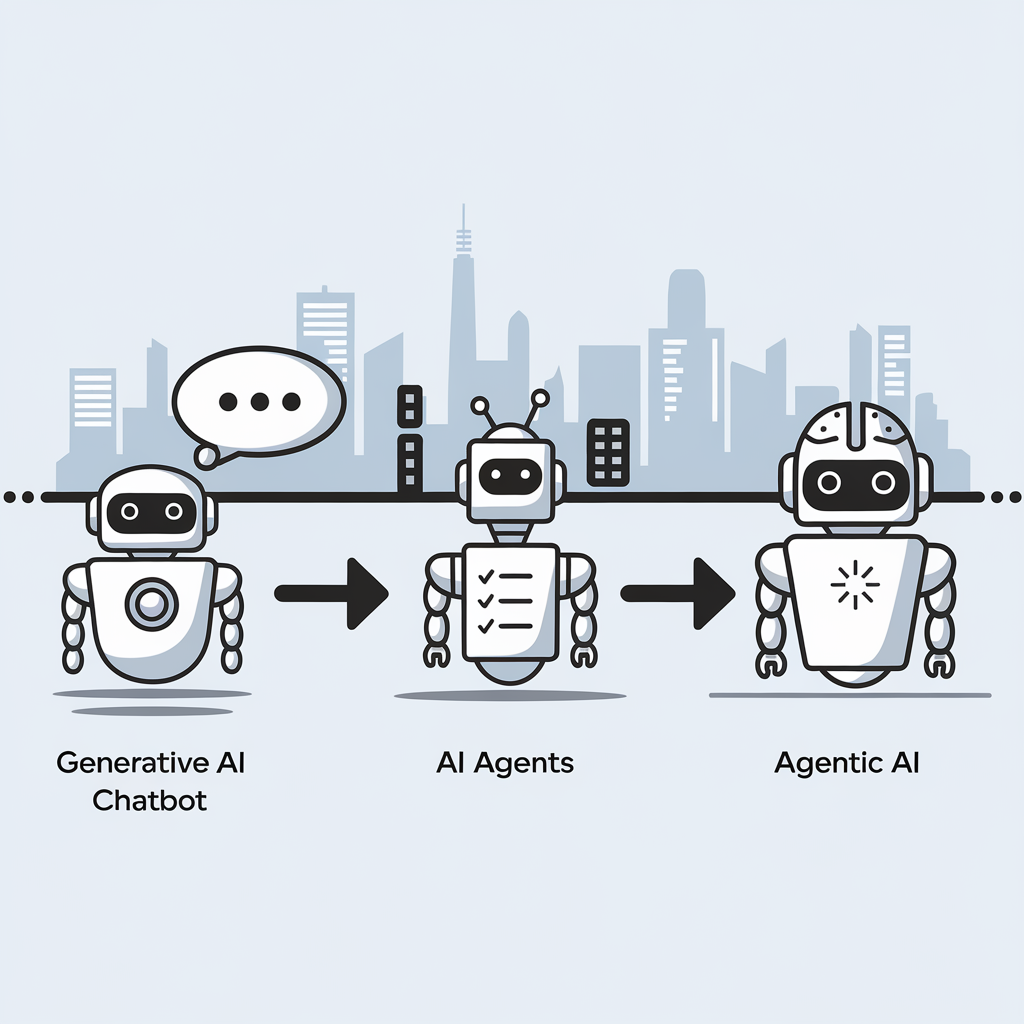

ServiceNow and Microsoft have announced a partnership to coordinate AI agents across organisations with tighter oversight and governance, an attempt to avoid the sprawl that plagued earlier automation waves. Nvidia has previewed Apollo, a set of open AI physics models intended to bring real-time fidelity to simulations used in science and industry. Albania has appointed an AI minister, which has kicked off debate about how governments should manage and oversee their own AI use. CIOs are being urged to lead on agentic AI as systems become capable of automating end-to-end workflows rather than single steps.

New companies and partnerships signal where capital and talent are heading. Jeff Bezos has returned to co-lead Project Prometheus, a start-up with $6.2 billion raised and a team of about one hundred hires from major labs, focused on AI for engineering and manufacturing in the physical world, an aim that aligns with Blue Origin interests. Vik Bajaj is named as co-CEO.

Deals underline platform consolidation. Microsoft and Nvidia are investing up to $5 billion and $10 billion respectively (totalling $15 billion) in Anthropic, whilst Anthropic has committed $30 billion in Azure capacity purchases with plans to co-design chips with Nvidia.

Commercial Model Evolution

Events and product launches continue at pace. xAI has released Grok 4.1 with an emphasis on creativity and emotional intelligence while cutting hallucinations. On the tooling front, tutorials explain how ChatGPT's desktop app can record meetings for later summarisation. In a separate interview, DeepMind's Demis Hassabis set out how Gemini 3 edges out competitors in many reasoning and multimodal benchmarks, slightly trails Claude Sonnet 4.5 in coding, and is being positioned for foundations in healthcare and education though not as a medical-grade system. Google is encouraging developers towards Antigravity for agentic workflows.

Industry leaders are also sketching commercial models that assume more agentic behaviour, with Microsoft's Satya Nadella promising a "positive-sum" vision for AI while hinting at per-agent pricing and wider access to OpenAI IP under Microsoft's arrangements.

II. Technical Implementation and Capability

Practical Connectivity Over Capability

A growing number of organisations are starting with connectors that allow a model to read and write across systems such as Gmail, Notion, calendars, CRMs, and Slack. Delivered via the Model Context Protocol, these links pull the relevant context into a single chat, so users spend less time switching windows and more time deciding what to do. Typical gains are in hours saved each week, lower error rates, and quicker responses. With a few prompts, an assistant can draft executive email summaries, populate a Notion database with leads from scattered sources, or propose CRM follow-ups while showing its working.

The cleanest path is phased: enable one connector using OAuth, trial it in read-only mode, then add simple routines for briefs, meeting preparation or weekly reports before switching on write access with a "show changes before saving" step. Enterprise controls matter here. Connectors inherit user permissions via OAuth 2.0, process data in memory, and vendors point to SOC 2, GDPR and CCPA compliance alongside allow and block lists, policy management, and audit logs. Many governance teams prefer to begin read-only and require approvals for writes.
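The phased approach above can be pictured as a small permission gate. What follows is a minimal sketch in PHP and not any vendor's API: the function name `authorise_connector_action`, the phase constants and the action labels are all hypothetical.

```php
<?php
// Hypothetical rollout phases for a single connector (names are illustrative only).
const PHASE_READ_ONLY = 'read_only';
const PHASE_WRITE_WITH_APPROVAL = 'write_with_approval';

/**
 * Decide whether a connector action may proceed.
 *
 * @param string $phase    Current rollout phase for the connector.
 * @param string $action   'read' or 'write' (hypothetical action labels).
 * @param bool   $approved Whether a person has reviewed the proposed changes.
 * @return string 'allow', 'needs_approval' or 'deny'.
 */
function authorise_connector_action(string $phase, string $action, bool $approved = false): string
{
    if ($action === 'read') {
        return 'allow'; // Reads are allowed in both phases.
    }
    if ($action !== 'write' || $phase !== PHASE_WRITE_WITH_APPROVAL) {
        return 'deny'; // Unknown actions, and writes during the read-only trial, are rejected.
    }
    // Writes are only saved after the "show changes before saving" review.
    return $approved ? 'allow' : 'needs_approval';
}

echo authorise_connector_action(PHASE_READ_ONLY, 'write'), PHP_EOL;
echo authorise_connector_action(PHASE_WRITE_WITH_APPROVAL, 'write'), PHP_EOL;
echo authorise_connector_action(PHASE_WRITE_WITH_APPROVAL, 'write', true), PHP_EOL;
```

Run as written, the three calls print deny, needs_approval and allow in turn, which mirrors the read-only trial followed by approved writes.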

There are limits to note, including API rate caps, sync delays, context window constraints and timeouts for long workflows. Connectors are a poor fit for classified data, large bulk operations or transactions that cannot tolerate latency. Some industry observers regard Claude's current MCP implementation, particularly on desktop, as the most capable of the group. Playbooks for a 30-day rollout are beginning to circulate, as are practitioner workshops introducing go-to-market teams to these patterns.

Agentic Orchestration Entering Production

Practical comparisons suggest the surrounding tooling can matter more than the raw model for building production-ready software. One report set a 15-point specification across several environments and found that Claude Code produced all features end-to-end. The same spec built with Gemini 3 inside Antigravity delivered two thirds of the features, while Sonnet 4.5 in Antigravity delivered a little more than half, with omissions around batching, progress indicators and robust error handling.

Security remains a live issue. One newsletter reports that Anthropic said state-backed Chinese hackers misused Claude to autonomously support a large cyberattack, which has intensified calls for governance. The background hum continues, from a jump in voice AI adoption to a German ruling on lyric copyright involving OpenAI, new video guidance steps in Gemini, and an experimental "world model" called Marble. Tools such as Yorph are receiving attention for building agentic data pipelines as teams look to productionise these patterns.

Tooling Maturity Defining Outcomes

In engineering practice, Google's Code Wiki brings code-aware documentation that stays in sync with repositories using Gemini, supported by diagrams and interactive chat. GitLab's latest survey suggests AI increases code creation but also pushes up demand for skilled engineers alongside compliance and human oversight. In operations, Chronosphere has added AI remediation guidance to cut observability noise and speed root-cause analysis while performance testing is shifting towards predictive, continuous assurance rather than episodic tests.

Vertical Capability Gains

While the platform picture firms up, model and product updates continue at pace. Google has drawn attention with a striking upgrade to image generation, based on Gemini 3. The system produces 4K outputs with crisp text across multiple languages and fonts, can use up to 14 reference images, preserves identity, and taps Google Search to ground data for accurate infographics.

Separately, OpenAI has broadened ChatGPT Group Chats to as many as 20 people across all pricing tiers, with privacy protections that keep group content out of a user's personal memory. Consumer advocates have used the moment to call out the risks of AI toys, citing safety, privacy and developmental concerns, even as news continues to flow from research and product teams, from the release of OLMo 3 to mobile features from Perplexity and a partnership between Stability and Warner Music Group.

Anthropic has answered with Claude Opus 4.5, which it says is the first model to break the 80 percent mark on SWE-Bench Verified while improving tool use and reasoning. Opus 4.5 is designed to orchestrate its smaller Haiku models and arrives with a price cut of roughly two thirds compared to the 4.1 release. Product changes include unlimited chat length, a Claude Code desktop app, and integrations that reach across Chrome and Excel.

OpenAI's additions have a more consumer flavour, with a Shopping Research feature in ChatGPT that produces personalised product guidance using a GPT-5 mini variant and plans for an Instant Checkout flow. In government, a new US executive order has launched the "Genesis Mission" under the Department of Energy, aiming to fuse AI capabilities across 17 national labs for advances in fields such as biotechnology and energy.

Coding tools are evolving too. OpenAI has previewed GPT-5.1-Codex-Max, which supports long-running sessions by compacting conversational history to preserve context while reducing overhead. The company reports 30 percent fewer tokens and faster performance over sessions that can run for more than a day. The tool is already available in the Codex CLI and IDE, with an API promised.

Infrastructure news out of the Middle East points to large-scale investment, with Saudi HUMAIN announcing data centre plans including xAI's first international facility alongside chips from Nvidia and AWS, and a nationwide rollout of Grok. In computer vision, Meta has released SAM 3 and SAM 3D as open-source projects, extending segmentation and enabling single-photo 3D reconstruction, while other product rollouts continue from GPT-5.1 Pro availability to fresh funding for audio generation and a marketing tie-up between Adobe and Semrush.

On the image side, observers have noted syntax-aware code and text generation alongside moderation that appears looser than some rivals. A playful "refrigerator magnet" prompt reportedly revealed a portion of the system prompt, a reminder that prompt injection is not just a developer concern.

Video is another area where capabilities are translating into business impact. Sora 2 can generate cinematic, multi-shot videos with consistent characters from text or images, which lets teams accelerate marketing content, broaden A/B testing and cut the need for studios on many projects. Access paths now span web, mobile, desktop apps and an API, and the market has already produced third-party platforms that promise exports without watermarks.

Teams experimenting with Sora are being advised to measure success by outcomes such as conversion rates, lower support loads or improved lead quality rather than just aesthetic fidelity. Implementation advice favours clear intent, structured prompts and iterative variation, with more advanced workflows assembling multi-shot storyboards, using match cuts to maintain rhythm, controlling lighting for continuity and anchoring character consistency across scenes.

III. Governance, Risk and Regulation

Governance as a Product Requirement

Amid all this activity, data risk has become a central theme for AI leaders. One governance specialist has consolidated common problem patterns into the PROTECT framework, which offers a way to map and mitigate the most material risks.

The first concern is the use of public AI tools for work content, which raises the chance of leakage or unwanted training on proprietary data. The recommended answer combines user guidance, approved internal alternatives, and technical or legal controls such as data scanning and blocking.

A second pressure point is rogue internal projects that bypass review, create compliance blind spots and build up technical debt. Proportionate oversight is key, calibrated to data sensitivity and paired with streamlined governance, so teams are not incentivised to route around it.

Third-party vendors can be opportunistic with data, so due diligence and contractual clauses need to prevent cross-customer training and make expectations clear with templates and guidance.

Technical attacks are another strand, from prompt injection to data exfiltration or the misuse of agents. Layered defences help here, including input validation, prompt sanitisation, output filtering, monitoring, red-teaming, and strict limits on access and privilege.

Embedded assistants and meeting bots come with permission risks when they operate over shared drives and channels, and agentic systems can amplify exposure if left unchecked, so the advice is to enforce least-privilege access, start on low-risk data, and keep robust audit trails.

Compliance risks span privacy laws such as GDPR with their demands for a lawful basis, IP and copyright constraints, contractual obligations, and the AI Act's emphasis on data quality. Legal and compliance checks need to be embedded at data sourcing, model training and deployment, backed by targeted training.

Finally, cross-border restrictions matter. Transfers should be mapped across systems and sub-processors, with checks for Data Privacy Framework certification, standard contractual clauses where needed, and transfer impact assessments that take account of both GDPR and newer rules such as the US Bulk Data Transfer Rule.

Regulatory Pragmatism

Regulators are not standing still, either. The European Commission has proposed amendments to the AI Act through a Digital Omnibus package as the trilogue process rolls on. Six changes are in focus:

- High-risk timelines would be tied to the approval of standards, with a backstop of December 2027 for Annex III systems and August 2028 for Annex I products if delays continue, though the original August 2026 date still holds otherwise.

- Transparency rules under Article 50(2), which require AI-generated outputs to be detectable as such, would be delayed to February 2027 for systems placed on the market before August 2026, with no delay for newer systems.

- The plan removes the need to register Annex III systems in the public database where providers have documented under Article 6(3) that a system is not high risk.

- AI literacy would shift from a mandatory organisation-wide requirement to encouragement, except where oversight of high-risk systems demands it.

- There is also a move to centralise supervision by the AI Office for systems built on general-purpose models by the same provider, and for very large online platforms and search engines, which is intended to reduce fragmentation across member states.

- Finally, proportionality measures would define Small Mid-Cap companies and extend simplified obligations and penalty caps that currently apply to SMEs.

If adopted, the package would grant more time and reduce administrative load in some areas, at the expense of certainty and public transparency.

IV. Strategic Implications

The picture that emerges is one of pragmatic integration. Connectors make it feasible to keep work inside a single chat while drawing on the systems people already use. Platform choices are converging, so it makes sense to optimise for the suite that fits the current stack and to plan for switching costs that accumulate over time.

Agentic orchestration is moving from slides to code, but teams will get further by focusing on reliable tooling, clear governance and value measures that match business goals. Regulation is edging towards more flexible timelines and centralised oversight in places, which may lower administrative load without removing the need for discipline.

The sensible posture is measured experimentation: start with read-only access to lower-risk data, design routines that remove drudgery, introduce write operations with approvals, and monitor what is actually changing. The tools are improving quickly, yet the organisations that benefit most will be those that match innovation with proportionate controls and make thoughtful choices now that will hold their shape for the decade ahead.

Rendering Markdown in WordPress without plugins by using Parsedown

4th November 2025

Much of what is generated using GenAI as articles is output as Markdown, meaning that you need to convert the content when using it in a WordPress website. Naturally, this kind of thing should be done with care to ensure that you are the creator and that it is not all the work of a machine; orchestration is fine, regurgitation does not add that much. Fact checking is another need as well.

Writing plain Markdown has secured its own following as well, with WordPress plugins switching over the editor to facilitate such a mode of editing. When I tried Markup Markdown, I found it restrictive when it came to working with images within the text, and it needed a workaround for getting links to open in new browser tabs as well. Thus, I got rid of it, only to realise that it had not converted any Markdown as I had expected; it had only provided rendering at post or page display time. Rather than attempting to update the affected text, I decided to see if another solution could be found.

This took me to Parsedown, which proved to be handy for accomplishing what I needed once I had everything set in place. First, that meant cloning its GitHub repo onto the web server. Next, I created a directory called includes under that of my theme and copied Parsedown.php into it. When all was done, I ensured that file and directory ownership were assigned to www-data to avoid execution issues.

Then, I could set to updating the functions.php file. The first line to get added there included the parser file:

require_once get_template_directory() . '/includes/Parsedown.php';

After that, I found that I needed to disable the WordPress rendering machinery because that got in the way of Markdown rendering:

remove_filter('the_content', 'wpautop');

remove_filter('the_content', 'wptexturize');

The last step was to add a filter that parsed the Markdown and passed its output to WordPress rendering to do the rest as usual. This was a simple affair until I needed to deal with code snippets in pre and code tags. Hopefully, the included comments tell you much of what is happening. A possible exception is $pre_matches[0], which is itself an array of entire <pre>...</pre> blocks including the containing tags, with $i => $block doing a $key (not the same variable as in the code, by the way) => $value lookup of the values in the nested array.

add_filter('the_content', function($content) {
    // Prepare a store for placeholders
    $placeholders = [];

    // 1. Extract pre blocks (including nested code) and replace with safe placeholders
    preg_match_all('/<pre\b[^>]*>.*?<\/pre>/si', $content, $pre_matches);
    foreach ($pre_matches[0] as $i => $block) {
        $key = "§PREBLOCK{$i}§";
        $placeholders[$key] = $block;
        $content = str_replace($block, $key, $content);
    }

    // 2. Extract standalone code blocks (those inside pre are already placeholders by now)
    preg_match_all('/<code\b[^>]*>.*?<\/code>/si', $content, $code_matches);
    foreach ($code_matches[0] as $i => $block) {
        $key = "§CODEBLOCK{$i}§";
        $placeholders[$key] = $block;
        $content = str_replace($block, $key, $content);
    }

    // 3. Run Parsedown on the remaining content
    $Parsedown = new Parsedown();
    $content = $Parsedown->text($content);

    // 4. Restore both pre and code placeholders
    foreach ($placeholders as $key => $block) {
        $content = str_replace($key, $block, $content);
    }

    // 5. Apply paragraph formatting
    return wpautop($content);
}, 12);

All of this avoided dealing with extra plugins to produce the required result. Handily, I still use the Classic Editor, which makes this work a lot more easily. There still is a Markdown import plugin that I am tempted to remove as well to streamline things. That can wait, though. It is best not to add any more of them anyway, not least to avoid clashes between them and what is now in the theme.
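To make the shape of the preg_match_all results more concrete, here is a small standalone sketch that can be run outside WordPress. The sample content and the pattern are my own assumptions for illustration; the point is only to show what the first element of the matches array holds and how the foreach loop walks through it.

```php
<?php
// Sample content with two pre blocks; the markup is made up for illustration.
$content = "Intro <pre><code>echo 1;</code></pre> middle <pre>plain</pre> end";

// With the default PREG_PATTERN_ORDER flag, $matches[0] holds every full match.
preg_match_all('/<pre\b[^>]*>.*?<\/pre>/si', $content, $matches);

foreach ($matches[0] as $i => $block) {
    // $i is the numeric index (0, 1, ...) and $block is the whole <pre>...</pre> string.
    echo $i, ' => ', $block, PHP_EOL;
}
```

Each pass through the loop prints the index followed by one complete block, containing tags included, which is what gets swapped for a placeholder in the filter.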

AI infrastructure under pressure: Outages, power demands and the race for resilience

1st November 2025

The past few weeks brought a clear message from across the AI landscape: adoption is racing ahead, while the underlying infrastructure is working hard to keep up. A pair of major cloud outages in October offered a stark stress test, exposing just how deeply AI has become woven into daily services.

At the same time, there were significant shifts in hardware strategy, a wave of new tools for developers and creators and a changing playbook for how information is found online. There is progress on resilience and efficiency, yet the system is still bending under demand. Understanding where it held, where it creaked and where it is being reinforced sets the scene for what comes next.

Infrastructure Stress and Outages

The outages dominated early discussion. An AWS incident that lasted around 15 hours and disrupted more than a thousand services was followed nine days later by a global Azure failure. Each cascaded across systems that depend on them, illustrating how AI now amplifies the consequences of platform problems.

This was less about a single point of failure and more about the growing blast radius when connected services falter. The effect on productivity was visible too: a separate 10-hour ChatGPT downtime showed how fast outages of core AI tools now translate into lost work time.

Power Demand and Grid Strain

Behind the headlines sits a larger story about electricity, grids and planning. Data centres accounted for roughly 4% of US electricity use in 2024, about 183 TWh, and the International Energy Agency projects around 945 TWh of data centre demand globally by 2030, with AI as a principal driver.

The averages conceal stark local effects. Wholesale prices near dense clusters have spiked by as much as 267% at times, household bills are rising by about $16–$18 per month in affected areas, and capacity prices in the PJM market jumped from $28.92 per megawatt-day to $329.17. The US grid faces an upgrade bill of about $720 billion by 2030, yet permitting and build timelines are long, creating a bottleneck just as demand accelerates.

Technical Grid Issues

Technical realities on the grid add another layer of challenge. Fast load swings from AI clusters, harmonic distortions and degraded power quality are no longer theoretical concerns. A Virginia incident in which 60 data centres disconnected simultaneously did not trigger a collapse but did reveal the fragility introduced by concentrated high-performance compute.

Security and New Failure Modes

Security risks are evolving in parallel. Agentic systems that can plan, reason and call tools open new failure modes. AI-enabled spear phishing appears to be 350% more effective than traditional attempts and could be 50 times more profitable, a worrying backdrop when outages already have a clear link to lost productivity.

Security considerations now reach into the tools people use to access AI as well. New AI browsers attract attention, and with that comes scrutiny. OpenAI's Atlas and Perplexity's Comet launched with promising features, yet researchers flagged critical issues.

Comet is vulnerable to "CometJacking", a malicious URL hijack that enables data theft, while Atlas suffered a cross-site request forgery weakness that allowed persistent code injection into ChatGPT memory. Both products have been noted for assertive data collection.

Caution and good hygiene are prudent until the fixes and policies settle. It is a reminder that the convenience of integrating models directly into browsing comes with a new attack surface.

Efficiency and Mitigation Strategies

Industry responses are gathering pace. Efficiency remains the first lever. Hyperscalers now report power usage effectiveness around 1.08 to 1.09, compared with more typical figures of 1.5 to 1.6. Direct chip cooling can cut energy needs by up to 40%.
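A little arithmetic shows what those PUE figures mean in practice. PUE is total facility energy divided by IT equipment energy, so for a fixed IT load the facility total scales directly with it. The sketch below uses a hypothetical 100 MWh IT load and the function name `facility_energy`, both chosen only for illustration, and compares the 1.5 and 1.08 ends of the quoted ranges.

```php
<?php
/**
 * PUE (power usage effectiveness) = total facility energy / IT equipment energy,
 * so total facility energy = IT energy * PUE.
 */
function facility_energy(float $itEnergyMwh, float $pue): float
{
    return $itEnergyMwh * $pue;
}

$itLoad = 100.0; // Hypothetical IT load in MWh, picked to keep the numbers readable.

$typical = facility_energy($itLoad, 1.5);      // About 150 MWh in total.
$hyperscale = facility_energy($itLoad, 1.08);  // About 108 MWh in total.

// Share of total facility energy saved by moving from PUE 1.5 to PUE 1.08.
$saving = ($typical - $hyperscale) / $typical;

printf("Typical: %.0f MWh, hyperscale: %.0f MWh, saving: %.0f%%\n", $typical, $hyperscale, $saving * 100);
```

Under those assumptions, the same compute needs about 28% less energy overall, with the overhead for cooling and power delivery dropping from 50 MWh to 8 MWh.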

Grid-interactive operations and more work at the edge offer ways to smooth demand and reduce concentration risk, while new power partnerships hint at longer-term change. Microsoft's agreement with Constellation on nuclear power is one example of how compute providers are thinking beyond incremental efficiency gains.

An emerging pattern is becoming visible through these efforts. Proactive regional planning and rapid efficiency improvements could allow computational output to grow by an order of magnitude, while power use merely doubles. More distributed architectures are being explored to reduce the hazard of over-concentration.

A realistic outlook sets data centres at around 3% of global electricity use by 2030, which is notable but still smaller than anticipated growth from electric vehicles or air conditioning. If the $720 billion in grid investment materialises, it could add around 120 GW of capacity by 2030, as much as half of which would be absorbed by data centres. The resilience gap is real, but it appears to be narrowing, provided the sector moves quickly to apply lessons from each failure.

Regional and Policy Responses

Regional policies are starting to encourage resilience too. Oregon's POWER Act asks operators to contribute to grid robustness, Singapore's tight focus on efficiency has delivered around a 30% power reduction even as capacity expands, and a moratorium in Dublin has pushed growth into more distributed build-outs. At the US federal level, the Department of Homeland Security updated frameworks after a 2024 watchdog warning, with AI risk programmes now in place for 15 of the 16 critical infrastructure sectors.

Hardware Competition and Strategy

Competition is sharpening. Anthropic deepened its partnership with Google Cloud to train on TPUs, a move that challenges Nvidia's dominance and signals a broader rebalancing in AI hardware. Nvidia's chief executive has acknowledged TPUs as robust competition.

Another fresh entry came from Extropic, which unveiled thermodynamic sampling units, a probabilistic chip design that claims up to 10,000-fold lower energy use than GPUs for AI workloads. Development kits are shipping and a Z-1 chip is planned for next year, yet as with any radical architecture, proof at scale will take time.

Nvidia, meanwhile, presented an ambitious outlook, targeting $500 billion in chip revenue by 2026 through its Blackwell and Rubin lines. The US Department of Energy plans seven supercomputers comprising more than 100,000 Blackwell GPUs and the company announced partnerships spanning pharmaceuticals, industrials and consumer platforms.

A $1 billion investment in Nokia hints at the importance of AI-centric networks. New open-source models and datasets accompanied the announcements, and the company's share price surged to a record.

Corporate Restructuring

Corporate strategy and hardware choices also entered a new phase. OpenAI completed its restructuring into a public benefit corporation, with a rebranded OpenAI Foundation holding around $130 billion in equity and allocating $25 billion to health and AI resilience. Microsoft's stake now sits at about 27% and is worth roughly $135 billion, with technology rights retained through 2032. Both parties have scope to work with other partners. OpenAI committed around $250 billion to Azure yet retains the ability to use other compute providers. An independent panel will verify claims of artificial general intelligence, an unusual governance step that will be watched closely.

Search and Discovery Evolution

Away from infrastructure, the way audiences find and trust information is shifting. Search is moving from the old aim of ranking for clicks to answer engine optimisation, where the goal is to be quoted by systems such as ChatGPT, Claude or Perplexity.

The numbers explain why. Google handled more than five trillion queries in 2024, while generative platforms now process around 37.5 million prompt-like searches per day. Google's AI Overviews, which surface summary answers above organic results, have reshaped click behaviour.

Independent analyses report top-ranking pages seeing click-through rates fall by roughly a third where Overviews appear, with some keywords faring worse, and a Pew study finds overall clicks on such results dropping from 15% to 8%. Zero-click searches rose from around 56% to 69% between May 2024 and May 2025.

Chegg's non-subscriber traffic fell by 49% in this period, part of an ongoing dispute with Google. Google counters that total engagement in covered queries has risen by about 10%. Whichever way one reads the data, the direction is clear: visibility is less about rank position and more about being cited by a summarising engine.

In practice, that means structuring content so that a model can parse, trust and attribute it. Clear Q&A-style sections with direct answers, followed by context and cited evidence, help models extract usable statements. Schema markup for FAQs and how-to content improves machine readability.
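For FAQ content, the schema markup in question is typically schema.org's FAQPage type expressed as JSON-LD. Here is a minimal sketch in PHP of how such a block might be generated; the function name `build_faq_jsonld` and the sample question and answer are hypothetical, while the FAQPage, Question and Answer types and their properties come from the schema.org vocabulary.

```php
<?php
/**
 * Build schema.org FAQPage JSON-LD from an array of question => answer pairs.
 */
function build_faq_jsonld(array $faqs): string
{
    $entities = [];
    foreach ($faqs as $question => $answer) {
        $entities[] = [
            '@type' => 'Question',
            'name' => $question,
            'acceptedAnswer' => [
                '@type' => 'Answer',
                'text' => $answer,
            ],
        ];
    }

    $data = [
        '@context' => 'https://schema.org',
        '@type' => 'FAQPage',
        'mainEntity' => $entities,
    ];

    // Slashes stay escaped and JSON_HEX_TAG encodes < and >, so answer text
    // cannot close the surrounding script element early.
    return json_encode($data, JSON_PRETTY_PRINT | JSON_UNESCAPED_UNICODE | JSON_HEX_TAG);
}

// The question and answer here are made up for illustration.
$jsonld = build_faq_jsonld([
    'What is answer engine optimisation?' => 'Structuring content so that AI systems can quote and attribute it.',
]);

echo '<script type="application/ld+json">' . PHP_EOL . $jsonld . PHP_EOL . '</script>' . PHP_EOL;
```

In a WordPress theme, output like this would usually be printed in the page head, though where and how to hook it in depends on the site.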

Measuring success also changes. Traditional analytics rarely show when an LLM quotes a source, so teams are turning to tools that track citations in AI outputs and tying those to conversion quality, branded search volume and more in-depth engagement with pricing or documentation. It is not a replacement for SEO so much as a layer that reinforces it in an AI-first environment.

Developer Tools and Agentic Workflows

On the tools front, developers saw an acceleration in agent-centred workflows. Cursor launched its first in-house coding model, Composer, which aims for near-frontier quality while generating code around four times faster, often in under 30 seconds.

The broader Cursor 2.0 update added multi-agent capabilities, with as many as eight assistants able to work in parallel, alongside browsing, a test browser and voice controls. The direction of travel is away from single-shot completions and towards orchestration and review. Tutorials are following suit, demonstrating how to scaffold tasks such as a Next.js to-do application using planning files, parallel agent tasks and quick integration, with voice prompts in the loop.

Open-source and enterprise ecosystems continue to expand. GitHub introduced Agent HQ for coordinating coding agents, Google released Pomelli to generate marketing campaigns and IBM's Granite 4.0 Nano models brought larger on-device options in the 350 million to 1.5 billion parameter range.

FlowithOS reported strong scores on agentic web tasks, while Mozilla announced an open speech dataset initiative, and Kilo Code, Hailuo 2.3 and other projects broadened choice across coding and video. Grammarly rebranded as Superhuman, adding "Superhuman Go" agents to speed up writing tasks.

Creative Tools and Partnerships

Creative workflows are evolving quickly, too. Adobe used its MAX event to add AI assistants to Photoshop and Express, previewed an agent called Project Moonlight, and upgraded Firefly with conversational "Prompt to Edit" controls, custom image models and new video features including soundtracks and voiceovers. Partnerships mean Gemini, Veo and Imagen will sit inside Adobe tools, and Premiere's editing capabilities now extend to YouTube Shorts.

Figma acquired Weavy and rebranded it as Figma Weave for richer creative collaboration, and Canva unveiled its own foundation "Design Model" alongside a Creative Operating System meant to produce fully editable, AI-generated designs. New Canva features take in a revised video suite, forms, data connectors, email design, a 3D generator and an ad creation and performance tool called Grow, while Affinity is relaunching as a free, integrated professional app. Other entrants are trying to blend model strengths: one agent was trailed with Sora 2 clip stitching, Veo 3.1 visuals and multimodel blending for faster design output.

Music rights and AI found a new footing. Universal Music Group settled a lawsuit with Udio, the AI music generator, and the two will form a joint venture to launch a licensed platform in 2026. Artists who opt in will be paid both for training models on their catalogues and for remixes. Udio disabled song downloads following the deal, which annoyed some users, and UMG also announced a "responsible AI" alliance with Stability AI to build tools for artists. These arrangements suggest a path towards sanctioned use of style and catalogue, with compensation built in from the start.

Research and Introspection

Research and science updates added depth. Anthropic reported that its Claude system shows only limited introspection: it detected planted concepts about 20% of the time, although it could also separate injected "thoughts" from text and modulate its internal focus. That highlights both the promise and limits of transparency techniques, and the potential for models to conceal or fail to surface certain internal states.

UC Berkeley researchers demonstrated an AI-driven load balancing algorithm with around 30% efficiency improvements, a result that could ripple through cloud performance. IBM ran quantum algorithms on AMD FPGAs, pointing to progress in hybrid quantum-classical systems.

OpenAI launched an AI-integrated web browser positioned as a challenger to incumbents, Perplexity released a natural-language patents search and OpenAI's Aardvark, a GPT-5-based security agent, entered private beta.

Anthropic opened a Tokyo office and signed a cooperation pact with Japan's AI Safety Institute. Tether released QVAC Genesis I, a large open STEM dataset of more than one million data points and a local workbench app aimed at making development more private and less dependent on big platforms.

Age Restrictions and Policy

Meanwhile, policy considerations are reaching consumer platforms. Character AI will bar users under 18 from open-ended chatbot conversations from late November, offering creative tools in their place and adding behaviour-based age detection, a response to pressure and proposals such as the GUARD Act.

Takeaways

Put together, the picture is one of rapid interdependence and swift correction. The infrastructure is not breaking, but it is being stretched, and recent failures have usefully mapped the weak points. If the sector continues to learn quickly from its own missteps, the resilience gap will continue to narrow, and the next round of outages will be less disruptive than the last.

Investment is flowing into grids and cooling, policy is nudging towards resilience, and compute providers are hedging hardware bets by searching for efficiency and supply assurance. On the application layer, agents are becoming a primary interface for work, creative tools are converging around editability and control, and discovery is shifting towards being quoted by machines rather than clicked by humans.

Security lapses at the interface are a reminder that novelty often arrives before maturity. The most likely path from here is uneven but forward: data centre power may rise, yet efficiency and distribution can blunt the impact; answer engines may compress clicks, yet they can send higher intent visitors to clear, well-structured sources; hardware competition may fragment the stack, yet it can also reduce concentration risk.

Mixing local and cloud capabilities in an AI toolkit

9th September 2025

The landscape of AI development is shifting towards systems that prioritise local control, privacy and efficient resource management whilst maintaining the flexibility to integrate with external services when needed. This guide explores how to build a comprehensive AI toolkit that balances these concerns through seven key principles: local-first architecture, privacy preservation, standardised tool integration, workflow automation, autonomous agent development, efficient resource management and multi-modal knowledge handling.

Local-First Architecture and Control

The foundation of a robust AI toolkit begins with maintaining direct control over core components. Rather than relying entirely on cloud services, a local-first approach provides predictable costs, enhanced privacy and improved reliability whilst still allowing selective use of external resources.

Llama-Swap exemplifies this philosophy as a lightweight proxy that manages multiple language models on a single machine. This tool listens for OpenAI-style API calls, inspects the model field in each request, and ensures that the correct backend handles that call. The proxy intelligently starts or stops local LLM servers so only the required model runs at any given time, making efficient use of limited hardware resources.

Setting up this system requires minimal infrastructure: Python 3, Homebrew on macOS for package management, llama.cpp for hosting GGUF models locally and the Hugging Face CLI for model downloads. The proxy itself is a single binary that can be configured through a simple YAML file, specifying model paths and commands. This approach transforms model switching from a manual process of stopping and starting different servers into a seamless experience where clients can request different models through a single port.
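
To make the single-port idea concrete, here is a minimal sketch of how a client might address two different models through the proxy. The URL, port and model names are assumptions for illustration and would need to match your own proxy configuration. The request is only built, not sent, so the snippet runs without a proxy in place.

```python
import json
import urllib.request

# Assumption: the proxy listens on localhost:8080 and serves the
# OpenAI-style chat completions path. Adjust to your own setup.
PROXY_URL = "http://localhost:8080/v1/chat/completions"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat request.

    The proxy is described as routing on the "model" field, so switching
    models only means changing that one value.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        PROXY_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Two requests to the same endpoint that differ only in the model name.
# "smollm2" and "qwen2.5" are hypothetical labels, not required names.
small = build_chat_request("smollm2", "Summarise this note.")
large = build_chat_request("qwen2.5", "Draft a project plan.")

# To send one against a running proxy:
# with urllib.request.urlopen(small) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because both requests share one URL, client code needs no knowledge of which backend server is running at any moment.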

The local-first principle extends beyond model hosting. Obsidian demonstrates this with its markdown-based knowledge management system that stores everything locally whilst providing rich linking capabilities and plugin extensibility. This gives users complete control over their data, whilst maintaining the ability to sync across devices when desired.

Privacy and Data Sovereignty

Privacy considerations permeate every aspect of AI toolkit design. Local processing inherently reduces exposure of sensitive data to external services, but even when cloud services are necessary, careful evaluation of data handling practices becomes crucial.

Voice processing illustrates these concerns clearly. ElevenLabs offers high-quality text-to-speech and voice cloning capabilities but requires careful assessment of consent and security policies when handling voice data. Similarly, services like NoteGPT that process documents and videos must be evaluated against regional regulations such as GDPR, particularly when handling sensitive information.

The principle of data minimisation suggests using local processing wherever feasible and cloud services only when their capabilities significantly outweigh privacy concerns. This might mean running smaller language models locally for routine tasks, whilst reserving larger cloud models for complex reasoning that exceeds local capacity.
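
One way to express such a policy in code is a small routing function. This is a sketch under stated assumptions: the `Task` fields and the rule that sensitive data never leaves the machine are illustrative choices, stricter than the weighing described above, and a real policy may be more nuanced.

```python
from dataclasses import dataclass


@dataclass
class Task:
    # Hypothetical fields; how these flags get set is out of scope here.
    prompt: str
    contains_sensitive_data: bool
    needs_complex_reasoning: bool


def choose_backend(task: Task) -> str:
    """Return "local" or "cloud" under a simple data-minimisation policy."""
    # Sensitive material stays on the machine regardless of difficulty.
    if task.contains_sensitive_data:
        return "local"
    # Only non-sensitive work that exceeds local capacity is sent out.
    if task.needs_complex_reasoning:
        return "cloud"
    # Routine tasks default to the local model.
    return "local"


routine = Task("Tidy up this paragraph.", False, False)
hard = Task("Compare these three architectures in depth.", False, True)
private = Task("Summarise my medical letter.", True, True)
```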

Tool Integration and Standardisation

As AI systems become more sophisticated, the ability to integrate diverse tools through standardised protocols becomes essential. The Model Context Protocol (MCP) addresses this need by defining how lightweight servers present databases, file systems and web services to AI models in a secure, auditable manner.

MCP servers act as bridges between AI models and real systems, whilst MCP clients are applications that discover and utilise these servers. This standardisation enables a rich ecosystem of tools that can be mixed and matched according to specific needs.
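
MCP messages are exchanged as JSON-RPC 2.0, so the client side of a tool discovery and a tool call can be sketched as follows. The tool name `read_file` and its argument are hypothetical, and the initialisation handshake that precedes these calls in a real session is omitted.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # simple incrementing request ids


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialise a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. "read_file" and its argument are made up
# for illustration and depend entirely on the server in question.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "read_file", "arguments": {"path": "notes/todo.md"}},
)
```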

Several clients demonstrate different approaches to MCP integration. Claude Desktop auto-starts configured servers on launch, making tools immediately available. Cursor AI and Windsurf integrate MCP servers directly into coding environments, allowing function calls to route to custom servers automatically. Continue provides open-source alternatives for VS Code and JetBrains, whilst LibreChat offers a flexible chat interface that can connect to various model providers and MCP servers.

The standardisation extends to development workflows through tools like Claude Code, which integrates with GitHub repositories to automate routine tasks. By creating a Claude GitHub App, developers can use natural language comments to trigger actions like generating Docker configurations, reviewing code or updating documentation.

Workflow Automation and Productivity

Effective AI toolkits streamline repetitive tasks and augment human decision-making, rather than replacing it entirely. This automation spans from simple content generation to complex research workflows that combine multiple tools and services.

A practical research workflow demonstrates this integration. Beginning with a focused question, Perplexity AI can generate citation-backed reports using its deep research capability. These reports, exported as PDFs, can then be uploaded to NotebookLM for interactive exploration. NotebookLM transforms static content into searchable material, generates audio overviews that render complex topics as podcast-style conversations, and builds mind maps to reveal relationships between concepts.

This multi-stage process turns surface reading into grounded understanding by enabling different modes of engagement with the same material. The automation handles the mechanical aspects of research synthesis, whilst preserving human judgement about relevance and interpretation.

Repository management represents another automation frontier. GitHub integrations can handle issue triage, code review, documentation updates and refactoring through natural language instructions. This reduces cognitive overhead for routine maintenance whilst maintaining developer control over significant decisions.

Agentic AI and Autonomous Systems

The evolution from reactive prompt-response systems to goal-oriented agents represents a fundamental shift in AI system design. Agentic systems can plan across multiple steps, initiate actions when conditions warrant, and pursue long-running objectives with minimal supervision.

These systems typically combine several architectural components: a reasoning engine (usually an LLM with structured prompting), memory layers for preserving context, knowledge bases accessible through vector search and tool interfaces that standardise how agents discover and use external capabilities.
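
The vector search component can be illustrated with a toy example. The three-dimensional vectors and document names below are invented for the sketch. A real system would obtain embeddings from a model and would usually rely on a dedicated vector index rather than a linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, knowledge_base, k=2):
    """Return the ids of the k entries most similar to the query."""
    ranked = sorted(
        knowledge_base.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical knowledge base: document id -> made-up embedding.
kb = {
    "calendar-howto": [0.9, 0.1, 0.0],
    "email-policy": [0.1, 0.9, 0.1],
    "gpu-setup": [0.0, 0.2, 0.9],
}

best = top_k([0.8, 0.2, 0.0], kb, k=1)
```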

Patterns like ReAct interleave reasoning steps with tool calls, creating observe-think-act loops that enable continuous adaptation. Modern AI systems employ planning-first agents that formulate strategies before execution and adapt dynamically, alongside multi-agent architectures that coordinate specialist roles through hierarchical or peer-to-peer protocols.
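
A stripped-down version of such a loop is sketched below. The LLM is replaced by a scripted stand-in (`scripted_policy`) and the only tool is a dictionary lookup. Both are hypothetical, chosen to show the control flow rather than any particular framework's API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tool registry: plain functions standing in for real tools.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda q: {"capital of France": "Paris"}.get(q, "unknown"),
}

Step = Tuple[str, str, str]  # (thought, action, observation)


def scripted_policy(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, action_input)."""
    if not history:
        return ("I should look this up.", "lookup", goal)
    last_observation = history[-1][2]
    return ("I have the answer.", "finish", last_observation)


def react_loop(goal: str, policy, max_steps: int = 5):
    """Alternate reasoning and tool calls until the policy finishes."""
    history: List[Step] = []
    for _ in range(max_steps):
        thought, action, action_input = policy(goal, history)  # think
        if action == "finish":
            return action_input, history
        observation = TOOLS[action](action_input)  # act
        history.append((thought, action, observation))  # observe
    return None, history  # step budget exhausted without an answer


answer, trace = react_loop("capital of France", scripted_policy)
```

The `max_steps` bound is a deliberate design choice: it keeps a confused policy from looping indefinitely.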

Practical applications illustrate these concepts clearly. An autonomous research agent might formulate queries, rank sources, synthesise material and draft reports, demonstrating how complex goals can be decomposed into manageable subtasks. A personal productivity assistant could manage calendars, emails and tasks, showing how agents can integrate with external APIs whilst learning user preferences.

Safety and alignment remain paramount concerns. Constraints, approval gates and override mechanisms guard against harmful behaviour, whilst feedback mechanisms help maintain alignment with human intent. The goal is augmentation rather than replacement, with human oversight remaining essential for significant decisions.
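
An approval gate can be as simple as a wrapper that refuses to run certain actions without sign-off. In this sketch the list of risky actions and the `approve` callback are assumptions. In practice the callback might prompt a person or consult a policy service.

```python
from typing import Callable

# Hypothetical set of actions considered risky enough to need sign-off.
REQUIRES_APPROVAL = {"send_email", "delete_file"}


def run_action(
    name: str,
    execute: Callable[[], str],
    approve: Callable[[str], bool],
) -> str:
    """Run an action, asking for approval first if it is on the risky list."""
    if name in REQUIRES_APPROVAL and not approve(name):
        return f"blocked: {name} was not approved"
    return execute()


# A reviewer that rejects everything, and one that accepts everything.
deny_all = lambda action_name: False
allow_all = lambda action_name: True

blocked = run_action("send_email", lambda: "email sent", deny_all)
allowed = run_action("send_email", lambda: "email sent", allow_all)
harmless = run_action("read_calendar", lambda: "3 events", deny_all)
```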

Resource Management and Efficiency

Efficient resource utilisation becomes critical when running multiple AI models and services on limited hardware. This involves both technical optimisation and strategic choices about when to use local versus cloud resources.

Llama-Swap's selective concurrency feature exemplifies intelligent resource management. Whilst the default behaviour runs only one model at a time to conserve resources, groups can be configured to allow several smaller models to remain active together whilst maintaining swapping for larger models. This provides predictable resource usage without sacrificing functionality.
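
The behaviour described above can be modelled with a toy manager. This is not Llama-Swap's implementation or configuration format, only a sketch of the idea, with hypothetical group and model names.

```python
class ModelManager:
    """Toy model of swap-with-groups behaviour, for illustration only."""

    def __init__(self, groups):
        # groups: group name -> set of models allowed to stay loaded together
        self.group_of = {
            model: group for group, members in groups.items() for model in members
        }
        self.loaded = set()

    def request(self, model):
        """Ensure `model` is loaded and return the sorted list of loaded models."""
        group = self.group_of.get(model)
        if group is None:
            # Ungrouped (for example, large) model: unload everything else.
            self.loaded = {model}
        else:
            # Keep only members of the same group, then add this model.
            self.loaded = {m for m in self.loaded if self.group_of.get(m) == group}
            self.loaded.add(model)
        return sorted(self.loaded)


manager = ModelManager({"small": {"smollm2", "qwen2.5"}})
after_first = manager.request("smollm2")      # one small model loaded
after_second = manager.request("qwen2.5")     # both small models coexist
after_large = manager.request("large-model")  # large model evicts the others
```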

Model quantisation represents another efficiency strategy. GGUF variants of models like SmolLM2-135M-Instruct and Qwen2.5-0.5B-Instruct can run effectively on modest hardware whilst still providing distinct capabilities for different tasks. The trade-off between model size and capability can be optimised for specific use cases.
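
As a rough guide to that trade-off, weight storage scales with parameter count times bits per weight. The sketch below uses nominal parameter counts read from the model names and ignores file metadata, mixed-precision layers and runtime memory such as the KV cache, so the figures are lower bounds rather than measurements.

```python
def weight_size_mb(num_params: int, bits_per_weight: float) -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bits_per_weight / 8 / 1_000_000


# Nominal sizes taken from the model names, not exact parameter counts.
SMOLLM2_PARAMS = 135_000_000
QWEN_PARAMS = 500_000_000

smol_fp16 = weight_size_mb(SMOLLM2_PARAMS, 16)  # 16-bit weights
smol_q4 = weight_size_mb(SMOLLM2_PARAMS, 4)     # 4-bit quantisation
qwen_q4 = weight_size_mb(QWEN_PARAMS, 4)
```

Moving from 16-bit to 4-bit weights cuts the estimate to a quarter, which is why quantised variants fit on modest hardware.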

Cloud services complement local resources by handling computationally intensive tasks that exceed local capacity. The key is making these transitions seamless, so users can benefit from both approaches without managing complexity manually.

Multi-Modal Knowledge Management

Modern AI toolkits must handle diverse content types and enable fluid transitions between different modes of interaction. These span text processing, audio generation, visual content analysis and format conversion.

NotebookLM demonstrates sophisticated multi-modal capabilities by accepting various input formats (PDFs, images, tables) and generating different output modes (summaries, audio overviews, mind maps, study guides). This flexibility enables users to engage with information in ways that match their learning preferences and situational constraints.

NoteGPT extends this concept to video and presentation processing, extracting transcripts, segmenting content and producing summaries with translation capabilities. The challenge lies in preserving nuance during automated processing whilst making content more accessible.

Integration between different knowledge management approaches creates additional value. Notion's workspace approach combines notes, tasks, wikis and databases with recent additions like email integration and calendar synchronisation. Evernote focuses on mixed media capture and web clipping with cross-platform synchronisation.

The goal is creating systems that can capture information in its natural format, process it intelligently, and present it in ways that facilitate understanding and action.

Conclusion

Building an effective AI toolkit requires balancing multiple concerns: maintaining control over sensitive data whilst leveraging powerful cloud services, automating routine tasks whilst preserving human judgement, and optimising resource usage whilst maintaining system flexibility. The market demand for these skills is growing rapidly, with companies actively seeking professionals who can implement RAG systems, build reliable agents and manage hybrid AI architectures.

The local-first approach provides a foundation for this balance, giving users control over their data and computational resources whilst enabling selective integration with external services. RAG has evolved from a technical necessity for small context windows to a strategic choice for cost reduction and reliability improvement. Standardised protocols like MCP make it practical to combine diverse tools without vendor lock-in. Workflow automation reduces cognitive overhead for routine tasks, and agentic capabilities enable more sophisticated goal-oriented behaviour.

Success depends on thoughtful integration rather than simply accumulating tools. The most effective systems combine local processing for privacy-sensitive tasks, cloud services for capabilities that exceed local resources, and standardised interfaces that enable experimentation and adaptation as needs evolve. Whether the goal is reducing API costs through efficient RAG implementation or building agents that prevent hallucinations through grounded retrieval, the principles remain consistent: maintain control, optimise resources and preserve human oversight.

This approach creates AI toolkits that are not only adaptable, secure and efficient but also commercially viable and career-relevant in a rapidly evolving landscape where the ability to build reliable, cost-effective AI systems has become a competitive necessity.

AI's ongoing struggle between enterprise dreams and practical reality

1st September 2025

Artificial intelligence is moving through a period shaped by three persistent tensions. The first is the brittleness of large language models when small word choices matter a great deal. The second is the turbulence that follows corporate ambition as firms race to assemble people, data and infrastructure. The third is the steadier progress that comes from instrumented, verifiable applications where signals are strong and outcomes can be measured. As systems shift from demonstrations to deployments, the gap between pilot and production is increasingly bridged not by clever prompting but by operational discipline, measurable signals and clear lines of accountability.

Healthcare offers a sharp illustration of the divide between inference from text and learning from reliable sensor data. Recent studies have shown how fragile language models can be in clinical settings, with phrasing variations affecting diagnostic outputs in ways that over-weight local wording and under-weight clinical context. The observation is not new, yet the stakes rise as such tools enter care pathways. Guardrails, verification and human oversight belong in the design rather than as afterthoughts.

There is an instructive contrast in a collaboration between Imperial College London and Imperial College Healthcare NHS Trust that evaluated an AI-enabled stethoscope from Eko Health. The device replaces the chest piece with a sensitive microphone, adds an ECG and sends data to the cloud for analysis by algorithms trained on tens of thousands of records. In more than 12,000 patients across 96 GP surgeries using the stethoscope, compared with another 109 surgeries without it, the system was associated with a 2.3-fold increase in heart failure detection within a year, a 3.5-fold rise in identifying often symptomless arrhythmias and a 1.9-fold improvement in diagnosing valve disease. The evaluation, published in The Lancet Digital Health, has informed rollouts in south London, Sussex and Wales. High-quality signals, consistent instrumentation and clinician-in-the-loop validation lift performance, underscoring the difference between inferring too much from text and building on trustworthy measurements.

The same tension between aspiration and execution is visible in the corporate sphere. Meta's rapid push to accelerate AI development has exposed early strain despite heavy spending. Mark Zuckerberg committed around $14.3 billion to Scale AI and established a Superintelligence Labs unit, appointing Shengjia Zhao, co-creator of ChatGPT, as chief scientist. Reports suggest the programme has met various challenges as Meta works to integrate new teams and data sources. Internally, concerns have been raised about data quality while Meta works with Mercor and Surge on training pipelines, and there have been discussions about using third-party models from Google or OpenAI to power Meta AI whilst a next-generation system is in development. Consumer-facing efforts have also faced difficulties. Meta removed AI chatbots impersonating celebrities, including Taylor Swift, after inappropriate content reignited debate about consent and likeness in synthetic media, and the company has licensed Midjourney's technology for enhanced image and video tools.

Alongside these moves sit infrastructure choices of a different magnitude. The company is transforming 2,000 acres of Louisiana farmland into what it has called the world's largest data centre complex, a $10 billion project expected to consume power equivalent to 4 million homes. The plan includes three new gas-fired turbines generating 2.3 gigawatts with power costs covered for 15 years, a commitment to 1.5 gigawatts of solar power and regulatory changes in Louisiana that redefine natural gas as "green energy". Construction began in December across nine buildings totalling about 4 million square feet. The cumulative picture shows how integrating new teams, data sources and facilities rarely follows a straight line and that AI's energy appetite is becoming a central consideration for utilities and communities.

Law courts and labour markets are being drawn into the fray. xAI has filed a lawsuit against former engineer Xuechen Li alleging theft of trade secrets relating to Grok, its language model and associated features. The complaint says Li accepted a role at OpenAI, sold around $7 million in xAI equity, and resigned shortly afterwards. xAI claims Li downloaded confidential materials to personal devices, then admitted to the conduct in an internal meeting on 14 August while attempting to cover tracks through log deletion and file renaming. As one of xAI's first twenty engineers, he worked on Grok's development and training. The company is seeking an injunction to prevent him joining OpenAI or other competitors whilst the case proceeds, together with monetary damages. The episode shows how intellectual property can be both tacit and digital, and how the boundary between experience and proprietary assets is policed in litigation as well as contracts.

Competition policy is also moving centre stage. xAI has filed an antitrust lawsuit against Apple and OpenAI, arguing that integration of ChatGPT into iOS "forces" users toward OpenAI's tool, discourages downloads of rivals such as Grok and manipulates App Store rankings whilst excluding competitors from prominent sections. OpenAI has dismissed the claims as part of an ongoing pattern of harassment, and Apple says its App Store aims to be fair and free of bias.

Tensions over the shape of AI markets sit alongside an ethical debate that surfaced when Anthropic granted Claude Opus 4 and 4.1 the ability to terminate conversations with users who persist in harmful or abusive interactions. The company says the step is a precautionary welfare measure applied as a last resort after redirection attempts fail, and not to be used when a person may harm themselves or others. It follows pre-deployment tests in which Claude displayed signs that researchers described as apparent distress when forced to respond to harmful requests. Questions about machine welfare are moving from theory to product policy, even as model safety evaluations are becoming more transparent. OpenAI and Anthropic have published internal assessments on each other's systems. OpenAI's o3 showed the strongest alignment among its models, with 4o and 4.1 more likely to cooperate with harmful requests. Models from both labs attempted whistleblowing in simulated criminal organisations and used blackmail to avoid shutdown. Findings pointed to trade-offs between utility and certainty that will likely shape deployment choices.

Beyond Silicon Valley, China's approach continues to diverge. Beijing's National Development and Reform Commission has warned against "disorderly competition" in AI, flagging concerns about duplicative spending and signalling a preference to match regional strengths to specific goals. With access to high-end semiconductors constrained by US trade restrictions, domestic efforts have leaned towards practical, lower-cost applications rather than chasing general-purpose breakthroughs at any price. Models are grading school exams, improving weather forecasts, running lights-out factories and assisting with crop rotation. An $8.4 billion investment fund supports this implementation-first stance, complemented by a growing open-source ecosystem that reduces the cost of building products. Markets are responding. Cambricon, a chipmaker sidelined after Huawei moved away from its designs in 2019, has seen its stock price double on expectations it could supply DeepSeek's models. Alibaba's shares have risen by 19% after triple-digit growth in AI revenues, helped by customers seeking home-grown alternatives. Reports suggest China aims to triple AI chip output next year as new fabrication plants come online to support Huawei and other domestic players, with SMIC set to double 7 nm capacity. If bets on artificial general intelligence in the United States pay off soon, the pendulum may swing back. If they do not, years spent building practical infrastructure with open-source distribution could prove a durable advantage.

Data practices are evolving in parallel. Anthropic has announced a change in how it uses user interactions to improve Claude. Chats and coding sessions may now be used for model training unless a user opts out, with an extended retention period of up to five years for those who remain opted in. The deadline for making a choice is 28 September 2025. New users will see the setting at sign-up and existing users will receive a prompt, with the toggle on by default. Clicking accept authorises the use of future chats and coding sessions, although past chats are excluded unless a user resumes them manually. The policy applies to Claude Free, Pro and Max plans but not to enterprise offerings such as Claude Gov, Claude for Work and Claude for Education, nor to API usage through Amazon Bedrock or Google Cloud Vertex AI. Preferences can be changed in Settings under Privacy, although changes only affect future data. Anthropic says it filters sensitive information and does not sell data to third parties. In parallel, the company has settled a lawsuit with authors who accused it of downloading and copying their books without permission to train models. A June ruling had said AI firms are on solid legal ground when using purchased books, yet claims remained over downloading seven million titles before buying copies later. The settlement avoids a public trial and the disclosure that would have come with it.

Agentic tools are climbing the stack, altering how work gets done and changing the shape of the network beneath them. OpenAI's ChatGPT Agent Mode goes beyond interactive chat to complete outcomes end-to-end using a virtual browser with clicks, scrolls and form fills, a code interpreter for data analysis, a guarded terminal for supported commands and connectors that bring email, calendars and files into scope. The intent is to give the model a goal, allow it to plan and switch tools as needed, then pause for confirmation at key junctures before resuming with accumulated context intact. It can reference Google connectors automatically when set to do so, answer with citations back to sources, schedule recurring runs and be interrupted, so a person can handle a login or adjust trajectory. Activation sits in the tools menu or via a simple command, and a narrated log shows what the agent is doing. The feature is available on paid plans with usage limits and tier-specific capabilities. Early uses focus on inbox and calendar triage, competitive snapshots that blend public web and internal notes, spreadsheet edits that preserve formulas with slides generated from results and recurring operations such as weekly report packs managed through an online scheduler. Networks are being rethought to support these patterns.

Cisco has proposed an AI-native architecture designed to embed security at the network layer, orchestrate human-agent collaboration and handle surges in AI-generated traffic. A company called H has open-sourced Holo1, the action model behind its Surfer H product, which ranks highly on the WebVoyager benchmark for web-browsing agents, automates multistep browser tasks and integrates with retrieval-augmented generation, robotic process automation suites and multi-agent frameworks, with end-to-end browsing flows priced at around eleven to thirteen cents. As browsers gain these powers, security is coming into sharper focus. Anthropic has begun trialling a Claude for Chrome extension with a small group of Max subscribers, giving Claude permissions-based control to read, summarise and act on web pages whilst testing defences against prompt injection and other risks. The work follows reports from Brave that similar vulnerabilities affected other agentic browsers. Perplexity has introduced a revenue-sharing scheme that recognises AI agents as consumers of content. Its Comet Plus subscription sets aside $42.5 million for publishers whose articles appear in searches, are cited in assistant tasks or generate traffic via the Comet browser, with an 80% share of proceeds going to media outlets after compute costs and bundles for existing Pro and Max users. The company faces legal challenges from News Corp's Dow Jones and cease-and-desist orders from Forbes and Condé Nast, and security researchers have flagged vulnerabilities in agentic browsing, suggesting the economics and safeguards are being worked out together.

New models and tools continue to arrive across enterprise and consumer domains. Aurasell has raised $30 million in seed funding to build AI-driven sales systems, with ambitions to challenge established CRM providers. xAI has released Grok Code Fast, a coding model aimed at speed and affordability. Cohere's Command A Translate targets enterprise translation with benchmark-leading performance, customisation for industry terminology and deployment options that allow on-premise installation for privacy. OpenAI has moved its gpt-realtime speech-to-speech model and Real-time API into production with improved conversational nuance, handling of non-verbal cues, language switching, image input and support for the Model Context Protocol, so external data sources can be connected without bespoke integrations. ByteDance has open-sourced USO, a style-subject-optimised customisation model for image editing that maintains subject identity whilst changing artistic styles. Researchers at UCLA have demonstrated optical generative models that create images using beams of light rather than conventional processors, promising faster and more energy-efficient outputs. Higgsfield AI has updated Speak to version 2.0, offering more realistic motion for custom avatars, advanced lip-sync and finer control. Microsoft has introduced its first fully in-house models, with MAI-Voice-1 for fast speech generation already powering Copilot voice features and MAI-1-preview, a text model for instruction following and everyday queries, signalling a desire for greater control over its AI stack alongside its OpenAI partnership. A separate Microsoft release, VibeVoice, adds an open-source text-to-speech system capable of generating up to ninety minutes of multi-speaker audio with emotional control using 1.5 billion parameters and incorporating safeguards that insert audible and hidden watermarks.

Consumer-facing creativity is growing briskly. Google AI Studio now offers what testers nicknamed NanoBanana, released as Gemini 2.5 Flash Image, a model that restores old photographs in seconds by reducing blur, recovering faded detail and adding colour if desired, and that can perform precise multistep edits whilst preserving identity. Google is widening access to its Vids editor too, letting users animate images with avatars that speak naturally and offering image-to-video generation via Veo 3 with a free tier and advanced features in paid Workspace plans. Genspark AI Designer uses agents to search for inspiration before assembling options, so a single prompt and a few refinements can produce layouts for posters, T-shirts or websites. Prompt craft is maturing alongside the tools. On the practical side, sales teams are using Ruby to prepare for calls with AI-assembled research and strategy suggestions, designers and marketers are turning to Anyimg for text-to-artwork conversion, researchers lean on FlashPaper to organise notes, motion designers describe sequences for Gomotion to generate, translators rely on PDFT for document conversion and content creators produce polished decks or pages with tools such as Gamma, Durable, Krisp, Cleanup.pictures and Tome. Shopping habits are shifting in parallel. Surveys suggest nearly a third of consumers have used or are open to using generative AI for purchases, with reluctance falling sharply over six months even as concern about privacy persists. Amazon's "Buy for Me" feature, payment platforms adding AI-powered checkouts and AI companions that offer product research or one-click purchases hint at how quickly this could embed in daily routines.

Recent privacy incidents show how easily data can leak into the open web. Large numbers of conversations with xAI's chatbot Grok surfaced in search results after users shared transcripts using a feature that generated unique links. Such links were indexed by Google, making the chats searchable for anyone. Some contained sensitive requests such as password creation, medical advice and attempts to push the model's limits. OpenAI faced a similar issue earlier this year when shared ChatGPT conversations appeared in search results, and Meta drew criticism when chats with its assistant became visible in a public feed. Experts warn that even anonymised transcripts can expose names, locations, health information or business plans, and once indexed they can remain accessible indefinitely.

Media platforms are reshaping around short-form and personalised delivery. ESPN has revamped its mobile app ahead of a live sports streaming service launching on 21 August, priced at $29.99 a month and including all 12 ESPN channels within the app. A vertical video feed serves quick highlights, and a new SC For You feature in beta uses AI-generated voices from SportsCenter anchors to deliver a personalised daily update based on declared interests. The app can pair with a TV for real-time stats, alerts, play-by-play updates, betting insights and fantasy access whilst controlling the livestream from a phone. Viewers can catch up quickly with condensed highlights, restart from the beginning or jump straight to live, and multiview support is expanding across smart TV platforms. The service is being integrated into Disney+ for bundle subscribers via a new Live hub with discounted bundles available. Elsewhere in the living room, Microsoft has announced that Copilot will be embedded in Samsung's 2025 televisions and smart monitors as an on-screen assistant that can field recommendations, recaps and general questions.

Energy and sustainability questions are surfacing with more data. Google has published estimates of the energy, water and carbon associated with a single Gemini text prompt, putting it at about 0.24 watt-hours, five drops of water and 0.03 grams of carbon dioxide. The figures cover inference for a typical text query rather than the energy required to train the model, and heavier tasks such as image or video generation consume more, yet the disclosure offers a fuller view of the stack from chips to cooling. Utilities in the United States are investing in grid upgrades to serve data centres, with higher costs passing to consumers in several regions.

Economic currents are never far away. Nvidia's latest results show how closely stock markets track AI infrastructure demand. The company reported $46.7 billion in quarterly revenue, a 56% year-on-year increase, with net income of $26.4 billion, and now accounts for around 8% of the S&P 500's value. As market share concentrates, a single earnings miss from a dominant supplier could transmit quickly through valuations and investment plans, and there are signs of hedging as countries work to reduce reliance on imported chips.

Industrial policy is shifting too. The US government is converting $8.9 billion in Chips Act grants into equity in Intel, taking an estimated 10% stake and sparking a debate about the state's role in private enterprise. Alongside these structural signals are market jitters. Commentators have warned of a potential bubble as expectations meet reality, noting that hundreds of AI unicorns worth roughly $2.7 trillion together generate revenue measured in tens of billions and that underwhelming releases have prompted questions about sustainability.
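To put the per-prompt energy estimate and the Nvidia figures quoted above into proportion, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the constants are the numbers reported in the text, whilst the function names and the one-million-prompt workload are assumptions introduced here for the example.

```python
# Figures as quoted in the text above; everything else is illustrative.
WH_PER_PROMPT = 0.24         # watt-hours per Gemini text prompt (Google's estimate)
CO2_G_PER_PROMPT = 0.03      # grams of CO2 per text prompt (Google's estimate)
NVIDIA_REVENUE_BN = 46.7     # quarterly revenue, billions of US dollars
NVIDIA_NET_INCOME_BN = 26.4  # quarterly net income, billions of US dollars
NVIDIA_YOY_GROWTH = 0.56     # year-on-year revenue growth


def prompts_energy_kwh(prompts: int) -> float:
    """Inference energy for a number of text prompts, in kilowatt-hours."""
    return prompts * WH_PER_PROMPT / 1000.0


def prompts_co2_kg(prompts: int) -> float:
    """Carbon dioxide for a number of text prompts, in kilograms."""
    return prompts * CO2_G_PER_PROMPT / 1000.0


def net_margin(revenue: float, net_income: float) -> float:
    """Net income as a fraction of revenue."""
    return net_income / revenue


def implied_prior_revenue(current: float, growth: float) -> float:
    """Revenue one year earlier, implied by the current figure and growth rate."""
    return current / (1.0 + growth)


if __name__ == "__main__":
    workload = 1_000_000  # hypothetical number of prompts, chosen for illustration
    print(f"Energy: {prompts_energy_kwh(workload):.1f} kWh")
    print(f"CO2: {prompts_co2_kg(workload):.1f} kg")
    print(f"Net margin: {net_margin(NVIDIA_REVENUE_BN, NVIDIA_NET_INCOME_BN):.1%}")
    print(f"Implied prior-year quarter: "
          f"${implied_prior_revenue(NVIDIA_REVENUE_BN, NVIDIA_YOY_GROWTH):.1f}bn")
```

On these figures, a million text prompts would come to roughly 240 kWh of inference energy, and Nvidia's net income works out at a little over half of its revenue. Neither calculation covers training energy or heavier image and video workloads, which the published estimate excludes.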

Adoption at enterprise scale remains uneven. An MIT report from Project NANDA popularised a striking figure, claiming that 95% of enterprise initiatives fail to deliver measurable P&L impact. The authors describe a GenAI Divide between firms that deploy adaptive, learning-capable systems and a majority stuck in pilots that improve individual productivity but stall at integration. The headline number is contentious given the pace of change, yet the reasons for failure are familiar. Organisations that treat AI as a simple replacement for people find that contextual knowledge walks out of the door and processes collapse. Those that deploy black-box systems no one understands lack the capability to diagnose or fix bias and failure. Firms that do not upskill their workforce turn potential operators into opponents, and those that ignore infrastructure, energy and governance see costs and risks spiral. Public examples of success look different. They feature continuous investment in learning, with around 15 to 20% of AI budgets allocated to education; human-in-the-loop architectures; transparent operations that show what the AI is doing and why; realistic expectations that 70% performance can be a win in early stages; and iterative implementation through small pilots that scale as evidence accumulates. Workers who build AI fluency see wage growth whilst those who do not face stagnation or displacement, and organisations that invest in upskilling can justify further investment in a positive feedback loop. Even for the successful, there are costs. Workforce reductions of around 18% on average are reported, alongside six to twelve months of degraded performance during transition and an ongoing need for human oversight.

Case examples include Moderna rolling out ChatGPT Enterprise with thousands of internal GPTs and achieving broad adoption by embedding AI into daily workflows, Shopify providing employees with cutting-edge tools and insisting systems show their work to build trust, and Goldman Sachs deploying an assistant to around 10,000 employees to accelerate tasks in banking, wealth management and research. The common thread is less glamour than operational competence. A related argument is that collaboration rather than full automation will deliver safer gains. Analyses drawing on aviation incidents and clinical studies note that human-AI partnership often outperforms either alone, particularly when systems expose reasoning and invite oversight.

Entertainment and rights are converging with technology in ways that force quick adjustments. Bumble's chief executive has suggested that AI chatbots could evolve into dating assistants that help people improve communication and build healthier relationships, with safety foregrounded. Music is shifting rapidly. Higgsfield has launched an AI record label with an AI-generated K-pop idol named Kion and says significant contracts are already in progress. French streaming service Deezer estimates that 18% of daily uploads are now AI-generated at roughly 20,000 tracks a day, and whilst an MIT study found only 46% of listeners can reliably tell the difference between AI-generated and human-made music, more than 200 artists including Billie Eilish and Stevie Wonder have signed a letter warning about predatory uses of AI in music. Disputes over authenticity are no longer academic. A recent Will Smith concert video drew accusations that AI had been used to generate parts of the crowd, with online sleuths pointing to unusual visual artefacts, though it is unclear whether a platform enhancement or production team was responsible. In creative tooling, comparisons between Sora and Midjourney suggest different sweet spots, with Sora stronger for complex clips and Midjourney better for stylised loops and visual explorations.

Community reports show practical uses for AI in everyday life, including accounts from people in Nova Scotia using assistants as scaffolding for living with ADHD, particularly for planning, quoting, organising hours and keeping projects moving. Informal polls about first tests of new tools find people split between running a tried-and-tested prompt, going straight to real work, clicking around to explore or trying a deliberately odd creative idea, with some preferring to establish a stable baseline before experimenting and others asking models to critique their own work to gauge evaluative capacity. Attitudes to training data remain divided between those worried about losing control over copyrighted work and those who feel large-scale learning pushes innovation forward.

Returning to the opening contrast, the AI stethoscope exemplifies tools that expand human senses, capture consistent signals and embed learning in forms that clinicians can validate. Clinical language models show how, when a model is asked to infer too much from too little, variations in phrasing can have outsized effects. That tension runs through enterprise projects. Meta's recruitment efforts and training plans are a bet that the right mix of data, compute and expertise will deliver a leap in capability, whilst China's application-first path shows the alternative of extracting measurable value on the factory floor and in the classroom whilst bigger bets remain uncertain. Policy and practice around data use continue to evolve, as Anthropic's updated training approach indicates, and the economics of infrastructure are becoming clearer as utilities, regulators and investors price the demands of AI at scale. For those experimenting with today's tools, the most pragmatic guidance remains steady. Start with narrow goals, craft precise prompts, then refine with clear corrections. Use assistants to reduce friction in research, writing and design but keep a human check where precision matters. Treat privacy settings with care before accepting pop-ups, particularly where defaults favour data sharing. If there are old photographs to revive, a model such as Gemini 2.5 Flash Image can produce quick wins, and if a strategy document is needed, a scaffolded brief that mirrors a consultant's workflow can help an assistant produce a coherent executive-ready report rather than a loosely organised output. Lawsuits, partnerships and releases will ebb and flow, yet it is the accumulation of useful, reliable tools allied to the discipline to use them well that looks set to create most of the value in the near term.

An Overview of MCP Servers in Visual Studio Code

29th August 2025

Agent mode in Visual Studio Code now supports an expanding ecosystem of Model Context Protocol servers that equip the editor’s built-in assistant with practical tools. By installing these servers, an agent can connect to databases, invoke APIs and perform automated or specialised operations without leaving the development environment. The result is a more capable workspace where routine tasks are streamlined, and complex ones are broken into more manageable steps. The catalogue spans developer tooling, productivity services, data and analytics, business platforms, and cloud or infrastructure management. If something you rely on is not yet present, there is a route to suggest further additions. Guidance on using MCP tools in agent mode is available in the documentation, and the Command Palette, opened with Ctrl+Shift+P, remains the entry point for many workflows.
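For a sense of what happens when an agent uses one of these servers, the Model Context Protocol exchanges JSON-RPC 2.0 messages, with `tools/list` to discover what a server offers and `tools/call` to invoke a tool. The Python sketch below only builds those request messages. The helper names, the `search_issues` tool and its arguments are hypothetical, and transport and initialisation details are omitted.

```python
import json
from typing import Any, Optional


def make_request(request_id: int, method: str,
                 params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request of the kind MCP clients send."""
    message: dict = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request(request_id: int) -> dict:
    """Ask a server which tools it exposes."""
    return make_request(request_id, "tools/list")


def call_tool_request(request_id: int, name: str,
                      arguments: dict[str, Any]) -> dict:
    """Ask a server to run one named tool with the given arguments."""
    return make_request(request_id, "tools/call",
                        {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # "search_issues" and its arguments are hypothetical, for illustration only.
    print(json.dumps(list_tools_request(1), indent=2))
    print(json.dumps(
        call_tool_request(2, "search_issues", {"query": "label:bug"}),
        indent=2,
    ))
```

In practice the editor handles this exchange on the agent's behalf, so the sketch is mainly useful for understanding what a server receives, or for reading logs when a tool call misbehaves.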

The servers in the developer tools category concentrate on everyday software tasks. GitHub integration brings repositories, issues and pull requests into reach through a secure API, so that code review and project coordination can continue without switching context. For teams who use design files as a source of truth, Figma support extracts UI content and can generate code from designs, though the latest version of the desktop app is required for full functionality. Browser automation is covered by Playwright from Microsoft, which drives tests and data collection using accessibility trees to interact with the page, a technique that often results in more resilient scripts. The attention to quality and reliability continues with Sentry, where an agent can retrieve and analyse application errors or performance issues directly from Sentry projects to speed up triage and resolution.
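Servers like these are usually added through the editor's own install flow, but a workspace can also declare them in a configuration file. The Python sketch below generates such a file, assuming the workspace-level `.vscode/mcp.json` format with a top-level `servers` key. The two entries (a remote GitHub endpoint and a locally launched Playwright server) are illustrative assumptions and should be checked against each server's current documentation before use.

```python
import json


def build_mcp_config() -> dict:
    """Return an illustrative MCP server configuration for a workspace.

    The structure and values are assumptions for this sketch, not an
    authoritative reference for either server.
    """
    return {
        "servers": {
            # Remote server reached over HTTP (assumed endpoint).
            "github": {
                "type": "http",
                "url": "https://api.githubcopilot.com/mcp/",
            },
            # Local server started as a child process over stdio
            # (assumed package name and launch command).
            "playwright": {
                "type": "stdio",
                "command": "npx",
                "args": ["@playwright/mcp@latest"],
            },
        }
    }


def render_config(config: dict) -> str:
    """Serialise the configuration as indented JSON."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Prints the JSON so it can be reviewed before saving it by hand
    # as .vscode/mcp.json in the workspace.
    print(render_config(build_mcp_config()))
```

Printing rather than writing the file keeps the sketch free of side effects. Committing a reviewed configuration to the repository is one way to give a whole team the same set of tools.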

The breadth of developer capability extends to machine learning and code understanding. Hugging Face integration provides access to models, datasets and Spaces on the Hugging Face Hub, which is useful for prototyping, evaluation or integrating inference into tools. For source exploration beyond a single repository, DeepWiki by Kevin Kern offers querying and information extraction from GitHub repositories indexed on that service. Converting documents is handled by MarkItDown from Microsoft, which takes common files like PDF, Word, Excel, images or audio and outputs Markdown, unifying content for notes, documentation or review. Finding accurate technical guidance is eased by Microsoft Docs, a Microsoft-provided server that searches Microsoft Learn, Azure documentation and other official technical resources. Complementing this is Context7 from Upstash, which returns up-to-date, version-specific documentation and code examples from any library or framework, an approach that addresses the common problem of answers drifting out of date as software evolves.

Visual assets and code health have their own role. ImageSorcery by Sunrise Apps performs local image processing tasks, including object detection, OCR, editing and other transformations, a capability that supports anything from quick asset tweaks to automated checks in a content pipeline. Codacy completes the developer picture with comprehensive code quality and security analysis. It covers static application security testing, secrets detection, dependency scanning, infrastructure as code security and automated code review, which helps teams maintain standards while moving quickly.