TOPIC: FILE TRANSFER PROTOCOL

Running cron jobs using the www-data system account

22nd December 2018

When you set up your own web server or use a private server (virtual or physical), you will find that the web server runs under the www-data account (at least on Debian and Ubuntu). That means that website files need to be accessible to that system account, if not owned by it. Ownership is mandatory if you want WordPress to be able to update itself without needing FTP details.

It also means that you probably need scheduled jobs to run with the privileges of the www-data account. For instance, I use WP-CLI to automate spam removal and updates to plugins, themes and WordPress itself. Spam removal can be done without the www-data account, but the updates need file access and cannot be completed without it. Therefore, I became interested in setting up cron jobs to run under that account, and the following command makes that possible:

sudo -u www-data crontab -e

For that to work, your own account needs to be listed in /etc/sudoers or be a member of the sudo group in /etc/group. If either is the case, entering your own password will open the crontab for www-data, which can then be edited as for any other account. Saving the file and closing the editor will update cron with the new job details.

In fact, the same approach works for a variety of commands where files can only be accessed by www-data. This includes copying, pasting and deleting files as well as executing WP-CLI commands. WP-CLI issues a striking message if you run a command as root, a pervasive temptation given what that account allows. Any alternative to running as root has to be better from a security standpoint.
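The `sudo -u www-data` prefix can also be wrapped in a small helper for interactive use. The Python sketch below is a minimal illustration, assuming WP-CLI is installed as `wp` on the PATH and WordPress lives in `/var/www/html` (both assumptions); the helper names `as_user`, `wp` and `update_everything` are hypothetical. It builds argument lists rather than shell strings, so nothing gets re-parsed by a shell.

```python
import subprocess

# Hypothetical location of the WordPress installation; adjust to suit.
WP_PATH = "/var/www/html"


def as_user(cmd, user="www-data"):
    """Prefix a command (a list of arguments) so that sudo runs it as `user`."""
    return ["sudo", "-u", user, *cmd]


def wp(*args):
    """Build a WP-CLI command that targets the installation at WP_PATH."""
    return ["wp", f"--path={WP_PATH}", *args]


def update_everything(dry_run=True):
    """Update core, plugins and themes as www-data; only print them when dry_run."""
    commands = [
        as_user(wp("core", "update")),
        as_user(wp("plugin", "update", "--all")),
        as_user(wp("theme", "update", "--all")),
    ]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first update that fails
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    update_everything(dry_run=True)
```

Inside www-data's own crontab, opened with the command above, jobs already run as that account, so entries need no sudo prefix; for example, assuming WP-CLI sits at /usr/local/bin/wp, a line such as `0 4 * * * /usr/local/bin/wp --path=/var/www/html plugin update --all` would do.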

Killing a hanging SSH session

20th April 2018

My web hosting provider offers SSH access that I often use for such things as updating Matomo and Drupal, together with more intensive file moving than an FTP session can support. However, I have found recently that I can no longer exit cleanly from such sessions using the exit command.

Because this produces a locked terminal session, I was keen to find an alternative to shutting down the terminal application before starting it again. Handily, there is a keyboard shortcut that does just what I need.

It varies a little according to the keyboard that you have. Essentially, you press the Return (carriage return) key, then the tilde (~), then the period (.), one after the other rather than all at once. On some keyboard layouts, the tilde needs to be produced by combining the Shift and backtick keys, but that is not needed on mine. Until SSH sessions start exiting as expected again, the <CR>~. sequence does what I need.
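Why the Return key matters: the OpenSSH client only treats the tilde as an escape character when it follows a newline (or comes at the very start of the session). The Python sketch below models just that rule for the `~.` disconnect sequence and for `~~`, which sends a literal tilde; it is an illustrative simplification under those assumptions, not OpenSSH's actual code, and the function name is hypothetical.

```python
def finds_disconnect(keystrokes, escape_char="~"):
    """Return the index of the '.' that would end the session, or None.

    Models the rule that the escape character is only recognised
    immediately after a newline, or at the very start of the session.
    """
    at_line_start = True    # the start of a session counts as a new line
    pending_escape = False  # True just after an escape char at line start
    for i, ch in enumerate(keystrokes):
        if pending_escape:
            pending_escape = False
            if ch == ".":
                return i  # ~. : terminate the connection
            if ch == escape_char:
                # ~~ sends a single literal tilde to the remote side
                at_line_start = False
                continue
            # any other character is treated as ordinary input here
        elif at_line_start and ch == escape_char:
            pending_escape = True
            continue
        at_line_start = ch in "\r\n"
    return None
```

So `finds_disconnect("ls\r~.")` reports a disconnect, whereas `finds_disconnect("ls~.")` does not: typed mid-line, the tilde and period are simply sent to the remote shell. The escape character itself can be changed with ssh's `-e` option or the `EscapeChar` configuration setting.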

Turning off seccomp sandbox in vsftpd

21st September 2013

Within the last week, I set up a virtual web server using Arch Linux to satisfy my own curiosity, since the DIY nature of Arch means that you can build up exactly what you need without having any real constraints put upon you. It didn't surprise me that this took more work than the virtual server that I created using Ubuntu Server, yet I didn't expect Proftpd to be missing from the main repositories. Though the package can be found in the AUR, I didn't fancy the prospect of creating more work for myself, so I went with vsftpd (Very Secure FTP Daemon) instead. In contrast to Proftpd, this is available in the standard repositories and there is a guide to its use in the Arch user documentation.

However, while vsftpd worked well just after installation, connections to the virtual server's FTP service soon began to fail, with FileZilla issuing uninformative messages. In fact, it was the standard command line FTP client on my Ubuntu machine that was more revealing. It issued the following message, which led me to the cause after I enlisted the help of Google:

500 OOPS: priv_sock_get_cmd

Version 3.0 of vsftpd introduced a new feature, and it appears that this has caused problems for a few people. That feature is the seccomp sandbox, and it can be turned off by adding the following line to /etc/vsftpd.conf:

seccomp_sandbox=NO

That solved my problem, and version 3.0.2 of vsftpd should address the issue with seccomp sandboxing anyway. Even so, turning off the feature needs to remain an option in case that fix isn't as robust as it should be, for example because seccomp is not supported by the Linux kernel that you are using.
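If you would rather script the change, say when provisioning several servers, the Python sketch below sets an option in the configuration text, replacing an existing line for that key (whether active or commented out) or appending one if none exists. The function name is hypothetical, and it assumes vsftpd's plain `key=value` format with `#` comments.

```python
import re


def set_vsftpd_option(conf_text, key, value):
    """Return conf_text with `key=value` set exactly once.

    The first line for the key, active or commented out with '#', is
    replaced in place; any later lines for the same key are dropped.
    If the key is absent, the setting is appended at the end.
    """
    pattern = re.compile(rf"^\s*#?\s*{re.escape(key)}\s*=.*$")
    out, replaced = [], False
    for line in conf_text.splitlines():
        if pattern.match(line):
            if not replaced:
                out.append(f"{key}={value}")
                replaced = True
            continue  # skip duplicates of the same key
        out.append(line)
    if not replaced:
        out.append(f"{key}={value}")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # In practice: read /etc/vsftpd.conf, write the result back as root,
    # then restart vsftpd.
    sample = "anonymous_enable=NO\nlocal_enable=YES\n"
    print(set_vsftpd_option(sample, "seccomp_sandbox", "NO"), end="")
```

Because it is idempotent, running it twice leaves the file unchanged. vsftpd still needs restarting afterwards (for example with `systemctl restart vsftpd` on a systemd-based system such as Arch).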

Recursive FTP with the command line

6th August 2008

Here's a piece of Linux/UNIX shell scripting code that will do a recursive FTP refresh of a website for you:

lftp <<EndFTP >>~/Tmp/log_file.tmp 2>>~/Tmp/log_file.tmp

open ${HOSTNAME}

user ${USER} ${PSSWD}

mirror -R -vvv "${REP_SRC}" "${REP_DEST}"

EndFTP

When my normal FTP scripting approach left me with a broken WordPress installation and an invalid ticket in the project's Trac system that I had to close, I went looking for a more robust way of achieving website updates, and that led me to seek out FTP transfer options that explicitly involve directory recursion. The key pieces in the code above are the use of lftp in place of ftp, my more usual tool for the job, and the invocation of the mirror command that comes with lftp. The -R switch reverses the mirror so that files go from local to remote (remote to local is the default), and -vvv turns on maximum verbosity, which is very useful when a transfer takes longer than you would expect. It's all much slicker than writing your own script to do the legwork of ploughing through the directory structure and ensuring that the recursive transfers take place. That said, a one-line variant of the above is possible, but the way that I have set things up might be more familiar to users of ftp.
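The one-line variant can be assembled from lftp's `-u user,password` and `-e commands` options, with a trailing `quit` so that lftp exits once the mirror finishes instead of waiting at its prompt. The Python sketch below builds that invocation as an argument list and runs it only when asked; the function names and example values are hypothetical. Bear in mind that a password on the command line is visible to other local users via `ps`, and lftp can instead pick up credentials from `~/.netrc`.

```python
import subprocess


def lftp_mirror_command(host, user, password, local_dir, remote_dir):
    """Build a one-shot lftp invocation that mirrors local_dir up to remote_dir."""
    for path in (local_dir, remote_dir):
        if '"' in path:
            raise ValueError("paths containing double quotes are not handled here")
    # -R reverses the mirror (local -> remote); -vvv gives maximum verbosity;
    # the trailing quit makes lftp exit instead of staying at its prompt.
    script = f'mirror -R -vvv "{local_dir}" "{remote_dir}"; quit'
    return ["lftp", "-u", f"{user},{password}", "-e", script, host]


def run_mirror(cmd, log_path):
    """Run the command, appending both stdout and stderr to log_path."""
    with open(log_path, "a") as log:
        return subprocess.run(cmd, stdout=log, stderr=subprocess.STDOUT).returncode
```

From the shell, the same thing using the script's variables would be `lftp -u "${USER},${PSSWD}" -e "mirror -R -vvv \"${REP_SRC}\" \"${REP_DEST}\"; quit" "${HOSTNAME}"`.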

Automating FTP II: Windows

15th April 2008

Having thought about automating command line FTP on UNIX/Linux, I wondered about doing the same on Windows, and you can achieve much the same results, even if the way of getting there is slightly different. The first route to consider is running a script file with the ftp command at the command prompt (you may need %windir%\system32\ftp.exe to call the right FTP program in some cases):

ftp -s:script.txt

The contents of the script are something like the following:

open ftp.server.host

user

password

lcd destination_directory

cd source_directory

prompt

get filename

bye

It doesn't take much to turn your script into a batch file that, for the sake of enhanced security, takes the username as its first argument and your password as its second rather than storing them, and that deletes the generated script afterwards for the same reason:

echo open ftp.server.host > script.txt

echo %1 >> script.txt

echo %2 >> script.txt

echo cd htdocs >> script.txt

echo prompt >> script.txt

echo mget * >> script.txt

echo bye >> script.txt

%windir%\system32\ftp.exe -s:script.txt

del script.txt

The feel of the Windows command line (in Windows 2000, it feels very primitive, but Windows XP is better and there's PowerShell now too) can leave a lot to be desired by someone accustomed to its UNIX/Linux counterpart, yet there's still a lot of tweaking that you can do to the above, given a bit of knowledge of the Windows batch scripting language. Any escape from a total dependence on pointing and clicking can only be an advance.
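One tweak worth considering is making sure that the generated script, which briefly holds the password, is deleted even if the transfer fails. The Python sketch below mirrors the batch file under that assumption: it writes the same commands to a temporary file, runs Windows' `ftp.exe -s:` on it and removes the file in a `finally` block. The function names are hypothetical, and the `ftp.exe` location assumes the usual `%windir%\System32` layout.

```python
import os
import subprocess
import tempfile


def build_ftp_script(host, user, password, remote_dir="htdocs", pattern="*"):
    """Return the same commands that the batch file echoes into script.txt."""
    return "\n".join([
        f"open {host}",
        user,
        password,
        f"cd {remote_dir}",
        "prompt",  # toggle per-file confirmation off for mget
        f"mget {pattern}",
        "bye",
    ]) + "\n"


def run_ftp_script(script_text):
    """Run ftp.exe -s:<temporary file> and always delete the file afterwards."""
    windir = os.environ.get("WINDIR", r"C:\Windows")
    ftp_exe = os.path.join(windir, "System32", "ftp.exe")
    fd, path = tempfile.mkstemp(suffix=".txt", text=True)
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(script_text)
        return subprocess.run([ftp_exe, f"-s:{path}"]).returncode
    finally:
        os.remove(path)
```

Usage would be along the lines of `run_ftp_script(build_ftp_script("ftp.server.host", user, password))`, with the credentials supplied however you prefer.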

Automating FTP I: UNIX and Linux

11th April 2008

Having grown tired of repeatedly typing everything at the prompt of an interactive command line FTP session, and of doing similar things via the GUI route, I started to wonder if there was a scripting alternative and, lo and behold, I found one after a spot of googling. There are various opportunities for extending it, such as prompting for the username and password instead of the risky approach of including them in a script, or cycling through a directory structure, but here's the foundation stone for such tinkering anyway:

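HOSTNAME='ftp.server.host'

USER='user'

PSSWD='password'

REP_SRC='source_directory'

REP_DEST='destination_directory'

FILENAME='*'

# Keep the log outside the download directory and start afresh each run

LOG_FILE="${HOME}/log_file.tmp"

rm -f "${LOG_FILE}"

cd "${REP_DEST}" || exit 1

# -i: no per-file prompting during mget; -n: no auto-login (the user command logs in); -v: verbose

ftp -i -n -v <<EndFTP >>"${LOG_FILE}" 2>>"${LOG_FILE}"

open ${HOSTNAME}

user ${USER} ${PSSWD}

cd "${REP_SRC}"

mget "${FILENAME}"

EndFTP

cd ~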
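As a starting point for the prompting extension mentioned above, here is a minimal Python sketch using the standard library's `ftplib` and `getpass`, so that the password is typed at run time rather than stored in the script. It assumes a plain (unencrypted) FTP server, as the shell script does, and downloads every name that the server's NLST returns for the source directory; the function names are hypothetical and subdirectories are not handled.

```python
import getpass
import os
from ftplib import FTP


def fetch_all(ftp, remote_dir, local_dir):
    """Download every file named by NLST in remote_dir into local_dir.

    `ftp` is a logged-in ftplib.FTP object. Returns the names fetched.
    Subdirectories are not handled.
    """
    ftp.cwd(remote_dir)
    fetched = []
    for name in ftp.nlst():
        if name in (".", ".."):
            continue  # some servers include these entries
        target = os.path.join(local_dir, os.path.basename(name))
        with open(target, "wb") as handle:
            # Binary RETR, so each file arrives byte-for-byte
            ftp.retrbinary(f"RETR {name}", handle.write)
        fetched.append(name)
    return fetched


def main(remote_dir="source_directory", local_dir="destination_directory"):
    """Prompt for the connection details, then download the files."""
    host = input("Host: ")
    user = input("User: ")
    password = getpass.getpass("Password: ")  # typed without echo, never saved
    with FTP(host) as ftp:
        ftp.login(user, password)
        for name in fetch_all(ftp, remote_dir, local_dir):
            print("fetched", name)
```

Calling `main()` asks for the host, username and password and then fetches the files, much as the script's `mget` does; keeping `fetch_all` separate from the prompting also makes it easy to reuse with credentials from elsewhere.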

java.net.MalformedURLException: unknown protocol: j

15th December 2007

While I know that there are better things to call a blog post than to use part of an error message that I got from Saxonica's Saxon when I was converting XML files into PHP equivalents for the visitor information section of my main website, it is handy for anyone else needing to look up a solution when they encounter it. In my case, I use the open source Saxon-B rather than the commercial Saxon-SA, and it fulfils all of my needs. Version 8 and later (it has now reached 9.0.0.2) handle the XSLT 2.0 features that I need to make the transformations really clever.

Also, because Saxon is available as a jar file, it is cross-platform so long as you have Java on board. There are, however, some slight differences in behaviour. I now run it on Linux, where Windows-style file locations are not recognised. When a DTD declaration contained a file path starting with J:\, the part before the colon was taken to be a protocol like file, http, https or ftp. Since there's no j protocol, Java gets confused, issuing the rather obscure error that titles this post. Otherwise, migrating the Perl script that creates XSLT files and fires off the required XML to PHP transformations was a fairly straightforward exercise once the file locations and shebang line were set correctly.
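The same parsing rule is easy to see outside Java. The Python sketch below uses the standard library's `urllib.parse.urlsplit`, which also reads the letters before the first colon as a scheme, so a Windows drive letter comes out as the scheme `j`. An absolute Linux path has no scheme at all, and turning it into a `file:` URI is one unambiguous way of writing a location where a URL is expected. The paths are hypothetical, and the last step assumes the code runs on Linux.

```python
from pathlib import Path
from urllib.parse import urlsplit

windows_style = r"J:\dtds\site.dtd"       # hypothetical Windows location
linux_style = "/home/user/dtds/site.dtd"  # hypothetical Linux location

# The drive letter is read as a URL scheme (lower-cased), hence "protocol: j".
print(urlsplit(windows_style).scheme)

# An absolute POSIX path has no scheme, so it is treated as a plain path...
print(repr(urlsplit(linux_style).scheme))

# ...and can be written as an explicit file: URI (on Linux) if a URL is needed.
print(Path(linux_style).as_uri())
```

For Saxon on Linux, the practical upshot is to point the DTD declaration at a Linux path, a relative one or a `file:` URI instead of J:\.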

Setting up Quanta Plus to edit files on your web server

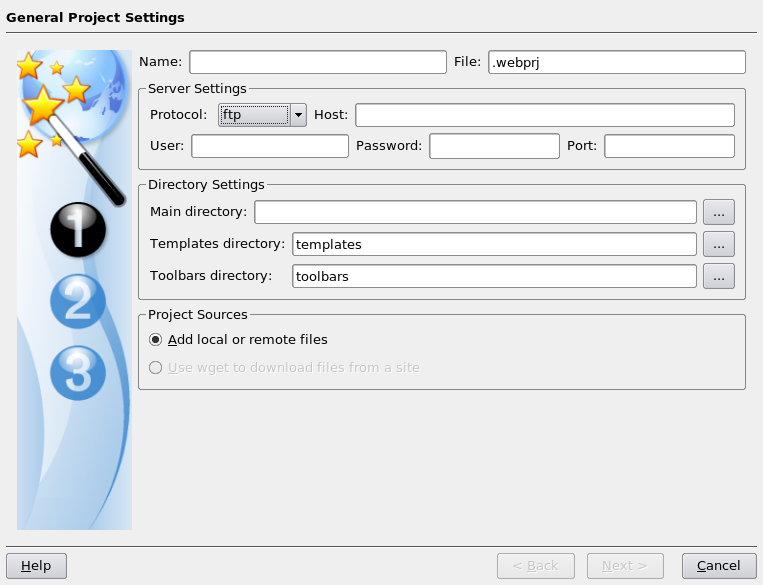

3rd December 2007

On Saturday, my hillwalking and photo gallery website suffered an outage thanks to Fasthosts, the site's hosting provider, having a security breach and deciding to change all my passwords. While I won't bore you with the details here, I had to change the password for my MySQL database from their unmemorable suggestion and hence the configuration file for the hillwalking blog. To accomplish this, I set up Quanta Plus to edit the requisite file on the server itself. That was achieved by creating a new project, setting the protocol as FTP and completing the details in the wizard, all relatively straightforward stuff. Since I have a habit of doing this from Dreamweaver, it's nice to see that an open source alternative provides the same sort of functionality.

Using SAS FILENAME statement to extract directory file listings into SAS

30th May 2007

The filename statement's pipe option allows you to direct the output of operating system commands into SAS for further processing. Usefully, the Windows dir command (with its /s switch) and the UNIX and Linux equivalent ls allow you to get a file listing into SAS. For example, here's how you extract the list of files in your UNIX or Linux home directory into SAS:

filename dirlist pipe 'ls ~';

data dirlist;

length filename $200;

infile dirlist length=reclen;

input filename $varying200. reclen;

run;

Using the ftp option on the filename statement allows you to get a list of the files in a directory on a remote server, even one with a different operating system to that used on the client (PC or server), which is very useful for cases where cross-platform systems are involved. Here's some example code:

filename dirlist ftp ' ' ls user='user' host='host' prompt;

data dirlist;

length filename $200;

infile dirlist length=reclen;

input filename $varying200. reclen;

run;

The PROMPT option will cause SAS to ask you for a password, and the blank string is where you would otherwise specify the name of a file.
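For comparison, Python's standard library offers the same two routes. The sketch below is an analogue rather than a translation of the SAS code: `subprocess.run` captures the output of `ls` one line per record, much as the pipe fileref does, and `ftplib.FTP.nlst` returns a remote directory listing, the counterpart of the ftp fileref with the `ls` option. The function names are hypothetical, and `getpass` stands in for SAS's PROMPT option.

```python
import getpass
import subprocess
from ftplib import FTP


def local_listing(directory):
    """Capture `ls directory` output, one entry per line, as the pipe fileref does."""
    result = subprocess.run(
        ["ls", directory], capture_output=True, text=True, check=True
    )
    return result.stdout.splitlines()


def remote_listing(host, user, directory=""):
    """List a remote FTP directory, asking for the password like SAS's PROMPT option."""
    password = getpass.getpass(f"Password for {user}@{host}: ")
    with FTP(host) as ftp:
        ftp.login(user, password)
        if directory:
            ftp.cwd(directory)
        return ftp.nlst()
```

`local_listing(os.path.expanduser("~"))` corresponds to the `ls ~` example. Unlike the SAS data step, each name comes back as a Python string, so there is no $200 length to declare.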