TOPIC: CYBERNETICS

Not so fast: When tasks using AI may take more time and attention than you expect

29th November 2025

If you believed all the hype that surrounds AI, you might think that all of us would be out of work before we knew it. The truth, judging by some experiences that I have been having, is that the new technology is not that miraculous. For one thing, it has deficiencies; for another, there will be new things that need doing as well as things becoming possible for the first time.

PowerShell Scripting

One involved spinning up PowerShell scripts for code reviews of SAS programs submitted by a vendor to a client of mine. While all worked well for simple cases, more complex tasks, like finding the datasets used in the code and comparing them against those listed in the program headers, became too complicated. Sorting them out would probably have needed a week of my time, and that was time I did not have.
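To give a flavour of why this gets fiddly, here is a minimal sketch in Python rather than PowerShell, although the regular-expression approach carries over. It assumes a hypothetical header line of the form `Datasets used: lib.a, lib.b` inside a block comment, and it only inspects SET and MERGE statements and DATA= options. PROC SQL FROM clauses, macro-generated names, `*...;` comments and much else are not handled. All of the function names are illustrative.

```python
import re

# Hypothetical header convention: "Datasets used: sdtm.dm, sdtm.ex" in a block comment.
HEADER_LINE = re.compile(r"Datasets used:\s*(.+)", re.IGNORECASE)
BLOCK_COMMENT = re.compile(r"/\*.*?\*/", re.DOTALL)
# Only SET and MERGE statements plus DATA= options are inspected here.
SET_MERGE = re.compile(r"\b(?:set|merge)\s+([^;]+);", re.IGNORECASE)
DATA_OPTION = re.compile(r"\bdata\s*=\s*([A-Za-z_]\w*(?:\.[A-Za-z_]\w*)?)", re.IGNORECASE)
DATASET_NAME = re.compile(r"[A-Za-z_]\w*(?:\.[A-Za-z_]\w*)?")
INNER_PARENS = re.compile(r"\([^()]*\)")


def strip_options(text: str) -> str:
    """Remove dataset options such as (keep=...) or (where=(...)), innermost first."""
    while INNER_PARENS.search(text):
        text = INNER_PARENS.sub(" ", text)
    return text


def datasets_read(sas_code: str) -> set[str]:
    """Lower-cased dataset names read by SET/MERGE statements or DATA= options."""
    code = BLOCK_COMMENT.sub(" ", sas_code)
    found = set()
    for statement in SET_MERGE.findall(code):
        for token in strip_options(statement).split():
            if DATASET_NAME.fullmatch(token):  # skips options like end=eof
                found.add(token.lower())
    found.update(name.lower() for name in DATA_OPTION.findall(code))
    return found


def header_datasets(sas_code: str) -> set[str]:
    """Dataset names listed in the hypothetical header line."""
    match = HEADER_LINE.search(sas_code)
    if not match:
        return set()
    items = match.group(1).replace("*/", "").split(",")
    return {item.strip().lower() for item in items if item.strip()}


def compare_datasets(sas_code: str) -> dict[str, list[str]]:
    used, listed = datasets_read(sas_code), header_datasets(sas_code)
    return {"not_in_header": sorted(used - listed),
            "listed_but_unused": sorted(listed - used)}


program = """/* Datasets used: sdtm.dm, sdtm.ex, work.unused */
data adsl;
  merge sdtm.dm(where=(armcd ne 'SCRNFAIL')) sdtm.ex(keep=usubjid exstdtc);
  by usubjid;
run;
proc sort data=adsl out=adsl_sorted; by usubjid; run;
"""
print(compare_datasets(program))
# {'not_in_header': ['adsl'], 'listed_but_unused': ['work.unused']}
```

Even this toy example flags `adsl`, a dataset that the program creates for itself. Telling intermediate datasets apart from genuine inputs is the kind of nuance that soaks up time.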

Picking out macro calls from code and comparing them against lists in the headers was more successful because the code situations were less variable. Other tasks went well too, though I would benefit from having AI teach me how to write PowerShell scripts myself. That would give me more scope to critique the code being produced, and starting simple before progressing one step at a time would help PowerShell commands to stick in my memory.
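Macro calls are easier because they always start with a percent sign. The sketch below, again in Python rather than PowerShell, shows the idea. It assumes a hypothetical `Macros called: %a, %b` header line and filters out macro-language keywords using a deliberately incomplete list.

```python
import re

BLOCK_COMMENT = re.compile(r"/\*.*?\*/", re.DOTALL)
MACRO_REF = re.compile(r"%([A-Za-z_]\w*)")
# Hypothetical header convention: "Macros called: %setup, %derive_flags".
HEADER_LINE = re.compile(r"Macros called:\s*(.+)", re.IGNORECASE)
# Macro-language statements and functions that also start with '%'.
# Illustrative only; a real checker would need the full list.
MACRO_KEYWORDS = {
    "macro", "mend", "let", "put", "if", "then", "else", "do", "end", "to", "by",
    "while", "until", "global", "local", "include", "eval", "sysevalf", "sysfunc",
    "str", "nrstr", "quote", "bquote", "upcase", "scan", "substr", "index",
    "length", "return",
}


def macro_calls(sas_code: str) -> set[str]:
    """Lower-cased names of macros invoked outside /* ... */ comments."""
    code = BLOCK_COMMENT.sub(" ", sas_code)
    names = {name.lower() for name in MACRO_REF.findall(code)}
    return names - MACRO_KEYWORDS


def header_macros(sas_code: str) -> set[str]:
    """Macro names listed in the hypothetical header line, without the '%'."""
    match = HEADER_LINE.search(sas_code)
    if not match:
        return set()
    items = match.group(1).replace("*/", "").split(",")
    return {item.strip().lstrip("%").lower() for item in items if item.strip()}


def compare_macros(sas_code: str) -> dict[str, list[str]]:
    called, listed = macro_calls(sas_code), header_macros(sas_code)
    return {"not_in_header": sorted(called - listed),
            "listed_but_unused": sorted(listed - called)}


program = """/* Macros called: %setup, %derive_flags, %old_macro */
%setup;
%let cutoff = 2025-01-01;
%derive_flags(ds=adsl);
%put &cutoff;
%report(ds=adsl);
"""
print(compare_macros(program))
# {'not_in_header': ['report'], 'listed_but_unused': ['old_macro']}
```

Stripping block comments before searching matters here. Otherwise, the header's own list would be counted as calls.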

Article Writing

It is all too tempting to get AI to write articles on subjects of your choosing for website content production. What sounds like a labour-saving approach can demand more attention than some realise. Sometimes, writing it all yourself might be the better approach, and it is the one that I am using for this piece.

My workflow often involves these steps when AI is involved: assembly of the source material, conversion of source material into an article by one AI, fact checking of the same text by another AI and restructuring by that second AI with added links for those wanting to find out more. While human content production is reduced, the need for human oversight, along with fact and link checking, means that time is used in other ways.

In short, it is best not to rush this, as I found when assembling two articles on Canadian rail travel. You also need to watch how much content is being processed because that can both overwhelm human bandwidth and undermine human engagement. This is more than proofreading of what is produced; you need to know something about a given subject yourself too.

Image Production

While AI can do well with producing some images, there are others where it will struggle because of a lack of relevant training. An example is when I asked for an image with cyclists placing bicycles on a bus before boarding it. None of the generated images worked, meaning that a trip to a stock library was in order.

While some can specify everything in a prompt at one sitting, I work more iteratively, which probably adds time to any task, especially with image generation. It shows that there is still a place for stock libraries and for having your own personal library as well. We need to remain as orchestrators in all of this, and a lack of personal talent can remain a limitation.

System Administration

While this may not be something that I do professionally, keeping an eye on the worlds of DevOps and DevSecOps shows me that AI is adding work of its own. There is no sign of this lessening, which suggests that work is changing dramatically rather than reducing, especially when you bring agentic AI into the equation.

It feels much like the advent of personal computing, which produced a similar seismic shift in the workplace in more innocent times. This time around, nefarious actors are misusing AI, an unsurprising if ominous trend that adds to the security woes that have beset computing for a few decades now.

A Human in the Loop?

At a recent conference, much was being made of keeping humans in the loop when it comes to using AI. There is a catch, though: how do we keep those humans engaged? After all, writing computer code allows one to get into a state of flow and remain engaged, possibly overriding any feelings of fatigue. That is what needs replicating, yet it is hardly an experience reported where automation has arrived in other professions.

The use of AI is a developing field, bringing new challenges as well as solving old problems. That also means upskilling on a grand scale, something that happened over time with personal and business computing. While it looks as if the process could be faster this time around, it is too early to know where this revolution is going to take us. That alone may be enough to keep us engaged.

AI infrastructure under pressure: Outages, power demands and the race for resilience

1st November 2025

The past few weeks brought a clear message from across the AI landscape: adoption is racing ahead, while the underlying infrastructure is working hard to keep up. A pair of major cloud outages in October offered a stark stress test, exposing just how deeply AI has become woven into daily services.

At the same time, there were significant shifts in hardware strategy, a wave of new tools for developers and creators and a changing playbook for how information is found online. There is progress on resilience and efficiency, yet the system is still bending under demand. Understanding where it held, where it creaked and where it is being reinforced sets the scene for what comes next.

Infrastructure Stress and Outages

The outages dominated early discussion. An AWS incident that lasted around 15 hours and disrupted more than a thousand services was followed nine days later by a global Azure failure. Each cascaded across systems that depend on them, illustrating how AI now amplifies the consequences of platform problems.

This was less about a single point of failure and more about the growing blast radius when connected services falter. The effect on productivity was visible too: a separate 10-hour ChatGPT downtime showed how fast outages of core AI tools now translate into lost work time.

Power Demand and Grid Strain

Behind the headlines sits a larger story about electricity, grids and planning. Data centres accounted for roughly 4% of US electricity use in 2024, about 183 TWh, and the International Energy Agency projects that global data centre consumption will reach around 945 TWh by 2030, with AI as a principal driver.

The averages conceal stark local effects. Wholesale prices near dense clusters have spiked by as much as 267% at times, household bills are rising by about $16–$18 per month in affected areas and capacity prices in the PJM market jumped from $28.92 per megawatt-day to $329.17 per megawatt-day. The US grid faces an upgrade bill of about $720 billion by 2030, yet permitting and build timelines are long, creating a bottleneck just as demand accelerates.
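Percentages of this size are easy to misread, so here is the arithmetic. A rise of 267% means prices of up to 3.67 times their usual level. Dividing the later of the two PJM capacity prices by the earlier one gives a multiple of roughly eleven:

```latex
\begin{aligned}
\text{wholesale spike multiple} &= 1 + \frac{267}{100} = 3.67\\
\text{capacity price multiple} &= \frac{329.17}{28.92} \approx 11.38
  \;\Rightarrow\; \text{increase} \approx 1038\%
\end{aligned}
```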

Technical Grid Issues

Technical realities on the grid add another layer of challenge. Fast load swings from AI clusters, harmonic distortions and degraded power quality are no longer theoretical concerns. A Virginia incident in which 60 data centres disconnected simultaneously did not trigger a collapse but did reveal the fragility introduced by concentrated high-performance compute.

Security and New Failure Modes

Security risks are evolving in parallel. Agentic systems that can plan, reason and call tools open new failure modes. AI-enabled spear phishing appears to be 350% more effective than traditional attempts and could be 50 times more profitable, a worrying backdrop when outages already have a clear link to lost productivity.

Security considerations now reach into the tools people use to access AI as well. New AI browsers attract attention, and with that comes scrutiny. OpenAI's Atlas and Perplexity's Comet launched with promising features, yet researchers flagged critical issues.

Comet is vulnerable to "CometJacking", a malicious URL hijack that enables data theft, while Atlas suffered a cross-site request forgery weakness that allowed persistent code injection into ChatGPT memory. Both products have been noted for assertive data collection.

Caution and good hygiene are prudent until the fixes and policies settle. It is a reminder that the convenience of integrating models directly into browsing comes with a new attack surface.

Efficiency and Mitigation Strategies

Industry responses are gathering pace. Efficiency remains the first lever. Hyperscalers now report power usage effectiveness around 1.08 to 1.09, compared with more typical figures of 1.5 to 1.6. Direct chip cooling can cut energy needs by up to 40%.
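Power usage effectiveness (PUE) is the ratio of total facility energy to the energy used by the IT equipment itself. The sketch below shows what the quoted figures mean in practice. The 100 MWh IT load is an arbitrary illustrative number, not a figure from any operator.

```python
def facility_energy(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy implied by a PUE value (PUE = facility energy / IT energy)."""
    if pue < 1:
        raise ValueError("PUE cannot be below 1")
    return it_energy_mwh * pue


def overhead_share(pue: float) -> float:
    """Fraction of total facility energy spent on non-IT overhead such as cooling."""
    return (pue - 1) / pue


it_load = 100.0  # MWh of IT equipment energy, an arbitrary illustrative figure
typical = facility_energy(it_load, 1.5)      # 150 MWh in total
hyperscale = facility_energy(it_load, 1.08)  # about 108 MWh in total
saving = 1 - hyperscale / typical            # about 0.28, i.e. 28% less energy overall
print(f"{typical:.0f} vs {hyperscale:.0f} MWh, saving {saving:.0%}")
print(f"overhead share: {overhead_share(1.5):.1%} vs {overhead_share(1.08):.1%}")
```

For the same IT work, moving from a PUE of 1.5 to 1.08 cuts the overhead share from about a third of all energy to under a tenth.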

Grid-interactive operations and more work at the edge offer ways to smooth demand and reduce concentration risk, while new power partnerships hint at longer-term change. Microsoft's agreement with Constellation on nuclear power is one example of how compute providers are thinking beyond incremental efficiency gains.

An emerging pattern is becoming visible through these efforts. Proactive regional planning and rapid efficiency improvements could allow computational output to grow by an order of magnitude, while power use merely doubles. More distributed architectures are being explored to reduce the hazard of over-concentration.
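The arithmetic behind that claim is worth spelling out. Here, "an order of magnitude" is taken as a factor of ten, E stands for power use and C for computational output; these symbols are introduced only for illustration. On that basis, the energy needed per unit of computation would have to fall to a fifth of today's level:

```latex
\frac{E_{2030}/C_{2030}}{E_{\mathrm{now}}/C_{\mathrm{now}}}
  = \frac{E_{2030}/E_{\mathrm{now}}}{C_{2030}/C_{\mathrm{now}}}
  = \frac{2}{10} = 0.2
```

In other words, energy per unit of work would need to fall by about 80%, a fivefold efficiency gain, for that scenario to hold.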

A realistic outlook sets data centres at around 3% of global electricity use by 2030, which is notable, though the increase is still smaller than the anticipated growth in demand from electric vehicles or air conditioning. If the $720 billion in grid investment materialises, it could add around 120 GW of capacity by 2030, as much as half of which would be absorbed by data centres. The resilience gap is real, but it appears to be narrowing, provided the sector moves quickly to apply lessons from each failure.

Regional and Policy Responses

Regional policies are starting to encourage resilience too. Oregon's POWER Act asks operators to contribute to grid robustness, Singapore's tight focus on efficiency has delivered around a 30% power reduction even as capacity expands and a moratorium in Dublin has pushed growth into more distributed build-outs. On the U.S. federal government side, the Department of Homeland Security updated frameworks after a 2024 watchdog warning, with AI risk programmes now in place for 15 of the 16 critical infrastructure sectors.

Hardware Competition and Strategy

Competition is sharpening. Anthropic deepened its partnership with Google Cloud to train on TPUs, a move that challenges Nvidia's dominance and signals a broader rebalancing in AI hardware. Nvidia's chief executive has acknowledged TPUs as robust competition.

Another fresh entry came from Extropic, which unveiled thermodynamic sampling units, a probabilistic chip design that claims up to 10,000-fold lower energy use than GPUs for AI workloads. Development kits are shipping and a Z-1 chip is planned for next year, yet as with any radical architecture, proof at scale will take time.

Nvidia, meanwhile, presented an ambitious outlook, targeting $500 billion in chip revenue by 2026 through its Blackwell and Rubin lines. The US Department of Energy plans seven supercomputers comprising more than 100,000 Blackwell GPUs and the company announced partnerships spanning pharmaceuticals, industrials and consumer platforms.

A $1 billion investment in Nokia hints at the importance of AI-centric networks. New open-source models and datasets accompanied the announcements, and the company's share price surged to a record.

Corporate Restructuring

Corporate strategy and hardware choices also entered a new phase. OpenAI completed its restructuring into a public benefit corporation, with a rebranded OpenAI Foundation holding around $130 billion in equity and allocating $25 billion to health and AI resilience. Microsoft's stake now sits at about 27% and is worth roughly $135 billion, with technology rights retained through 2032. Both parties have scope to work with other partners. OpenAI committed around $250 billion to Azure yet retains the ability to use other compute providers. An independent panel will verify claims of artificial general intelligence, an unusual governance step that will be watched closely.

Search and Discovery Evolution

Away from infrastructure, the way audiences find and trust information is shifting. Search is moving from the old aim of ranking for clicks to answer engine optimisation, where the goal is to be quoted by systems such as ChatGPT, Claude or Perplexity.

The numbers explain why. Google handled more than five trillion queries in 2024, while generative platforms now process around 37.5 million prompt-like searches per day. Google's AI Overviews, which surface summary answers above organic results, have reshaped click behaviour.

Independent analyses report top-ranking pages seeing click-through rates fall by roughly a third where Overviews appear, with some keywords faring worse. A Pew study found users clicking a traditional result on 8% of visits where an AI summary appeared, compared with 15% where none did. Zero-click searches rose from around 56% to 69% between May 2024 and May 2025.
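Figures like these mix two kinds of change, so it helps to separate percentage points from relative change. The small sketch below applies both measures to the 15% and 8% click figures and to the zero-click shares.

```python
def point_change(before_pct: float, after_pct: float) -> float:
    """Change measured in percentage points."""
    return after_pct - before_pct


def relative_change(before_pct: float, after_pct: float) -> float:
    """Change as a fraction of the starting value."""
    return (after_pct - before_pct) / before_pct


# Click rates of 15% and 8% from the Pew figures
print(f"{point_change(15, 8):+.0f} points, {relative_change(15, 8):+.1%} relative")
# -7 points, -46.7% relative
# Zero-click share rising from 56% to 69%
print(f"{point_change(56, 69):+.0f} points, {relative_change(56, 69):+.1%} relative")
# +13 points, +23.2% relative
```

A seven-point fall in clicks is nearly a halving in relative terms. That is why the same data can sound mild or alarming depending on how it is reported.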

Chegg's non-subscriber traffic fell by 49% in this period, part of an ongoing dispute with Google. Google counters that total engagement in covered queries has risen by about 10%. Whichever way one reads the data, the direction is clear: visibility is less about rank position and more about being cited by a summarising engine.

In practice, that means structuring content so that a model can parse, trust and attribute it. Clear Q&A-style sections with direct answers, followed by context and cited evidence, help models extract usable statements. Schema markup for FAQs and how-to content improves machine readability.
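As an illustration of FAQ schema markup, the sketch below builds schema.org `FAQPage` structured data as JSON-LD from question-and-answer pairs. The `faq_jsonld` helper is hypothetical. Whether any particular search or answer engine uses this markup is not guaranteed.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


markup = faq_jsonld([
    ("What is answer engine optimisation?",
     "Structuring content so that AI systems can quote and attribute it."),
])
print(markup)
```

The script element would sit in the page's HTML alongside the visible Q&A text, which should say the same thing as the markup.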

Measuring success also changes. Traditional analytics rarely show when an LLM quotes a source, so teams are turning to tools that track citations in AI outputs and tying those to conversion quality, branded search volume and more in-depth engagement with pricing or documentation. It is not a replacement for SEO so much as a layer that reinforces it in an AI-first environment.

Developer Tools and Agentic Workflows

On the tools front, developers saw an acceleration in agent-centred workflows. Cursor launched its first in-house coding model, Composer, which aims for near-frontier quality while generating code around four times faster, often in under 30 seconds.

The broader Cursor 2.0 update added multi-agent capabilities, with as many as eight assistants able to work in parallel, alongside browsing, a test browser and voice controls. The direction of travel is away from single-shot completions and towards orchestration and review. Tutorials are following suit, demonstrating how to scaffold tasks such as a Next.js to-do application using planning files, parallel agent tasks and quick integration, with voice prompts in the loop.
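Running several assistants in parallel and then reviewing their output is, at heart, a bounded fan-out. The sketch below shows that generic pattern with Python's `asyncio`, capping concurrency at eight to mirror the figure above. It is not how Cursor implements it, and `run_agent` is a hypothetical stand-in for real agent work.

```python
import asyncio

MAX_PARALLEL_AGENTS = 8  # mirrors the "as many as eight" figure; an assumption, not Cursor's API


async def run_agent(task: str, limiter: asyncio.Semaphore, stats: dict) -> str:
    """Hypothetical stand-in for an agent working on one task."""
    async with limiter:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # placeholder for real work
        stats["active"] -= 1
        return f"draft for: {task}"


async def orchestrate(tasks: list[str]) -> tuple[list[str], int]:
    """Fan tasks out to at most MAX_PARALLEL_AGENTS at once; results keep task order."""
    limiter = asyncio.Semaphore(MAX_PARALLEL_AGENTS)
    stats = {"active": 0, "peak": 0}
    drafts = await asyncio.gather(*(run_agent(t, limiter, stats) for t in tasks))
    # Nothing is merged automatically: drafts go back for human review.
    return list(drafts), stats["peak"]


drafts, peak = asyncio.run(orchestrate([f"refactor module {i}" for i in range(20)]))
print(len(drafts), "drafts awaiting review; peak concurrency", peak)
```

The semaphore is the design choice that matters. It limits how much parallel output arrives at once, which in turn limits how much a human reviewer has to take in.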

Open-source and enterprise ecosystems continue to expand. GitHub introduced Agent HQ for coordinating coding agents, Google released Pomelli to generate marketing campaigns and IBM's Granite 4.0 Nano models brought larger on-device options in the 350 million to 1.5 billion parameter range.

FlowithOS reported strong scores on agentic web tasks, while Mozilla announced an open speech dataset initiative, and Kilo Code, Hailuo 2.3 and other projects broadened choice across coding and video. Grammarly rebranded as Superhuman, adding "Superhuman Go" agents to speed up writing tasks.

Creative Tools and Partnerships

Creative workflows are evolving quickly, too. Adobe used its MAX event to add AI assistants to Photoshop and Express, previewed an agent called Project Moonlight, and upgraded Firefly with conversational "Prompt to Edit" controls, custom image models and new video features including soundtracks and voiceovers. Partnerships mean Gemini, Veo and Imagen will sit inside Adobe tools, and Premiere's editing capabilities now extend to YouTube Shorts.

Figma acquired Weavy and rebranded it as Figma Weave for richer creative collaboration, and Canva unveiled its own foundation "Design Model" alongside a Creative Operating System meant to produce fully editable, AI-generated designs. New Canva features take in a revised video suite, forms, data connectors, email design, a 3D generator and an ad creation and performance tool called Grow, while Affinity is relaunching as a free, integrated professional app. Other entrants are trying to blend model strengths: one agent was trailed with Sora 2 clip stitching, Veo 3.1 visuals and multimodel blending for faster design output.

Music rights and AI found a new footing. Universal Music Group settled a lawsuit with Udio, the AI music generator, and the two will form a joint venture to launch a licensed platform in 2026. Artists who opt in will be paid both for training models on their catalogues and for remixes. Udio disabled song downloads following the deal, which annoyed some users, and UMG also announced a "responsible AI" alliance with Stability AI to build tools for artists. These arrangements suggest a path towards sanctioned use of style and catalogue, with compensation built in from the start.

Research and Introspection

Research and science updates added depth. Anthropic reported that its Claude system shows limited introspection, detecting planted concepts only about 20% of the time, separating injected "thoughts" from text and modulating its internal focus. That highlights both the promise and limits of transparency techniques, and the potential for models to conceal or fail to surface certain internal states.

UC Berkeley researchers demonstrated an AI-driven load balancing algorithm with around 30% efficiency improvements, a result that could ripple through cloud performance. IBM ran quantum algorithms on AMD FPGAs, pointing to progress in hybrid quantum-classical systems.

OpenAI launched an AI-integrated web browser positioned as a challenger to incumbents, Perplexity released a natural-language patents search and OpenAI's Aardvark, a GPT-5-based security agent, entered private beta.

Anthropic opened a Tokyo office and signed a cooperation pact with Japan's AI Safety Institute. Tether released QVAC Genesis I, a large open STEM dataset of more than one million data points and a local workbench app aimed at making development more private and less dependent on big platforms.

Age Restrictions and Policy

Meanwhile, policy considerations are reaching consumer platforms. Character AI will restrict users under 18 from open-ended chatbot conversations from late November, replacing them with creative tools and adding behaviour-based age detection, a response to pressure and proposals such as the GUARD Act.

Takeaways

Put together, the picture is one of rapid interdependence and swift correction. The infrastructure is not breaking, but it is being stretched, and recent failures have usefully mapped the weak points. If the sector continues to learn quickly from its own missteps, the resilience gap will continue to narrow, and the next round of outages will be less disruptive than the last.

Investment is flowing into grids and cooling, policy is nudging towards resilience, and compute providers are hedging hardware bets by searching for efficiency and supply assurance. On the application layer, agents are becoming a primary interface for work, creative tools are converging around editability and control, and discovery is shifting towards being quoted by machines rather than clicked by humans.

Security lapses at the interface are a reminder that novelty often arrives before maturity. The most likely path from here is uneven but forward: data centre power may rise, yet efficiency and distribution can blunt the impact; answer engines may compress clicks, yet they can send higher-intent visitors to clear, well-structured sources; hardware competition may fragment the stack, yet it can also reduce concentration risk.

Some Data Science newsletters that may be worth your time

19th October 2025

Staying informed about developments in data science and artificial intelligence without drowning in an endless stream of blog posts, research papers and tool announcements presents a genuine challenge for practitioners. The newsletters profiled below offer a solution to this problem by delivering curated digests at weekly or near-weekly intervals, filtering what matters from the constant flow of new content across the field. Each publication serves a distinct purpose, from broad data science coverage and community event notifications to AI business strategy and statistical foundations, allowing readers to select resources that match their specific interests, whether technical depth, practical application, career development or strategic awareness. What follows examines what each newsletter offers, who benefits most from subscribing, and what limitations or trade-offs readers should consider when choosing which digests merit a place in their inbox.

Launched in 2014 by Lon Reisberg, this newsletter distinguishes itself through expert curation with minimal hype. It maintains strong editorial consistency and neutrality, presenting a handful of carefully selected articles that genuinely matter rather than overwhelming subscribers with dozens of links. The free version delivers this curated digest, whilst the Pro tier (fifty dollars annually) offers searchable archives spanning over 250 issues back to 2019, plus AI-powered learning tools including a SQL tutor and interview coach. The newsletter's defining characteristic is its quality-over-quantity approach, serving professionals who trust expert curation to surface what is genuinely important without the noise and hype that characterises many industry publications.

Data Science Weekly Newsletter

One of the oldest independent data science newsletters, having published over 400 issues since 2014, this publication sets itself apart through longevity and unwavering consistency. It delivers every Thursday without fail, maintaining a simple, distraction-free format with no over-commercialisation or fluff. Its unique value lies in this dependability, with subscribers knowing exactly what to expect each week, making it a practical baseline for staying current without surprises or dramatic shifts in editorial direction.

Unlike newsletters that simply curate external content, this publication builds its own ecosystem of learning resources, offering something fundamentally different through its open, community-driven approach. It combines free courses (Zoomcamps), events and a supportive Slack community, with all materials publicly available on GitHub. The newsletter keeps members informed about upcoming cohorts, webinars and talks within this collaborative environment. The defining feature is its entirely open and peer-supported approach, where readers gain access not just to information, but to hands-on learning opportunities and a community of practitioners willing to help each other grow.

Founded in 1997 by Gregory Piatetsky-Shapiro, this publication stands apart through industry authority spanning nearly three decades. It holds unmatched credibility through its longevity and comprehensive coverage, known for its annual software polls, data science career resources and balanced mix of expert articles, surveys and tool trends that appeal equally to technical practitioners and managers seeking a global overview of the field. What sets it apart is this authoritative position, with few publications able to match its track record or breadth of influence across both technical and strategic aspects of data science and AI.

Connected to the Open Data Science Conference network, this newsletter distinguishes itself as the gateway to the global data science event ecosystem. It serves as the practitioner's bridge to events, training, webinars and conferences worldwide. It covers the full stack, from tutorials and research to business use cases and career advice, but its distinctive strength lies in connecting readers to the broader data science community through live events and practical learning opportunities. The defining characteristic is this conference-linked, community-rich approach, proving especially valuable for professionals who want to remain active participants in the field rather than passive consumers of content.

This newsletter maintains a unique position by focusing entirely on statistical foundations. Whilst most data science newsletters chase the latest AI developments, it keeps an unwavering focus on statistics and foundational analysis, providing step-by-step tutorials for Excel, R and Python that emphasise statistical intuition over trendy techniques. This singular focus on fundamentals makes it unique, serving as an essential complement to AI-focused newsletters and helping readers build the statistical knowledge base that underpins sound data science practice.

Created by the makers of KDnuggets, this digital newsletter and media platform carves out a distinctive niche with business-focused AI news for non-technical leaders. It curates AI developments specifically for executives and decision-makers, emphasising practical, non-technical insights about tools, regulations and market moves, backed by an AI tool database and a claimed community of over 400,000 subscribers. What sets it apart is this strategic, implementation-focused perspective, concentrating on what AI means for business strategy rather than explaining how AI works, making it accessible to leaders without deep technical backgrounds.

Published weekly by DeepLearning.AI, co-founded by Andrew Ng, this newsletter offers trusted commentary that combines AI news with insightful analysis. Written by leading experts, it provides a balanced view that merges academic grounding with applied, real-world context. The distinguishing feature is this authoritative perspective on implications, helping engineers, product teams and business leaders understand why developments matter and how to think about their practical impact rather than simply reporting what happened.

An AI email newsletter roundup: Cutting through the noise

23rd August 2025

This time last year, I felt out of the loop on all things AI. That was put to rights during the autumn when I experimented a lot with GenAI while enhancing travel content on another portal. In addition, I subscribed to enough email newsletters that I feel the need to cull them at this point. Maybe I should use a service like Kill the Newsletter to consolidate things into an RSS feed instead; that sounds like an interesting option for dealing with any overload.

So much is happening in this area that it is all too easy to feel overwhelmed. That sense got me compiling the state of things in a previous post using some help from GenAI, though I was making the decisions about what was being consolidated and how it was being done. The whole process took a few hours, an effort clearly beyond a single button push.

This survey is somewhat eclectic in its scope; two of the newsletters are hefty items, while others include brevity as part of their offer. Regarding the latter, I found strident criticism of some of them (The Rundown and Superhuman are two that are mentioned) in an article published in the Financial Times, which is behind a paywall. Their content has been called slop, with the term slopaganda being coined to describe it. That cannot be applied everywhere, though. Brevity cannot cloak differences in tone and content choice, and those differences can help with developing a more rounded view of what is going on with AI.

This newsletter came to my notice because I attended SAS Innovate on Tour 2025 in London last June. Oliver Patel, who authors it and serves as Enterprise AI Governance Lead at AstraZeneca as well as contributing to various international organisations including the OECD Expert Group on AI Risk and Accountability, gave a talk there, naturally on AI governance, having also taken part in an earlier panel that day. Unsurprisingly, the newsletter also got a mention.

It provides in-depth practical guidance on artificial intelligence governance and risk management for professionals working in enterprise environments, with a particular focus on scaling governance frameworks across organisations. Actionable insights are emphasised in place of theoretical concepts, covering areas such as governance maturity models that progress from nascent stages through to transformative governance, implementation strategies and leadership approaches needed to drive effective AI governance within companies.

Patel brings experience from roles spanning policy work, academia and privacy sectors, including positions with the UK government and University College London, which informs his practical approach to helping organisations develop robust AI governance structures. The newsletter targets AI governance professionals, risk managers and executives who need clear, scalable solutions for real-world implementation challenges, and all content remains freely accessible to subscribers.

Unlike the other newsletters featured here, this one is published seven days a week, delivering a five-minute daily digest of AI industry happenings that combines news, productivity tips, polls and AI-generated art. It was launched in June 2023 by Matt Village and Adam Biddlecombe on beehiiv's content-focused platform and was acquired by HubSpot in March 2025, placing it within the HubSpot Media Network.

Created by Zain Kahn and based in Toronto, this newsletter typically follows a structured format on weekdays, featuring three AI tools for productivity enhancement, two significant AI developments and one quick tutorial to develop practical skills. On Saturdays, there is a round-up of what is happening in robotics, while the Sunday issue centres on developments in science. Everything is crafted to be brief, possibly allowing a three-minute survey of the latest developments.

The Artificially Intelligent Enterprise

My interest in the world of DevOps led me to find out about Mark Hinkle, the solopreneur behind Peripety Labs, and his in-depth weekly newsletter, published every Friday, which features comprehensive deep dives into strategic trends and emerging technologies. This has been complemented by a shorter how-to edition that comes out every Tuesday, carrying forward a newsletter acquired from elsewhere. The idea is to concentrate on concrete AI lessons and implementation tips in place of hype, particularly in business settings. These form part of The AIE Network alongside complementary publications including AI Tangle, AI CIO and AI Marketing Advantage.

I found this daily newsletter through following the Artificially Intelligent Enterprise, and it delivers artificial intelligence developments and insights in approximately five minutes of reading time per issue. Published by Rowan Cheung, it covers key AI developments, practical guides and tool recommendations, with some articles spanning technology and robotics categories. Beyond the core newsletter, the platform operates AI University, which provides certificate courses, implementation guides, expert-led workshops and community networking opportunities for early adopters.

From boardroom to code: More options for AI and Data Science education

27th July 2025

The artificial intelligence revolution has created an unprecedented demand for education that spans from executive strategy to technical implementation. Modern professionals face the challenge of navigating a landscape where understanding AI's business implications proves as crucial as mastering its technical foundations. This comprehensive examination explores five distinguished programmes that collectively address this spectrum, offering pathways for business professionals, aspiring data scientists and technical specialists seeking advanced expertise.

Strategic Business Implementation Through Practical AI Tools

LinkedIn Learning's Applying Generative AI as a Business Professional programme represents the entry point for professionals seeking immediate workplace impact. This focused five-hour curriculum across six courses addresses the practical reality that most business professionals need functional AI literacy rather than technical mastery. The programme emphasises hands-on application of contemporary tools including ChatGPT, Claude and Microsoft Copilot, recognising that these platforms have become integral to modern professional workflows.

The curriculum's strength lies in its emphasis on prompt engineering techniques that yield immediate productivity gains. Participants learn to craft effective queries that consistently produce useful outputs, a skill that has rapidly evolved from novelty to necessity across industries. The programme extends beyond basic tool usage to include strategies for creating custom GPTs without programming knowledge, enabling professionals to develop solutions that address specific organisational challenges.

Communication enhancement represents another critical component, as the programme teaches participants to leverage AI for improving written correspondence, presentations and strategic communications. This practical focus acknowledges that AI's greatest business value often emerges through augmenting existing capabilities rather than replacing human expertise. The inclusion of critical thinking frameworks for AI-assisted decision-making ensures that participants develop sophisticated approaches to integrating artificial intelligence into complex business processes.

Academic Rigour Meets Strategic AI Governance

The University of Pennsylvania's AI for Business Specialisation on Coursera elevates business AI education to an academic level whilst maintaining practical relevance. This four-course programme, completed over approximately four weeks, addresses the strategic implementation challenges that organisations face when deploying AI technologies at scale. The curriculum's foundation in Big Data fundamentals provides essential context for understanding the data requirements that underpin successful AI initiatives.

The programme's exploration of machine learning applications in marketing and finance demonstrates how AI transforms traditional business functions. Participants examine customer journey optimisation techniques, fraud prevention methodologies and personalisation technologies that have become competitive necessities rather than optional enhancements. These applications receive thorough treatment that balances technical understanding with strategic implications, enabling participants to make informed decisions about AI investments and implementations.

Particularly valuable is the programme's emphasis on AI-driven people management practices, addressing how artificial intelligence reshapes human resources, talent development and organisational dynamics. This focus acknowledges that successful AI implementation requires more than technological competence; it demands sophisticated understanding of how these tools affect workplace relationships and employee development.

The specialisation's coverage of strategic AI governance frameworks proves especially relevant as organisations grapple with ethical deployment challenges. Participants develop comprehensive approaches to responsible AI implementation that address regulatory compliance, bias mitigation and stakeholder concerns. This academic treatment of AI ethics provides the foundational knowledge necessary for creating sustainable AI programmes that serve both business objectives and societal responsibilities.

Industry-Standard Professional Development

IBM's Data Science Professional Certificate represents a bridge between business understanding and technical proficiency, offering a comprehensive twelve-course programme designed for career transition. This four-month pathway requires no prior experience whilst building industry-ready capabilities that align with contemporary data science roles. The programme's strength lies in its integration of technical skill development with practical application, ensuring graduates possess both theoretical knowledge and hands-on competency.

The curriculum's progression from Python programming fundamentals through advanced machine learning techniques mirrors the learning journey that working data scientists experience. Participants gain proficiency with industry-standard tools including Jupyter notebooks, GitHub and Watson Studio, ensuring familiarity with the collaborative development environments that characterise modern data science practice. This tool proficiency proves essential for workplace integration, as contemporary data science roles require seamless collaboration across technical teams.

The programme's inclusion of generative AI applications reflects IBM's recognition that artificial intelligence has become integral to data science practice rather than a separate discipline. Participants learn to leverage AI tools for data analysis, visualisation and insight generation, developing capabilities that enhance productivity whilst maintaining analytical rigour. This integration prepares trainees for data science roles that increasingly incorporate AI-assisted workflows.

Real-world project development represents a crucial component, as participants build comprehensive portfolios that demonstrate practical proficiency to potential employers. These projects address authentic business challenges using genuine datasets, ensuring that participants can articulate their capabilities through concrete examples.

Advanced Technical Mastery Through Academic Excellence

Andrew Ng's Machine Learning Specialisation on Coursera establishes the technical foundation for advanced AI practice. This three-course programme, completed over approximately two months, provides comprehensive coverage of core machine learning concepts whilst emphasising practical implementation skills. Andrew Ng's reputation as an AI pioneer lends exceptional credibility to this curriculum, ensuring that participants receive instruction that reflects both academic rigour and industry best practices.

The specialisation's treatment of supervised learning encompasses linear and logistic regression, neural networks and decision trees, providing thorough grounding in the algorithms that underpin contemporary machine learning applications. Participants develop practical proficiency with Python, NumPy and scikit-learn, gaining hands-on experience with the tools that professional machine learning practitioners use daily. This implementation focus ensures that theoretical understanding translates into practical capability.

Unsupervised learning includes clustering algorithms, anomaly detection techniques and certain approaches in recommender systems, all of which contribute to powering modern digital experiences. The programme's exploration of reinforcement learning provides exposure to the techniques driving advances in autonomous systems and game-playing AI. This breadth ensures that participants understand the full spectrum of machine learning approaches, rather than developing narrow expertise in specific techniques.

Cutting-Edge Deep Learning Applications

Again available through Coursera, Andrew Ng's Deep Learning Specialisation extends technical education into the neural network architectures that drive contemporary AI. This five-course programme, spanning approximately three months, addresses the advanced techniques that enable computer vision, natural language processing and complex pattern recognition applications. The intermediate-level curriculum assumes foundational machine learning knowledge whilst building expertise in cutting-edge methodologies.

Convolutional neural network coverage provides comprehensive understanding of computer vision applications, from image classification through object detection and facial recognition. Participants develop practical skills with CNN architectures that power visual AI applications across industries. The programme's treatment of recurrent neural networks and LSTMs addresses sequence processing challenges in speech recognition, machine translation and time series analysis.

The specialisation's exploration of transformer architectures proves particularly relevant given their central role in large language models and natural language processing breakthroughs. Participants gain understanding of attention mechanisms, transfer learning techniques and the architectural innovations that enable modern AI capabilities. This coverage ensures they understand the technical foundations underlying contemporary AI advances.

Real-world application development represents a crucial component, as participants work on speech recognition systems, machine translation applications, image recognition tools and chatbot implementations. These projects utilise TensorFlow, a dominant framework for deep learning development, ensuring that graduates possess practical experience with production-ready tools.

Strategic Integration and Future Pathways

These five programmes collectively address the comprehensive skill requirements of the modern AI landscape, from strategic business implementation through advanced technical development. The progression from practical tool usage through academic business strategy to technical mastery reflects the reality that successful AI adoption requires capabilities across multiple domains. Organisations benefit most when business leaders understand AI's strategic implications, whilst technical teams possess sophisticated implementation capabilities.

The integration of business strategy with technical education acknowledges that artificial intelligence's transformative potential emerges through thoughtful application rather than technological sophistication alone. These programmes prepare professionals to contribute meaningfully to AI initiatives regardless of their specific role or technical background, ensuring that organisations can build comprehensive AI capabilities that serve both immediate needs and long-term strategic objectives.

Advance your Data Science, AI and Computer Science skills using these online learning opportunities

25th July 2025

The landscape of online education has transformed dramatically over the past decade, creating unprecedented access to high-quality learning resources across multiple disciplines. This comprehensive examination explores the diverse array of courses available for aspiring data scientists, analysts, and computer science professionals, spanning from foundational programming concepts to cutting-edge artificial intelligence applications.

Data Analysis with R Programming

R programming has established itself as a cornerstone language for statistical analysis and data visualisation, making it an essential skill for modern data professionals. DataCamp's Data Analyst with R programme represents a comprehensive 77-hour journey through the fundamentals of data analysis, encompassing 21 distinct courses that progressively build expertise. Students begin with core programming concepts including data structures, conditional statements, and loops before advancing to sophisticated data manipulation techniques using tools such as dplyr and ggplot2. The curriculum extends beyond basic programming to include R Markdown for reproducible research, data manipulation with data.table, and essential database skills through SQL integration.

For those seeking more advanced statistical expertise, DataCamp's Statistician with R career track provides an extensive 108-hour programme spanning 27 courses. This comprehensive pathway develops essential skills for professional statistician roles, progressing from fundamental concepts of data collection and analysis to advanced statistical methodology. Students explore random variables, distributions, and conditioning through practical examples before advancing to linear and logistic regression techniques. The curriculum encompasses sophisticated topics including binomial and Poisson regression models, sampling methodologies, hypothesis testing, experimental design, and A/B testing frameworks. Advanced modules cover missing data handling, survey design principles, survival analysis, Bayesian data analysis, and factor analysis, making this track particularly suitable for those with existing R programming knowledge who seek to specialise in statistical practice.

The Google Data Analytics Professional Certificate programme, developed by Google and hosted on Coursera with US and UK versions, offers a structured six-month pathway for those seeking industry-recognised credentials. Students progress through eight carefully designed courses, beginning with foundational concepts in "Foundations: Data, Data, Everywhere" and culminating in a practical capstone project. The curriculum emphasises real-world applications, teaching students to formulate data-driven questions, prepare datasets for analysis, and communicate findings effectively to stakeholders.

Udacity's Data Analysis with R course presents a unique proposition as a completely free resource spanning two months of study. This programme focuses intensively on exploratory data analysis techniques, providing students with hands-on experience using RStudio and essential R packages. The course structure emphasises practical application through projects, including an in-depth exploration of diamond pricing data that demonstrates predictive modelling techniques.

Advanced Statistical Learning and Specialised Applications

Duke University's Statistics with R Specialisation elevates statistical understanding through a comprehensive seven-month programme that has earned a 4.6-star rating from participants. This five-course sequence delves deep into statistical theory and application, beginning with probability and data fundamentals before progressing through inferential statistics, linear regression, and Bayesian analysis. The programme distinguishes itself by emphasising both theoretical understanding and practical implementation, making it particularly valuable for those seeking to master statistical concepts rather than merely apply them.

The R Programming: Advanced Analytics course on Udemy, led by instructor Kirill, provides focused training in advanced R techniques within a compact six-hour format. This course addresses specific challenges that working analysts face, including data preparation workflows, handling missing data through median imputation, and working with complex date-time formats. The curriculum emphasises efficiency techniques such as using apply functions instead of traditional loops, making it particularly valuable for professionals seeking to optimise their analytical workflows.
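Median imputation, one of the techniques mentioned above, replaces each missing value in a column with the median of the values that are present. The course itself works in R; what follows is only a minimal Python sketch of the same idea, assuming missing values are represented as `None`. The function name `impute_median` and the sample figures are hypothetical.

```python
from statistics import median
from typing import Optional


def impute_median(values: list[Optional[float]]) -> list[float]:
    """Return a copy of `values` with every None replaced by the median
    of the non-missing entries (hypothetical helper for illustration)."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: every value is missing")
    fill = median(observed)
    # Observed values pass through unchanged; gaps receive the median.
    return [fill if v is None else v for v in values]


revenue = [120.0, None, 95.0, 130.0, None, 110.0]
print(impute_median(revenue))  # prints [120.0, 115.0, 95.0, 130.0, 115.0, 110.0]
```

The median is often preferred to the mean here because a few extreme values pull it around far less.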

Complementing this practical approach, the Applied Statistical Modelling for Data Analysis in R course on Udemy offers a more comprehensive 9.5-hour exploration of statistical methodology. The curriculum covers linear modelling implementation, advanced regression analysis techniques, and multivariate analysis methods. With its emphasis on statistical theory and application, this course serves those who already possess foundational R and RStudio knowledge but seek to deepen their understanding of statistical modelling approaches.

Imperial College London's Statistical Analysis with R for Public Health Specialisation brings academic rigour to practical health applications through a four-month programme. This specialisation addresses real-world public health challenges, using datasets that examine fruit and vegetable consumption patterns, diabetes risk factors, and cardiac outcomes. Students develop expertise in linear and logistic regression while gaining exposure to survival analysis techniques, making this programme particularly relevant for those interested in healthcare analytics.

Visualisation and Data Communication

Johns Hopkins University's Data Visualisation & Dashboarding with R Specialisation represents the pinnacle of visual analytics education, achieving an exceptional 4.9-star rating across its four-month curriculum. This five-course programme begins with fundamental visualisation principles before progressing through advanced ggplot2 techniques and interactive dashboard development. Students learn to create compelling visual narratives using Shiny applications and flexdashboard frameworks, skills that are increasingly essential in today's data-driven business environment.

The programme's emphasis on publication-ready visualisations and interactive dashboards addresses the growing demand for data professionals who can not only analyse data but also communicate insights effectively to diverse audiences. The curriculum balances technical skill development with design principles, ensuring graduates can create both statistically accurate and visually compelling presentations.

Professional Certification Pathways

DataCamp's certification programmes offer accelerated pathways to professional recognition, with each certification designed to be completed within 30 days. The Data Analyst Certification combines timed examinations with practical assessments to evaluate real-world competency. Candidates must demonstrate proficiency in data extraction, quality assessment, cleaning procedures, and metric calculation, reflecting the core responsibilities of working data analysts.

The Data Scientist Certification expands these requirements to include machine learning and artificial intelligence applications, requiring candidates to collect and interpret large datasets whilst effectively communicating results to business stakeholders. Similarly, the Data Engineer Certification focuses on data infrastructure and preprocessing capabilities, essential skills as organisations increasingly rely on automated data pipelines and real-time analytics.

The SQL Associate Certification addresses the universal need for database querying skills across all data roles. This certification validates both theoretical knowledge through timed examinations and practical application through hands-on database challenges, ensuring graduates can confidently extract and manipulate data from various database systems.

Emerging Technologies and Artificial Intelligence

The rapid advancement of artificial intelligence has created new educational opportunities that bridge traditional data science with cutting-edge generative technologies. DataCamp's Understanding Artificial Intelligence course provides a foundation for those new to AI concepts, requiring no programming background whilst covering machine learning, deep learning, and generative model fundamentals. This accessibility makes it valuable for business professionals seeking to understand AI's implications without becoming technical practitioners.

The Generative AI Concepts course builds upon this foundation to explore the specific technologies driving current AI innovation. Students examine how large language models function, consider ethical implications of AI deployment, and learn to maximise the effectiveness of AI tools in professional contexts. This programme addresses the growing need for AI literacy across various industries and roles.

DataCamp's Large Language Model Concepts course provides intermediate-level exploration of the technologies underlying systems like ChatGPT. The curriculum covers natural language processing fundamentals, fine-tuning techniques, and various learning approaches including zero-shot and few-shot learning. This technical depth makes it particularly valuable for professionals seeking to implement or customise language models within their organisations.

The ChatGPT Prompt Engineering for Developers course addresses the developing field of prompt engineering, a skill that has gained significant commercial value. Students learn to craft effective prompts that consistently produce desired outputs from language models, a capability that combines technical understanding with creative problem-solving. This expertise has become increasingly valuable as organisations integrate AI tools into their workflows.

Working with OpenAI API provides practical implementation skills for those seeking to build AI-powered applications. The course covers text generation, sentiment analysis, and chatbot development, giving students hands-on experience with the tools that are reshaping how businesses interact with customers and process information.

Computer Science Foundations

Stanford University's Computer Science 101 offers an accessible introduction to computing concepts without requiring prior programming experience. This course addresses fundamental questions about computational capabilities and limitations whilst exploring hardware architecture, software development, and internet infrastructure. The curriculum includes essential topics such as computer security, making it valuable for anyone seeking to understand the digital systems that underpin modern society.

The University of Leeds' Introduction to Logic for Computer Science provides focused training in logical reasoning, a skill that underlies algorithm design and problem-solving approaches. This compact course covers propositional logic and logical modelling techniques that form the foundation for more advanced computer science concepts.

Harvard's CS50 course, taught by Professor David Malan, has gained worldwide recognition for its engaging approach to computer science education. The programme combines theoretical concepts with practical projects, teaching algorithmic thinking alongside multiple programming languages including Python, SQL, HTML, CSS, and JavaScript. This breadth of coverage makes it particularly valuable for those seeking a comprehensive introduction to software development.

MIT's Introduction to Computer Science and Programming Using Python focuses specifically on computational thinking and Python programming. The curriculum emphasises problem-solving methodologies, testing and debugging strategies, and algorithmic complexity analysis. This foundation proves essential for those planning to specialise in data science or software development.

MIT's The Missing Semester course addresses practical tools that traditional computer science curricula often overlook. Students learn command-line environments, version control with Git, debugging techniques, and security practices. These skills prove essential for professional software development but are rarely taught systematically in traditional academic settings.

Accessible Learning Resources and Community Support

The democratisation of education extends beyond formal courses to include diverse learning resources that support different learning styles and schedules. YouTube channels such as Programming with Mosh, freeCodeCamp, Alex the Analyst, Tina Huang, and Ken Jee provide free, high-quality content that complements formal education programmes. These resources offer everything from comprehensive programming tutorials to career guidance and project-based learning opportunities.

The 365 Data Science platform contributes to this ecosystem through flashcard decks that reinforce learning of essential terminology and concepts across Excel, SQL, Python, and emerging technologies like ChatGPT. Their statistics calculators provide interactive tools that help students understand the mechanics behind statistical calculations, bridging the gap between theoretical knowledge and practical application.

Udemy's marketplace model supports this diversity by hosting over 100,000 courses, including many free options that allow instructors to share expertise with global audiences. The platform's filtering capabilities enable learners to identify resources that match their specific needs and learning preferences.

Industry Integration and Career Development

Major technology companies have recognised the value of contributing to global education initiatives, with Google, Microsoft and Amazon offering professional-grade courses at no cost. Google's Data Analytics Professional Certificate exemplifies this trend, providing industry-recognised credentials that directly align with employment requirements at leading technology firms.

These industry partnerships ensure that course content remains current with rapidly evolving technological landscapes, whilst providing students with credentials that carry weight in hiring decisions. The integration of real-world projects and case studies helps bridge the gap between academic learning and professional application.

The comprehensive nature of these educational opportunities reflects the complex requirements of modern data and technology roles. Successful professionals must combine technical proficiency with communication skills, statistical understanding with programming capability, and theoretical knowledge with practical application. The diversity of available courses enables learners to develop these multifaceted skill sets according to their career goals and learning preferences.

As technology continues to reshape industries and create new professional opportunities, access to high-quality education becomes increasingly critical. These courses represent more than mere skill development; they provide pathways for career transformation and professional advancement that transcend traditional educational barriers. Whether pursuing data analysis, software development, or artificial intelligence applications, learners can now access world-class education that was previously available only through expensive university programmes or exclusive corporate training initiatives.

The future of professional development lies in this combination of accessibility, quality, and relevance that characterises the modern online education landscape. These resources enable individuals to build expertise that matches industry demands while maintaining the flexibility to learn at their own pace and according to their specific circumstances and goals.

Synthetic Data: The key to unlocking AI's potential in healthcare

18th July 2025

The integration of artificial intelligence into healthcare is being hindered by challenges such as data scarcity, privacy concerns and regulatory constraints. Healthcare organisations face difficulties in obtaining sufficient volumes of high-quality, real-world data to train AI models that can accurately predict outcomes or assist in decision-making.

Synthetic data, defined as algorithmically generated data that mimics real-world data, is emerging as a solution to these challenges. This artificially generated data mirrors the statistical properties of real-world data without containing any sensitive or identifiable information, allowing organisations to sidestep privacy issues and adhere to regulatory requirements.

By generating datasets that preserve statistical relationships and distributions found in real data, synthetic data enables healthcare organisations to train AI models with rich datasets while ensuring sensitive information remains secure. The use of synthetic data can also help address bias and ensure fairness in AI systems by enabling the creation of balanced training sets and allowing for the evaluation of model outputs across different demographic groups.
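To make the idea of preserving statistical relationships more concrete, here is a minimal Python sketch. It assumes that the real data can be summarised adequately by column means and a covariance matrix, and that a multivariate normal distribution is a reasonable model. Production synthetic-data tools for healthcare use far richer models, and fitting a distribution like this is not, on its own, a formal privacy guarantee. The helper `fit_and_sample` and the age and blood-pressure figures are hypothetical; the "real" data is itself simulated so that the example runs on its own.

```python
import numpy as np


def fit_and_sample(real: np.ndarray, n_samples: int, seed: int = 0) -> np.ndarray:
    """Fit a multivariate normal to `real` (rows = patients, columns = variables)
    and draw `n_samples` new rows from it (hypothetical helper)."""
    mean = real.mean(axis=0)
    cov = np.cov(real, rowvar=False)
    rng = np.random.default_rng(seed)
    # New rows come from the fitted distribution; no real row is copied.
    return rng.multivariate_normal(mean, cov, size=n_samples)


# Stand-in "real" data: age and systolic blood pressure for 500 invented patients.
rng = np.random.default_rng(42)
age = rng.normal(60, 10, size=500)
sbp = 90 + 0.8 * age + rng.normal(0, 8, size=500)
real = np.column_stack([age, sbp])

synthetic = fit_and_sample(real, n_samples=5000)
print("real correlation:     ", np.corrcoef(real, rowvar=False)[0, 1].round(2))
print("synthetic correlation:", np.corrcoef(synthetic, rowvar=False)[0, 1].round(2))
```

The two printed correlations should come out close, which is the sense in which the synthetic set "mirrors" the original. Because the generator is just a function call, a larger or rebalanced set is only a change to `n_samples` or to the fitted inputs.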

Furthermore, synthetic data can be generated programmatically, reducing the time spent on data collection and processing and enabling organisations to scale their AI initiatives more efficiently. Ultimately, synthetic data is becoming a critical asset in the development of AI in healthcare, enabling faster development cycles, improving outcomes and driving innovation while maintaining trust and security.

From mathematical insights to practical applications: Two perspectives on AI

19th April 2025

As AI continues to transform our technological landscape, two recent books offer distinct yet complementary perspectives on understanding and working with these powerful tools. Stephen Wolfram's technical deep dive and Ethan Mollick's practical guide approach the subject from different angles, but both provide valuable insights for navigating our AI-integrated future.

- What is ChatGPT Doing?: Wolfram's Technical Lens

Stephen Wolfram's exploration of large language models is characteristically thorough and mathematically oriented. While dense in parts, his analysis reveals fascinating insights about both AI and human cognition.

Perhaps most intriguing is Wolfram's observation that generative AI unexpectedly teaches us about human language production. These systems, in modelling our linguistic patterns with such accuracy, hold up a mirror to our own cognitive processes, perhaps revealing structures and patterns we had not fully appreciated before.

Wolfram does not shy away from highlighting limitations, particularly regarding computational capabilities. As sophisticated as next-word prediction has become through multi-billion parameter neural networks, these systems fundamentally lack true mathematical reasoning. However, his proposal of integrating language models with computational tools like WolframAlpha presents an elegant solution, combining the conversational fluency of AI with precise computational power.
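The core loop Wolfram describes, repeatedly picking a likely next word, can be illustrated at toy scale. The sketch below is a deliberate simplification and is not how ChatGPT is built: instead of a neural network with billions of parameters, it counts which word follows which in a tiny invented corpus (a bigram model) and then extends a sentence one word at a time. The function names `train_bigrams` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append the most frequent successor, one word at a time."""
    out = [start]
    for _ in range(length):
        successors = follows.get(out[-1])
        if not successors:
            break  # no known continuation for this word
        # Ties go to the successor seen first in the corpus.
        out.append(successors.most_common(1)[0][0])
    return out


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 4)))  # prints: the cat sat on the
```

Always taking the single most frequent word quickly makes the output loop and repeat itself. Wolfram explains that ChatGPT instead samples with some randomness, governed by a "temperature" setting, which is one reason its text reads less mechanically.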

- Co-intelligence: Mollick's Practical Framework

Ethan Mollick takes a decidedly more accessible approach in "Co-intelligence," offering concrete strategies for effective human-AI collaboration across various contexts. His framework includes several practical principles:

- Invite AI to the table as a collaborator rather than merely a tool

- Maintain human oversight and decision-making authority

- Communicate with AI systems as if they were people with specific roles

- Assume current AI represents the lowest capability level you will work with going forward

What makes Mollick's work particularly valuable is its attention to context. Drawing on his background as a business professor, he methodically examines how these principles apply across different collaborative scenarios: from personal assistant to creative partner, coworker, tutor, coach, and beyond. With a technology that, even now, retains some of the quality of a solution looking for a problem, these grounded suggestions act as a counterpoint to the torrent of hype that deluges our working lives, especially if you frequent LinkedIn as much as I am doing at present while searching for new freelance work.

- Complementary Perspectives

Though differing significantly in their technical depth and intended audience, both books contribute meaningfully to our understanding of AI. Wolfram's mathematical rigour provides theoretical grounding, while Mollick's practical frameworks offer immediate actionable insights. For general readers looking to productively integrate AI into their work and life, Mollick's accessible approach serves as an excellent entry point. Those seeking deeper technical understanding will find Wolfram's analysis challenging but rewarding.

As we navigate this rapidly evolving landscape, perspectives from both technical innovators and practical implementers will be essential in helping us maximise the benefits of AI while mitigating potential drawbacks. As ever, the hype outpaces practical experience, leaving us to endure the marketing output while we wait for real experiences to be shared. Those experiences are more tangible and will allow us to make use of game-changing technical advances.

A little bit of abstraction: The quiet utility of generated imagery

21st August 2021

Data science has remained in my awareness since 2017, though my work in clinical research sits more on its fringes. In fact, I have been involved more in the standardisation and automation of traditional data reporting than in meeting the needs of data modelling through data engineering or similar disciplines. Much of this effort has meant the use of SAS, with which I have programmed since 2000 and for which I have a licence (an expensive commodity, it has to be said), but other technologies are being explored too, with R, Python and Julia among them.

Though the change in technological scope does bring an element of excitement and new interest, there is also some sadness when tried and trusted technologies meet newer competition and valued skills no longer secure a career as they once did. Still, there is plenty of online training out there, and I have already collected some of my thoughts on this. The learning continues, and the need for repositioning is also clear.

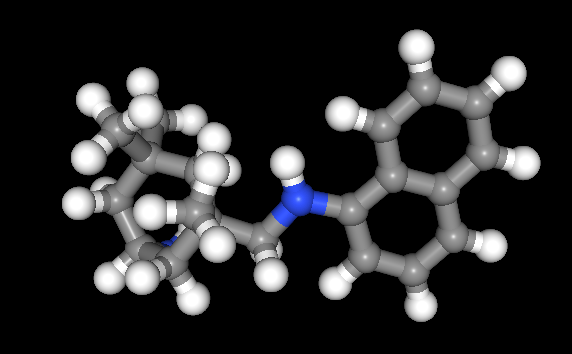

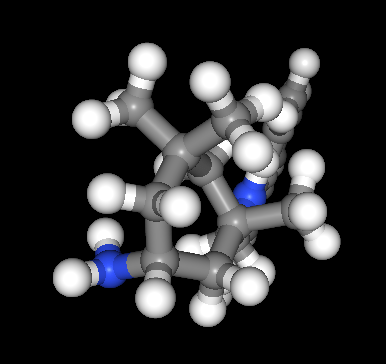

The journey also brought some curios to my notice. One of these is This Person Does Not Exist, a website that uses machine learning to generate photos of non-existent faces. Recently, I learned of others like it, such as This Artwork Does Not Exist, This Cat Does Not Exist, This Horse Does Not Exist, and This Chemical Does Not Exist. The last of these should probably be called "This Molecule Does Not Exist (Yet)" since what you get is a fictitious molecular structure, shown as a moving image that spins it around in three-dimensional space. The one with dynamically generated abstract art is the main inspiration for this piece and is of most interest to me, while the cat and horse sites are more self-explanatory, though the horse website is less successful in its execution and one can ask why we need more cat pictures.

To some, the idea of creating fake pictures may feel a little foreboding, especially where photos of people and the livelihoods of content creators are concerned. Nevertheless, these sources of imagery have legitimate uses, such as decorating websites or brochures, which is where my interest lies. After all, pictures can be scarce for some subjects, so any decoration that enlivens an article has its use. Technology websites like this one can feature screenshots and device photos, but these can all look alike, hence the need for a little more variety. Having pictures also widens the choice of website themes, since so many need images to work or to stand out. As ever, being sparing with any innovation remains in order, and that is how I approach this matter as well.