Terminology for larger and larger disk drive data volumes

3rd October 2007

When I started out in computing at university, 200-300 MB hard drives were the norm for PCs. My own first PC had what was then considered a sizeable 1.6 GB disk, and sizes have only grown since then. Now, I have access to several hundred gigabytes of storage at home, and 1 TB drives are starting to appear on the PC market.

Terabyte storage has been the preserve of the server market until now, but given the disk sizes now available, even larger units are needed to describe data volumes, including some that I hadn't come across before. So here goes:

| Unit | Equivalent (binary) | Number of bytes (binary*) | Number of bytes (decimal*) |
|---|---|---|---|
| petabyte (PB) | 1024 TB | 2^50 | 10^15 |
| exabyte (EB) | 1024 PB | 2^60 | 10^18 |
| zettabyte (ZB) | 1024 EB | 2^70 | 10^21 |
| yottabyte (YB) | 1024 ZB | 2^80 | 10^24 |
* Binary measurements are used by operating systems such as Windows and Linux, while decimal ones are used by hard drive manufacturers.
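The gap between the two conventions widens with each step up the scale. As a rough illustration, here is a small Python sketch (the function names are my own, purely for illustration) that computes both forms for each unit and shows why a drive sold as 1 TB is reported as only about 931 GB by an operating system counting in powers of 1024.

```python
# Minimal sketch: binary (powers of 1024) versus decimal (powers of 1000) units.
UNITS = ["kilobyte", "megabyte", "gigabyte", "terabyte",
         "petabyte", "exabyte", "zettabyte", "yottabyte"]


def unit_sizes(name):
    """Return (binary_bytes, decimal_bytes) for the named unit."""
    power = UNITS.index(name) + 1  # kilobyte is the first power
    return 1024 ** power, 1000 ** power


def in_binary_units(num_bytes, name):
    """Express a byte count as a multiple of the binary form of the named unit."""
    binary_bytes, _ = unit_sizes(name)
    return num_bytes / binary_bytes


if __name__ == "__main__":
    for name in UNITS[4:]:  # petabyte upwards, as in the table
        binary_bytes, decimal_bytes = unit_sizes(name)
        print(f"{name}: binary {binary_bytes:,} bytes, "
              f"decimal {decimal_bytes:,} bytes, "
              f"ratio {binary_bytes / decimal_bytes:.3f}")

    # A drive sold as 1 TB holds 10**12 bytes; counted in powers of 1024,
    # that comes to roughly 931 GB.
    advertised = unit_sizes("terabyte")[1]
    print(f"1 TB drive: {in_binary_units(advertised, 'gigabyte'):.1f} binary GB")
```

The ratio column makes the point: by the yottabyte, the binary unit is about a fifth larger than its decimal namesake.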

While I know that the above strays into the realm of esoterica, the way that things have been going may mean that we are talking about petabytes before very long. As it happens, HP recently mentioned zettabytes when talking about its range of UNIX servers, and I had to go and look up what it meant...

Porting SAS files to other platforms and versions

1st October 2007

SAS uses its transport file format to port files between operating systems and, where the need arises, between different software versions. As with many things, there is more than one way to create these transport files: PROC CPORT/CIMPORT and PROC COPY with the XPORT engine. The former is for transferring SAS files between operating systems within the same SAS version (UNIX to Windows, for instance), while the latter is for cross-version transfer (SAS 9 to SAS 8, for example). SAS Institute has a page devoted to this subject that goes into more detail.

Quashing WordPress 2.3 upgrade gremlins…

26th September 2007

Primarily because of the WordPress plugins that I use, a few inconsistencies that needed fixing have come out of the woodwork. Here are the issues that I encountered:

Database errors appearing in web pages

This was a momentary discovery along the upgrade trail, caused entirely by the way in which I was doing things. As usual, I copied the WordPress 2.3 files over to my web server first, so I saw these errors before I had run the upgrade script. Running the script banished them, confirming that the WordPress 2.3 code had been trying to access a WordPress 2.2-style database; 2.3 makes some database changes to incorporate tagging.

Dashboard Editor no longer fully functional

WordPress 2.3's move to jQuery meant that some of the things for which the plugin was looking had changed. The WordPress developers also switched the dashboard's incoming links provider from Technorati to Google, now that the former is having a tougher time of it. It took a while to track down why I was unable to remove components from the front page of my dashboard as before, but a quick comparison of the 2.3 code with its 2.2.3 forebear revealed all. I can make a copy of the updated code available for those who need it.

WordPress Admin Themer

The plugin works as before and does its job so faithfully that it ends up applying an old admin stylesheet (kept in the blog's theme folder) to the latest release. It only took a spot of tweaking to put everything in order.

I am not complaining about any of these, partly because they were easy to resolve and, in any event, I don't mind a spot of code cutting. However, I can foresee some users being put out by them, hence my sharing these experiences.

Update: Dashboard Editor has since been updated by the author. Even so, I will stick with my own version of the plugin.

Running SQL scripts with MySQL

23rd September 2007

Here's another of those little things that you forget if you aren't using them every day: running MySQL scripts from the Windows command line. Yes, you can also run SQL commands interactively, but there's a certain convenience about scripts. I am putting an example here so that it can be found again easily:

mysql -u username -p databasename < script.sql

Though I wouldn't be at all surprised if the same line worked under Linux and UNIX, I haven't needed to give it a try.
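If the same job needs doing from a program rather than typed at a prompt, the redirection can be reproduced with Python's standard subprocess module. This is a minimal sketch under a few assumptions: the mysql client is on the PATH, and the helper names build_mysql_command and run_sql_script are hypothetical ones of my own. The idea is simply that `< script.sql` hands the file to the client's standard input.

```python
import subprocess


def build_mysql_command(username, database):
    """Build the argument list for `mysql -u username -p databasename`."""
    # A bare -p makes the mysql client prompt for the password interactively.
    return ["mysql", "-u", username, "-p", database]


def run_sql_script(username, database, script_path):
    """Feed a SQL script to the mysql client on standard input.

    This mirrors the shell's `< script.sql` redirection: the client reads
    the statements from stdin rather than from the keyboard.
    """
    with open(script_path, "rb") as script:
        # check=True raises CalledProcessError if mysql exits with an error
        return subprocess.run(build_mysql_command(username, database),
                              stdin=script, check=True)
```

Calling run_sql_script("username", "databasename", "script.sql") from a console should behave much like the command line above, password prompt included.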

WordPress database error: [Can’t create table … (errno: 121)]

22nd September 2007

When I was trying to upgrade one of my test blogs to WordPress 2.3 RC1, I got error messages like the above littering the screen during the installation. The PHP functions mysql_errno (or mysqli_errno) and mysql_error (or mysqli_error) seem to have been busily at work. These messages told me that the upgrade hadn't worked, so I went off googling as usual and perused the MySQL tomes in my possession. Not for the first time, the web yielded nothing but dross and, in the end, I tried deleting the relevant database and starting again from scratch. That resolved the problem.

Deleting the database sorted things out because MySQL had become confused by a mismatch between what was in the file system and what its InnoDB data dictionary was saying. I think that the cause was my naively copying table files in using Windows Explorer. Deleting the database cleared the air, and all was well once I allowed WordPress to do the needful in its time-honoured way. Another lesson has been learned for the future, though I wish that frustration wasn't part of the learning experience too...

Trying out WordPress 2.3 RC1

21st September 2007

The final release of the next version of WordPress is due out on Monday and, because it is sure to include security fixes, I have been giving the release candidate a go on my offline blogs. Since 2.3 is another major release, I have been doing some preparation; the WordPress project even has a blog post dedicated to preparing for this release. Thankfully, I think that my hillwalking blog should emerge unscathed from this upgrade; I still need to try it with an offline copy of this blog.

The headline feature in the new release is tagging, and it is good to see that it has had no impact on legacy set-ups. Though I had the same reservations about 2.2 with its inclusion of sidebar widgets, its backward compatibility saw me through without any hiccups. Tagging is not something that I see myself using, since categories fulfil much the same role, and I am unconvinced by tag clouds, the sort of thing that tagging powers. However, there are some useful extras here, and the filtering of posts by edit status is one of them. Having "pending review" as a publishing status sounds like a tweak that I might use to give myself a cooling-off period before I publish a post for all the world to see. Revisiting something with a fresher pair of eyes might stop typographical howlers from emerging into public view...

Update: Another 2.3 feature discovered! I picked up on multiple category widgets because category styling disappeared when I upgraded the offline version of this blog to 2.3 RC1. Adding "-1" to the relevant CSS class definitions soon sorted things out. It does reinforce the case for testing before implementation, even if no other unexpected changes were spotted. I am still not sure why anyone would want multiple category listings, though.

SAS Institute enters the blogosphere

19th September 2007

To get to the blogs hosted by SAS Institute, all you need to do is go here. There is quite a spread of subject matter, ranging from high-level business strategy offerings through to detailed snippets for SAS programmers. There appears to be a lot here for anyone interested in SAS and business intelligence. I must take a longer look.

Update: I have since discovered a central listing of SAS Institute RSS feeds. The list is well worth your perusal.

Twelve months of WordPress

18th September 2007

It was on this date a year ago that I moved my hillwalking blog into the world of WordPress. It has been a self-hosted WordPress instance for all that time. The move also took me into the world of MySQL, one that still intrudes to this day. Most of the migration time went on setting up a theme to fit in with the rest of the website, of which the blog forms an essential part. Importing all the old posts took up time too, especially when it came to fixing glitches with the XML import. Still, it was all done within a weekend, and my website hasn't looked back since. More people now have a reason to visit, and the blog may even have surpassed the photo gallery as the site's main attraction. I kept up the old blog for a while but have since dispensed with it; keeping both blogs synchronised became a tiring manoeuvre. Another upshot of the whole experience is that I have become more aware of the UK outdoor scene and learnt a thing or two along the way. It might even have encouraged me to go from day tripping to multi-day backpacking, a real-world change that is well removed from the world of technology.

CSS Control of Text Wrapping

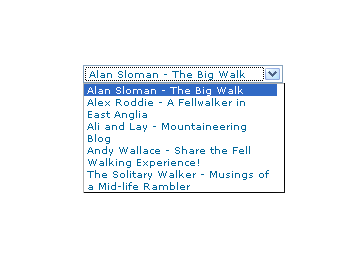

11th September 2007

Recently, I spotted a request for a dropdown list like the one that you see below. I managed to create it using the following CSS, but it only worked in Firefox, so I couldn't suggest it to the requester.

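form select option {width: 185px; white-space: normal;} /* let long option text wrap */

form select {height: 16px; width: 200px; white-space: normal;} /* the closed dropdown itself */

form {margin: 300px auto 0 auto; width: 300px;} /* push the form down 300px and centre it horizontally */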

Here's how it looks in Firefox 2:

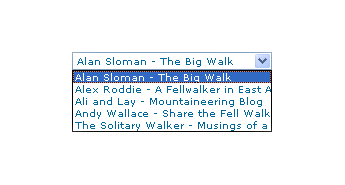

And in IE6:

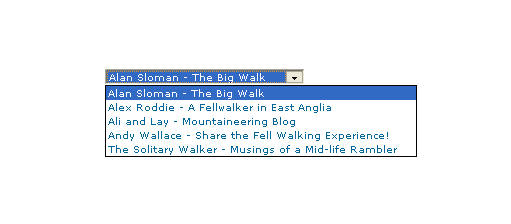

And in Opera 9:

It would be nice if the white-space property gave the same result in all three, but hey ho... As it happens, the W3C are working on other possible ways of controlling text wrapping in (X)HTML elements, but that's for the future, and I'll believe it when I see it.

For menus with wrapped entries, using DHTML menus and DOM scripting seems the best course for now. I suppose that you could always make the entries shorter, which is precisely what I tend to do; I am pragmatic like that. Nevertheless, there's never any harm in attempting to push the boundaries. You just have to come away from the cutting edge at the first sign of bleeding...

Of course, if anyone has other ideas, please let me know.

Another side to hardening WordPress

7th September 2007

A little while back, I took to using the wonders of .htaccess directives to make my WordPress deployments more secure. It does work but has the disadvantage that desktop blog editors such as Windows Live Writer, Word 2007 and w.bloggar can no longer be used to update your blog. Though I must have a look at getting around this, I am sticking with WordPress itself for editing for now (Dean Lee's port of FCKeditor for WordPress is working out very well, spurious codes notwithstanding).