A use for choice

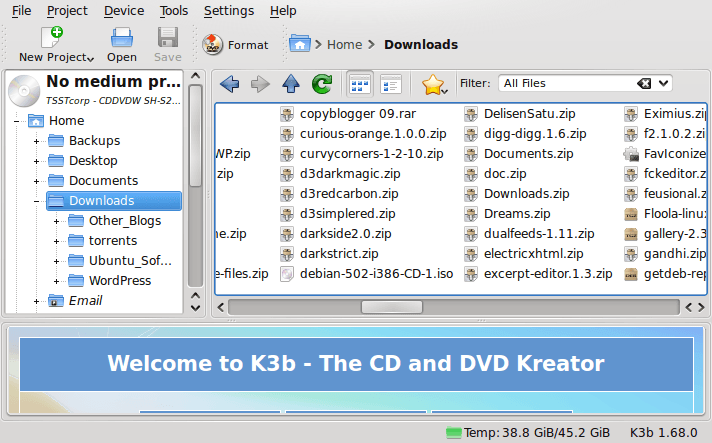

8th November 2009

After moving to Ubuntu 9.10, Brasero stopped behaving as well as it did in Ubuntu 9.04. None of the bootable disks that I created with it were without glitches. After a recent update, things got better, with a live CD actually booting up a PC rather than failing to find a file system like those created with its forebear. While unsure if the observed imperfections stemmed from my using the RC for the upgrades and installations, I got to looking for a solution and gave K3b a go. It certainly behaves as I'd expect, and a live CD created with it worked without fault. The end result is that Brasero has been booted off my main home system for now. That may mean that all the in-built GNOME convenience is lost to me, but I can live without the extras; after all, it's the quality of the created disks that matters.

DePo Masthead

6th November 2009

There is a place on WordPress.com where I share various odds and ends about public transport in the U.K. It's called On Trains and Buses, and I try not to go tinkering with the design side of things too much. You can only change the CSS, and my previous experience of doing that with this edifice while it lived on there taught me not to expect too much, even if there are sandbox themes for anyone to turn into something presentable. Not that I really would want to go doing that in full view of everyone: doing it offline first and copying the CSS across when it's done is my preferred way of going about it. Besides, I wanted to see how WordPress.com fares these days anyway.

While my public transport blog has been around for only a little over a year, it has worn a few themes over that time, ranging from the minimalist The Journalist v1.9 and Vigilance through to Spring Reloaded. After the last of these, I am back to minimalist again with DePo Masthead, albeit with a spot of my own colouring to soften its feel a little. Though I must admit to growing to like it, it came to my attention that it was a bespoke design from Derek Powazek that Automattic's Noel Jackson turned into reality. The result would appear to be that you cannot get it anywhere but from the WordPress.com Subversion theme repository. For those not versed in the little bit of Subversion action that is needed to get it, I did it for you and put it all into a zip file without making any changes to the original, hoping that it might make life easier for someone.

Troubleshooting SATA drive detection issues in Ubuntu 9.10

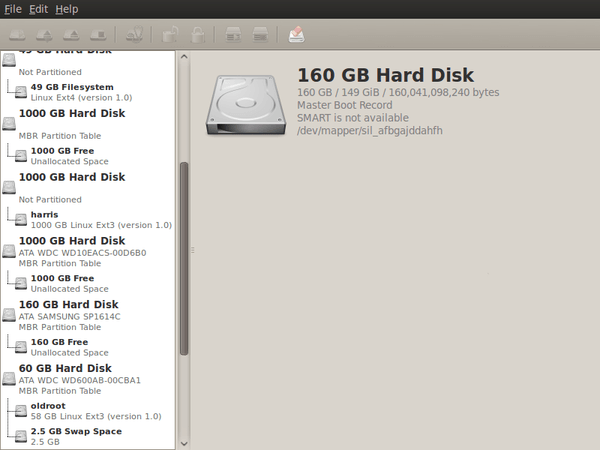

4th November 2009

One of the early signs that I noticed after upgrading my main PC to Ubuntu 9.10 was a warning regarding the health of one of my hard disks. While others have reported that this can be triggered by the least bit of roughness in a SMART profile, that's not how it was for me. The PATA disk that has hosted my Ubuntu installation since the move away from Windows had a few bad sectors but no adverse warning. It was a 320 GB Western Digital SATA drive that was raising alarm bells with its 200 bad sectors.

The conveyor of this news was Palimpsest (not sure how it got that name even when I read the Wikipedia entry) and that is part of the subject of this post. Some have been irritated by its disk health warnings, yet it's easy to make them go away by turning off Disk Notifications in the dialogue that going to System > Preferences > Startup Applications will bring up for you. To fire up Palimpsest itself, there's always the command line, but you'll find it at System > Administration > Disk Utility too.

My complaint about it is that I see the same hard drive listed in there more than once, and it takes some finding to separate the real entries from the "bogus" ones. Whether this is because Ubuntu has seen my SATA drives with SIL RAID mappings (for the record, I have no array set up) or not is an open question, but it's one that needs continued investigation and I already have had a go with the dmraid command.

Even GParted shows both the original /dev/sd* type addressing and the /dev/mapper/sil_* equivalent, with the latter being the one with which you need to work (Ubuntu now lives on a partition on one of the SATA drives, which is how I noticed this). All in all, it looks less than tidy, so additional interrogation is in order, especially when I have no recollection of 9.04 doing anything of the sort.

Slower ImageMagick DNG processing in Ubuntu 9.10

2nd November 2009

A little while ago, I encountered a problem with ImageMagick processing DNG files in Ubuntu 9.04. Not realising that I could solve my own problem by editing a file named delegates.xml, I took to getting a Debian VM to do the legwork for me. That file is where you'll find all the commands that ImageMagick uses when it calls on helper software to help it on its way. On its own, ImageMagick cannot deal with DNG files, so the command line variant of UFRaw (itself a front end for DCRaw) is used to create a PNM file that ImageMagick can handle. The problem a few months back was that the command in delegates.xml wasn't appropriate for a newer version of UFRaw and I got it into my head that things like this were hard-wired into ImageMagick. Now, I know better and admit my error.
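To make the role of delegates.xml a little more concrete, here is a minimal Python sketch that looks up the command attached to a given delegate. The sample XML below is an illustrative stand-in and not a copy of the file that Ubuntu or Debian ships; the exact UFRaw options in a real delegates.xml vary between ImageMagick versions, and the function name is my own invention.

```python
import xml.etree.ElementTree as ET

# Illustrative stand-in for delegates.xml (an assumption, not the shipped file).
# %i and %u are ImageMagick placeholders for the input file and a temporary file.
SAMPLE_DELEGATES = """<delegatemap>
  <delegate decode="dng:decode" command="&quot;ufraw-batch&quot; --silent --out-type=ppm &quot;--output=%u.ppm&quot; &quot;%i&quot;"/>
  <delegate decode="example" command="example-helper &quot;%i&quot;"/>
</delegatemap>
"""


def find_delegate_command(xml_text, decode_name):
    """Return the command for the delegate whose decode attribute matches, or None."""
    root = ET.fromstring(xml_text)
    for delegate in root.iter("delegate"):
        if delegate.get("decode") == decode_name:
            return delegate.get("command")
    return None


if __name__ == "__main__":
    # Shows which helper command would be run for DNG input in the sample above.
    print(find_delegate_command(SAMPLE_DELEGATES, "dng:decode"))
```

Pointing the same function at the contents of a real delegates.xml should reveal which UFRaw command line is in force, which is the thing to compare against the options that the installed UFRaw version actually accepts.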

With 9.10, it appears that the command in delegates.xml has been corrected, only for another issue to raise its head. UFRaw 0.15, it seems, isn't the speediest when it comes to creating PNM files and, while my raw file processing script works after a spot of modification to deal with changes in output from the identify command used, it takes far too long to run. Since GIMP also uses UFRaw, I wonder if the same problem has surfaced there too. The slowness has been noticed by the Debian team, with the outcome being that they have a package for version 0.16 of the software in their unstable branch that looks as if it has sorted the speed issue. Even so, I am seeing that 0.15 is in the testing branch, which tempts me to stick with Lenny (5.x) if any successor turns out to have slower DNG file handling with ImageMagick and UFRaw. In my estimation, 0.13 does what I need, so why go for a newer release if it turns out to be slower?

Evoluent Vertical Mouse 3 Configuration in Ubuntu 9.10

31st October 2009

On popping Ubuntu 9.10 onto a newly built PC, I noticed that the button mappings weren't as I had expected them to be. The button just below the wheel no longer acted like a right mouse button on a conventional mouse, and it really was throwing me. The cause was found to be in a file named evoluent-verticalmouse3.fdi that is found either in /usr/share/hal/fdi/policy/20thirdparty/ or /etc/hal/fdi/policy/.

So, to get things back as I wanted, I changed the following line:

<merge key="input.x11_options.ButtonMapping" type="string">1 2 2 4 5 6 7 3 8</merge>

to:

<merge key="input.x11_options.ButtonMapping" type="string">1 2 3 4 5 6 7 3 8</merge>

If there is no sign of the file on your system, then create one named evoluent-verticalmouse3.fdi in /etc/hal/fdi/policy/ with the following content and you should be away. All that's needed to set things to rights is to disconnect the mouse and reconnect it again in both cases.

<?xml version="1.0" encoding="ISO-8859-1"?>
<deviceinfo version="0.2">
  <device>
    <match key="info.capabilities" contains="input.mouse">
      <match key="input.product" string="Kingsis Peripherals Evoluent VerticalMouse 3">
        <merge key="input.x11_driver" type="string">evdev</merge>
        <merge key="input.x11_options.Emulate3Buttons" type="string">no</merge>
        <merge key="input.x11_options.EmulateWheelButton" type="string">0</merge>
        <merge key="input.x11_options.ZAxisMapping" type="string">4 5</merge>
        <merge key="input.x11_options.ButtonMapping" type="string">1 2 3 4 5 6 7 3 8</merge>
      </match>
    </match>
  </device>
</deviceinfo>

While I may not have appreciated the sudden change, it does show how you remap buttons on these mice, and that can be no bad thing. Saying that, hardware settings can be personal things, so it's best not to go changing defaults based on one person's preferences. It just goes to show how valuable discussions like the one on Launchpad about this matter can be. For one, I am glad to know what happened and how to make things the way that I want them to be, though I realise that it may not suit everyone; that makes me reluctant to ask for such things to be made the standard settings.
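For anyone wondering how to read a ButtonMapping value, each position in the string stands for a physical button (counting from one) and the number found there is the logical button that X reports for it; logical button 3 is conventionally the right click. The short Python sketch below reproduces the edit made above on that basis. The helper name is hypothetical, and which physical button sits where on a given mouse is something to check for your own device.

```python
def remap_button(mapping, physical_button, logical_button):
    """Return a ButtonMapping string with one physical button reassigned.

    Positions in the string are physical buttons (counted from 1); the value
    at each position is the logical button reported for that physical button.
    """
    buttons = mapping.split()
    if not 1 <= physical_button <= len(buttons):
        raise ValueError("physical_button is outside the mapping")
    buttons[physical_button - 1] = str(logical_button)
    return " ".join(buttons)


if __name__ == "__main__":
    shipped = "1 2 2 4 5 6 7 3 8"  # the value found in the .fdi file
    # Make the third physical button report as logical button 3 again.
    print(remap_button(shipped, 3, 3))  # prints: 1 2 3 4 5 6 7 3 8
```

The resulting string is what goes between the merge tags for input.x11_options.ButtonMapping.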

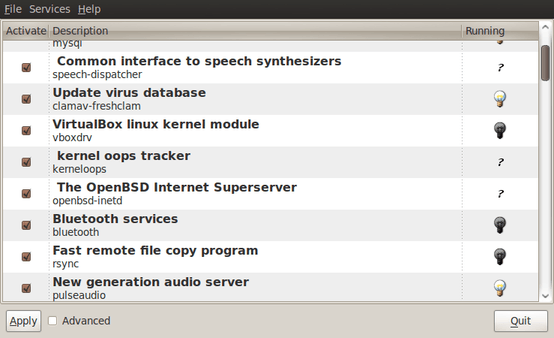

Service management in Ubuntu 9.10

29th October 2009

The final release of Ubuntu 9.10 is due out today, but there is a minor item that seems to have disappeared from the System > Administration menu, in the release candidate at least: Services. While some readers may put me right, I can't seem to find it anywhere else. Luckily, there is a solution in the form of the GNOME Boot-Up Manager or BUM as it is known sometimes. It is always handy to have a graphical means of restarting services, and BUM suffices for the purpose. Restarting Apache from the command line is all well and good, but the GUI approach has its place too.

A multitude of operating systems

27th October 2009

Like buses, it appears that a whole horde of operating systems is descending upon us at once. OS X 10.6 came first, before it was the turn of Windows 7 last week with all the excitement that it generated in the computing and technology media. Next up will be Ubuntu, already a source of some embarrassment for the BBC's Rory Cellan-Jones when he got his facts muddled; to his credit, he later corrected himself, though I do wonder how up to speed he is in his appreciation that Ubuntu has its distinct flavours, with a netbook variant being different to the main offering that I use. Along with Ubuntu 9.10, Fedora 12 and openSUSE 11.2 are also waiting in the wings. As if all these weren't enough, the latest issue of PC Plus gives an airing to less well-known operating systems like Haiku (the project that carries on BeOS).

The inescapable conclusion is that, far from the impressions of mainstream computer users who know only Windows, we are swimming in a sea of operating system options in which you may drown if you decide to try sampling them all. That may explain why I stick with Ubuntu for home use for reasons of familiarity and reliability and leave much of the distro hopping to others. Of course, it shouldn't surprise anyone that Windows is the choice where I work, with 2000 being usurped by Vista in the next few weeks (IT managers always like to be behind the curve for the sake of safety).

Still able to build PC systems

25th October 2009

This weekend has been something of a success for me on the PC hardware front. Earlier this year, a series of mishaps rendered my former main home PC unusable; it was a power failure that finished it off for good. My remedy was a rebuild using my then usual recipe of a Gigabyte motherboard, AMD CPU and Crucial memory. However, assembling the said pieces never returned the thing to life and I ended up in no man's land for a while, dependent on my backup machine and laptop. That wouldn't have been so bad but for the need to access data from the old behemoth's hard drives, though an external drive housing set that in order. Nevertheless, there is something unfinished about working with machines that have a series of external drives hanging off them. That appearance of disarray was set to rights by the arrival of a bare-bones system from Novatech in July, with any assembly work restricted to the kitchen table. There was a certain pleasure in seeing a system come to life after my developing a fear that I had lost all of my PC building prowess.

That restoration of order still left finding out why those components bought earlier in the year didn't work together well enough to give me a screen display on start-up. Having electronics testing equipment and the knowledge of its correct use would make any troubleshooting far easier, but I haven't got these. While there is a place near to me where I could go for this, you are left wondering what might be said to a PC build gone wrong. Of course, the last thing that you want to be doing is embarking on a series of purchases that do not resolve the problem, especially in the current economic climate.

One thing to suspect when all doesn't turn out as hoped is the motherboard and, for whatever reason, I always suspect it last. It now looks as if that needs to change after I discovered that it was the Gigabyte motherboard that was at fault. Whether it was faulty from the outset or came a cropper through a rogue power supply or carelessness with static protection is something that I'll never know. An Asus motherboard did go rogue on me in the past, and it might be that it ruined CPUs and even a hard drive before I laid it to rest. Its eventual replacement put a stop to a year of computing misfortune and kick-started my reliance on Gigabyte. While that faith is under question now, the 2009 computing hardware mishap seems to be behind me; any future PC rebuilds will be done on tables, and motherboards will be suspected earlier when anything goes awry.

Returning to the present, my acquisition of an ASRock K10N78 and subsequent building activities have brought a new system using an AMD Phenom X4 CPU and 4 GB of memory into use. In fact, I am writing these very words using the thing. It's all in a new TrendSonic case too (placing an elderly behemoth into retirement), along with a SATA hard drive and DVD writer. Since the new motherboard has onboard audio and graphics, external cards are not needed unless you are an audiophile and/or a gamer; for the record, I am neither. Those additional facilities make for easier building and fault-finding should the undesirable happen.

The new box is running the release candidate of Ubuntu 9.10, which seems to be working without a hitch too. Since earlier builds of 9.10 broke in their VirtualBox VM, you should understand the level of concern that this aroused in my mind; the last thing that you want to be doing is reinstalling an operating system because its booting capability breaks every other day. Thankfully, the RC seems to have none of these rough edges, so I can upgrade the Novatech box, still my main machine and likely to remain so for now, with peace of mind when the time comes.

Never undercutting the reseller: Pondering options for buying Windows 7 licenses in light of Microsoft pricing

23rd October 2009

Quite possibly, THE big technology news of the week has been the launch of Windows 7. Regular readers may be aware that I have been having a play with the beta and release candidate versions of the thing since the start of the year. In summary, I have found it to work both well and unobtrusively. While there have been some rough edges when accessing files through VirtualBox's means of accessing the host file system from a VM, that's the only perturbation to be reported and, even then, it only seemed to affect my use of Photoshop Elements.

Therefore, I had it in mind to get my hands on a copy of the final release after it came out. Of course, there was the option of pre-ordering, but that isn't for everyone, so there are others. A trip down to the local branch of PC World will allow you to satisfy your needs with different editions: full, upgrade (if you already have a copy of XP or Vista, it might be worth trying out the Windows Secrets double installation trick to get it loaded on a clean system) and family packs. The last of these is very tempting: three Home Premium licences for around £130.

Though wandering around to your local PC components emporium is an alternative, you have to remember that OEM versions of the operating system are locked to the first (self-built) system on which they are installed. Apart from that restriction, the good value compared with retail editions makes them worth considering as long as you realise the commitment that you are making.

The last option that I wish to bring to your attention is buying directly from Microsoft themselves. You would think that this may be cheaper than going to a reseller, but that's not the case with the Family Pack costing around £150 in comparison to PC World's pricing, and it doesn't end there. That they only accept Maestro debit cards along with credit cards from the likes of Visa and Mastercard perhaps is another sign that Microsoft is new to the whole idea of selling online.

In contrast, Tesco is no stranger to online selling, and they also have Windows 7 on offer even though they aren't noted for computer sales. PC World may be forgiven for wondering what that means, but who would buy an operating system along with their groceries? I suppose that the answer would be that people who are accustomed to having their essentials delivered at a convenient time should be able to do the same with computer goods too. That convenience of timing is another feature of downloading an OS from the web, and many a Linux fan should know what that means. While Microsoft may have discovered this only of late, that's better than never.

Because of my positive experience with the pre-release variants of Windows 7, I am very tempted to get my hands on the commercial release. Because I have until early next year with the release candidate and XP works sufficiently well (it ultimately has given Vista something of a soaking), I will be able to bide my time. When I do make the jump, it'll probably be Home Premium that I'll choose because it seems difficult to justify the extra cost of Professional. It was different in the days of XP, when its Professional edition did have something to offer technically minded home users like me. With 7, XP Mode might be a draw, but with virtualisation packages like VirtualBox available for no cost, it's difficult to justify spending extra. In any case, I have Vista Home Premium loaded on my Toshiba laptop and that seems to work fine, despite all the bad press that Vista has got itself.

Going mobile

20th October 2009

Now that the mobile web is upon us, I have been wondering about making my various web presences more friendly for users of that platform, and my interest has been piqued especially by the recent addition of such capability to WordPress.com. With that in mind, I grabbed the WordPress Mobile Edition plugin and set it to work, both on this blog and my outdoors one. Well, the results certainly seem to gain a seal of approval from mobiReady so that's promising. While it comes with a version of the Carrington Mobile theme, you need to pop that into the themes directory on your web server yourself, as WordPress' plugin installation routines won't do that for you. It could be interesting to see how things go from here, and the idea of creating my own theme while using the plugin for redirection honours sounds like a way forward; I have found the place where I can make any changes as needed. Homemade variants of the methodology may find a use with my photo gallery and Textpattern sub-sites.