How mobile device interfaces are influencing desktop computing environment designs

19th September 2011

Could 2011 be remembered as the year when the desktop computing interface got a major overhaul? One part of this, Windows 8, won't be with us until next year, but enough has happened so far this year to generate a lot of comment. In many, if not all, of the changes, it is possible to detect the influence of interfaces used on smartphones. After all, the carry-over from Windows Phone 7 to the new Metro interface is unmistakeable.

Two developments in the Linux world have spawned a hell of a lot of comment: Canonical's decision to develop Unity for Ubuntu and the arrival of GNOME 3. While there have been many complaints about the changes made in both, there must be a fair few folk who are just getting on with using them without complaint. Maybe many even quietly like the new interfaces. While I am not so sure about Unity, I surprised myself by taking to GNOME Shell so much that I installed it on Linux Mint. Like Unity, it remains a work in progress, but it'll be very interesting to see it mature. Perhaps a good number of the growing collection of GNOME Shell extensions could make it into the main codebase. If that were to happen, I could see it being welcomed by a good few folk.

There was little doubt that the changes in GNOME 3 looked daunting, so Ubuntu's taking a different approach is understandable until you come to realise how much change that involves anyway. With GNOME 3 working so well for me, I feel disinclined to dally very much with Unity at all. In fact, I am writing these words on a Toshiba laptop running UGR, effectively Ubuntu running GNOME 3, and that could become my main home computing operating system in time.

For those who find these changes not to their taste, there are alternatives. Some Linux distributions are sticking with GNOME 2 as long as they can, and there apparently has been some mention of a fork to keep a GNOME 2 interface available indefinitely. However, there are other possibilities such as LXDE and XFCE out there too. In fact, until GNOME 3 won me over, LXDE was coming to mind as a place of safety until I learned that Linux Mint was retaining its desktop identity. As always, there's KDE too, but I have never warmed to that for some reason.

The latest version of OS X, Lion, also included some changes inspired by iOS, the operating system that powers both the iPhone and iPad. However, while the current edition of PC Pro highlights some disgruntlement in professional circles regarding Apple's direction, this does not seem to have aroused the kind of ire that has been abroad in the world of Linux. Is it because Linux users want to feel that they are in charge and that iMac and MacBook users are content to have decisions made for them so long as everything just works? Speaking for myself, the former description seems to fit me, though having choices means that I can reject decisions that I do not like so much.

At the time of writing, the release of a developer preview of the next version of Windows has been generating a lot of attention, and it appears that changes are headed for Windows users too. However, I get the sense that a more conservative interface option will be retained, and that could be essential for avoiding the alienation of corporate users. After all, I cannot see the Metro interface gaining much favour in the working environment when so many of us have so much to do. Nevertheless, I plan to get my hands on the developer preview to have a look (the weekend proved too short for this). It will be very interesting to see how the next version of Windows develops, and I plan to keep an eye on it as it does so.

It now looks as if many will have their work cut out if they are to avoid where desktop computing interfaces are going. Established paradigms are being questioned, particularly as a result of touch interfaces on smartphones and tablets. Wii and Kinect have introduced other ways of interacting with computers, too, so there's a lot of mileage in rethinking how we work with them. So far, I have been able to deal with the changes in the world of Linux, but I am left wondering about the changes that Microsoft is making. After Vista, they need to be careful, and they know that. Maybe they'll be better than others at getting users through changes in computing interfaces, but it'll be very interesting to see what happens. Unlike open-source community projects, they have the survival of a massive multinational at stake.

Why the delay?

17th September 2011

The time to renew my subscription to .Net magazine came around, and I decided to go for the digital option this time. The main attraction is that new issues come along without their cluttering up my house afterwards. After all, I do get to wondering how much space would be taken up by photos and music if those respective fields hadn't gone down the digital route. Some may decry the non-printing of photos that reside on hard disks or equivalent electronic storage media, but they certainly take up less physical space like that. Of course, ensuring that they are backed up in case of a calamity then becomes an important concern.

As well as the cost of a weekly magazine that I didn't read as much as I should, it was concerns about space that drove me to go the electronic route with New Scientist a few years back. They were early days for digital magazine publishing and felt like it, too. Eventually, I weaned myself from NS and the move to digital helped. Maybe trying to view magazine articles on a 17" screen wasn't as good an experience as seeing them on the 24" one that I possess these days.

That bigger screen has come in very handy for Zinio's Adobe AIR application for viewing issues of .Net and any other magazine that I happen to get from them. There's quite a selection on there, and it's not limited to periodicals from Future Media, either. Other titles include The Economist, Amateur Photographer, Countryfile, What Car and the aforementioned New Scientist as well. That's just a sample of the eclectic selection that is on offer.

For some reason, Future seem to wait a few days for the paper versions of their magazines to arrive in shops before the digital ones become available. To me, this seems odd, given that you'd expect the magazines to exist on computer systems before they come off the presses. Not only that, but subscribers to the print editions get them before they reach the shops at all anyway. This is the sort of behaviour that makes you wonder if someone somewhere is attempting to preserve print media.

In contrast, Scientific American get this right by making PDF's of their magazines available earlier than print editions. Given that it takes time for an American magazine to reach the U.K. and Eire, this is an excellent idea. There was a time when I was a subscriber to this magazine, and I found it infuriating to see the latest issues on newsagent shelves while I was still waiting for mine to arrive in the post. It was enough to make me vow not to become a subscriber to anything that left me in this situation every month.

Some won't pass on any savings with their digital editions. Haymarket Publishing come to mind here for What Car, but they aren't alone. Cicerone, Cumbrian publishers of excellent guidebooks for those seeking to enjoy the outdoors, do much the same with their wares, so going digital with either of these only makes sense if you really want to save on space and gain extra convenience. In this respect, the publishers of Amateur Photographer have got it right with a great deal for a year's digital subscription. New Scientist did the same in those early days when I dabbled in digital magazines.

Of course, there are some who dislike reading things on a screen, and digital publishing will need to lure those too if it is to succeed. That said, tablet computers and eBook readers such as Amazon's Kindle are now taking hold, and reading on these should feel more natural than on a vertical desktop monitor or even a laptop screen.

Nevertheless, there are some magazines that even I would like to enjoy in print rather than on a screen, and these are the ones that I like to retain for future consultation. Examples include Outdoor Photography and TGO, and it is the content that drives my thinking here. The photographic reproduction in the former probably is best reserved for print, while the case of the latter is more interesting. TGO does do its own digital edition, but, until a few months ago, its writing about enjoyment of the outdoors surpassed its presentation. It is the quality of the writing that makes me want to have these magazines on a shelf as opposed to being stored on a computer disk.

The above thought makes me wonder why I'd go for digital magazines instead of their print counterparts. Thinking about it now, I am not so sure that there is a clear-cut answer. Saving money and not having clutter do have a lot to do with it, but there is a sense that keeping copies of .Net is less essential to me, though I do enjoy seeing what is happening in the world of web design and am open to any new ideas too. Maybe the digital magazine scene is still an experiment for me.

Setting VIEWTABLE to show column names in SAS

15th September 2011

By default in the DMS, Base SAS opens datasets from its Explorer using VIEWTABLE, with variable labels rather than variable names in the column headings. Because I have been fortunate to use systems with SAS/FSP both installed and licensed, I have taken to using FSVIEW for browsing SAS datasets as a workaround and, though the interface may look old to some, it proves to be a very flexible tool that still has a few things to teach newer ones. With SAS Enterprise Guide, the dataset viewing functionality is different to both VIEWTABLE and FSVIEW, but I have been able to make it work for me. While the SAS EG dataset viewing tool may resemble the former of these, it has a few tricks to teach its forebear.

Now that I find myself working again with the traditional SAS DMS interface and without SAS/FSP, I decided to see if there was a way to get VIEWTABLE to display variable names instead of variable labels by default. As it happened, the answer was found in an internet forum discussion. From the SAS command line, you can achieve the result by issuing a command like the following:

VT SASHELP.VCOLUMN COLHEADING=NAMES

Here, VT is the abbreviation for VIEWTABLE, while the COLHEADING=NAMES option is what gets variable names shown in the column headings. Taking it further, you can make this the default for datasets opened with a mouse from Explorer panes using the following procedure:

- Click in or on the Explorer pane to highlight the Explorer window.

- Select Tools > Options > Explorer in the menus.

- Select the Members tab.

- Double-click on the TABLE icon.

- Double-click on the &Open action.

- Set the Action command to:

VIEWTABLE %8b.'%s'.DATA COLHEADING=NAMES

- Click on the Set Default button.

- Save changes and close the Explorer Options window.

Because the DMS looks similar across versions 8.0 through to 9.2, the above instructions should be relevant to all of those. While I have yet to get the opportunity to use SAS 9.3, I would be surprised to find that the traditional SAS interface has changed there too, even though much else has changed about SAS. In fact, the latest version of SAS has brought quite a few interesting new features for programmers, so it appears that you can do more through a familiar interface, not entirely a bad thing. It looks as if this VIEWTABLE tweak could be useful for a while yet.

TypeError: unable to create a wrapper for GLib.Variant

31st August 2011

A little while ago, I wrote a piece on here telling of how I got GNOME 3 installed and working on Linux Mint. However, I have discovered since that there was an Achilles heel in the approach that I had taken: using the ricotz/testing PPA so that I could gain additional extensions for use with GNOME Shell. If this were just a repository of GNOME Shell extensions, that would be well and good, but its maintainer(s) also put a more cutting-edge version of GNOME Shell in there. Occasionally, updates from ricotz/testing have introduced rough edges to my desktop environment that resolved themselves within a few hours or days. However, updates came through in the last few days that broke GNOME Tweak Tool. When I tried running it from the command line, all I got was a load of output that included the message that heads this posting, and no window popped up that I could use. That made me see sense, and I stopped living dangerously with that testing repository. Apparently, there is a staging variant as well, but a forum posting elsewhere on the web has warned me off that too.

Until I encountered that posting, I had not heard of the ppa-purge tool, and it came in handy for ridding my system of all packages from the ricotz repository and replacing them with alternatives from more stable ones such as that from the gnome3-team. Since it wasn't installed on my computer, I added it in the usual fashion by issuing the following command:

sudo apt-get install ppa-purge

Once that was complete, I executed the following command with the ricotz/testing repository still active:

sudo ppa-purge testing ricotz

Once that had finished, with everything very nicely automated, GNOME Tweak Tool was working again as intended, and that's the way that I intend to keep things. Another thing that ppa-purge did was to excise any mention of the ricotz/testing repository from my system, so nothing more can come from there.
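For anyone wanting to double-check that a purge like this really has removed a PPA, here is a minimal Python sketch that scans APT's source list files for lines still pointing at it. It assumes that PPA entries take the usual form http://ppa.launchpad.net/<owner>/<name>/ubuntu and sit in *.list files under /etc/apt/sources.list.d, which is where add-apt-repository puts them; the function name is my own invention rather than part of ppa-purge or APT.

```python
from pathlib import Path


def remaining_ppa_entries(sources_dir, owner, name):
    """Return (file name, line) pairs that still reference ppa:owner/name.

    Assumes Launchpad PPA lines look like
    deb http://ppa.launchpad.net/<owner>/<name>/ubuntu <release> main
    Commented-out lines are skipped because APT ignores them.
    """
    needle = f"ppa.launchpad.net/{owner}/{name}/"
    hits = []
    for path in sorted(Path(sources_dir).glob("*.list")):
        for line in path.read_text().splitlines():
            entry = line.strip()
            if entry.startswith("#"):
                continue
            if needle in entry:
                hits.append((path.name, entry))
    return hits


if __name__ == "__main__":
    # add-apt-repository writes PPA entries into this directory
    for file_name, entry in remaining_ppa_entries(
        "/etc/apt/sources.list.d", "ricotz", "testing"
    ):
        print(f"{file_name}: {entry}")
```

An empty result means that no active source line refers to that PPA any more.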

While I was in the business of stabilising GNOME Shell on my system, I decided to add in UGR too. First, another repository needed to be added as follows:

sudo add-apt-repository ppa:ubuntugnometeam/ppa-gen

sudo apt-get update

With that in place, the next step was to install UGR, and these commands did the job:

sudo apt-get dist-upgrade

sudo apt-get install ugr-desktop-g3

sudo apt-get upgrade

While that had the less desirable effect of adding games that I didn't need and have since removed, it otherwise worked well, and I now have a new splash screen at startup and shutdown for my pains. Hopefully, it will also mean that any updates to GNOME Shell that come my way are a little more polished. All that's needed now is for someone to set up a dedicated PPA for GNOME Shell extensions so that I could regain dropdown menus in the top panel for things such as virtual desktops, places and other handy operations that perhaps should have been in GNOME Shell from the beginning. However, that's another discussion, so I'll content myself with what I now have and see if my wish ever gets granted.

A waiting game

20th August 2011

Having been away every weekend in July, I was looking forward to a quiet one at home to start August. However, there was a problem with one of my websites hosted by Fasthosts that was set to occupy me for the weekend and a few weekday evenings afterwards.

Since the issue appeared to be slow site response, I followed advice that second-line support had given me when this website displayed the same type of behaviour before: upgrade from Apache 1.3 to 2.2 using the control panel. Unfortunately for me, that didn't work smoothly at all, and there seemed to be serious file loss as a result. Raising a ticket with the support desk only got me the answer that I had to wait for completion, and I have now come to the conclusion that the migration process may have got stuck somewhere along the way. Maybe another ticket is in order.

Several factors contributed to the waiting period referenced in the post title. First, support response times for budget hosting packages are notably slow, which makes me question whether higher-profile websites receive better service. Second, restoring websites via FTP consumes significant time, as does rebuilding and repopulating databases. Third, DNS configuration changes also introduce delays.

In retrospect, these time demands might be reduced in various ways. Phone support could prove faster than email tickets, except during major incidents like the security changeover that left numerous Fasthosts users waiting for hours one night. However, phone support isn't a universal solution, as we've known since those cautionary tales began circulating in the mid-1990's.

Regular backups would streamline restoration. While my existing backups were adequate, they weren't comprehensive, which meant additional work later. As for DNS changes, restarting my PC more often would have let me see them take effect sooner, since a restart clears out locally cached DNS lookups; that is a lesson I will remember despite having no immediate plans to relocate websites. Ultimately, anything that shortens the wait for DNS changes to show through makes a substantial difference in minimising downtime.

While awaiting a response from Fasthosts, I began to ponder the idea of using an alternative provider. Perusal of the latest digital edition of .Net (I now subscribe to the non-paper edition to cut down on the clutter caused by having paper copies about the place) ensued before I decided to investigate the option of using Webfusion. Having decided to stick with shared hosting, I gave their Unlimited Linux option a go. For someone accustomed to monthly billing, it was unusual to see one-, two- and three-year payment schemes on offer. Because the first of these appears to be the default option, a little care and attention is needed if you want something else. Webfusion also uses a sliding scale: the longer your commitment period, the lower your monthly hosting cost, a pricing structure plainly designed to encourage longer-term subscriptions.

Once the account was set up, I added a database and set to the long process of uploading files from my local development site using FileZilla. Having got a MySQL backup from the Fasthosts site, I used the provided phpMyAdmin interface to upload the data in pieces so as not to exceed its 8 MB file size limit. It isn't possible to connect remotely to the MySQL server using the likes of MySQL Administrator, and SSH access isn't available either. There were some questions for the support people along the way; the first of these got a timely answer, though later ones took longer. Still, getting advice on the address of the test website was a big help while I was sorting out the DNS changeover.
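Splitting a dump by hand to stay under that 8 MB limit gets tedious, so here is a minimal Python sketch of how it could be automated. It assumes that every SQL statement in the dump finishes on a line ending with a semicolon (mysqldump normally writes each extended INSERT on a single line), so chunks are only ever cut between statements; the function name, the file names in the usage comment and the choice of counting bytes as UTF-8 are all my own assumptions.

```python
def split_sql_dump(lines, max_bytes=8 * 1024 * 1024):
    """Group SQL dump lines into chunks of at most max_bytes each.

    Assumes every statement ends on a line finishing with ';', so chunks
    are only cut between statements, never part-way through one.
    """
    chunks = []
    current, current_size = [], 0
    statement, statement_size = [], 0

    def add_statement():
        nonlocal current, current_size
        if statement_size > max_bytes:
            raise ValueError("a single statement is larger than max_bytes")
        # Start a new chunk if this statement would push the current one over
        if current and current_size + statement_size > max_bytes:
            chunks.append("".join(current))
            current, current_size = [], 0
        current.extend(statement)
        current_size += statement_size

    for line in lines:
        statement.append(line)
        statement_size += len(line.encode("utf-8"))
        if line.rstrip().endswith(";"):
            add_statement()
            statement, statement_size = [], 0

    if statement:  # trailing lines (e.g. comments) with no closing ';'
        add_statement()
    if current:
        chunks.append("".join(current))
    return chunks


# Hypothetical usage with a dump file called backup.sql:
# with open("backup.sql", encoding="utf-8") as dump:
#     for number, chunk in enumerate(split_sql_dump(dump), start=1):
#         with open(f"part{number:02d}.sql", "w", encoding="utf-8") as part:
#             part.write(chunk)
```

Each part could then be imported in turn through phpMyAdmin. Cutting only at statement boundaries matters because a half-finished INSERT at the end of one file would fail on import.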

Setting up the domain took considerable effort and a good deal of searching through Webfusion's FAQ's before I succeeded. Initially, I attempted to use name servers mentioned in an article, but this approach failed to achieve my goal. The effects might have been noticed sooner had I rebooted my PC earlier, though this didn't occur to me at the time. Ultimately, I reverted to using my domain provider's name servers and configured them with the necessary information. This brought my website back online, allowing me to address any remaining issues.

With the site essentially operating again, it was time to iron out the rough edges. The biggest of these was that mod_rewrite doesn't seem to work on the Webfusion server as it does on the Fasthosts ones. This meant that I needed to use the SCRIPT_URI CGI variable instead of PATH_INFO to keep using clean URL's for a PHP-powered photo gallery that I have. It took me a while to figure that out, and I felt much better when I managed to get the results that I needed. I also took the chance to tidy up site addresses with redirections in my .htaccess file in an attempt to ensure that I lost no regular readers, something that I seem to have achieved with some success because one such visitor later commented on a new entry in the outdoors blog.
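The fallback from PATH_INFO to SCRIPT_URI can be sketched as follows. My gallery is written in PHP, so this Python version only illustrates the idea, and both the function name and the /gallery base path are hypothetical. SCRIPT_URI holds the full URL of the request (Apache's mod_rewrite normally makes it available when its rewrite engine is switched on), so the clean-URL segments have to be cut out of its path part.

```python
from urllib.parse import urlsplit


def clean_url_segments(environ, base_path="/gallery"):
    """Return the clean-URL segments for a request, e.g. ['lakes', 'buttermere'].

    Prefers PATH_INFO when the server supplies it; otherwise falls back to
    cutting the segments out of SCRIPT_URI after the hypothetical base_path.
    """
    path_info = environ.get("PATH_INFO")
    if path_info:
        return [segment for segment in path_info.split("/") if segment]

    path = urlsplit(environ.get("SCRIPT_URI", "")).path
    base = base_path.rstrip("/")
    # Make sure /gallery2/... is not mistaken for /gallery/...
    if path != base and not path.startswith(base + "/"):
        return []
    return [segment for segment in path[len(base):].split("/") if segment]
```

Parsing the URL rather than slicing the raw string means that any query string, such as ?page=2, is dropped before the segments are worked out.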

Once any remaining missing images were reinstated or references to them removed, it was time to do a full backup for the sake of safety. The first of these activities was yet another time consumer, while the second didn't take so long, which is just as well given how important it is to have frequent backups. Hopefully, the relocated site's performance will continue to be as solid as it is now.

The question of what to do with the Fasthosts webspace remains outstanding. Currently, they are offering free upgrades to existing hosting packages for an annual commitment. After my recent experience, I cannot say that I'm so sure about going down that route. In fact, the offer leaves me wondering whether putting that very upgrade in place was what broke my site. All in all, what happened to that Fasthosts website wasn't the greatest of experiences, while the service offered by Webfusion has been rock solid thus far. Although I wonder whether Fasthosts' service has declined from its previous standards, I remain open to reassessing my opinion as I observe their performance over time.

Setting up GNOME 3 on Arch Linux

22nd July 2011

It must have been my curiosity that drove me to explore Arch Linux a few weeks ago. Its inclusion on a Linux Format DVD and a few kind words about its being a cutting-edge distribution were enough to set me installing it into a VirtualBox virtual machine for a spot of investigation. Despite warnings to the contrary, I took the path of least resistance with the installation, though I did look among the packages to see if I could select a desktop environment to be added as well. Not finding anything like GNOME in there, I left everything at the defaults and ended up with a command line interface, as I had suspected I would. The next job was to use the pacman command to add the extras needed for a fully functioning desktop.

For this, the Arch Linux wiki is a copious source of information, even if it didn't stop me doing things out of sequence. Not reading it linearly was part of the cause, but you also have to know where to start. As a result, I have decided to draw everything together here so that it's all in one place and in a more sensible order, even if it wasn't the one that I followed.

The first thing to do is add X.org using the following command:

pacman -Syu xorg-server

The -Syu switch tells pacman to refresh the package list, upgrade any packages that require it and add the listed package if it isn't in place already; that's X.org in this case. For my testing, I added xorg-xinit too, which puts the startx command in place. This is the command for adding it:

pacman -S xorg-xinit

With those in place, I would add the VirtualBox Guest Additions next. GNOME Shell requires 3D acceleration, so this needs to be enabled in the virtual machine's display settings, either while the machine is powered off or when setting it up in the first place. This command will add the required VirtualBox extensions:

pacman -Syu virtualbox-guest-additions

Once that's done, you need to edit /etc/rc.conf by adding vboxguest vboxsf vboxvideo within the brackets on the MODULES line and adding rc.vboxadd within the brackets on the DAEMONS line. On restarting, everything should be available to you, but the modprobe command is there for any troubleshooting.

With the above pre-work done, you can set to installing GNOME, and I added the basic desktop from the gnome package and the other GNOME applications from the gnome-extra one. GDM is the login screen manager, so that's needed too, and the GNOME Tweak Tool is a very handy thing to have for changing settings that you otherwise couldn't. Here are the commands that I used to add all of these:

pacman -Syu gnome

pacman -Syu gnome-extra

pacman -Syu gdm

pacman -Syu gnome-tweak-tool

With those in place, some configuration files were edited so that a GUI was on show instead of a black screen with a command prompt, as useful as that can be. The first of these was /etc/rc.conf where dbus was added within the brackets on the DAEMONS line and fuse was added between those on the MODULES one.
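Editing those bracketed MODULES and DAEMONS lines by hand is easy enough, but here is a minimal Python sketch of the same change, assuming the KEY=(item item ...) layout that /etc/rc.conf used at the time. The function name is hypothetical, it simply appends items that aren't already present, and it treats prefixed daemon entries such as @crond as distinct from their plain names. The sample text is a cut-down, made-up rc.conf rather than a real one.

```python
import re


def add_to_array(conf_text, key, *items):
    """Append items to a KEY=(...) array in rc.conf-style text.

    Items already present (exact match) are skipped, so running it twice
    changes nothing. Prefixed entries such as @crond count as distinct.
    """
    pattern = re.compile(rf"^({re.escape(key)}=\()([^)]*)(\))", re.MULTILINE)
    match = pattern.search(conf_text)
    if match is None:
        raise KeyError(f"no {key}=(...) line found")
    merged = match.group(2).split()
    for item in items:
        if item not in merged:
            merged.append(item)
    new_array = match.group(1) + " ".join(merged) + match.group(3)
    return conf_text[: match.start()] + new_array + conf_text[match.end():]


sample = "MODULES=()\nDAEMONS=(syslog-ng network crond)\n"
sample = add_to_array(sample, "MODULES", "vboxguest", "vboxsf", "vboxvideo", "fuse")
sample = add_to_array(sample, "DAEMONS", "dbus", "rc.vboxadd")
print(sample)
# MODULES=(vboxguest vboxsf vboxvideo fuse)
# DAEMONS=(syslog-ng network crond dbus rc.vboxadd)
```

Because daemons are started in the order listed, it's worth checking the result if one of them needs to start before another.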

Creating a file named .xinitrc in root's home directory containing the following line means that issuing a startx command starts a GNOME session:

exec ck-launch-session gnome-session

With all those in place, all that was needed to get a GNOME 3 login screen was a reboot. Arch is so pared back that I could log in as root, not the safest of things to be doing, so I added an account for more regular use. After that, it has been a matter of tweaking the GNOME desktop environment and adding missing applications. The bare-bones installation that I allowed to happen meant that there were a surprising number of them, but that isn't difficult to fix using pacman.

All of this emphasises that Arch Linux is for those who want to pick what they want from an operating system rather than having that decided for them by someone else, an approach that has something going for it, given some of the decisions that make their presence felt in computing environments from time to time. While there's no doubt that this isn't for everyone, the documentation is complete enough for the minimalism not to be a problem for experienced Linux users, and I certainly managed to make things work for me once I got them in the right order. Another thing in its favour is that Arch is a rolling distribution, so you don't have to go through the whole setup routine every six months, unlike with some others. So far, it does seem stable enough and has even set me wondering if I could pop it on a real computer sometime.

Getting GNOME Shell 3 running on Linux Mint 11 with extensions and Cantarell font

3rd June 2011

On the surface of it, this probably sounds a very strange thing to do: choose Linux Mint because they plan to stick with their current desktop interface for the foreseeable future, and then stick a brand new one on there. However, that's what last weekend's dalliance with Fedora 15 caused. Not only could I find my way around GNOME Shell, but I actually got to like it so much that I missed it on returning to my Linux Mint machine.

The result was that I started to look on the web to see if anyone else had had the same brainwave. In fact, it was Mint's being based on Ubuntu that allowed me to get GNOME 3 on there. The task could be summarised as involving three main stages: getting GNOME 3 installed, adding extensions and adding the Cantarell font that is used by default. After these steps, I had a GNOME 3 desktop running well on Linux Mint, and it looks set to stay that way unless something untoward emerges.

Installing GNOME 3

The first step is to add the PPA repository for GNOME 3 using the following command:

sudo add-apt-repository ppa:gnome3-team/gnome3

Then, it was a case of issuing my usual update/upgrade command:

sudo apt-get update && sudo apt-get upgrade && sudo apt-get dist-upgrade

When that had done its thing and downloaded and installed quite a few upgrades along the way, it was time to add GNOME Shell using this command:

sudo apt-get install gnome-shell

When that was done, I rebooted my system to be greeted by a login screen very reminiscent of what I had seen in Fedora. While compiling this piece, I noticed that GNOME Session might need to be added before GNOME Shell, but I do not recall doing so myself. Maybe dependency resolution kept any problems at bay, but there weren't any issues that I could remember beyond things not being configured as fully as I would have liked without further work. For the sake of safety, it might be a good idea to run the following before adding GNOME Shell to your PC:

sudo apt-get install gnome-session && sudo apt-get dist-upgrade

Configuration and Customisation

Once I had logged in, the desktop that I saw wasn't at all unlike the Fedora one and everything seemed stable too. However, there was still work to do before I was truly at home with it. One thing that was needed was the ever useful GNOME Tweak Tool. This came in very handy for changing the theme that was on display to the standard Adwaita one that caught my eye while I was using Fedora 15. Adding buttons to application title bars for minimising and maximising their windows was another job that the tool allowed me to do. The command to get this goodness added in the first place is this:

sudo apt-get install gnome-tweak-tool

Since the next thing that I wanted to do was add some extensions, I added a repository from which to do this using the command below. Downloading them via Git and compiling them just wasn't working for me, so I needed another approach.

sudo add-apt-repository ppa:ricotz/testing

With that in place, I issued the following commands to gain the Dock, the Alternative Status Menu and the Windows Navigator. The second of these would have added a shutdown option in the me-menu, but it seems to have been deactivated after a system update. Holding down the ALT key to change the Suspend entry to Power off... will have to do me for now. The dock matters most, and that, thankfully, is staying the course and works exactly as it does on Fedora.

sudo apt-get install gnome-shell-extensions-dock

sudo apt-get install gnome-shell-extensions-alternative-status-menu

sudo apt-get install gnome-shell-extensions-windows-navigator

Adding Cantarell

The default font used by GNOME 3 in various parts of its interface is Cantarell, and my system was falling back to a standard sans-serif font because Cantarell wasn't in place. The fallback didn't look too good, so I set to tracking the freely available Cantarell down on the web. When that search brought me to Font Squirrel, I downloaded the zip file containing the required TTF files. The next step was to install them and, towards that end, I added Fontmatrix using this command:

sudo apt-get install fontmatrix

That gave me a tool with a nice user interface, but I made a mistake when using it. I (wrongly) thought that it would copy the files from the folder that I pointed its import function at. Because I had extracted the TTF files to /tmp, copying them was what needed to happen, but Fontmatrix just registered them where they were. A reboot confirmed that they hadn't been copied or moved at all, and I had rendered the user interface next to unusable through my own folly: by default, Ubuntu and Linux Mint delete files from /tmp when the system restarts. The font selection capabilities of the GNOME Tweak Tool came in very handy for helping me to convert useless boxes into letters that I could read.

Another step was to change the font line near the top of the GNOME Shell stylesheet (I never thought that CSS usage would end up in places like this...) so that Cantarell wasn't being sought and sans-serif text replaced grey and white boxes. The stylesheet needs to be edited as superuser, so the following command is what's needed for this; I used sudo, though gksu may be the more appropriate choice for launching a graphical editor like gedit.

sudo gedit /usr/share/gnome-shell/theme/gnome-shell.css

Once I had extricated my system from that mess, I took a more conventional approach and followed the command sequence below, with extensive use of sudo to get done what I wanted. A new directory was created and the TTF files moved in there.

cd /usr/share/fonts/truetype

sudo mkdir ttf-cantarell

cd ttf-cantarell

sudo mv /tmp/*.ttf .

To refresh the font cache, I resorted to the command described in a tutorial in the Ubuntu Wiki:

sudo fc-cache -f -v

Once that was done, it was time to restore the reference to Cantarell in the GNOME Shell stylesheet and reinstate its usage in application windows using the GNOME Tweak Tool. Since then, I have suffered no mishap or system issue with GNOME 3. Everything seems to be working quietly, and I am pleased to see that replacing Unity with GNOME Shell will become an easier task in Ubuntu 11.10, the first alpha release of which is out at the time of my writing these words. Could it lure me back from my modified instance of Linux Mint yet? I cannot say for sure, but it certainly cannot be ruled out at this stage.
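Looking back on the Cantarell episode, the lesson was to copy font files somewhere permanent rather than have them registered where they sat in /tmp. Here is a minimal Python sketch of that, assuming the TTF files have already been extracted to some folder; the function name is hypothetical. Pointing target_dir at a folder under /usr/share/fonts needs root privileges, as with the commands above, while ~/.fonts, which fontconfig read at the time, would avoid sudo altogether. The cache refresh is simply skipped if fc-cache cannot be found.

```python
import shutil
import subprocess
from pathlib import Path


def install_fonts(source_dir, target_dir, refresh_cache=True):
    """Copy *.ttf files from source_dir into target_dir and return their names.

    Copying (rather than registering fonts where they sit) means they survive
    /tmp being cleared. The glob is case-sensitive, so *.TTF files are skipped.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for font in sorted(Path(source_dir).glob("*.ttf")):
        shutil.copy2(font, target / font.name)
        copied.append(font.name)
    # Rebuild fontconfig's cache for the new folder, if fc-cache is installed
    if refresh_cache and shutil.which("fc-cache"):
        subprocess.run(["fc-cache", "-f", str(target)], check=True)
    return copied


# Hypothetical usage: fonts extracted to /tmp/cantarell, installed per user
# install_fonts("/tmp/cantarell", Path.home() / ".fonts")
```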

Pondering storage options

1st June 2011

The combination of curiosity and a little spare time had me browsing online computing technology stores recently. A spot of CD and DVD burning, brought on by a flurry of Linux distribution testing, got me thinking about higher-capacity alternatives. Because I have built up a sizeable library of digital photos, ensuring that I have backups of them is something that needs doing. While a 2 GB Samsung external hard drive is brought to life every now and again for that purpose, the prospect of using Blu-ray discs has appealed to me. After all, capacities of 25 GB for single-layer discs and 50 GB for dual-layer ones sound not inappropriate for my purposes. However, they aren't a cheap option at the time of writing, with each disc costing in the region of £3-4 at one place where I was looking. The cost of BD writers themselves seems not so bad, though, with a few in the £60-100 bracket; any lower than this and you could end up with a combo drive that reads Blu-ray discs but only writes DVDs and CDs, so a modicum of concentration is needed. As attractive as the idea might be, the cost of BD media means that I'll wait a little while before taking the plunge. The price premium at the moment is a reminder of the way that things used to be when CD and DVD writers first came on the market. It is very telling when discs come packaged in jewel cases, something that you won't see too often with blank CDs or DVDs.
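To put the media cost in perspective, a little arithmetic helps: at £3-4 per 25 GB disc, single-layer Blu-ray works out at 12-16p per gigabyte. The helper functions below are hypothetical and only cover single-layer discs, since no dual-layer price was quoted; the 100 GB library in the usage note is an illustrative figure, not a measured one.

```shell
#!/bin/sh
# Hypothetical helpers for sizing a Blu-ray backup (integer arithmetic only).

# Whole discs needed for SIZE_GB at CAPACITY_GB per disc, rounded up.
discs_needed() {
    size_gb=$1
    capacity_gb=$2
    echo $(( (size_gb + capacity_gb - 1) / capacity_gb ))
}

# Media cost in pence for SIZE_GB on 25 GB single-layer discs at PENCE_PER_DISC.
bd_cost_pence() {
    size_gb=$1
    pence_per_disc=$2
    echo $(( $(discs_needed "$size_gb" 25) * pence_per_disc ))
}
```

For example, `discs_needed 100 25` prints 4, while `bd_cost_pence 100 300` and `bd_cost_pence 100 400` print 1200 and 1600: £12-16 of media for a 100 GB library, before allowing for any spare discs.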

Another piece of storage excitement that hasn't escaped me is the advent of solid-state drives (SSDs). Having no moving parts, unlike conventional hard drives, they bring a speed boost. Concerns about their lifetimes and the number of write cycles that each drive can endure would stall me when it comes to storing personal data on them, but using them for the likes of operating system files sounds attractive, especially since my partiality to Linux may mean that drives aren't hammered so much. As with any new technology, there is a price premium, even though a drive big enough to host an operating system can be acquired for less than £100. As with many of my hardware purchase brainwaves, there's no rush, but this is an option that I'll keep at the back of my mind.

Another appealing notion is getting a NAS so that files can be shared between a few computers. While I have seen prices starting at just above £70 for single-disk enclosures, these generally are a more expensive option than external drives, and that's before you consider the cost of any hard drives. Nevertheless, a unit holding more than one hard drive has its advantages, and some can operate as a print server for a compatible printer too. When you get to 4 or 5 drive trays, the cost has mounted, but that could be when they pay their way too. What reminded me of these was a bookazine on home networking that I recently found at a branch of WHSmith, and their attractions depend on the networking side of things being made to work without any drama. Once that's out of the way, their usefulness really does appeal.

Mulling over all these brainwaves is one thing, but it doesn't mean that the purse strings will become too loose in this age of economic constraint. In fact, pondering them may serve to staunch any impulse purchases. Sometimes, a spot of virtual shopping serves to control things rather than losing the run of oneself.

What I learned from manually upgrading to Linux Mint 11

31st May 2011

For a Linux distribution that focuses on user-friendliness, it does surprise me that Linux Mint offers no seamless upgrade path. In fact, its underlying philosophy is that upgrading an operating system is a risky business. However, I have been doing in-situ upgrades with both Ubuntu and Fedora for a few years without any real calamities. A hard drive mishap that cost me data in the days when I was mainly a Windows user puts this into sharp relief. These days, I am far more careful, but I thought nothing of sticking a Fedora DVD into a drive to move my Fedora machine from 14 to 15 recently. Apart from a few rough edges and the need to get used to GNOME 3 and make it a better fit for me, there was no problem to report. The same sort of outcome used to apply to the online Ubuntu upgrades that I was accustomed to doing.

The recommended approach for Linux Mint is to back up your package lists and your data before the upgrade. Doing the former is a boon because it automates adding the extras that a standard CD or DVD installation doesn't include. While I did back up some data, I didn't back up everything, because I know how to identify my drives and take my time over things. Apache settings and the contents of MySQL databases were my main concern because these are stored on the operating system partition rather than in my home area.
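The same ground can be covered from a terminal. The sketch below is an assumption-laden alternative rather than Mint's recommended method: the function name `backup_before_upgrade`, the output file names, the `APACHE_DIR` override and the use of `mysqldump -u root -p` are all illustrative, and the output directory should live outside the partition being reinstalled.

```shell
#!/bin/sh
# Hypothetical pre-upgrade backup of package selections, Apache settings and
# MySQL databases. Run as root so that the Apache directory can be read in full.
# Usage: backup_before_upgrade OUTPUT_DIR
backup_before_upgrade() {
    out=$1
    mkdir -p "$out" || return 1
    # One "package  install" line per package, restorable with dpkg --set-selections
    dpkg --get-selections > "$out/packages.txt" || return 1
    # Apache configuration, if present (APACHE_DIR overrides the usual location)
    apache_dir=${APACHE_DIR:-/etc/apache2}
    if [ -d "$apache_dir" ]; then
        tar -czf "$out/apache2-etc.tar.gz" \
            -C "$(dirname "$apache_dir")" "$(basename "$apache_dir")" || return 1
    fi
    # All MySQL databases as SQL; -p makes mysqldump prompt for the password
    if command -v mysqldump >/dev/null 2>&1; then
        mysqldump -u root -p --all-databases > "$out/all-databases.sql" || return 1
    fi
    return 0
}
```

On systems of this vintage, the saved selections are usually restored with `dpkg --set-selections < packages.txt` followed by `apt-get dselect-upgrade`, both run as root.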

When I was ready, I popped a DVD in the drive and carried out a fresh installation into the partition where my operating system files are kept. Because it was a Live DVD, I was able to check my drive and partition mappings by referring to Mint's Disk Utility. One thing that didn't go so well was the GRUB installation, and that was due to a choice that I made on one of the installation screens. Despite doing an installation of version 10 just over a month ago, I had overlooked an intricacy of the task and placed GRUB on the operating system partition rather than at the top level of the disk (its master boot record), where it belongs. Instead of trying to address this manually, I took the easier but more time-consuming step of repeating the installation, as I did the last time. If there were a graphical tool for fixing GRUB problems, I might have gone for that instead, and I am left wondering why there isn't one included at all. Maybe it's something that the people behind GRUB should consider creating, unless there is one out there already about which I know nothing.
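The manual fix passed over above usually amounts to a couple of commands from the live session: mount the installed system and point grub-install at the whole disk rather than at a partition. This sketch assumes GRUB 2 at version 1.99 or later (for the `--boot-directory` option) and traditional /dev/sdX device names; the function name `reinstall_grub` and the example devices are hypothetical, so check your own in Disk Utility first.

```shell
#!/bin/sh
# Hypothetical sketch: put GRUB 2 back in a disk's master boot record from a
# live session. Run as root. Usage: reinstall_grub ROOT_PARTITION DISK
reinstall_grub() {
    root_part=$1
    disk=$2
    mnt=${MNT:-/mnt}
    # Refuse partition names such as /dev/sda5: installing GRUB there is what
    # caused the boot problem described above. Only /dev/sdX names pass.
    case $disk in
        /dev/sd[a-z]) ;;
        *) echo "expected a whole disk such as /dev/sda, got $disk" >&2; return 1 ;;
    esac
    mount "$root_part" "$mnt" || return 1
    # Boot code goes to the disk's MBR; modules go under the mounted /boot
    grub-install --boot-directory="$mnt/boot" "$disk"
    status=$?
    umount "$mnt"
    return "$status"
}
```

For instance, `reinstall_grub /dev/sda5 /dev/sda` would mount an installed system on /dev/sda5 and write GRUB to the start of /dev/sda. The device check is deliberately strict, since a typo there repeats the original mistake.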

With the booting problem sorted, I tried logging in, only to find a problem with my desktop that made the system next to unusable. It was back to the DVD, and I moved many of the configuration files and folders (the ones with names beginning with a ".") out of my home directory in the belief that there might have been an incompatibility. That gained me a fully usable desktop environment, but I now think that the cause of my problem may have been different from what I initially suspected. Later, I discovered that files elsewhere in my home area weren't owned by my user ID, even though nothing about it had changed during the installation. As it happened, a few minutes with the chown command were enough to sort out the ownership issue.
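Finding and fixing files with the wrong owner can be done in a couple of steps. This is a minimal sketch with hypothetical function names; it assumes that everything under the home directory should belong to the one user and to that user's primary group as reported by `id -gn`, which may not hold if other accounts keep files there.

```shell
#!/bin/sh
# Hypothetical helpers for the ownership problem described above.

# List anything under DIR that is not owned by USER.
list_foreign_files() {
    dir=$1
    user=$2
    find "$dir" ! -user "$user"
}

# Give everything under DIR to USER and that user's primary group.
# Needs root unless USER is the account running the command.
reclaim_home() {
    dir=$1
    user=$2
    chown -R "$user:$(id -gn "$user")" "$dir"
}
```

Running `list_foreign_files /home/you you` first shows the extent of the damage; `reclaim_home /home/you you`, run as root, then fixes it. Here `you` stands for your own user name.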

The restoration of the extra software that I had added beyond what gets installed as standard took its share of time, but a previously prepared list made things so much easier. It didn't go entirely smoothly, because some packages couldn't be found the first time around, so another list was needed. Nevertheless, that is nothing compared to the effort of doing the same thing one installation command at a time. Once the usual distribution software updates were in place, all that was left was to update VirtualBox to the latest version, install a Citrix client and add a PHP plugin to NetBeans. Then, nearly everything was in place for me.
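When some packages on a saved list cannot be found, it helps to separate those that the new system's repositories can supply from those they cannot before installing anything. The helper below is hypothetical; it assumes the list came from `dpkg --get-selections` and uses `apt-cache show` as the availability check.

```shell
#!/bin/sh
# Hypothetical helper: sort a saved "dpkg --get-selections" list into packages
# the configured repositories can supply and ones they cannot.
# Available names go to stdout, missing ones to stderr.
split_available() {
    while read -r pkg state; do
        # Skip entries marked deinstall, purge or hold
        [ "$state" = "install" ] || continue
        if apt-cache show "$pkg" >/dev/null 2>&1; then
            echo "$pkg"
        else
            echo "$pkg" >&2
        fi
    done < "$1"
}
```

Something like `split_available packages.txt > available.txt 2> missing.txt`, followed by `sudo apt-get install $(cat available.txt)`, installs what can be installed in one go and leaves missing.txt as a to-do list for renamed or third-party packages.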

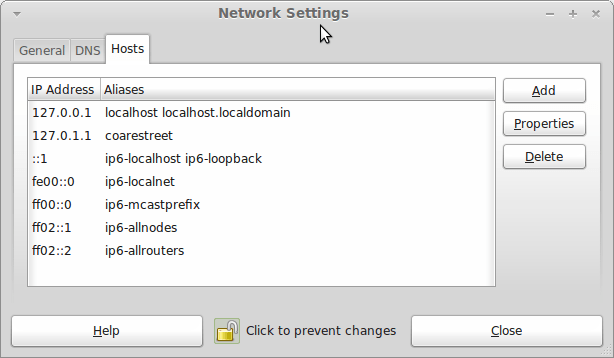

Next, Apache settings were restored, as were the databases that I used for offline web development. That was nearly all that was needed to get offline websites working, apart from adding an alias for localhost.localdomain. That required installing the Network Settings tool so that I could add the alias in its Hosts tab. With that out of the way, the system was settled in and ready for real work.
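The Hosts tab essentially edits /etc/hosts, so the same alias can also be added directly. This sketch (hypothetical function name, GNU sed assumed) appends the alias to the existing 127.0.0.1 line, adds such a line if none exists, and does nothing if the alias is already present.

```shell
#!/bin/sh
# Hypothetical helper: add a host alias for 127.0.0.1.
# Usage: add_host_alias NAME [HOSTS_FILE]   (defaults to /etc/hosts; root needed)
add_host_alias() {
    name=$1
    hosts=${2:-/etc/hosts}
    # Escape dots so that the name is matched literally
    pattern=$(printf '%s' "$name" | sed 's/\./\\./g')
    # Already listed as a whole word somewhere? Then there is nothing to do.
    if grep -Eq "[[:space:]]${pattern}([[:space:]]|\$)" "$hosts"; then
        return 0
    fi
    if grep -Eq '^127\.0\.0\.1[[:space:]]' "$hosts"; then
        # Append the alias to the end of the 127.0.0.1 line
        sed -i "s/^127\.0\.0\.1[[:space:]].*/& $name/" "$hosts"
    else
        printf '127.0.0.1\t%s\n' "$name" >> "$hosts"
    fi
}
```

Running `sudo sh -c '. ./hosts-alias.sh && add_host_alias localhost.localdomain'` (the file name is only an example) would turn a line such as `127.0.0.1 localhost` into `127.0.0.1 localhost localhost.localdomain`.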

Given the glitches I encountered, I can understand the Linux Mint team's caution regarding a more automated upgrade process. Even so, I still wonder if the more manual alternative that they have pursued brings its own problems, like those that I met. The fact that the whole process took a few hours, compared with the single hour taken by the in-situ upgrades that I mentioned earlier, is another consideration that makes you wonder if it is all worth it every six months or so. Then again, there is merit in letting users decide when to upgrade rather than luring them along to a new version, a point that is more than pertinent in light of the recent changes made to Ubuntu and Fedora. Whichever approach you choose, there are arguments for and against.

Improving Font Display in Fedora 15

30th May 2011

When I first started to poke around Fedora 15 after upgrading my Fedora machine, the font display was far from acceptable to me. Thankfully, it was something that I could resolve, and the letters forming these words are now being displayed in a way that satisfies me. The main thing that I did to achieve this was to add a file named 99-autohinter-only.conf in the folder /etc/fonts/conf.d, containing the following:

<?xml version="1.0"?>
<!DOCTYPE fontconfig SYSTEM "fonts.dtd">
<fontconfig>
  <match target="font">
    <edit name="autohint" mode="assign">
      <bool>true</bool>
    </edit>
  </match>
</fontconfig>
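If you would rather not create that file by hand, the hypothetical function below writes the same configuration into a chosen directory (by default /etc/fonts/conf.d, which needs root). Afterwards, `fc-match -v sans-serif | grep autohint` should show autohinting switched on, though that is only a quick sanity check rather than proof of better rendering.

```shell
#!/bin/sh
# Hypothetical helper: write the autohinter-only fontconfig file described above.
# Usage: write_autohint_conf [DIR]   (DIR defaults to /etc/fonts/conf.d)
write_autohint_conf() {
    dir=${1:-/etc/fonts/conf.d}
    # Quoted 'EOF' stops the shell from expanding anything in the XML
    cat > "$dir/99-autohinter-only.conf" <<'EOF'
<?xml version="1.0"?>
<!DOCTYPE fontconfig SYSTEM "fonts.dtd">
<fontconfig>
  <match target="font">
    <edit name="autohint" mode="assign">
      <bool>true</bool>
    </edit>
  </match>
</fontconfig>
EOF
}
```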

Enabling autohinting improved font appearance for me in Fedora 15. The TrueType bytecode interpreter (BCI) was recently enabled in FreeType after the relevant patents expired, but this actually decreased font quality on my system. I applied Kevin Kofler's autohinting fix and installed the GNOME Tweak Tool, which lets you adjust autohinting settings. This combination solved my problems, particularly with letters like "k". Now I am considering trying the same solution in openSUSE, which also has unsatisfactory font rendering, though the GNOME Tweak Tool part will have to wait until openSUSE releases a version with GNOME 3.