A quick look at the 7G Firewall

17th October 2021

There is a simple principle behind the 7G Firewall from Perishable Press: it is a set of mod_rewrite rules for the Apache web server that can be added to a .htaccess file, and there is a version for the Nginx web server as well. These rules check query strings, request Uniform Resource Identifiers (URIs), user agents, remote hosts, HTTP referrers and request methods for any anomalies and block those that appear dubious.

Unfortunately, I found that the rules heavily slowed down a website with which I tried them, so I am going to have to wait until that is moved to a faster system before I really can give them a go. This can be a problem with security tools, as I also found with adding a modsec jail to a Fail2Ban instance. As it happens, both sets of observations were made using the GTMetrix tool, making it appear that there is a trade-off between security and speed that needs to be assessed before adding anything to block unwanted web visitors.

How QuillBot, Paraphraser, Dupli Checker and Pre Post SEO can help your writing

15th October 2021

Every week, I get an email newsletter from Woody's Office Watch. This was something to which I started subscribing in the 1990s, but I took a break from it for a good while for reasons that I cannot recall and returned to it only lately. This week's issue featured a list of online paraphrasing tools that are part of what is offered by QuillBot, Paraphraser, Dupli Checker and Pre Post SEO. Each got its own review in the newsletter, so I will just outline other features in this posting.

In QuillBot's case, the toolkit includes a grammar checker, summary generator, and citation generator. In addition to the online offering, there are extensions for Microsoft Word, Google Chrome, and Google Docs. A paid subscription option is available in addition to the free version.

Despite the name, Paraphraser is about more than what the title purports to do. There is article rewriting, plagiarism checking, grammar checking and text summarisation. Because there is no premium version, the offering is funded by advertising, and it will not work with an ad blocker enabled. The mention of plagiarism suggests a perhaps murkier side to writing that cuts both ways: one is to avoid copying other work, while another is the avoidance of groundless accusations of copying.

It was apparent that the main role of Dupli Checker is to avoid accusations of plagiarism by checking what you write, yet there is a grammar checker as well as a paraphrasing tool on there too. When I tried it, the English that it produced looked a little convoluted and there is a lack of fluency in what is written on its website as well. Together with a free offering that is supported by ads that were not blocked by my ad blocker, there are premium subscriptions too.

In web publishing, they say that content is king, so the appearance of an option using the acronym for Search Engine Optimisation in its name may not be as strange as it might seem at first glance. Pre Post SEO offers numerous tools with both free and paid tiers of service. While paraphrasing and plagiarism checking get top billing in the main menu on the home page, further inspection reveals that there is a lot more to check on this site.

In writing, inspiration is a fleeting and ephemeral quantity, so anything that helps with this has to be of interest. While any rewriting of initial content may appear less smooth than the starting point, any help with the creation process cannot go amiss. For that reason alone, I might be tempted to try these tools occasionally, and they might assist with proofreading as well because that can be a hit-and-miss affair for some.

Broadening data science horizons: Useful Python packages for working with data

14th October 2021

My response to changes in the technology stack used in clinical research is to develop some familiarity with programming and scripting platforms that complement and compete with SAS, a system with which I have been programming since 2000. While one of these has been R, Python is another that has taken up my attention, and I now have Julia in my sights as well. There may be others to assess in the fullness of time.

While I first started to explore the Data Science world in the autumn of 2017, it was in the autumn of 2019 that I began to complete LinkedIn training courses on the subject. Good though they were, I find that I need to actually use a tool to better understand it. At that time, I did get to hear about Python packages like Pandas, NumPy, SciPy, Scikit-learn, Matplotlib, Seaborn and Beautiful Soup, though it took until the spring of this year for me to start gaining some hands-on experience with using any of these.

During the summer of 2020, I attended a BCS webinar on the CodeGrades initiative, a programming mentoring scheme inspired by the way classical musicianship is assessed. In fact, one of the main progenitors is a trained classical musician and teacher of classical music who turned to Python programming when starting a family to have a more stable income. The approach is that a student selects a project and works their way through it, with mentoring and periodic assessments carried out in a gentle and discursive manner. Of course, the project has to be engaging for the learning experience to stay the course, and that point came through in the webinar.

That lesson resonates with me, with subjects as diverse as web server performance and the ongoing pandemic supplying data. There are other sources of public data to examine as well before looking through my own personal archive gathered over the decades. Though some subjects are uplifting while others are more foreboding, the key thing is that they sustain interest and offer opportunities for new learning. Without being able to dream up new things to try, my knowledge of R and Python would not be as extensive as it is, and I hope that it will help with learning Julia too.

In the main, my own learning has been a solo effort with consultation of documentation along with web searches that have brought me to the likes of Real Python, Stack Abuse, Data Viz with Python and R and others for longer tutorials as well as threads on Stack Overflow. Usually, the web searching begins when I need a steer on a particular task or a way to resolve a particular error or warning message, but books are always worth reading even if that is the slower route. While those from the Dummies series or from O'Reilly have proved most useful so far, I do need to read them more completely than I already have; it is all too tempting to go with the "try programming and search for solutions as you go" approach instead.

To get going, many choose the Anaconda distribution to get Jupyter notebook functionality, but I prefer a more traditional editor, so Spyder has been my tool of choice for Python programming, and there are others like PyCharm as well. Because Spyder itself is written in Python, it can be installed using pip from PyPI like other Python packages. It has other dependencies like Pylint for code management activities, but these get installed behind the scenes.

The packages that I first met in 2019 may be the mainstays for doing data science, but I have discovered others since then. It also seems that there is porosity between the worlds of R and Python, so you get some Python packages aping R packages and R has the Reticulate package for executing Python code. There are Python counterparts to such Tidyverse staples as dplyr and ggplot2 in the form of Siuba and Plotnine, respectively. Though the syntax of these packages is not a direct copy of what is executed in R, it is close enough to feel familiar and adds user-friendliness compared to Pandas or Matplotlib. The interoperability does not stop there, for there is SQLAlchemy for connecting to MySQL and other databases (PyMySQL is needed as well) and there also is SASPy for interacting with SAS Viya.

While Python may not have the speed of Julia, there are plenty of packages for working with larger workloads. Of these, Dask, Modin and RAPIDS all have their uses for dealing with data volumes that make Pandas code crawl. As if to prove that there are plenty of libraries for various forms of data analytics, data science, artificial intelligence and machine learning, there also are the likes of Keras, TensorFlow and NetworkX. These are just a selection of what is available, and there is always the possibility of checking out others. It may be tempting to stick with the most popular packages all the time, especially when they do so much, but it never hurts to keep an open mind either.

Stopping Firefox from launching on the wrong virtual desktop on Linux Mint

12th October 2021

During the summer, I discovered that Firefox was steadfastly opening on the same virtual desktop on Linux Mint (the Cinnamon version) regardless of the one on which it was started. Because I am a creature of habit who routinely opens Firefox within the same virtual desktop, this was not something that I had noticed until the upheaval of a system rebuild. The supposed cause is setting the browser to reopen tabs from the preceding session. The location of this setting changes according to the version of Firefox, but it is found in Settings > General in the version in which I am writing these words (Firefox Developer Edition 94.0b4) and the text beside the tick box is "Open previous windows and tabs".

While disabling the aforementioned setting could work, there is another less intrusive solution. This needs the opening of a new tab and the entering of the address about:config in the address bar. If you see a warning message about the consequences of proceeding further, accept the risk in order to continue. In the resulting field marked Search preference name, enter the text widget.disable-workspace-management and toggle the setting from false to true to activate it. Then, Firefox should open on the desktop where you want it and not some other default location.

Behind the scenes of a website refresh and security overhaul

11th October 2021

Things have been changing on here. Much of that has been behind the scenes with a move to a new VPS for extra speed and all the upheaval that brings. It also gained me a better and more responsive system for less money than the old upgrade path was costing me. Extra work has gone into securing the website too, something that has taught me a lot as that has progressed. New lessons were added to older, and sometimes forgotten, ones.

The more obvious change for those who have been here before is that the visual appearance has been refreshed. A new theme has been applied with a multitude of tweaks to make it feel unique and to iron out any rough edges that there may be. This remains a WordPress-based website, and the new theme is a variant of the Appointee child theme of the Appointment theme. Since WordPress supports child theming but not grandchild theming, I had to make a copy of Appointee of my own so I could modify things as I see fit.

To my eyes, things do look cleaner, crisper and brighter, so I hope that it feels the same to you. Like so many designs these days, the basis is the Bootstrap framework and that is no bad thing in my mind, though the standardisation may be too much for some tastes. What has become challenging is that it is getting harder to find new spins on more traditional layouts, with everything going for a more magazine-like appearance and summaries being shown on the front page instead of complete articles. That probably reflects how things are going for websites these days, which could make the next refresh a more home-grown effort, even if that is a while away yet.

As the website heads towards its sixteenth year, there is bound to be continuing change. In some ways, I prefer that some things remain unchanged, so I use the classic editor instead of Gutenberg because that works best for me. Block-based editing is not for me, since I prefer to tinker with code anyway. Still, not all of its influences can be avoided, leaving me to figure out the new widgets interface. While it did not feel that intuitive, I suppose that I will grow accustomed to it.

My interest in technology continues, even if it saddens me at this time and some things do not impress me; the Windows 11 taskbar is one of those, so I will not be in any hurry to move away from Windows 10. Still, the pandemic has offered its own learning, with virtual conferencing allowing one to lurk and learn new things. For me, this has included R, Python, Julia and DevOps among other things. That proved worthwhile during a time with many restrictions. All that could yield more content yet, and some already is on the way.

As ever, it is my own direct working with technology that yields some real niche ideas that others have not covered. With so many technology blogs out there, they may be getting less and less easy to find, yet everyone has their own journey, so I hope to encounter more of them. There remain times when doing precedes telling, which is how it is on here. It is not all about appearances, since content matters as much as it ever did.

Something to watch with the SYSODSESCAPECHAR automatic SAS macro variable

10th October 2021

Recently, a client of mine updated one of their systems from SAS 9.4 M5 to SAS 9.4 M7. Despite performing due diligence regarding changes between the maintenance releases, a change in behaviour of the SYSODSESCAPECHAR automatic macro variable surprised them. The macro variable captures the assignment of the ODS escape character used to prefix RTF codes for page numbering and other things. That setting is made using an ODS ESCAPECHAR statement like the following:

ods escapechar="~";

In the M5 release, the tilde character in this example was output by the automatic macro variable, but that changed in the M7 release to 7E, the hexadecimal code for the same character. This tripped up one of their validated macro programs used in output production. The adopted solution was to use the escape sequence (*ESC*), which gave the same outcome that was there before the change. That was less verbose than the alternative code that follows, which converts the hexadecimal code into the expected ASCII character.

data _null_;

call symput("new",byte(input("&sysodsescapechar.",hex.)));

run;

The above converts the hexadecimal code to a number using the INPUT function and passes that to the BYTE function for rendering as the correct character, with the SYMPUT routine assigning the resulting value to a macro variable named new. Just using the escape sequence is far more succinct, though there is now an added validation need once user pilot testing has completed. In my line of business, the updating of code is the quickest part of many such changes; documentation and testing always take longer.
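For anyone more at home outside SAS, the same conversion can be sketched in Python. This is only an illustration of the arithmetic, assuming that the macro variable resolves to the two-character string 7E; the function name is made up for the example.

```python
def hex_to_char(hex_code: str) -> str:
    """Convert a hexadecimal character code such as '7E' to its character."""
    # int(..., 16) plays the part of the SAS INPUT function with the HEX. informat
    code_point = int(hex_code, 16)
    # chr() plays the part of the SAS BYTE function
    return chr(code_point)


print(hex_to_char("7E"))  # ~
```

Since 7E is 126 in decimal, the result is the tilde that the M5 release used to return directly.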

Limiting Google Drive upload & synchronisation speeds using Trickle

9th October 2021

Having had a mishap that lost me some photos in the early days of my dalliance with digital photography, I have been far more careful since then and that now applies to other files as well. Doing regular backups is a must that you find reiterated by many different authors, and the current computing climate makes doing that more vital than it ever was.

So, as well as having various local backups, I also have remote ones in the form of OneDrive, Dropbox and Google Drive. While these more correctly are file synchronisation services, disciplined use can make them useful as additional storage facilities in the interests of maintaining added resilience. There also are dedicated backup services that I have seen reviewed in the likes of PC Pro magazine, but I have yet to make use of those.

Insync

Part of my process for dealing with new digital photo files is to back them up to Google Drive, and I did that with a Windows client in the early days but then moved to Insync running on Linux Mint. One drawback to the approach is that this hogs the upload bandwidth of an internet connection that has yet to move to fibre from copper cabling. While having fibre connections to a local cabinet helps, a 100 KiB/s upload speed is easily overwhelmed and digital photo file sizes keep increasing. It does not help that I insist on using more flexible raw formats like DNG, CR2 or CR3 either.

While making fewer images could help to cut the load, I still come away from an excursion with many files because I get so besotted with my surroundings. This means that upload sessions take numerous hours and can extend across calendar days. Ultimately, this makes my internet connection far less usable; hence I want to throttle upload speed, much like what is possible in the Transmission BitTorrent client or in the Dropbox client. Since this is not available in Insync, I have tried using the trickle command instead, and an example is below:

trickle -d 2000 -u 50 insync

Here, the upload speed is limited to 50 KiB/s while the download speed is limited to 2000 KiB/s. In my case, the latter of these hardly matters, while the former leaves me with acceptable internet usability. Insync does not work smoothly with this, though, so occasional restarts are needed to keep file uploads progressing and CPU load also is higher. As rough as the user experience feels, uploads can continue in parallel with other work.
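To put rough numbers on that trade-off, here is a small sketch of the arithmetic. The batch of 200 raw files at 25 MiB each is purely hypothetical, as is the function name; only the 100 and 50 KiB/s rates come from the text above.

```python
def upload_hours(total_mib: float, rate_kib_per_s: float) -> float:
    """Estimate the hours needed to upload total_mib at a steady rate in KiB/s."""
    total_kib = total_mib * 1024
    return total_kib / rate_kib_per_s / 3600


# Hypothetical batch: 200 raw files of 25 MiB each
batch_mib = 200 * 25

for rate in (100, 50):
    print(f"{rate} KiB/s: {upload_hours(batch_mib, rate):.1f} hours")
```

For that made-up batch, halving the rate doubles the time from around 14 hours to around 28, which is the price paid for keeping the connection usable in the meantime.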

gdrive

One other option that I am exploring is the use of the command-line tool gdrive and this appears to work well with trickle. After downloading and installing the tool, getting going is a matter of issuing the following command and following the instructions:

gdrive about

On web servers, I even have the tool backing up things to Google Drive on a scheduled basis. Because of a Google Drive limitation that I have encountered not only with gdrive but also with Insync and Google's own Windows Google Drive client, synchronisation only happens with two new folders, one local and the other remote. Handily, gdrive supports the usual bash style commands for working with remote directories, so something like the following will create a directory on Google Drive:

gdrive mkdir ttdc [ID for parent folder]

Here, the ID for the parent folder may be omitted, though it can be obtained by going to Google Drive online and getting a link location by right-clicking on a folder and choosing the appropriate context menu item. This gets you something like the following and the required identifier is found between the last slash and the first question mark in the address string (so as not to share any real links, I made the address more general below):

https://drive.google.com/drive/folders/[remote folder ID]?usp=sharing
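If this needs doing more than once, picking out the identifier can be scripted. What follows is a minimal sketch that assumes the link has the shape shown above; the function name and the placeholder ID are inventions for the example and not part of gdrive.

```python
from urllib.parse import urlparse


def folder_id_from_link(link: str) -> str:
    """Return the last path segment of a Google Drive folder link."""
    # urlparse separates the path from the query string (the part after "?")
    path = urlparse(link).path
    # The identifier is whatever follows the final slash of the path
    return path.rstrip("/").rsplit("/", 1)[-1]


# Placeholder address, not a real link
example = "https://drive.google.com/drive/folders/EXAMPLE_FOLDER_ID?usp=sharing"
print(folder_id_from_link(example))  # EXAMPLE_FOLDER_ID
```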

Then, synchronisation uses a command like the following:

gdrive sync upload [local folder or file path] [remote folder ID]

There also is the option to do a one-way upload, and this is the form of the command used:

gdrive upload [local folder or file path] -p [remote folder ID]

Because every file or folder object has its own ID on Google Drive, it is possible to create two objects on there that appear to have the same name, though that is sure to cause confusion even if you know what is happening. Each of the above commands can be throttled using trickle as well:

trickle -d 2000 -u 50 gdrive sync upload [local folder or file path] [remote folder ID]

trickle -d 2000 -u 50 gdrive upload [local folder or file path] -p [remote folder ID]

Handily, this works without the added drama seen with Insync and lends itself to scripting as well, so it could be something that I will incorporate into my current workflow. One thing that needs to be watched is file upload failures, but there may be ways to catch those and retry them, which would be another thing that needs doing. This is built into Insync, and it would be a learning opportunity if I were to stick with gdrive instead.
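One possible way to catch and retry failures is a small wrapper script. The sketch below rests on an untested assumption: that gdrive exits with a non-zero status when an upload fails. The function name, the number of attempts and the delay are all arbitrary choices for illustration.

```python
import subprocess
import time


def run_with_retries(command, attempts=3, delay_seconds=30):
    """Run a command, retrying until it exits with status 0 or attempts run out."""
    for attempt in range(1, attempts + 1):
        result = subprocess.run(command)
        if result.returncode == 0:
            return True
        print(f"Attempt {attempt} of {attempts} failed with exit code {result.returncode}")
        if attempt < attempts:
            # Pause before trying again in case the failure was transient
            time.sleep(delay_seconds)
    return False


# Hypothetical usage; the file path and folder ID are placeholders:
# run_with_retries(["trickle", "-d", "2000", "-u", "50",
#                   "gdrive", "upload", "photo.dng", "-p", "REMOTE_FOLDER_ID"])
```

Passing the command as a list avoids shell quoting problems with file names that contain spaces, and the return value lets a calling script log or report anything that never succeeded.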

When CRON is stalled by incorrect file and folder permissions

8th October 2021

During the past week, I rebooted my system only to find that a number of things no longer worked, and my Pi-hole DNS server was among them. Having exhausted other possibilities by testing out things on another machine, I did a status check, spotted a line like the following in my system logs and went investigating further:

cron[322]: (root) INSECURE MODE (mode 0600 expected) (crontabs/root)

It turned out to be more significant than I had expected because this was why every CRON job was failing and that included the network setup needed by Pi-hole; a script is executed using the @reboot directive to accomplish this, and I got Pi-hole working again by manually executing it. The evening before, I did introduce some changes to file permissions under /var/www, but I was not expecting them to affect other parts of /var, though that may have something to do with some forgotten heavy-handedness. The cure was to issue a command like the following in a terminal session:

sudo chmod -R 600 /var/spool/cron/crontabs/

Then, CRON itself needed to start since it had not been running at all and executing this command did the needful without restarting the system:

sudo systemctl start cron

That outcome was proved by executing the following command to issue some terminal output that included the welcome text "active (running)" highlighted in green:

sudo systemctl status cron

There also was newly updated output from a frequently executing job that checked on web servers for me, which added further confirmation. It was a simple solution to a perplexing situation that led up all sorts of blind alleys before I alighted on the right solution to the problem.
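A check like the one that CRON makes can be run ahead of a reboot to avoid a repeat. The following is a minimal sketch that assumes crontab files must have exactly mode 0600, as the log message says; the function name is made up, and reading the real directory usually needs root privileges.

```python
import os
import stat


def insecure_crontabs(directory):
    """List regular files in directory whose permission bits are not exactly 0600."""
    flagged = []
    for entry in os.scandir(directory):
        if entry.is_file(follow_symlinks=False):
            # S_IMODE strips the file type and leaves only the permission bits
            mode = stat.S_IMODE(entry.stat(follow_symlinks=False).st_mode)
            if mode != 0o600:
                flagged.append((entry.path, oct(mode)))
    return flagged


# Hypothetical usage with the directory from the chmod command above:
# for path, mode in insecure_crontabs("/var/spool/cron/crontabs"):
#     print(path, mode)
```

Only the files are inspected here because the log message concerns the crontab file itself and not the directory that holds it.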

Some books and other forms of documentation on R

11th September 2021

The thrust of an exhortation from a computing handbook publisher comes to mind here: don't just look things up on Google, read a book so you really understand what you are doing. A form of words like that was used to sell an eBook on GitHub, but the same sentiment applies to R or any other computing language. While using a search engine will get you going or add to existing knowledge, only a book or a training course will help to embed real competence.

In the case of R, there is a myriad of blogs out there that can be consulted, as well as function and package documentation on RDocumentation or rdrr.io. For the former, R-bloggers or R Weekly can make good places to start, while ones like Stats and R, Statistics Globe, STHDA, PSI's VIS-SIG and anything from Posit (including their main blog as well as their AI one) can be worth consulting. Additionally, there is also RStudio Education and the NHS-R Community, which also have a GitHub repository together with a YouTube channel. Many packages have dedicated websites as well, so there is no lack of documentation with all of these.

To come to the real subject of this post, R is unusual in that books that you can buy also have companion websites that contain the same content with the same structure. Whatever funds this approach (and some appear to be supported by RStudio itself by the looks of things), there certainly are many books available freely online in HTML as you will see from the list below, while a few do not have a print counterpart as far as I know:

R Programming for Data Science

R Markdown: The Definitive Guide

bookdown: Authoring Books and Technical Documents with R Markdown

blogdown: Creating Websites with R Markdown

pagedown: Create Paged HTML Documents for Printing from R Markdown

Dynamic Documents with R and knitr

Engineering Production-Grade Shiny Apps

Outstanding User Interfaces with Shiny

Happy Git and GitHub for the useR


Many of the above have counterparts published by O'Reilly or Chapman & Hall, to name the two publishers that I have found so far. Aside from sharing these with you, there is also the personal motivation of having the collection of links somewhere so I can close tabs in my Firefox session. There are other web articles open in other tabs that I need to retain and share, but these will need to do for now, and I hope that you find them as useful as I do.

A little bit of abstraction: The quiet utility of generated imagery

21st August 2021

Data science has remained in my awareness since 2017 though my work is more on its fringes in clinical research. In fact, I have been involved more in the standardisation and automation of more traditional data reporting than in the needs of data modelling such as data engineering or other similar disciplines. Much of this effort has meant the use of SAS, with which I have programmed since 2000 and for which I have a licence (an expensive commodity, it has to be said), but other technologies are being explored with R, Python and Julia being among them.

Though the change in technological scope does bring an element of excitement and new interest, there is also some sadness when tried and trusted technologies meet with newer competition and valued skills are no longer as career securing as they once were. Still, there is plenty of online training out there, and I already have collected some of my thoughts on this. The learning continues and the need for repositioning is also clear.

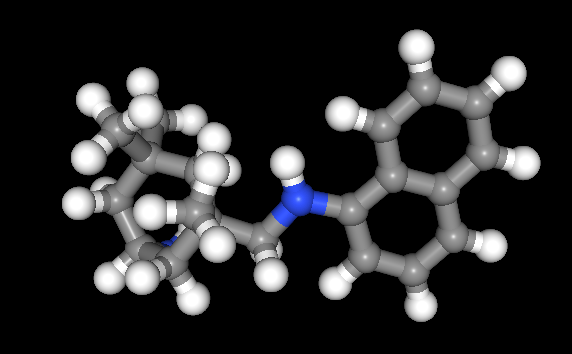

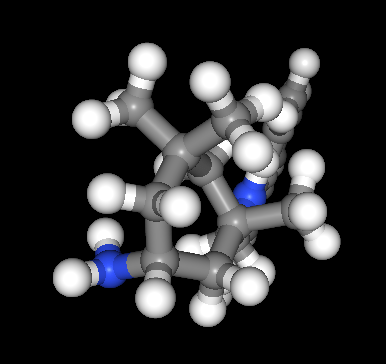

The journey also brought some curios to my notice. One of these is This Person Does Not Exist, a website building photos of non-existent faces using machine learning. Recently, I learned of others like it such as This Artwork Does Not Exist, This Cat Does Not Exist, This Horse Does Not Exist, and This Chemical Does Not Exist. The last of these probably should be entitled "This Molecule Does Not Exist (Yet)" since it is a fictitious molecular structure that has been created and what you get is an actual moving image that spins it around in three-dimensional space. The one with dynamically generated abstract art is the main inspiration for this piece and is of more interest to me, while the other two are more explanatory, though the horse website is not so successful in its execution and one can ask why we need more cat pictures.

To some, the idea of creating fake pictures may feel a little foreboding, and that especially applies to photos of people and the livelihoods of any content creators. Nevertheless, these sources of imagery have their legitimate uses, such as decorating websites or brochures, which is where my interest is piqued. After all, there are some subjects where pictures can be scarce, so any form of decoration that enlivens an article has to have some use. While technology websites like this one can feature images too, with screenshots and device photos being commonplace, they can all look like each other, hence the need for a little more variety. Having pictures often increases the choice of website themes as well, since so many need images to make them work or stand out. As ever, being sparing with any innovations remains in order, which is how I approach this matter as well.