TOPIC: WESTERN DIGITAL

When a hard drive is unrecognised by the Linux hddtemp command

15th August 2021

You should not do a new PC build in the middle of a heatwave if you do not want to be concerned about how fast fans are spinning and how hot things are getting. Yet, that is what I did last month after delaying the act for numerous months.

My efforts mean that I have a system built around an AMD Ryzen 9 5950X CPU and a Gigabyte X570 Aorus Pro with 64 GB of memory, and things are settling down after the initial upheaval. That also meant some adjustments to the CPU fan profile in the BIOS for quieter running, while the use of a Be Quiet! Dark Rock 4 cooler also helps, as does a Be Quiet! Silent Wings 3 case fan. All are components from trusted brands, though I wonder how much abuse they got during their installation and subsequent running in.

Fan noise is a non-quantitative indicator of heat levels as much as touch, so more quantitative means are in order. Aside from using a thermocouple device, there are in-built sensors too. My using Linux Mint means that I have the sensors command from the lm-sensors package for checking on CPU and other temperatures, though hddtemp is what you need for checking on the same for hard drives. The latter can be used as follows:

sudo hddtemp /dev/sda /dev/sdb

This has to happen using administrator access and a list of drives needs to be provided because it cannot find them by itself. In my case, I have no mechanical hard drives installed in non-NAS systems and I even got to replace a 6 TB Western Digital Green disk with an 8 TB SSD, but I got the following when I tried checking on things with hddtemp:

WARNING: Drive /dev/sda doesn't seem to have a temperature sensor.

WARNING: This doesn't mean it hasn't got one.

WARNING: If you are sure it has one, please contact me (hddtemp@guzu.net).

WARNING: See --help, --debug and --drivebase options.

/dev/sda: Samsung SSD 870 QVO 8TB: no sensor

The cause of the message for me was that there is no entry for Samsung SSD 870 QVO 8TB in /etc/hddtemp.db so that needed to be added there. Before that could be rectified, I had to get some additional information using smartmontools and these had to be installed using the following command:

sudo apt-get install smartmontools

What I had to do was check the drive's SMART data output for extra information, and that was achieved using the following command:

sudo smartctl /dev/sda -a | grep -i Temp

What this does is to look for the temperature information from smartctl output using the grep command, with output from the first being passed to the second through a pipe. This yielded the following:

190 Airflow_Temperature_Cel 0x0032 072 050 000 Old_age Always - 28

The first number in the above (190) is the thermal sensor's attribute identifier, and that was needed in what got added to /etc/hddtemp.db. The following command added the necessary data to the aforementioned file:

echo \"Samsung SSD 870 QVO 8TB\" 190 C \"Samsung SSD 870 QVO 8TB\" | sudo tee -a /etc/hddtemp.db

Here, the output of the echo command was passed to the tee command for adding to the end of the file. In the echo command output, the first part is the name of the drive, the second is the heat sensor identifier, the third is the temperature scale (C for Celsius or F for Fahrenheit) and the last part is the label (it can be anything that you like, but I kept it the same as the name). On re-running the hddtemp command, I got output like the following, so all was as I needed it to be.

/dev/sda: Samsung SSD 870 QVO 8TB: 28°C
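For anyone wanting to script the steps above, here is a minimal sketch; it is not something that hddtemp or smartmontools provides. The function names temp_attr_id and hddtemp_db_line are made up for illustration, and the sample line is the smartctl output quoted earlier. On a real system, the input would come from sudo smartctl -A /dev/sda instead.

```shell
# Print the ID of the first SMART attribute whose name mentions a temperature.
# Reads smartctl attribute lines on standard input.
temp_attr_id() {
    awk 'tolower($2) ~ /temp/ { print $1; exit }'
}

# Compose an /etc/hddtemp.db entry: "model" id unit "label".
# The label defaults to the model name when no fourth argument is given.
hddtemp_db_line() {
    printf '"%s" %s %s "%s"\n' "$1" "$2" "$3" "${4:-$1}"
}

# Sample attribute line taken from the output shown above.
sample='190 Airflow_Temperature_Cel 0x0032 072 050 000 Old_age Always - 28'
id=$(printf '%s\n' "$sample" | temp_attr_id)

hddtemp_db_line "Samsung SSD 870 QVO 8TB" "$id" C
# prints: "Samsung SSD 870 QVO 8TB" 190 C "Samsung SSD 870 QVO 8TB"
```

Piping the final line through sudo tee -a /etc/hddtemp.db would then append it in the same way as the echo command did.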

Since then, temperatures may have cooled and the weather become more like what we usually get, yet I am still keeping an eye on things, especially when the system is put under load using Perl, R, Python or SAS. There may be further modifications such as changing the case or even adding water cooling, not least to have a cooler power supply unit, but nothing is being rushed as I monitor things to my satisfaction.

Setting up a WD My Book Live NAS on Ubuntu GNOME 13.10

1st December 2013

The official line from Western Digital is this: they do not support the use of their My Book Live NAS drives with Linux or UNIX. However, what that means is that they only develop tools for accessing their products for Windows and maybe OS X. It still doesn't mean that you cannot access the drive's configuration settings by pointing your web browser at http://mybooklive.local/. In fact, not having those extra tools is no drawback at all since the drive can be accessed through your file manager of choice under the Network section and the default name is MyBookLive too, so you easily can find the thing once it is connected to a router, or switch anyway.

Once you are in the server's web configuration area, you can do things like changing its name, updating its firmware, finding out what network address has been assigned to it, creating and deleting file shares, password protecting file shares and other things. These are the kinds of things that come in handy if you are going to have a more permanent connection to the NAS from a PC that runs Linux. The steps that I describe have worked on Ubuntu 12.04 and 13.10 with the GNOME desktop environment.

What I was surprised to discover was that you cannot just set up a symbolic link that points to a file share. Instead, it needs to be mounted and this can be done from the command line using mount or at start-up with /etc/fstab. For this to happen, you need the Common Internet File System utilities and these are added as follows if you need them (check in the Software Centre or in Synaptic):

sudo apt-get install cifs-utils

Once these are added, you can add a line like the following to /etc/fstab:

//[NAS IP address]/[file share name] /[file system mount point] cifs

credentials=[full file location]/.creds,

iocharset=utf8,

sec=ntlm,

gid=1000,

uid=1000,

file_mode=0775,

dir_mode=0775

0 0

Though I have broken it over several lines above, this is one unwrapped line in /etc/fstab with all the fields in square brackets populated for your system and with no brackets around these. Though there are other ways to specify the server, using its IP address is what has given me the most success; this is found under Settings > Network on the web console. Next up is the actual file share name on the NAS; I have used a custom term instead of the default of Public. The NAS file share needs to be mounted to an actual directory in your file system like /media/nas or whatever you like; however, you will need to create this beforehand. After that, you have to specify the file system, and it is cifs instead of more conventional alternatives like ext4 or swap. After this and before the final two space delimited zeroes in the line comes the chunk that deals with the security of the mount point.
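Since the entry has to end up as one unwrapped line, a small helper can assemble it from its parts. This is only a sketch: the function name cifs_fstab_line is made up, and the IP address, share name, mount point and credentials path in the example are placeholders, not values from my system. The options are the ones listed above.

```shell
# Assemble a single-line CIFS entry for /etc/fstab.
# $1 = NAS IP address, $2 = file share name,
# $3 = mount point,    $4 = full path to the credentials file
cifs_fstab_line() {
    opts="credentials=$4,iocharset=utf8,sec=ntlm,gid=1000,uid=1000,file_mode=0775,dir_mode=0775"
    printf '//%s/%s %s cifs %s 0 0\n' "$1" "$2" "$3" "$opts"
}

# Placeholder values for illustration only.
cifs_fstab_line 192.168.1.50 myshare /media/nas /home/user/.creds

# Once the line is in /etc/fstab, the mount point has to exist before mounting:
#   sudo mkdir -p /media/nas
#   sudo mount -a
```

Printing the line first, and only appending it to /etc/fstab after checking it by eye, seems the safer habit given how a malformed entry can interfere with start-up.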

What I have done in my case is to have a password-protected file share and the user ID and password have been placed in a file in my home area with only the owner having read and write permissions for it (600 in chmod-speak). Preceding the filename with a "." also affords extra invisibility. That file then is populated with the user ID and password like the following. Of course, the bracketed values have to be replaced with what you have in your case.

username=[NAS file share user ID]

password=[NAS file share password]
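Creating that file with the right permissions from the outset can be sketched as follows. The function name write_creds is hypothetical, as are the user ID and password in the example, and a temporary directory is used here so that nothing in a real home area gets overwritten.

```shell
# Write a CIFS credentials file readable and writable by its owner only.
# $1 = file to create, $2 = NAS user ID, $3 = NAS password
write_creds() {
    # The restrictive umask applies inside the subshell only, so the file
    # is never created with wider permissions.
    ( umask 077 && printf 'username=%s\npassword=%s\n' "$2" "$3" > "$1" )
    chmod 600 "$1"
}

# Example run in a throwaway directory with placeholder values.
tmpdir=$(mktemp -d)
write_creds "$tmpdir/.creds" "nasuser" "example-password"
ls -l "$tmpdir/.creds" | cut -c1-10
# prints: -rw-------
rm -r "$tmpdir"
```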

With the credentials file created, the remaining mount options have to be set. First, there is the character set (usually UTF-8, and I got error code 79 when I mistyped this) and the security mode that is to be applied to the credentials (ntlm in this case). To avoid having no write access to the mounted file share, the uid and gid for your user need specification, with 1000 being the values for the first non-root user created on a Linux system. After that, it does no harm to set the file and directory permissions because they can only be set at mount time; using chmod, chown and chgrp afterwards has no effect whatsoever. Here, I have set permissions to read, write and execute for the owner and the user group while only allowing read and execute access for everyone else (that's 775 in the world of chmod).
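If you are unsure whether 1000 applies to your account, the id command reports the actual numbers. The helper below is a sketch with a made-up name, cifs_owner_opts, that prints them in the form used in the mount options.

```shell
# Print the uid and gid mount options for a given user name.
# $1 = user name whose numeric IDs should own the mounted share
cifs_owner_opts() {
    printf 'uid=%s,gid=%s\n' "$(id -u "$1")" "$(id -g "$1")"
}

# Look up the values for whoever is running the script.
cifs_owner_opts "$(id -un)"
```

The output can be pasted into the options in place of the gid=1000 and uid=1000 entries when the numbers differ.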

All of what I have described here worked for me and had to be gleaned from disparate sources like Mount Windows Shares Permanently from the Ubuntu Wiki, another blog entry regarding the permissions settings for a CIFS mount point and an Ubuntu forum posting on mounting CIFS with UTF-8 support. Because of the scattering of information, I just felt that it needed to be all together in one place for others to use, and I hope that it fulfils someone else's needs similarly to mine.

Upgrading from Windows 7 to Windows 8 within a VMWare Virtual Machine

1st November 2012

Though my main home PC runs Linux Mint, I do like to have the facility to use Windows software occasionally, and virtualisation has allowed me to continue doing that. For a good while, it was a Windows 7 guest within a VirtualBox virtual machine and, before that, one running Windows XP fulfilled the same role. However, it did feel as if things were running slower in VirtualBox than once might have been the case, so I jumped ship to VMware Player. While it may be proprietary and closed source, it is free of charge and has been doing what was needed. A subsequent recent upgrade of a video driver on the host operating system allowed the enabling of a better graphical environment in the Windows 7 guest.

Instability

However, there were issues with stability and I lost the ability to flit from the VM window to the Linux desktop at will, with the system freezing on me and needing a reboot. Working in Windows 7 using full-screen mode avoided this, yet it did feel as if I was constrained to working on a Windows-only machine whenever I did so. The graphics performance was imperfect too, with screen refreshing being very blocky with some momentary scrambling whenever I opened the Start menu. Others would not have been as patient with that as I was, though there was the matter of an expensive Photoshop licence to be guarded too.

In hindsight, a bit of pruning could have helped. An example would have been driver housekeeping in the form of removing VirtualBox Guest Additions because they could have been conflicting with their VMware counterparts. For some reason, those thoughts entered my mind to make me consider another, more expensive option instead.

Considering NAS & Windows/Linux Networking

That would have taken the form of setting aside a PC for running Windows 7 and having a NAS for sharing files between it and my Linux system. In fact, I did get to exploring what a four bay QNAP TS-412 would offer me and realised that you cannot put normal desktop hard drives into devices like that. For a while, it looked as if it would be a matter of getting drives bundled with the device or acquiring enterprise grade disks to maintain the required continuity of operation. The final edition of PC Plus highlighted another one, though: the Western Digital Red Pro range. These are part way between desktop and enterprise classifications and have been developed in association with NAS makers too.

While looking at the NAS option certainly became an education, it has exited any sort of wish list that I have. After all, it is the cost of such a setup that gets me asking if I really need such a thing. While the purchase of a Netgear FS 605 Ethernet switch would have helped incorporate it, there has been no trouble sorting alternative uses for that device since it bumps up the number of networked devices that I can have, never a bad capability to have. As I was to find, there was a less expensive alternative that would become sufficient for my needs.

In-situ Windows 8 Upgrade

Microsoft has been making available evaluation copies of Windows 8 Enterprise that last for 90 days before expiring. One that is in my hands has been running faultlessly in a VMware virtual machine for the past few weeks. That made me wonder if upgrading from Windows 7 to Windows 8 would help with my main Windows VM problems. Being a curious risk-taking type, I decided to answer the question for myself using the £24.99 Windows Pro upgrade offer that Microsoft have been running for those not needing a disk up front; they need to pay £49.99 while you can get one afterwards for an extra £12.99 and £3.49 postage if you wish, a slightly cheaper option. Though there also was a time cost in that it occupied a lot of a weekend for me, it seems to have done what was needed, so it was worth the outlay.

Given the element of risk, Photoshop was deactivated to be on the safe side. That wasn't the only pre-upgrade action that was needed because the Windows 8 Pro 32-bit upgrade needs at least 16 GB of free disk space before it will proceed. Of course, there was the matter of downloading the installer from the Microsoft website too. This took care of system evaluation and paying for the software, as well as the actual upgrade itself.

The installation took a few hours, with virtual machine reboots along the way. Naturally, the licence key was needed too, as well as the selection of a few options, though there weren't many of these. Being able to carry over settings from the pre-existing Windows 7 instance certainly helped with this and with making the process smoother too. No software needed reinstatement, and it doesn't feel as if the system has forgotten very much at all, a successful outcome.

Post-upgrade Actions

Just because I had a working Windows 8 instance didn't mean that there wasn't more to be done. In fact, it was the post-upgrade sorting that took up more time than the actual installation. For one thing, my digital mapping software wouldn't work without .Net Framework 3.5 and turning on the operating system feature from the Control Panel fell over at the point where it was being downloaded from the Microsoft Update website. Even removing Avira Internet Security after updating it to the latest version had no effect, and that was a finding during the Windows 8 system evaluation process. The solution was to mount the Windows 8 Enterprise ISO installation image that I had and issue the following command from a command prompt running with administrative privileges:

dism.exe /online /enable-feature /featurename:NetFX3 /Source:d:\sources\sxs /LimitAccess

For the sake of assurance regarding compatibility, Avira has been replaced with Trend Micro Titanium Internet Security. The Avira licence won't go to waste, since I have another home in mind for it. Removing Avira without crashing Windows 8 proved impossible, though, and necessitated booting Windows 8 into Safe Mode. Because of much faster startup times, that cannot be achieved with a key press at the appropriate moment because the time window is too short now. One solution is to set the Safe Boot tick box in the Boot tab of MSCONFIG (or System Configuration, as it otherwise calls itself) before the machine is restarted. While there may be others, this was the one that I used. With Avira removed, clearing the same setting and rebooting restored normal service.

Dealing with a Dual Personality

One observer has stated that Windows 8 gives you two operating systems for the price of one: the one on the Start screen and the one on the desktop. Having got to wanting to work with one at a time, I decided to make some adjustments. Adding Classic Shell got me back a Start menu, and I omitted the Windows Explorer (or File Explorer as it is known in Windows 8) and Internet Explorer components. Though Classic Shell will present a desktop like what we have been getting from Windows 7 by sweeping the Start screen out of the way for you, I found that this wasn't quick enough for my liking, so I added Skip Metro Suite to speed up things. Though the tool does more than sweeping the Start screen out of the way, I have switched off these functions. Classic Shell has been configured too, so the Start screen can be accessed with a press of the Windows key. It has been updated too, so booting into the desktop should be faster now. As for me, I'll leave things as they are for now. Even the possibility of using Windows' own functionality to go directly to the traditional desktop will be left untested while things are left to settle. Tinkering can need a break.

Outcome

After all that effort, I now have a seemingly more stable Windows virtual machine running Windows 8. Flitting between it and other Linux desktop applications has not caused a system freeze so far, and that was the result that I wanted. There now is no need to consider having separate Windows and Linux PCs with a NAS for sharing files between them, so that option is well off my wish-list. There are better uses for my money.

Not everyone has had my experience, though, because I saw a report that one user failed to update a physical machine to Windows 8 and installed Ubuntu instead; they were a Linux user anyway, even if they used Fedora more than Ubuntu. It is possible to roll back from Windows 8 to the previous version of Windows because there is a windows.old directory left primarily for that purpose. However, that may not help you if you have a partially operating system that doesn't allow you to do just that. In time, I'll remove it using the Disk Clean-up utility by asking it to remove previous Windows installations or running File Explorer with administrator privileges. Somehow, the former approach sounds the safer.

What About Installing Afresh?

While there was a time when I went solely for upgrades when moving from one version of Windows to the next, the annoyance of the process got to me. If I had known that installing the upgrade twice onto a computer with a clean disk would suffice, it would have saved me a lot. Starting from Windows 95 (from the days when you got a full installation disk with a PC and not the rescue media that we get now) and moving through a sequence of successors not only was time-consuming, but it also revealed the limitations of the first in the series when it came to supporting more recent hardware. It was enough to have me buying the full retail editions of Windows XP and Windows 7 when they were released; the latter got downloaded directly from Microsoft. While these were retail versions that you could move from one computer to another, Windows 8 will not be like that. In fact, you will need to get its System Builder edition from a reseller and that can only be used on one machine. It is the merging of the former retail and OEM product offerings.

What I have been reading is that the market for full retail versions of Windows was not a big one anyway. However, it was how I used to work as you have read above, and it does give you a fresh system. Most probably get Windows with a new PC and don't go building them from scratch like I have done for more than a decade. Maybe the System Builder version would apply to me anyway, and it appears to be intended for virtual machine use as well as on physical ones. More care will be needed with those licences by the looks of things, and I wonder what needs not to be changed so as not to invalidate a licence. After all, making a mistake might cost between £75 and £120 depending on the edition.

Final Thoughts

So far, Windows 8 is treating me well, and I have managed to bend it to my will too, always a good thing to be able to say. In time, it might be that a System Builder copy could need buying yet, but I'll leave well alone for now. Though I needed new security software, the upgrade still saved me money over a hardware solution to my home computing needs and I have a backup disk on order from Microsoft too. That I have had to spend some time settling things was a means of learning new things for me but others may not be so patient and, with Windows 7 working well enough for most, you have to ask if it's only curious folk like me who are taking the plunge. Still, the dramatic change has re-energised the PC world in an era when smartphones and tablets have made so much of the running recently. That too is no bad thing because an unchanging technology is one that dies and there are times when significant changes are needed, as much as they upset some folk. For Microsoft, this looks like one of them, and it'll be interesting to see where things go from here for PC technology.

Troubleshooting SATA drive detection issues in Ubuntu 9.10

4th November 2009

One of the early signs that I noticed after upgrading my main PC to Ubuntu 9.10 was a warning regarding the health of one of my hard disks. While others have reported that this can be triggered by the least bit of roughness in a SMART profile, that's not how it was for me. The PATA disk that has hosted my Ubuntu installation since the move away from Windows had a few bad sectors but no adverse warning. It was a 320 GB Western Digital SATA drive that was raising alarm bells with its 200 bad sectors.

The conveyor of this news was Palimpsest (not sure how it got that name even when I read the Wikipedia entry) and that is part of the subject of this post. Some have been irritated by its disk health warnings, yet it's easy to make them go away by turning off Disk Notifications in the dialogue that going to System > Preferences > Startup Applications will bring up for you. To fire up Palimpsest itself, there's always the command line, but you'll find it at System > Administration > Disk Utility too.

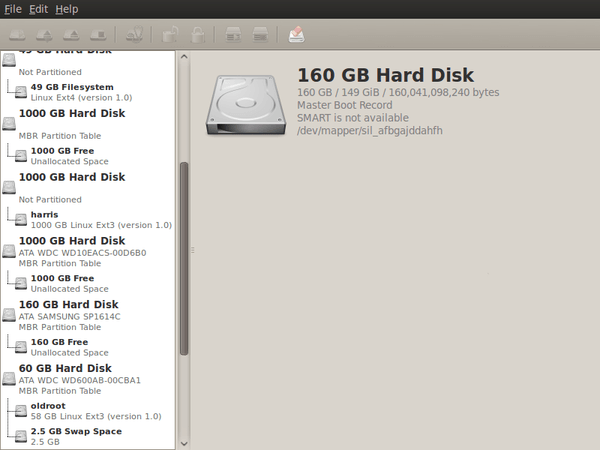

My complaint about it is that I see the same hard drive listed in there more than once, and it takes some finding to separate the real entries from the "bogus" ones. Whether this is because Ubuntu has seen my SATA drives with SIL RAID mappings (for the record, I have no array set up) or not is an open question, but it's one that needs continued investigation and I already have had a go with the dmraid command.

Even GParted shows both the original /dev/sd* type addressing and the /dev/mapper/sil_* equivalent, with the latter being the one with which you need to work (Ubuntu now lives on a partition on one of the SATA drives, which is how I noticed this). All in all, it looks less than tidy, so additional interrogation is in order, especially when I have no recollection of 9.04 doing anything of the sort.

From laptop limbo to a new desktop: A weekend restoration of computing order

12th July 2009

This weekend, I finally put my home computing displacement behind me. My laptop had become my main PC, with a combination of external hard drives and an Octigen external hard drive enclosure keeping me motoring in laptop limbo. Having had no joy in the realm of PC building, I decided to go down the partially built route and order a bare-bones system from Novatech. That gave me a Foxconn case and motherboard loaded up with an AMD 7850 dual-core CPU and 2 GB of RAM. With the motherboard offering onboard sound and video capability, all that was needed was to add drives. I added no floppy drive but instead installed a SATA DVD Writer (not sure that it was a successful purchase, though, but that can be resolved at my leisure) and the hard drives from the old behemoth that had been serving me until its demise. A session of work on the kitchen table and some toing and froing ensued as I inched my way towards a working system.

Once I had set all the expected hard disks into place, Ubuntu was capable of being summoned to life, with the only impediment being an insistence on scanning the 1 TB Western Digital and getting stuck along the way. Not having the patience, I skipped this at start up and later unmounted the drive to let fsck do its thing while I got on with other tasks; the hold-up had been the presence of VirtualBox disk images on the drive. Speaking of VirtualBox, I needed to scale back the capabilities of Compiz, so things would work as they should. Otherwise, it was a matter of updating various directories with files that had appeared on external drives without making it into their usual storage areas. Windows would never have been so tolerant and, as if to prove the point, I needed to repair an XP installation in one of my virtual machines.

In the instructions that came with the new box, Novatech stated that time was a vital ingredient for a build, and they weren't wrong. While the delivery arrived at 09:30, I later got a shock when I saw the time to be 15:15! However, it was time well spent when I noticed the speed increase on putting ImageMagick through its paces with a Perl script. In time, I might get brave and be tempted to add more memory to get up to 4 GB; the motherboard may only have two slots, but that's not such a problem with my planning on sticking with 32-bit Linux for a while to come. My brief brush with its 64-bit counterpart revealed some roughness that warded me off for a little while longer. For now, I'll leave well alone and allow things to settle down again. Lessons for the future remain, over which I may even mull in another post...

Adding a new hard drive to Ubuntu

19th January 2009

While this is a subject that I thought that I had discussed on this blog before, I can't seem to find any reference to it now. Instead, I discussed the subject of adding hard drives to Windows machines a while back, which might explain what I was thinking. Trusting the searchability of what you find on here, I'll go through the process.

The rate at which digital images were filling my hard disks brought all of this to pass. Because even extra housekeeping could not stop the collection growing, I went and ordered a 1TB Western Digital Caviar Green Power from Misco. City Link did the honours with the delivery, and I can credit their customer service for organising that without my needing to get to the depot to collect the thing; that was a refreshing experience that left me pleasantly surprised.

For most of the time, hard drives that I have had generally got on with the job. However, there was one experience from a time laden with computing mishaps that has left me wary. Assured by good reviews, I went and got myself an IBM DeskStar and its reliability didn't fill me with confidence. Though the business was acquired by Hitachi, that doesn't mean that I am touching their version of the same product line either. Travails with an Asus motherboard put me off that brand around the same time as well; I now blame it for going through a succession of AMD Athlon CPUs on me.

The result of that episode is that I have a tendency to go for brands that I can trust from personal experience. Western Digital falls into this category, as does Gigabyte for motherboards, which explains my latest hard drive purchasing decision. That's not to say that other hard drive makers wouldn't satisfy my needs, since I have had no problems with disks from Maxtor or Samsung. For now though, I am sticking with those makers that I know until they leave me down, something that I hope never happens.

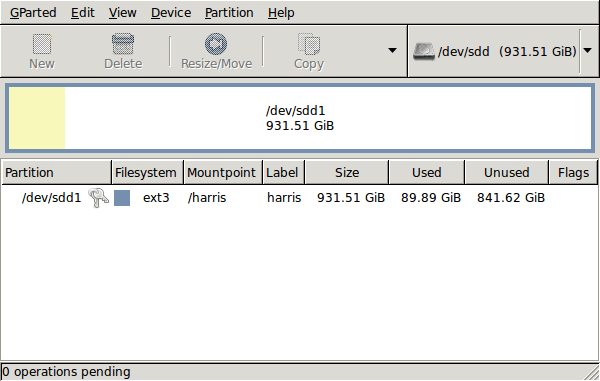

GParted running on Ubuntu

Anyway, let's get back to installing the hard drive. The physical side of the business was the usual shuffle within the PC to add the SATA drive before starting up Ubuntu. From there, it was a matter of firing up GParted (System > Administration > Partition Editor on the menus if you already have it installed). The next step was to find the new empty drive and create a partition table on it. At this point, I selected msdos from the menu before proceeding to set up a single ext3 partition on the drive. You need to select Edit > Apply All Operations from the menus to set things into motion before sitting back and waiting for GParted to do its thing.

After the GParted activities, the next task is to set up automatic mounting for the drive to make it available every time that Ubuntu starts up. The first thing to be done is to create the folder that will be the mount point for your new drive, /newdrive in this example. The next step involves editing /etc/fstab with superuser access to add a line like the following with the correct UUID for your situation:

UUID="32cf775f-9d3d-4c66-b943-bad96049da53" /newdrive ext3 defaults,noatime,errors=remount-ro

You can also add a comment like "# /dev/sdd1" above that so that you know what's what in the future. To get the actual UUID that you need to add to fstab, issue a command like one of those below, changing /dev/sdd1 to what is right for you:

sudo vol_id /dev/sdd1 | grep "UUID=" # Older Ubuntu versions

sudo blkid /dev/sdd1 | grep "UUID=" # Newer Ubuntu versions

This is the sort of thing that you get back, and the part beyond the "=" is what you need:

ID_FS_UUID=32cf775f-9d3d-4c66-b943-bad96049da53
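On newer systems, blkid output puts the value in quotes on a line with other fields, so picking it out can be sketched as below. The function name uuid_from_blkid is made up, and the sample line is my assumption of what blkid typically prints for this partition, not captured output. Running sudo blkid -s UUID -o value /dev/sdd1 should give the bare value directly as well.

```shell
# Extract the file system UUID from a line of blkid output on standard input.
# Requiring whitespace before UUID= avoids picking up a PARTUUID field instead.
uuid_from_blkid() {
    sed -n 's/.*[[:space:]]UUID="\([^"]*\)".*/\1/p'
}

# Assumed sample of blkid output for the new partition.
sample='/dev/sdd1: UUID="32cf775f-9d3d-4c66-b943-bad96049da53" TYPE="ext3"'
uuid=$(printf '%s\n' "$sample" | uuid_from_blkid)

# Build the fstab entry in the same form as the line shown earlier.
printf 'UUID="%s" /newdrive ext3 defaults,noatime,errors=remount-ro\n' "$uuid"
# prints: UUID="32cf775f-9d3d-4c66-b943-bad96049da53" /newdrive ext3 defaults,noatime,errors=remount-ro
```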

Once all of this has been done, a reboot gets done to mount the device. Once that is complete, you then need to set up folder permissions as required before you can use the drive. This part gets me firing up Nautilus, using gksu and adding myself to the user group in the Permissions tab of the Properties dialogue for the mount point (/newdrive, for example). After that, I issued something akin to the following command to set global permissions:

chmod 775 /newdrive

With that, I had completed what I needed to do to get the WD drive going under Ubuntu. After that IBM DeskStar experience, the new drive remains on probation but moving some non-essential things on there has allowed me to free some space elsewhere and carry out a reorganisation. Further consolidation will follow while I hope that the new 931.51 GiB (binary gigabytes of 1024 × 1024 × 1024 bytes rather than the decimal gigabytes of 1,000,000,000 bytes preferred by hard disk manufacturers) will keep me going for a good while before I need to add extra space again.
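The gap between the two kinds of gigabyte is simple arithmetic, and the sketch below works it through with awk. The function name bytes_to_gib is made up, and the second byte count is an assumption on my part: it is a raw capacity commonly reported for 1 TB drives and is not a figure that I noted at the time.

```shell
# Convert a size in bytes to binary gigabytes (GiB), to two decimal places.
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

# A nominal decimal terabyte: 1,000 decimal gigabytes.
bytes_to_gib 1000000000000
# prints: 931.32

# Assumed raw capacity of a typical 1 TB drive, which matches the 931.51 GiB above.
bytes_to_gib 1000204886016
# prints: 931.51
```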

The irritation of a 4 GB file size limitation

20th November 2007

Recently, I got myself a 500GB Western Digital My Book, an external hard drive in other words. Bizarrely, the thing is formatted using the FAT32 file system. While I appreciate that backward compatibility for Windows 9x might seem desirable, using NTFS would be more understandable, particularly given that the last of the 9x line, Windows ME, is now eight years old (there cannot be anybody who still uses that, can there?). The result is that I got core dump messages from cp commands issued from the terminal on my Ubuntu system to copy files of size exceeding 4GB last night. It surprised me at first, but it now seems to be a FAT32 limitation. The idea of formatting the drive as NTFS did occur to me, only for GParted not to do that, at least not with my current configuration. While the ext3 file system is an option, I have a spare PC with Windows 2000 so that will be a step too far for now, unless I take the plunge and bring that into the Linux universe too.
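Checking file sizes before copying would have spared me the surprise. What follows is a sketch, not something that I ran at the time: the name fits_fat32 is made up, and the limit used is the maximum FAT32 file size of 4 GiB less one byte.

```shell
# Largest file that FAT32 can hold: 4 GiB minus one byte.
FAT32_MAX_BYTES=4294967295

# Succeed if a file of the given size in bytes would fit on FAT32.
fits_fat32() {
    [ "$1" -le "$FAT32_MAX_BYTES" ]
}

fits_fat32 4294967295 && echo "fits"
# prints: fits
fits_fat32 4294967296 || echo "too large"
# prints: too large

# Listing offending files before a copy, with a placeholder path:
#   find /path/to/source -type f -size +"${FAT32_MAX_BYTES}"c
```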

Other than the 4GB irritation, the new drive works well and was picked up and supported by Ubuntu without any hassle beyond getting it out of the box, finding a place for it on my desk and plugging in a few cables. While needing judiciousness about file sizes, it played an important role while I converted a 320 GB internal WD drive from NTFS to ext3 and may yet be vital if my Windows 2000 box gets a migration to Linux. In the interim, 500 GB is a lot of space, and having an external drive that size is a bonus these days. That is especially the case when you consider that the 1 terabyte threshold is on the verge of getting crossed. It certainly makes DVDs, flash drives and other multi-gigabyte media less impressive than they otherwise might appear.