TOPIC: PACKAGE MANAGER

Installing Citrix Receiver 13.0 in Ubuntu GNOME 13.10 64-bit

28th November 2013

Installing the latest version of Citrix Receiver (13.0 at the time of writing) on 64-bit Ubuntu should be as simple as downloading the required DEB package and double-clicking on the file so that Ubuntu Software Centre can work its magic. Unfortunately, the 64-bit DEB file is faulty, which means that the Ubuntu community how-to guide for Citrix still is needed. In fact, any user of Linux Mint or another distro that uses Ubuntu as its base would do well to have a look at that Ubuntu link.

For the sake of completeness, I still am going to let you in on the process that worked for me. Once the DEB file has been downloaded, the first task is to create a temporary folder where the DEB file's contents can be extracted:

mkdir ica_temp

With that in place, it then is time to do the extraction. This needs two commands: the first extracts everything except the control file, while the second is needed to extract the control file itself.

sudo dpkg-deb -x icaclient- ica_temp

sudo dpkg-deb --control icaclient- ica_temp/DEBIAN

It is the control file that has been the cause of all the bother because it refers to unavailable dependencies that it really doesn't need anyway. To open the file for editing, issue the following command:

sudo gedit ica_temp/DEBIAN/control

Then change line 7 (it should begin with Depends:) to: Depends: libc6-i386 (>= 2.7-1), lib32z1, nspluginwrapper. While there are other software packages in there that Ubuntu no longer supports, they are not needed anyway. With the edit made, and the file saved, the next step is to build a new DEB package with the corrected control file:

dpkg -b ica_temp icaclient-modified.deb
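For anyone who would rather not open an editor, the change to the Depends line could be scripted with sed. What follows is only a sketch: it works on a throwaway stand-in for ica_temp/DEBIAN/control whose contents are invented for illustration, and it finds the line by its Depends: prefix rather than by its line number.

```shell
# Build a throwaway stand-in for ica_temp/DEBIAN/control (contents are invented).
workdir=$(mktemp -d)
control="$workdir/control"
printf '%s\n' \
  'Package: icaclient' \
  'Architecture: amd64' \
  'Depends: libc6-i386 (>= 2.7-1), some-unavailable-package' \
  'Description: stand-in control file' > "$control"

# The replacement line is the one given in the text above.
new_depends='Depends: libc6-i386 (>= 2.7-1), lib32z1, nspluginwrapper'

# Rewrite only the line that begins with "Depends:", then swap the new file in.
sed "s/^Depends:.*/$new_depends/" "$control" > "$control.new"
mv "$control.new" "$control"

# Show the result for checking.
grep '^Depends:' "$control"
```

On the real file, control would point at ica_temp/DEBIAN/control and the sed and mv steps would need sudo, since the extraction was done as root.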

Once you have the package, the next step is to install it using the following command:

sudo dpkg -i icaclient-modified.deb

If it fails, then you have missing dependencies and the following command should sort these out before you re-run the above command:

sudo apt-get install libmotif4:i386 nspluginwrapper lib32z1 libc6-i386

With Citrix Receiver installed, there is one more thing that is needed before you can use it freely. This is to put Thawte security certificate files into /opt/Citrix/ICAClient/keystore/cacerts. What I had not realised until recently was that many of these already are in /usr/share/ca-certificates/mozilla and linking to them with the following command makes them available to Citrix Receiver:

sudo ln -s /usr/share/ca-certificates/mozilla/* /opt/Citrix/ICAClient/keystore/cacerts/
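If some certificates already sit in the keystore, a loop that skips existing names may be gentler than a single wildcard link. The sketch below uses two temporary directories and invented certificate names as stand-ins for the real paths, so it is safe to try anywhere.

```shell
# Stand-in directories; the real ones are the two paths used in the command above.
src=$(mktemp -d)    # plays the part of /usr/share/ca-certificates/mozilla
dest=$(mktemp -d)   # plays the part of /opt/Citrix/ICAClient/keystore/cacerts
touch "$src/Example_Root_A.crt" "$src/Example_Root_B.crt"   # invented names
touch "$dest/Example_Root_A.crt"                            # already present

linked=0
for cert in "$src"/*.crt; do
  target="$dest/$(basename "$cert")"
  # Leave anything that is already in the keystore alone.
  if [ -e "$target" ] || [ -L "$target" ]; then
    continue
  fi
  ln -s "$cert" "$target"
  linked=$((linked + 1))
done
echo "linked $linked certificate(s)"
```

With the real directories substituted, the loop would need to run with root privileges, just like the ln command above.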

Another approach is to download the Thawte certificates and extract the archive to /tmp/. From there they can be copied to /opt/Citrix/ICAClient/keystore/cacerts and I copied the Thawte Personal Premium certificate as follows:

sudo cp "/tmp/Thawte Root Certificates/Thawte Personal Premium CA/Thawte Personal Premium CA.cer" /opt/Citrix/ICAClient/keystore/cacerts/

Until I found out about what was in the Mozilla folder, I simply picked out the certificate mentioned in the Citrix error message and copied it over like the above. Of course, all of this may seem like a lot of work to those who are non-tinkerers and I have added a repaired 64-bit DEB package that incorporates all of the above and should not need any further intervention aside from installing it using GDebi, Ubuntu's Software Centre, dpkg or anything else that does what's needed.

Building and installing Microsoft Core Fonts on Fedora 19 with RPM

6th July 2013

While I have a previous posting from 2009 that discusses adding Microsoft's Core Fonts to the then current version of Fedora, it did strike me that I hadn't laid out the series of commands that were used. Instead, I referred to an external and unofficial Fedora FAQ. That's still there, yet I also felt that I was leaving things a little to chance, given how websites can disappear quite suddenly.

Even after next to four years, it still amazes me that you cannot install Microsoft's Core Fonts in Fedora as you would on Ubuntu, Linux Mint or even Debian. Therefore, the following series of steps is as necessary now as it was then.

The first step is to add in a number of precursor applications such as wget for command line file downloading from websites, cabextract for extracting the contents of Windows CAB files, rpmbuild (from the rpm-build package) for creating RPM installers, ttmkfdir for generating the fonts.scale files used with TrueType fonts, and xfs, the X font server that chkfontpath needs:

sudo yum -y install rpm-build cabextract ttmkfdir wget xfs

Here, I have gone with terminal commands that use sudo, but you could become the superuser (root) for all of this and there are those who believe you should. The -y switch tells yum to go ahead without prompting you for permission before it does any installations. The next step is to download the spec file for the Microsoft fonts package with wget:

sudo wget http://corefonts.sourceforge.net/msttcorefonts-2.0-1.spec

Once that is done, you need to install the chkfontpath package because the RPM for the fonts cannot be built without it:

sudo rpm -ivh http://dl.atrpms.net/all/chkfontpath

Once that is in place, you are ready to create the RPM file using this command:

sudo rpmbuild -ba msttcorefonts-2.0-1.spec

After the RPM has been created, it is time to install it:

sudo yum install --nogpgcheck ~/rpmbuild/RPMS/noarch/msttcorefonts-2.0-1.noarch.rpm

When installation has completed, the process is done. Because I used sudo, all of this happened in my own home area, so there was a need for some housekeeping afterwards. If you did it by becoming the root user, then the files would be in root's home area instead, and that's the scenario in the online FAQ.

Synchronising package selections between Linux Mint and Linux Mint Debian Edition

18th April 2012

To generate the package list on the GNOME version of Linux Mint, I used the Backup Tool. It simply was a matter of using the Backup Software Selection button and telling it where to put the file that it generates. Alternatively, dpkg can be used from the command line like this:

sudo dpkg --get-selections > /backup/installed-software.txt

After transferring the file to the machine with Linux Mint Debian Edition, I tried using the Backup Tool on there too. However, using the Restore Software Selection button and loading the required file only produced an irrecoverable error. Therefore, I set to looking around the web and found a command line approach that did the job for me.

The first step is to load the software selection using dpkg by issuing this command (it didn't matter that the file wasn't made using the dpkg command, though I suspect that's what the Linux Mint Backup Tool was doing behind the scenes):

sudo dpkg --set-selections < /backup/installed-software.txt
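Since the selections file is plain text, with a package name and a selection state on each line, it can be inspected before it is loaded. The following sketch runs against an invented sample rather than a real /backup/installed-software.txt and simply lists the packages marked for installation.

```shell
# Invented sample in the format that dpkg --get-selections writes:
# package name, whitespace, then the selection state.
list=$(mktemp)
printf '%s\t\t%s\n' \
  'bash' 'install' \
  'cabextract' 'install' \
  'old-package' 'deinstall' > "$list"

# Keep only the names whose state is "install".
to_install=$(awk '$2 == "install" { print $1 }' "$list")
echo "$to_install"
```

Looking through such a list first gives a chance to spot anything that should not be carried over to the second machine.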

Then, I started dselect and chose the installation option from the menu that appeared. The first time around, it fell over but trying again was enough to complete the job. Packages available to the vanilla variant of Linux Mint but not found in the LMDE repositories were overlooked as I had hoped, and installation of the extra packages had no impact on system stability either.

sudo dselect

Apparently, there is an alternative to using dselect that is based on the much used apt-get command, but I didn't make use of it so cannot say more:

sudo apt-get dselect-upgrade

All that I can say is that the dpkg/dselect combination did what I wanted, so I'll keep them in mind if I ever need to synchronise software selections between two Debian-based distributions again. While the standard edition of Linux Mint may be based on Ubuntu rather than Debian, Ubuntu is itself based on Debian. Thus, the description holds here.

Adding Software to Arch Linux from the AUR

3rd December 2011

There are packages absent from the Arch Linux repositories that could come in useful. When you are after one of these, then it's time to search the Arch User Repository (AUR). In here, I have found the likes of Microsoft Core Fonts, Adobe Reader and Dropbox. While there may be others, these examples are what comes to mind as I write this. In time, it may be that packages make it from the AUR into the Arch community repository, but you have to use the former if you cannot wait.

Just search the AUR for what you want and download the compressed tarball (tar.gz file) from the webpage where you find it. Then, I recommend extracting it to /tmp where clearance at boot time means that you don't need to do it yourself. Then, go into the appropriate subfolder in /tmp (acroread for Adobe Reader, for instance) and issue the following command:

makepkg

This will attempt to create a package file where you are working for installation by pacman. If dependencies are absent, you will be told and these may need another AUR search in some cases, though most are included in the repositories. Once dependencies have been sorted, just issue the makepkg command again to create the xz file that pacman needs to perform the installation. To do so, issue the following command from the same directory either as root or by using sudo if your user account has such privileges:

pacman -U *.xz

There is usually just one xz archive in a package folder, so I have been taking the easy route of not looking up the name all the time. Of course, you can do that for safety if you like.
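For those who do want the extra safety, a small guard can confirm that the wildcard matches exactly one archive before pacman is called. This is a sketch only: the archive name is invented, and the pacman command is printed rather than executed.

```shell
# Work in a scratch directory with one invented archive name standing in for
# the file that makepkg produces.
builddir=$(mktemp -d)
cd "$builddir" || exit 1
touch example-1.0-1-any.pkg.tar.xz

# Expand the glob into the positional parameters and count the matches.
set -- *.xz
if [ "$#" -eq 1 ] && [ -e "$1" ]; then
  install_cmd="pacman -U $1"
else
  install_cmd=""
  echo "expected exactly one .xz archive, found something else" >&2
fi

# Printed rather than executed, so the sketch is safe to run anywhere.
echo "$install_cmd"
```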

With pacman not looking at the AUR, you have to do more work to get upgrades to happen, should you want to avoid having to repeat the above process all the time. There is a package in the AUR called yaourt that needs package-query from the same place as well. Before any of these, yajl needs to be installed from one of the default repositories. Once yaourt is in place, then the following does the updates for you:

yaourt -Syu --aur

Again, it might be best to run this as root or using sudo though that gives messages from makepkg about not running it as a privileged user. However, I reckon that those might need to be ignored. When I tried it, the Citrix update failed, though the Dropbox one succeeded. This experience might be worth bearing in mind. Saying that, I have found installing and updating software from the AUR not to be too onerous a process so far. Anything that gives a little more freedom only can be a good thing.

Sorting out MySQL on Arch Linux

5th November 2011

Seeing Arch Linux running so solidly in a VirtualBox virtual machine has me contemplating whether I should have it installed on a real PC. Saying that, recent announcements regarding the implementation of GNOME 3 in Linux Mint have caught my interest, even if the idea of using a rolling distribution as my main home operating system still has a lot of appeal for me. Having an upheaval come my way every six months when a new version of Linux Mint is released is the main cause of that.

While remaining undecided, I continue to evaluate the idea of Arch Linux acting as my main OS for day-to-day home computing. Towards that end, I have set up a working web server instance on there using the usual combination of Apache, Perl, PHP and MySQL. Of these, it was MySQL that went the least smoothly of all because the daemon wouldn't start for me.

It was then that I started to turn to Google for inspiration, and a range of actions resulted that combined to give the result that I wanted. One problem was a lack of disk space caused by months of software upgrades. Since package managers in other Linux distros allow you to clear some disk space of obsolete installation files, I decided to see if it was possible to do the same with pacman, the Arch Linux command line package manager. The following command, executed as root, cleared about 2 GB of cruft for me:

pacman -Sc

The S in the switch tells pacman to perform package database synchronization, while the c instructs it to clear its cache of obsolete packages. In fact, using the following command as root every time an update is performed both updates software and removes redundant or outmoded packages:

pacman -Syuc

So I don't forget the needful housekeeping, this will be what I use from this point forward, with y being the switch for a refresh and u triggering a system upgrade. It's nice to have everything happen together without too much effort.

To do the required debugging that led me to the above along with other things, I issued the following command:

mysqld_safe --datadir=/var/lib/mysql/ &

This starts up the MySQL daemon in safe mode if all is working properly, and it wasn't in my case. Nevertheless, it creates a useful log file called myhost.err in /var/lib/mysql/. This gave me the messages that allowed the debugging of what was happening. It led me to installing net-tools and inetutils using pacman; it was the latter of these that put hostname on my system and got the MySQL server startup a little further along. Other actions included unlocking the ibdata1 data file and removing the ib_logfile0 and ib_logfile1 files to gain something of a clean sheet. The kill command was used to shut down any lingering mysqld sessions too. To ensure that the ibdata1 file was unlocked, I executed the following commands:

mv ibdata1 ibdata1.bad

cp -a ibdata1.bad ibdata1

These renamed the original and then crafted a new duplicate of it, with the -a (archive) switch on the cp command preserving the permissions, ownership and timestamps of the original. Along with the various file operations, I also created a link to my.cnf, the MySQL configuration file on Linux systems, in /etc using the following command executed by root:

ln -s /etc/mysql/my.cnf /etc/my.cnf

While I am unsure if this made a real difference, I also uncommented the lines in the same file that pertained to InnoDB tables. What directed me to these were complaints from mysqld_safe in the myhost.err log file. All I did was to uncomment the lines beginning with innodb and these were 116-118, 121-122 and 124-127 in my configuration file, but it may be different in yours.
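Because line numbers vary between configuration files, matching on the text of the lines is a safer way to script the same change. The sketch below assumes that the commented lines start with #innodb and works on an invented excerpt, not on a real my.cnf.

```shell
# Invented excerpt standing in for my.cnf; the real file and its line numbers will differ.
cnf=$(mktemp)
printf '%s\n' \
  '[mysqld]' \
  '# Uncomment the following if you are using InnoDB tables' \
  '#innodb_data_home_dir = /var/lib/mysql' \
  '#innodb_buffer_pool_size = 16M' \
  'port = 3306' > "$cnf"

# Strip the leading "#" only from lines that start with "#innodb".
sed 's/^#innodb/innodb/' "$cnf" > "$cnf.new"
mv "$cnf.new" "$cnf"

# Count the InnoDB settings that are now active.
grep -c '^innodb' "$cnf"
```

Taking a backup copy of the real file before applying anything like this would be prudent.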

After all the above, the MySQL daemon ran happily and, more importantly, started when I rebooted the virtual machine. Thinking about it now, I believe that it was a lack of disk space, the locking of a data file and the lack of InnoDB support that were stopping the MySQL service from running. Running commands like mysqld start wasn't yielding useful messages, so a lot of digging was needed to get the result that I needed. In fact, that's one of the reasons why I am sharing my experiences here.

In the end, creating databases and loading them with data were all that was needed for me to start to see functioning websites on my (virtual) Arch Linux system. It turned out to be another step on the way to making it workable as a potential replacement for the Linux distributions that I use most often (Linux Mint, Fedora and Ubuntu).

TypeError: unable to create a wrapper for GLib.Variant

31st August 2011

A little while ago, I wrote a piece on here telling of how I got GNOME 3 installed and working on Linux Mint. However, I have discovered since that there was an Achilles heel in the approach that I had taken: using the ricotz/testing PPA so that I could gain additional extensions for use with GNOME Shell. If this was just a repository of GNOME Shell extensions, that would be well and good, but the maintainers also have a more cutting-edge version of GNOME Shell in there. Occasionally, updates from ricotz/testing have been the cause of introducing rough edges to my desktop environment that have resolved themselves within a few hours or days. However, updates came through in the last few days that broke GNOME Tweak Tool. When I tried running it from the command line, all I got was a load of output that included the message that heads this posting and no window popping up that I could use. Because that made me see sense, I stopped living dangerously by using that testing repository. Apparently, there is a staging variant too, but a forum posting elsewhere on the web has warded me off from that too.

Until I encountered the latter posting, I had not heard of the ppa-purge tool, and it came in handy for ridding my system of all packages from the ricotz repository and replacing them with alternatives from more stable ones such as that from the gnome3-team. Since ppa-purge wasn't installed on my computer, I added it in the usual fashion by issuing the following command:

sudo apt-get install ppa-purge

Once that was complete, I executed the following command with the ricotz/testing repository still active:

sudo ppa-purge ppa:ricotz/testing

Once that was complete and everything was very nicely automated too, GNOME Tweak Tool was working again as intended and that's the way that I intend to keep things. Another function of ppa-purge is that it has excised any mention of the ricotz/testing repos from my system too, so nothing more can come from there.

While I was in the business of stabilising GNOME Shell on my system, I decided to add in UGR too. First, another repository needed to be added as follows:

sudo add-apt-repository ppa:ubuntugnometeam/ppa-gen

sudo apt-get update

With that in place, the next steps were to install UGR, and these commands were issued to do the job:

sudo apt-get dist-upgrade

sudo apt-get install ugr-desktop-g3

sudo apt-get upgrade

While that had the less desirable effect of adding games that I didn't need and have since removed, it otherwise worked well, and I now have a new splash screen at starting up and shutting down times for my pains. Hopefully, it will mean that any updates to GNOME Shell that come my way should be a little more polished, too. All that's needed now is for someone to set up a dedicated PPA for GNOME Shell Extensions so I could regain dropdown menus in the top panel for things such as virtual desktops, places and other handy operations that perhaps should have been in GNOME Shell from the beginning. However, that's another discussion, so I'll content myself with what I now have and see if my wish ever gets granted.

Setting up GNOME 3 on Arch Linux

22nd July 2011

It must have been my curiosity that drove me to explore Arch Linux a few weeks ago. Its inclusion on a Linux Format DVD and a few kind words about its being a cutting edge distribution were enough to set me installing it into a VirtualBox virtual machine for a spot of investigation. Despite warnings to the contrary, I took the path of least resistance with the installation, even though I did look among the packages to see if I could select a desktop environment to be added as well. Not finding anything like GNOME in there, I left everything as defaulted and ended up with a command line interface, as I suspected. The next job was to use the pacman command to add the extras that were needed to set in place a fully functioning desktop.

For this, the Arch Linux wiki is a copious source of information, even if it didn't stop me doing things out of sequence. That I didn't go about perusing it linearly was part of the cause of this, but you have to know which place to start first as well. As a result, I have decided to draw everything together here so that it's all in one place and in a more sensible order, even if it wasn't the one that I followed.

The first thing to do is add X.org using the following command:

pacman -Syu xorg-server

The -Syu switch tells pacman to update the package list, upgrade any packages that require it, and add the listed package if it isn't in place already; that's X.org in this case. For my testing, I added xorg-xinit too. This puts the startx command in place. This is the command for adding it:

pacman -S xorg-xinit

With those in place, I would add the VirtualBox Guest Additions next. GNOME Shell requires 3D capability, so 3D acceleration needs to be enabled in the virtual machine's settings, either while the machine is off or when setting it up in the first place. This command will add the required VirtualBox extensions:

pacman -Syu virtualbox-guest-additions

Once that's done, you need to edit /etc/rc.conf by adding vboxguest vboxsf vboxvideo within the brackets on the MODULES line and adding rc.vboxadd within the brackets on the DAEMONS line. On restarting, everything should be available to you, but the modprobe command is there for any troubleshooting.

With the above pre-work done, you can set to installing GNOME, and I added the basic desktop from the gnome package and the other GNOME applications from the gnome-extra one. GDM is the login screen manager, so that's needed too, and the GNOME Tweak Tool is a very handy thing to have for changing settings that you otherwise couldn't. Here are the commands that I used to add all of these:

pacman -Syu gnome

pacman -Syu gnome-extra

pacman -Syu gdm

pacman -Syu gnome-tweak-tool

With those in place, some configuration files were edited so that a GUI was on show instead of a black screen with a command prompt, as useful as that can be. The first of these was /etc/rc.conf where dbus was added within the brackets on the DAEMONS line and fuse was added between those on the MODULES one.

Creating a file named .xinitrc in the root home area and adding the following line to that file makes it possible to run a GNOME session by issuing a startx command:

exec ck-launch-session gnome-session

With all those in place, all that was needed to get a GNOME 3 login screen was a reboot. Arch is so pared back that I could log in as root, not the safest of things to be doing, so I added an account for more regular use. After that, it has been a matter of tweaking the GNOME desktop environment and adding missing applications. The bare-bones installation that I allowed to happen meant that there were a surprising number of them, but that isn't difficult to fix using pacman.

All of this emphasises that Arch Linux is for those who want to pick what they want from an operating system rather than having that decided for them by someone else, an approach that has something going for it with some of the decisions that make their presence felt in computing environments from time to time. While there's no doubt that this isn't for everyone, the documentation is complete enough for the minimalism not to be a problem for experienced Linux users, and I certainly managed to make things work for me once I got them in the right order. Another thing in its favour is that Arch also is a rolling distribution, so you don't need to go through the whole setup routine every six months, unlike some others. So far, it does seem stable enough and even has set me to wondering if I could pop it on a real computer sometime.

You always can install things yourself...

26th November 2009

With Linux distributions offering you everything on a plate, there is a temptation to stick with what they offer rather than taking things into your own hands. For example, Debian's infrequent stable releases and the fact that they don't seem to change software versions throughout the lifetime of such a release means that things such as browser versions are fixed for the purposes of stability; Lenny has stuck with Firefox 3.0.6 and called it IceWeasel for some unknown reason. However, I soon got to grab a tarball for 3.5 and popped its contents into /opt where the self-contained package worked without a hitch. The same modus operandi was used to add Eclipse PDT and that applied to Ubuntu too until buttons stopped working, forcing a jumping of ship to NetBeans.

Of course, you could make a mess when veering away from what is in a distribution, but that should be good enough reason not to get carried away with software additions. With the availability of DEB packages for things like Adobe Reader, RealPlayer, VirtualBox, Google Chrome and Opera, keeping things clean isn't so hard. While your mileage may vary when it comes to how well things work out for you, I have only ever had the occasional problem anyway.

What reminded me of this was a recent irritation with the OpenOffice package included in Ubuntu 9.10 whereby spell checking wasn't working. While there were thoughts about in situ fixes like additional dictionary installations, I ended up plumping for what could be called the lazy option: grabbing a tarball full of DEB packages from the OpenOffice website and extracting its contents into /tmp and, once the URE package was in place, installing from there using the command:

dpkg -i o*

To get application shortcuts added to the main menu, it was a matter of diving into the appropriate subfolder and installing from the GNOME desktop extension package. Of course, Ubuntu's OpenOffice variant was removed as part of all this but, if you wanted to live a little more dangerously, the external installation goes into /opt which means that there shouldn't be too much of a conflict anyway. In any case, the DIY route got me the spell checking in OpenOffice Writer that I needed, so all was well and another Ubuntu rough edge eradicated from my life, for now anyway.

Converting from CGM to Postscript

24th November 2009

One thing that I recently had to investigate was the possibility of converting CGM vector graphics files into Postscript and from there into PDF. Having used ImageMagick for converting images before, that was an obvious option. However, that cannot process CGM files on its own and needs a delegate or helper application as well. This is the case with raw digital camera files too, with UFRaw being the program chosen. For CGM images, the more obscure RALCGM is what's needed, and tracking it down is a bit of an art. Though the history is that it was developed at the U.K.'s Rutherford Appleton Laboratory, it appears that it was left to go off into the wilderness rather than someone keeping an eye on things. With that in mind, here are the installation packages for Windows and Linux (RPM):

RALCGM is a handy command line tool that can convert from CGM to Postscript on its own without any need for ImageMagick at all. From what I have seen, fonts on graphical output may look greyer than black, but it otherwise does its job well. However, considering that it is a freely available tool, one cannot complain too much. There are other packages for doing vector to raster conversion and the ones that I have seen do have GUIs, but the freedom to look at for-cost software wasn't mine to have. The required command looks something like the following:

ralcgm -d PS -oL test.cgm test.ps

The switch -d PS uses the software's Postscript driver and -oL specifies landscape orientation. If you would like to find out more, here's a PDF rendition of the help file that comes with the thing:
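Returning to the conversion command itself, a folder of CGM files could be handled with a simple loop that derives each output name from its input name. The sketch below uses invented file names in a scratch directory and only prints the ralcgm commands that it would run, with the same switches as in the single-file example.

```shell
# Scratch directory with invented file names standing in for real CGM files.
indir=$(mktemp -d)
touch "$indir/chart1.cgm" "$indir/chart2.cgm"

commands=""
for cgm in "$indir"/*.cgm; do
  ps="${cgm%.cgm}.ps"    # same name, .ps extension
  # Same switches as the single-file command; collected and printed, not executed.
  commands="${commands}ralcgm -d PS -oL $cgm $ps
"
done
printf '%s' "$commands"
```

For the onward step to PDF, one option that I am assuming here rather than reporting from experience is Ghostscript's ps2pdf, as in ps2pdf test.ps test.pdf.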

Adding Microsoft core fonts to Debian

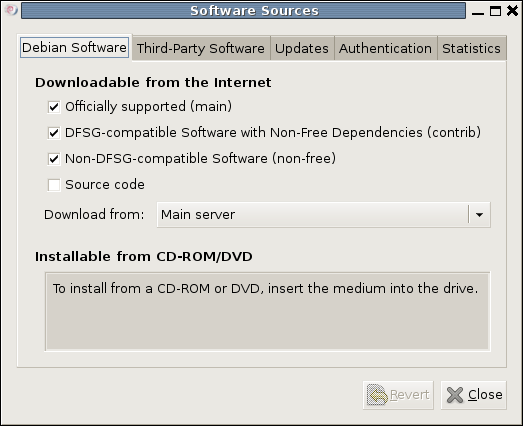

18th June 2009When setting up Ubuntu, I usually add in Microsoft's core fonts by installing the msttcorefonts package using either Synaptic or apt-get. Though I am not sure why I didn't try doing the same thing for Debian until now, it's equally feasible. Just pop over to System > Administration > Software Sources and ensure that the check-boxes for the contrib and non-free categories are checked like you see below.

You could also achieve the same end by editing /etc/apt/sources.list and adding the non-free and contrib keywords to make lines look like these before issuing the command apt-get update as root:

deb http://ftp.debian.org/debian/ lenny main non-free contrib

deb-src http://ftp.debian.org/debian/ lenny main non-free contrib

All that you are doing with the manual editing route is performing the same operations that the more friendly front end would do for you anyway. After that, it's a case of going with the installation method of your choice and restarting Firefox or IceWeasel to see the results.
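The manual edit can be sketched with sed as well, assuming that the existing lines end with the main component only. The example operates on an invented stand-in for /etc/apt/sources.list rather than the real file.

```shell
# Invented stand-in for /etc/apt/sources.list with only the main component enabled.
sources=$(mktemp)
printf '%s\n' \
  'deb http://ftp.debian.org/debian/ lenny main' \
  'deb-src http://ftp.debian.org/debian/ lenny main' > "$sources"

# On lines starting with "deb", append the two extra components after a trailing "main".
sed '/^deb/ s/ main$/ main non-free contrib/' "$sources" > "$sources.new"
mv "$sources.new" "$sources"

cat "$sources"
```

Applied to the real file as root, this would need to be followed by apt-get update so that the new components are picked up.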