TOPIC: NUMERICAL SOFTWARE

Creating placeholder graphics in SAS using PROC GSLIDE for when no data are available

18th March 2012

Recently, I found myself with a plot to produce, but there were no data to be presented, so a placeholder output was needed. For a listing or a table, this is a matter of detecting if there are observations to be listed or summarised and then issuing a placeholder listing using PROC REPORT if there are no data available. Using SAS/GRAPH, something similar can be achieved using one of its curiosities.

In the case of SAS/GRAPH, PROC GSLIDE looks like the tool to use for the same purpose. The procedure does get covered as part of a SAS Institute SAS/GRAPH training course, but they tend to gloss over it. After all, there is little reason to go creating presentations in SAS when PowerPoint and its kind offer far more functionality. However, it would make an interesting tale to tell how GSLIDE became part of SAS/GRAPH in the first place. Its existence makes me wonder if it predates the main slideshow production tools that we use today.

The code that uses PROC GSLIDE to create a placeholder graphic is as follows (detection of the number of observations in a SAS dataset is another entry on here):

proc gslide;

note height=10;

note j=center "No data are available";

run;

quit;

PROC GSLIDE is one of those run group procedures in SAS, so a QUIT statement is needed to close it. The NOTE statements specify the text to be added to the graphic. The first of these creates a blank line of the required height for placing the main text in the middle of the graphic. It is the second one that adds the centred text that tells users of the generated output what has happened.
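To show how the placeholder might be wired into a program, here is a minimal sketch of a macro that counts observations and falls back to PROC GSLIDE when there are none. The macro name and its DS= parameter are hypothetical, and the approach assumes a native SAS dataset: for views and some other engines, the NLOBS attribute can come back as -1, so this check would need adapting there.

```sas
/* Hypothetical macro: issue a placeholder graphic when DS= has no rows */
%macro plot_or_placeholder(ds=);
    %local dsid nobs rc;
    %let nobs = 0;

    /* Open the dataset and read its count of undeleted observations */
    %let dsid = %sysfunc(open(&ds));
    %if &dsid > 0 %then %do;
        %let nobs = %sysfunc(attrn(&dsid, nlobs));
        %let rc = %sysfunc(close(&dsid));
    %end;

    %if &nobs > 0 %then %do;
        /* The real plotting code would go here */
        %put NOTE: &ds has &nobs observations to plot.;
    %end;
    %else %do;
        /* No data (or dataset not found): create the placeholder */
        proc gslide;
            note height=10;
            note j=center "No data are available";
        run;
        quit;
    %end;
%mend plot_or_placeholder;

%plot_or_placeholder(ds=work.plotdata)
```

Here, work.plotdata is only an illustrative dataset name. A missing dataset is treated the same as an empty one, which may or may not be what you want.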

Dealing with variable length warnings in SAS 9.2

11th January 2012

A habit of mine is to put a LENGTH or ATTRIB statement between DATA and SET statements in a SAS data step to reset variable lengths. By default, it appears that this triggers truncation warnings in SAS 9.2 or SAS 9.3 when it didn't in previous versions. SAS 9.1.3, for instance, allowed you to have something like the following for shortening a variable length without issuing any messages at all:

data b;

length x $100;

set a;

run;

In this case, x could have a length of 200 previously and SAS 9.1.3 wouldn't have complained. Now, SAS 9.2 and 9.3 will issue a warning if the new length is less than the old length. This can be useful to know, but it can be changed using the VARLENCHK system option. Though the default value is WARN, it can be set to ERROR if you really want to ensure that there is no chance of truncation. Then, you get error messages and the program fails where it normally would run with warnings. Setting the value of the option to NOWARN restores the type of behaviour seen in SAS 9.1.3 and versions before that.
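As a short sketch of the option in use, the following switches the check off around the one step that shortens the variable and then restores the default. It assumes that datasets a and b are as in the example above and that your site allows VARLENCHK to be changed.

```sas
/* Show the current setting of the option in the log */
proc options option=varlenchk;
run;

/* Suppress the length check for this step only */
options varlenchk=nowarn;

data b;
    length x $100;
    set a;
run;

/* Restore the default so later steps still warn about shortened lengths */
options varlenchk=warn;
```

Keeping the change local to one step means that unexpected shortening elsewhere in the program still gets reported.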

The SAS documentation says that the ability to change VARLENCHK can be restricted by an administrator, so you might need to deal with this situation in a more locked down environment. Then, one option would be to do something like the following:

data b;

drop x;

rename _x=x;

set a;

length _x $100;

_x=strip(x);

run;

While it's a bit more laborious than setting the VARLENCHK option to NOWARN, the idea is that you create a new variable of the right length and replace the old one with it. That gets rid of warnings or errors in the log and resets the variable length as needed. Of course, you have to ensure that there is no value truncation with either remedy. If any is found, then the dataset specification probably needs updating to accommodate the length of the values in the data. After all, there is no substitute for getting to know your data and doing your own checking should you decide to take matters into your own hands.

There is a use for the default behaviour, though. If you use a specification to define a shell for a dataset, then you will be warned when the shell shortens variable lengths. That allows you to adjust either the dataset or your program. Also, it provides additional information when you get variable length mismatch warnings when concatenating or merging datasets. There was a time when SAS wasn't so communicative in these situations and some investigation was needed to establish which variable was affected. Now, that has changed without removing the option to work differently if you so desire. Sometimes, what can seem like an added restriction can have its uses.

Setting VIEWTABLE to show column names in SAS

15th September 2011

The following is the default behaviour in the DMS: Base SAS opens datasets from its Explorer using VIEWTABLE, with variable labels in the column headings and not variable names. Because I have been fortunate to use systems with SAS/FSP both installed and licensed, I have taken to using FSVIEW for browsing SAS datasets as a workaround and, though the interface may look old to some, it proves to be a very flexible tool that still has a few things to teach newer ones. With SAS Enterprise Guide, the dataset viewing functionality is different to both VIEWTABLE and FSVIEW, but I have been able to make it work for me. While the SAS EG dataset viewing tool may appear like the former of these, it has a few tricks to teach its forebear.

Now that I find myself working again with the traditional SAS DMS interface and without SAS/FSP, I decided to see if there was a way to get VIEWTABLE to display variable names instead of variable labels by default. As it happened, the answer was found in an internet forum discussion. From the SAS command line, you can achieve the result by issuing a command like the following:

VT SASHELP.VCOLUMN COLHEADING=NAMES

Above, VT is the VIEWTABLE shortcut, while it is the COLHEADING=NAMES option on the line that gets variable names shown in column headings. Taking it further, you can set this as the default setting for datasets opened using a mouse from Explorer panes using the following procedure:

- Click in or on the Explorer pane to highlight the Explorer window.

- Select Tools > Options > Explorer in the menus.

- Select the Members tab.

- Double-click on the TABLE icon.

- Double-click on the &Open action.

- Set the Action command to: VIEWTABLE %8b.'%s'.DATA COLHEADING=NAMES

- Click on the Set Default button.

- Save changes and close the Explorer Options window.

Because the DMS looks similar across versions 8.0 through to 9.2, the above instructions should be relevant to all of those. While I have yet to get the opportunity to use SAS 9.3, I would be surprised to find that the traditional SAS interface has changed there, even though much else has changed about SAS. In fact, the latest version of SAS has brought quite a few interesting new features for programmers, so it appears that you can do more through a familiar interface, which is not entirely a bad thing. It looks as if this VIEWTABLE tweak could be useful for a while yet.

Using ODS Graphics to Create Plots Using PROC LIFETEST

3rd September 2010

One of the nice things about SAS 9.2 is that creation of statistical graphics is enhanced using the Output Delivery System (ODS). One of the beneficiaries of this is PROC LIFETEST, a procedure that gained a lot when data sets could be created from it using ODS OUTPUT statements. Before that, it was a matter of creating text output and converting it to a SAS data set using a data step, and that was a nuisance on a system that attached special significance to output destinations set up using PROC PRINTTO. What you'll find below is a sample of the type of code for creating a Kaplan-Meier survival plot for time to adverse events resulting in discontinuation of study treatment, with actual and censored times. The IMAGENAME parameter on the ODS GRAPHICS statement line controls the name of the file, and it is possible to change the type using the IMAGEFMT parameter too.

ods graphics on / imagename="fig5";

proc lifetest data=km3 method=km plots=survival;

time timetoae*cens_ae(0);

run;

ods graphics off;

ERROR 22-322: Syntax error, expecting one of the following: a name, *.

14th June 2010

This is one of the classic SAS errors that you can get from PROC SQL, and it can be thrown by a number of things. Missing out a comma in a list of variables in a SELECT statement is one situation that will do it, as will having an extraneous one. As I discovered recently, an ill-defined SAS function nesting like LEFT(TRIM(PERIOD,BEST.)) will have the same effect; notice the missing PUT function in the example. The latter surprised me because I might have expected something more descriptive for this, as would be the case in data step code. In the event, it took some looking before the problem hit me because it's remarkable how blind you can become to things that are staring you in the face. Familiarity really can make you pay less attention.
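For illustration, here is a small sketch of the corrected nesting with the PUT function restored. The visits dataset and the period_c column are hypothetical names made up for the example; only the LEFT(TRIM(PUT(...))) expression reflects the fix described above.

```sas
/* Hypothetical test data with a numeric PERIOD variable */
data visits;
    do period = 1 to 3;
        output;
    end;
run;

proc sql;
    /* left(trim(period, best.)) here would raise the syntax error  */
    /* described in the post; wrapping PERIOD in PUT() supplies the */
    /* numeric-to-character conversion that the format needs        */
    create table labelled as
        select period,
               left(trim(put(period, best.))) as period_c
        from visits;
quit;
```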

Reading data into SAS using the EXCEL and PCFILES library engines

4th March 2010

Recently, I had the opportunity to have a look at the Excel library engine again because I needed to read Excel data into SAS. You need SAS Access for PC Files licensed for it to work, but it does simplify the process of getting data from spreadsheets into SAS. It all revolves around setting up a library pointing at the Excel file using the Excel engine. The result is that every worksheet in the file is treated like a SAS dataset, even if its name contains characters that SAS considers invalid for dataset names. The way around that is to enclose the worksheet name in single quotes with the letter n straight after the closing quote, much in the same way as you'd read in text strings as SAS date values ('04MAR2010'd, for example). To make all of this clearer, I have added some example code below.

libname testxl excel 'c:\test.xls';

data test;

set testxl.'sheet1$'n;

run;

All of the above does apply to SAS on Windows (I have used it successfully in 9.1.3 and 9.2), but there appears to be a way of using the same type of thing on UNIX too. Again, SAS Access for PC Files is needed as well as a SAS PC Files server on an available Windows machine, and it is the PCFILES engine that is specified. I cannot say that I have had the chance to see it working in practice, but seeing it described in SAS Online Documentation corrected my previous misimpressions about the UNIX variant of SAS and its ability to read in Excel or Access data. Well, you learn something new every day.
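As a rough and untested sketch of what the UNIX variant might look like, the PCFILES engine takes the location of the workbook through a PATH= option and the location of the PC Files server through SERVER= and PORT=. The host name below is hypothetical and the port number is an assumption, so both would need replacing with whatever your own PC Files server uses.

```sas
/* pcfiles-host is a hypothetical Windows machine running SAS PC Files Server; */
/* the port value is an assumption and should match the server's own setting.  */
/* The path is as seen from that Windows machine, not from the UNIX session.   */
libname testxl pcfiles path='c:\test.xls' server='pcfiles-host' port=9621;

data test;
    set testxl.'sheet1$'n;
run;

/* Release the workbook once the data have been read */
libname testxl clear;
```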

Working with the ODS templates and styles when batch processing

8th December 2008

I ran into some trouble with creating new templates and styles while running a SAS job in batch mode. The cause was the use of the RSASUSER switch on the SAS command. This sets the SASUSER library to be read-only, and that is what is used to store new templates and styles by default. The fix is to switch these to another library to which there is write access, WORK, for example. Here's the line of code that achieves the manoeuvre:

ODS PATH work.templat(update) sasuser.templat(read) sashelp.tmplmst(read);

Apparently, the same change might be needed for stored processes too, so it's one to keep in mind.
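To put that line in context, here is a minimal sketch of a batch job defining a style after redirecting the template path. The style name styles.batchdemo is hypothetical; the point is only that PROC TEMPLATE writes to the first updatable item store in the path, which is now in WORK.

```sas
/* Put a writable item store at the front of the template search path */
ods path work.templat(update) sasuser.templat(read) sashelp.tmplmst(read);

/* Write the current search path to the log as a check */
ods path show;

/* Hypothetical style that simply inherits from the default one; */
/* it gets stored in WORK.TEMPLAT rather than read-only SASUSER  */
proc template;
    define style styles.batchdemo;
        parent = styles.default;
    end;
run;
```

Since WORK is cleared at the end of the session, anything stored this way has to be recreated by each job that needs it.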

SAS Macro and Dataline/Cards Statements in Data Step

28th October 2008

Recently, I tried code like this in a SAS macro:

data sections;

infile datalines dlm=",";

input graph_table_number $15. text_line @1 @;

datalines;

"11.1 ,Section 11.1",

"11.2 ,Section 11.2",

"11.3 ,Section 11.3"

;

run;

While it works in its own right, including it as part of a macro yielded this type of result:

ERROR: The macro X generated CARDS (data lines) for the DATA step, which could cause incorrect results. The DATA step and the macro will stop executing.

A bit of googling landed me on SAS-L where I spotted a solution like this one that didn't involve throwing everything out:

filename temp temp;

data _null_;

file temp;

put;

run;

data sections;

length graph_table_number $15 text_line $100;

infile temp dlm=",";

input @;

do _infile_=

"11.1 ,Section 11.1",

"11.2 ,Section 11.2",

"11.3 ,Section 11.3"

;

input graph_table_number $15. text_line @1 @;

output;

end;

run;

filename temp clear;

The filename statement and ensuing data step create a dummy file in the SAS work area that gets cleared at the end of every session. That seems to fool the macro engine into thinking that input is from a file and not the CARDS/DATALINES method, to which it takes grave exception. The trailing @'s hold an input record for the execution of the next INPUT statement within the same iteration of the DATA step so that the automatic variable _infile_ can be fed as part of the input process in a do block, while the output statement ensures that all records from the input buffer reach the data set being created.

While this method does work, I would like to know the underlying reason why SAS Macro won't play well with included data entry using DATALINES or CARDS statements in a data step, particularly when it allows other methods such as SQL insert statements or standard variable assignment in a data step. I find it such a curious behaviour that I remain on the lookout for the explanation of why it is like this.
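For comparison, here is a minimal sketch of the SQL insert route mentioned above, which avoids DATALINES altogether and so can sit inside a macro. The macro name make_sections is hypothetical, and the variable lengths are taken from the LENGTH statement in the workaround code.

```sas
/* Hypothetical macro building the same three rows with PROC SQL */
%macro make_sections;
    proc sql;
        /* Define the table structure up front */
        create table sections
            (graph_table_number char(15),
             text_line          char(100));

        /* One VALUES clause per row */
        insert into sections
            values ('11.1', 'Section 11.1')
            values ('11.2', 'Section 11.2')
            values ('11.3', 'Section 11.3');
    quit;
%mend make_sections;

%make_sections
```

This is fine for a handful of rows, though it would get unwieldy for anything much longer.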

Transferring data between SAS and R

5th June 2008

A question regarding the ability to transfer data between SAS and R set me off on a spot of investigation a while back, and I have always planned to share the results of my labours. Once I managed to locate the required documentation, things became clearer with further inspection. Functions from the foreign package seem to offer the most from the data import and export point of view, so they're what I'll be featuring in this posting.

Here, I am starting with importing, and using the read.ssd function makes life so much easier for getting SAS data into R. I discovered that the foreign package may not be loaded by default, and whether it is loaded can be determined easily using the following command:

search()

If package:foreign isn't in the list, then you need to issue the following function call:

library(foreign)

Of course, if the foreign package isn't installed, none of this will work. It should live in the library sub-folder of the main R installation directory, but if it isn't there, then downloading the relevant binary package from CRAN is in order. Assuming that all is installed, then a command like the following will perform the needful:

read.ssd("c:/data","data1",sascmd="C:/Program Files/SAS Institute/SAS/V8/sas.exe")

This creates a temporary SAS program that converts the SAS data set into a transport file for reading by another R function that is called in the background, read.xport. From my experience, it all seems to work fairly seamlessly.

To get data out of R and into SAS is a multi-stage process, even with the foreign package. While there are other ways, using write.foreign seems more useful than most. Here is an example function call:

write.foreign(data1,"C:/test.txt","C:/test.sas",package="SAS",dataname="data1",validvarname="V7")

While no SAS data sets are created at this stage, a text file is generated along with a SAS program for converting it into a data set. Running the SAS program is a separate step that follows the creation of the two files. Even if it is less streamlined than read.ssd, write.foreign does make it easier to transfer data into SAS than having to write a program from scratch to read in write.table output.

In summary, R can neither read nor write SAS data sets by itself, so you need SAS installed to really make things happen. SAS gets called by read.ssd, and I feel that it would be better if it was called by write.foreign also, rather than a SAS program being generated for execution later on. Even so, it is good to see some custom functionality being provided that makes life easier. There's also the Hmisc package, but my experiences while working with that on S-Plus have been such that it compares less favourably with foreign on the reliability front. Saying that, things may have changed since I last tried it.

Controlling what the wpgm command calls in Windows SAS

30th November 2007

Recently, I was setting up a key mapping in SAS 8.1 such that the log and output windows are cleared and a SAS program run in the most recently used program editor window. The idea was that debugging would be easier, and the command was what you see below:

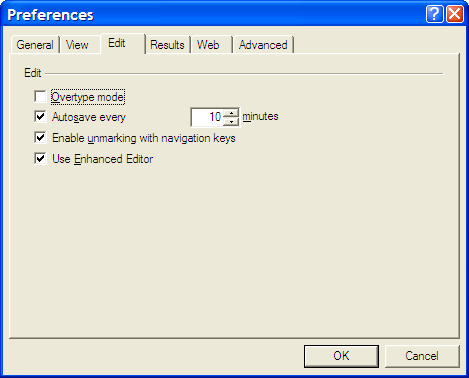

log; clear; output; clear; wpgm; submit

However, I was having trouble getting SAS to pick up the most recently used Enhanced Editor window, and it was opening up an old style Program Editor window in its place. If I had wanted to use that, I would have used pgm and not wpgm. What was conspiring against me was a pesky system option. Pottering over to Tools > Options > Preferences and navigating to the Edit tab brought me to the cause of the problem: the Use Enhanced Editor check box was in the clear, and fixing that set me on my way. SAS 9 could also be afflicted by the same irritation and that is where I got the screenshot that you see below where everything is hunky-dory.