TOPIC: KDE

Upgrading avahi-dnsconfd on Ubuntu

This is how I got around a problem that occurred when I was updating a virtualised Ubuntu 16.04 instance that I have. My usual way to do this is using apt-get or apt from the command line, and the process halted because a pre-removal script for the upgrade of avahi-dnsconfd failed. The cause was its failure to disable the avahi daemon beforehand, so I needed to execute the following command before repeating the operation:

sudo systemctl disable avahi-daemon

Once the upgrade had completed, then it was time to re-enable the service using the following command:

sudo systemctl enable avahi-daemon

Ideally, this would be completed without such manual intervention. As it happens, there is a bug report for the unexpected behaviour. Hopefully, it will be sorted soon, but these steps will fix things for now.
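The steps above could be wrapped into one small script. This is only a sketch: `DRY_RUN`, `run` and `upgrade_with_avahi_disabled` are names that I made up for illustration, and `apt-get upgrade` is an assumed stand-in for whatever upgrade command was interrupted.

```shell
#!/bin/sh
# Sketch only: DRY_RUN, run and upgrade_with_avahi_disabled are hypothetical
# names, and "apt-get upgrade" is an assumed stand-in for the real upgrade.

# With DRY_RUN=1 (the default here), commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

upgrade_with_avahi_disabled() {
    # Disable the daemon first, as the failed upgrade needed.
    run sudo systemctl disable avahi-daemon || return 1
    run sudo apt-get upgrade
    status=$?
    # Re-enable the service whether or not the upgrade succeeded.
    run sudo systemctl enable avahi-daemon
    return "$status"
}
```

Calling `upgrade_with_avahi_disabled` as it stands only prints the three commands for review; setting `DRY_RUN=0` beforehand would make it execute them.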

Setting up GNOME 3 on Arch Linux

It must have been my curiosity that drove me to explore Arch Linux a few weeks ago. Its inclusion on a Linux Format DVD and a few kind words about its being a cutting edge distribution were enough to set me installing it into a VirtualBox virtual machine for a spot of investigation. Despite warnings to the contrary, I took the path of least resistance with the installation, even though I did look among the packages to see if I could select a desktop environment to be added as well. Not finding anything like GNOME in there, I left everything as defaulted and ended up with a command line interface, as I suspected. The next job was to use the pacman command to add the extras that were needed to set in place a fully functioning desktop.

For this, the Arch Linux wiki is a copious source of information, even if it didn't stop me doing things out of sequence. That I didn't go about perusing it linearly was part of the cause of this, but you have to know which place to start first as well. As a result, I have decided to draw everything together here so that it's all in one place and in a more sensible order, even if it wasn't the one that I followed.

The first thing to do is add X.org using the following command:

pacman -Syu xorg-server

The -Syu switch tells pacman to update the package list, upgrade any packages that require it, and add the listed package if it isn't in place already; that's X.org in this case. For my testing, I added xorg-xinit too. This puts the startx command in place. This is the command for adding it:

pacman -S xorg-xinit

With those in place, I would add the VirtualBox Guest Additions next. GNOME Shell requires 3D capability, so you need to have 3D acceleration enabled for the virtual machine, either while it is off or when setting it up in the first place. This command will add the required VirtualBox extensions:

pacman -Syu virtualbox-guest-additions

Once that's done, you need to edit /etc/rc.conf by adding vboxguest vboxsf vboxvideo within the brackets on the MODULES line and adding rc.vboxadd within the brackets on the DAEMONS line. On restarting, everything should be available to you, but the modprobe command is there for any troubleshooting.
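For the troubleshooting mentioned above, a small check can report which of the three modules are not loaded. This is a sketch under my own assumptions: `missing_modules` is a hypothetical helper, and it expects text in the format printed by `lsmod`, a command that the steps above do not otherwise use.

```shell
#!/bin/sh
# Hypothetical helper: print every named module that is absent from the
# given lsmod-style listing.
missing_modules() {
    # $1: text in lsmod format; remaining arguments: module names to look for
    loaded="$1"
    shift
    for mod in "$@"; do
        # The first column of each lsmod line is the module name.
        if ! printf '%s\n' "$loaded" | awk '{print $1}' | grep -qx "$mod"; then
            echo "$mod"
        fi
    done
}
```

Running `missing_modules "$(lsmod)" vboxguest vboxsf vboxvideo` as root after a restart should print nothing if all is well; anything that it does print can be passed to modprobe by hand.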

With the above pre-work done, you can set to installing GNOME, and I added the basic desktop from the gnome package and the other GNOME applications from the gnome-extra one. GDM is the login screen manager, so that's needed too, and the GNOME Tweak Tool is a very handy thing to have for changing settings that you otherwise couldn't. Here are the commands that I used to add all of these:

pacman -Syu gnome

pacman -Syu gnome-extra

pacman -Syu gdm

pacman -Syu gnome-tweak-tool
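Since pacman accepts several package names at once, the four commands above could be collapsed into a single call. The sketch below assumes the package names as they stood when I did this; `PACMAN` and `install_gnome_desktop` are hypothetical names, with the variable there only so that the command can be swapped out for a trial run.

```shell
#!/bin/sh
# PACMAN is a hypothetical override; it defaults to the real pacman.
PACMAN="${PACMAN:-pacman}"

install_gnome_desktop() {
    # One sync and upgrade pass, then all four packages in the same transaction.
    "$PACMAN" -Syu gnome gnome-extra gdm gnome-tweak-tool
}
```

Setting `PACMAN=echo` before calling `install_gnome_desktop` prints the arguments instead of installing anything, which is handy for checking the list first.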

With those in place, some configuration files were edited so that a GUI was on show instead of a black screen with a command prompt, as useful as that can be. The first of these was /etc/rc.conf where dbus was added within the brackets on the DAEMONS line and fuse was added between those on the MODULES one.

Creating a file named .xinitrc in the root home area and adding the following line to that file makes a GNOME session start when a startx command is issued:

exec ck-launch-session gnome-session

With all those in place, all that was needed to get a GNOME 3 login screen was a reboot. Arch is so pared back that I could log in as root, not the safest of things to be doing, so I added an account for more regular use. After that, it has been a matter of tweaking the GNOME desktop environment and adding missing applications. The bare-bones installation that I allowed to happen meant that there were a surprising number of them, but that isn't difficult to fix using pacman.
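Adding that regular account can be done from the command line as root. What follows is a sketch rather than a record of what I typed: `add_regular_user`, `USERADD` and `PASSWD` are hypothetical names, and the choice of /bin/bash as the login shell is an assumption.

```shell
#!/bin/sh
# USERADD and PASSWD are hypothetical overrides for the real commands.
USERADD="${USERADD:-useradd}"
PASSWD="${PASSWD:-passwd}"

add_regular_user() {
    # $1: login name for the new account
    [ -n "$1" ] || { echo "usage: add_regular_user NAME" >&2; return 1; }
    # -m creates the home directory; -s sets the login shell (assumed bash).
    "$USERADD" -m -s /bin/bash "$1" || return 1
    # Prompt for the new account's password.
    "$PASSWD" "$1"
}
```

A call such as `add_regular_user alice`, with alice being a made-up name, creates the account and then asks for its password.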

All of this emphasises that Arch Linux is for those who want to pick what they want from an operating system rather than having that decided for them by someone else, an approach that has something going for it with some of the decisions that make their presence felt in computing environments from time to time. While there's no doubt that this isn't for everyone, the documentation is complete enough for the minimalism not to be a problem for experienced Linux users, and I certainly managed to make things work for me once I got them in the right order. Another thing in its favour is that Arch is also a rolling distribution, so you don't need to go through the whole setup routine every six months, unlike some others. So far, it does seem stable enough and even has set me to wondering if I could pop it on a real computer sometime.

Choices, choices…

While choice is a great thing, too much of it can be confusing, and the world of Linux is one very full of decisions. The first of these centres around the distro to use when taking the plunge; you quickly find that there can be quite a lot to it. In fact, it is a little like buying your first SLR/DSLR or your first car: you only really know what you are doing after your first one. Putting it another way, you only know how to get a house built after you have done just that.

With that in mind, it is probably best to play a little on the fringes of the Linux world before committing yourself. It used to be that you had two main choices for your dabbling:

- using a spare PC

- dual booting with Windows by either partitioning a hard drive or dedicating one for your Linux needs.

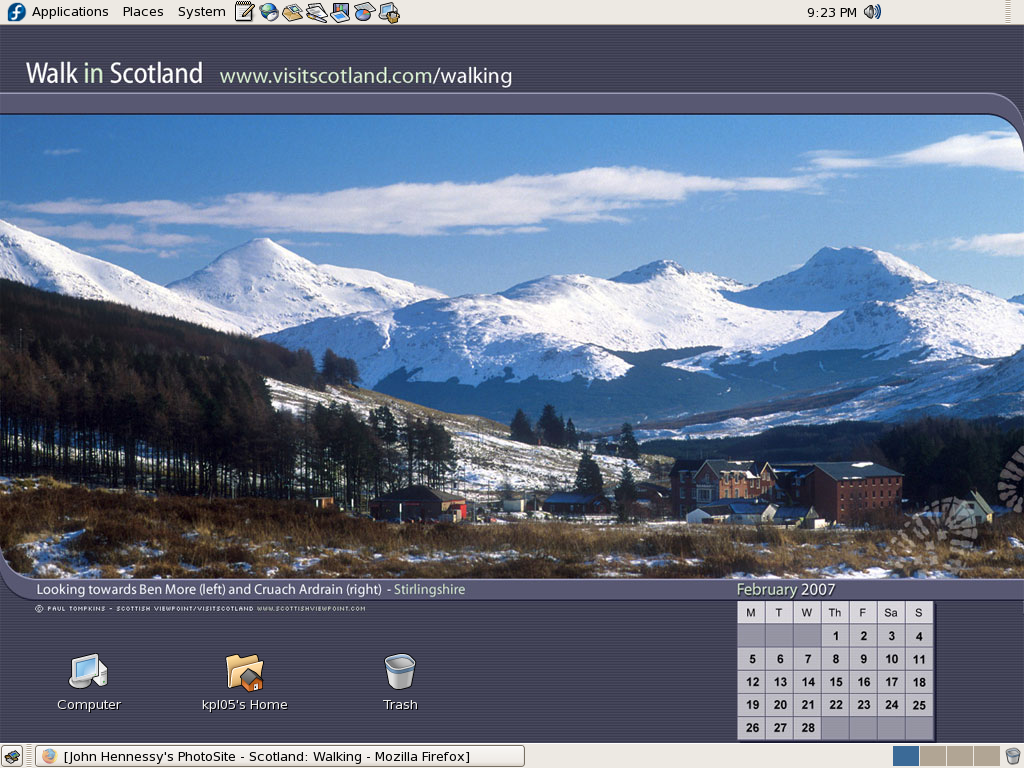

In these times, innovations such as Live CD distributions and virtualisation technology keep you away from such measures. In fact, I would suggest starting with the former and progressing to the latter for more detailed perusal; it's always easy to wipe and restore virtual machines anyway, so you can evaluate several distros at the same time if you have the hard drive space. It is also a great way to decide which desktop environment you like. Otherwise, terms like KDE, GNOME, XFCE, etc. might not mean much.

The mention of desktop environments brings me to software choices because they do drive what software is available to you. For instance, the Outlook lookalike that is Evolution is more likely to appear where GNOME is installed than where you have KDE. The opposite applies to the music player Amarok. Nevertheless, you do find certain stalwarts making a regular appearance; Firefox, OpenOffice and the GIMP all fall into this category.

The nice thing about Linux is that distros more often than not contain all the software that you are likely to need. However, that doesn't mean that it is all on the disk and that you have to select what you need during the installation. Though there might have been a time when it felt like that, my recent experience has been that a minimum installation is set in place that does all the basics, leaving you to easily add the extras later on an as-needed basis. I have also found that online updates are a strong feature too.

Picking up what you need when you need it has major advantages, the big one being that Linux grows with you. You can add items like Apache, PHP and MySQL when you know what they are and why you need them. It's a long way from picking applications of which you know very little at installation time and with the suspicion that any future installation might land you in dependency hell while performing compilation of application source code; the temptation to install everything that you saw was a strong one. The "learn before you use" approach favoured by how things are done nowadays is an excellent one.

Even if life is easier in the Linux camp these days, there is no harm in sketching out your software needs. Any distribution should be able to fulfil most if not all of them. As it happened, the only third-party application that I have needed to install on Ubuntu without recourse to Synaptic was VMware Workstation, and that procedure thankfully turned out to be pretty painless.

A fallback installation routine?

In a previous sustained spell of Linux meddling, the following installation routine was one that I encountered rather too often when RPMs didn't do what I required of them (having a SUSE distro in a world dominated by a Red Hat standard didn't make things any easier...):

tar xzvf progname.tar.gz; cd progname

The first part of the command extracts from a tarball compressed using gzip and the second one changes into the new directory created by the extraction. For files compressed with bzip2 use:

tar xjvf progname.tar.bz2; cd progname
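The only difference between the two extraction commands is the z or j in the flags, so the choice can be made from the file name. The following is a minimal sketch; `tar_flags` is a hypothetical helper and the extra .tgz and .tbz2 suffixes are my own additions.

```shell
#!/bin/sh
# Hypothetical helper: pick the tar flags that match an archive's suffix.
tar_flags() {
    case "$1" in
        *.tar.gz|*.tgz)   echo "xzvf" ;;   # gzip-compressed tarball
        *.tar.bz2|*.tbz2) echo "xjvf" ;;   # bzip2-compressed tarball
        *) echo "unsupported archive: $1" >&2; return 1 ;;
    esac
}
```

It would be used along the lines of `tar "$(tar_flags progname.tar.bz2)" progname.tar.bz2`.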

The command below configures, compiles and installs the package, running the last part of the command in its own shell.

./configure; make; su -c "make install"

Yes, the procedure is a bit convoluted, but it would have been fine if it always worked. My experience was that the process was a far from foolproof one. For instance, an unsatisfied dependency is all that is needed to stop you in your tracks. Attempting to install a GNOME application on a KDE-based system is as good a way to encounter this result as any. Other horrid errors also played havoc with hopeful plans from time to time.
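One way to make such failures easier to pin down is to stop at the first step that fails and say which one it was. This is a sketch and not something that I used at the time; `build_and_install` and its exit codes are hypothetical.

```shell
#!/bin/sh
# Hypothetical wrapper around the configure, make and install steps.
# The body runs in a subshell, so the caller's working directory is untouched.
build_and_install() (
    # $1: directory created by extracting the tarball
    cd "$1" || exit 1
    ./configure || { echo "configure failed; check for missing dependencies" >&2; exit 2; }
    make || { echo "make failed" >&2; exit 3; }
    # Only the install step needs root privileges.
    su -c "make install" || { echo "install failed" >&2; exit 4; }
)
```

After extraction, `build_and_install progname` runs the three steps in order, and the exit code tells you how far it got.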

It shouldn't surprise you to find that I will be staying away from the compilation/installation business with my main Ubuntu system. Synaptic Package Manager and its satisfactory dependency resolution fulfil my needs well and there is the Update Manager too; I'll be leaving it for Canonical to do the testing and make the decisions regarding what is ready for my PC as they maintain their software repositories. My past tinkering often created a mess, and I'll be leaving that sort of experimentation for the safe confines of a virtual machine from now on...

A perspective on Linux

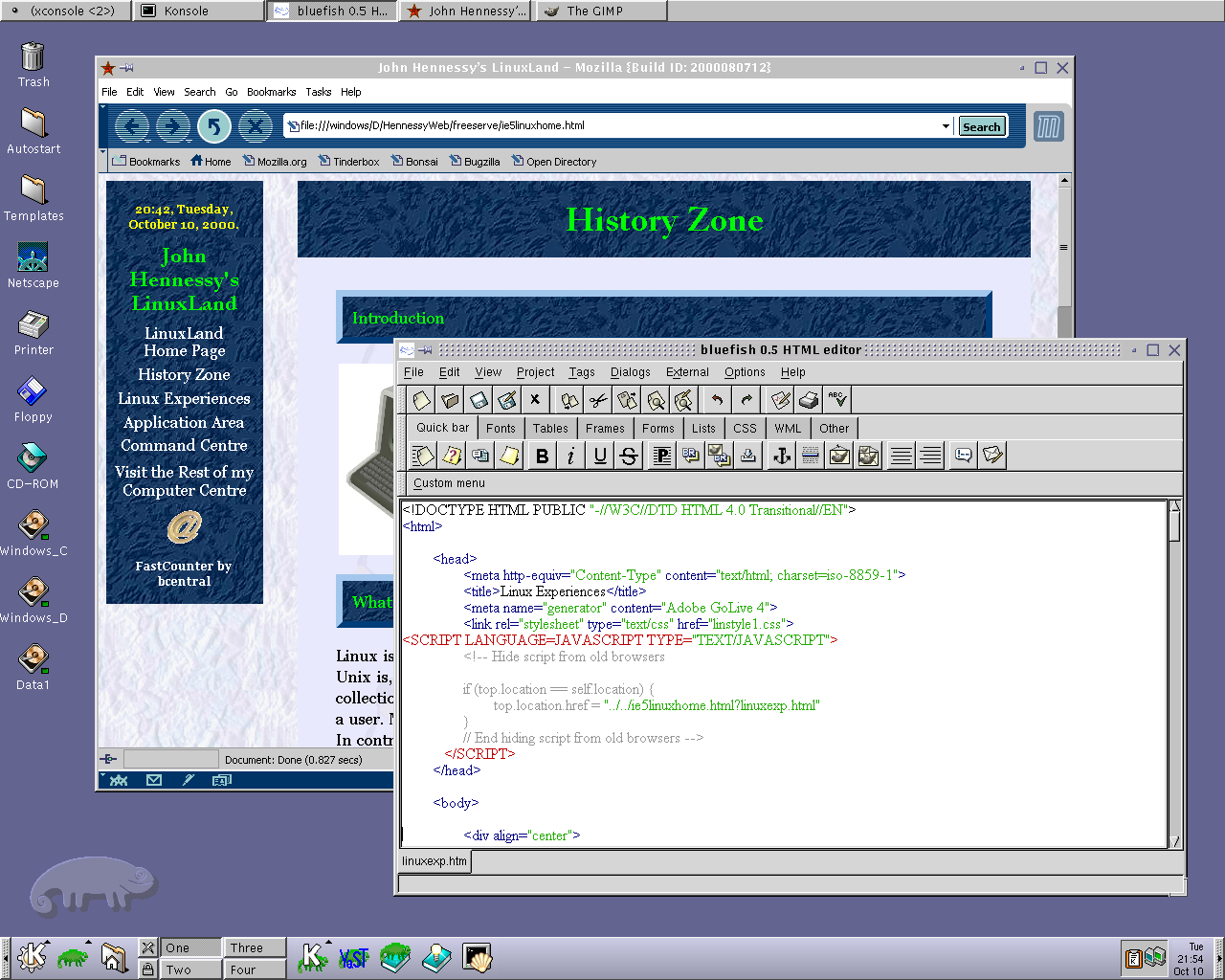

Recently, I have revisited an old website that I used to have online in and around 2000 and that has been retired for a while. One thing that it had in common with this blog was its focus on computer technology. While I don’t remember blogging being bandied about as a term back then, a weblog would have fulfilled the site’s purpose much better. One of the sections of this old world website was dedicated to Linux and UNIX; this was where I collected and shared my experiences of these. These days, unless it is held in some cache somewhere (rather unlikely, I think), the only place that it is found is what I bundled together in a tar.gz file for transfer to Linux. Irony strikes…

Back then, my choice of Linux was SuSE 6.2, followed by 6.4 from a PC Plus DVD. It was the first, and only, Linux distro that I bought after exploring a selection of distros from cover-mounted CDs in books and magazines. While I did like it, it wasn’t enough to tempt me away from Windows. I had issues with hardware, and they got in the way of a move. Apart from what some might judge to be clunkiness, there were fewer impediments on the software side.

I am a DIY system builder and there were issues with Linux support of my hardware, particularly my modem. Rather than being in possession of all the electronic wherewithal that a full modem would need, it got the operating system to do some of the work. The trouble was that this locked you into using Windows, hence its Winmodem moniker. Besides this, my Zip drive was vital to me and SuSE didn’t support it out of the box: a kernel recompilation was in order and could involve losing any extensions that SuSE had actually added. Another foible was non-support of a now obsolete UDMA 66 expansion card.

But improvements in hardware support were coming on the scene. Support for printing with CUPS, scanning with SANE and audio with ALSA was coming along and has matured nicely since. Apart from cases where vendors refuse to help the Open-Source community and bleeding-edge hardware that needs drivers to be recoded according to the demands of the GPL, things have come a long, long way.

Software-wise, the only thing holding me back from migrating to Linux was my use of Microcal (now OriginLab) Origin, a scientific data visualisation and analysis package that was invaluable for my work. Even then, that could be run using WINE, the Windows API library for Linux. OpenOffice could easily have replaced MS Office for my purposes, unless formula editing was a feature outstanding from the specification. GIMP, once I had ascended the learning curve, would have coped with my graphics processing needs. After committing myself to non-visual web development, Bluefish and Quanta+ would have fulfilled my web development needs. Web technologies such as Perl, PHP, Apache and SQL have always been very much at home on Linux, so no issue there. At that stage, experimenting with these was very much in my future. Surprisingly, web browsing wasn’t that strong in Linux then. Mozilla was still in the alpha/beta development phase and needed many rough ends sorting, while the dreadful Netscape 4 was in full swing with offerings like Nautilus coming on stream. Typography support was another area of development at the time, which fed through into how browsers rendered web pages. Downloading and compiling xfstt did resolve the situation.

These days, I have virtual machines set up for Ubuntu, Fedora Core and Mandriva while openSUSE is another option. I spent Saturday night poking around in Fedora (I know, I should have better things to be doing…) and it feels very slick, a world away from where Red Hat was a decade ago. The same applies to Ubuntu, which is leagues ahead of Debian, on which it is based. With both of these, you get applications for updating the packages in the distribution; not something that you might have seen a few years ago. Support for audio and printing comes straight out of the box. I assume that scanner and digital camera support are the same; they need to be. Fedora includes the virtual machine engine that is Xen. I am intrigued by this but running a VM within a VM does seem peculiar. Nevertheless, if that comes off, it might be that Fedora goes onto my spare PC with Windows loaded onto one or more virtual machines. It’s an intriguing idea and having Fedora installed on a real PC might even allow me to see workspaces changed onscreen as if they were the sides of a cube, very nice. Mandriva also offers the same visual treat, but is not a distro that I have been using a lot. The desktop environment may be KDE rather than GNOME as it is in the others, but all the same features are on board. The irony though is that, after starting out my Linux voyage on KDE, I am now more familiar with GNOME and, aesthetically speaking, it does look that little better to my eye.

So, would I move to Linux these days? Well, it is supported by a more persuasive case than it has ever been, and I would have to say that it is only logistics and the avoidance of upheaval that are stopping me now. If I were to move to Linux, then it would be by reversing the current situation: going from Linux running in a VM on Windows to Windows running in a VM on Linux. Having Windows around would be good for my personal education and ease the upheaval caused by the migration. Then, it would be a matter of watching what hardware gets installed.