TOPIC: GITHUB

SAS Packages: Revolutionising code sharing in the SAS ecosystem

26th July 2025

In the world of statistical programming, SAS has long been the backbone of data analysis for countless organisations worldwide. Yet, for decades, one of the most significant challenges facing SAS practitioners has been the efficient sharing and reuse of code. Knowledge and expertise have often remained siloed within individual developers or teams, creating inefficiencies and missed opportunities for collaboration. Enter the SAS Packages Framework (SPF), a solution that changes how SAS professionals share, distribute and utilise code across their organisations and the broader community.

The Problem: Fragmented Knowledge and Complex Dependencies

Anyone who has worked extensively with SAS knows the frustration of trying to share complex macros or functions with colleagues. Traditional code sharing in SAS has been plagued by several issues:

- Dependency nightmares: A single macro often relies on dozens of utility macros working behind the scenes, making it nearly impossible to share everything needed for the code to function properly

- Version control chaos: Keeping track of which version of which macro works with which other components becomes an administrative burden

- Platform compatibility issues: Code that works on Windows might fail on Linux systems and vice versa

- Lack of documentation: Without proper documentation and help systems, even the most elegant code becomes unusable to others

- Knowledge concentration: Valuable SAS expertise remains trapped within individuals rather than being shared with the broader community

These challenges have historically meant that SAS developers spend countless hours reinventing the wheel, recreating functionality that already exists elsewhere in their organisation or the wider SAS community.

The Solution: SAS Packages Framework

The SAS Packages Framework, developed by Bartosz Jabłoński, represents a paradigm shift in how SAS code is organised, shared and deployed. At its core, a SAS package is an automatically generated, single, standalone zip file containing organised and ordered code structures, extended with additional metadata and utility files. This solution addresses the fundamental challenges of SAS code sharing by providing:

- Functionality over complexity: Instead of worrying about 73 utility macros working in the background, you simply share one file and tell your colleagues about the main functionality they need to use.

- Complete self-containment: Everything needed for the code to function is bundled into one file, eliminating the "did I remember to include everything?" problem that has plagued SAS developers for years.

- Automatic dependency management: The framework handles the loading order of code components and automatically updates system options like cmplib= and fmtsearch= for functions and formats.

- Cross-platform compatibility: Packages work seamlessly across different operating systems, from Windows to Linux and UNIX environments.

Beyond Macros: A Spectrum of SAS Functionality

One of the most compelling aspects of the SAS Packages Framework is its versatility. While many code-sharing solutions focus solely on macros, SAS packages support a wide range of SAS functionality:

- User-defined functions (both FCMP and CASL)

- IML modules for matrix programming

- PROC PROTO C routines for high-performance computing

- Custom formats and informats

- Libraries and datasets

- PROC DS2 threads and packages

- Data generation code

- Additional content such as documentation PDF files

This comprehensive approach means that virtually any SAS functionality can be packaged and shared, making the framework suitable for everything from simple utility macros to complex analytical frameworks.

Real-World Applications: From Pharmaceutical Research to General Analytics

The adoption of SAS packages has been particularly notable in the pharmaceutical industry, where code quality, validation and sharing are critical concerns. The PharmaForest initiative, led by PHUSE Japan's Open-Source Technology Working Group, exemplifies how the framework is being used to revolutionise pharmaceutical SAS programming. PharmaForest offers a collaborative repository of SAS packages specifically designed for pharmaceutical applications, including:

- OncoPlotter: A comprehensive package for creating figures commonly used in oncology studies

- SAS FAKER: Tools for generating realistic test data while maintaining privacy

- SASLogChecker: Automated log review and validation tools

- rtfCreator: Streamlined RTF output generation

The initiative's philosophy captures perfectly the spirit of the SAS Packages Framework: "Through SAS packages, we want to actively encourage sharing of SAS know-how that has often stayed within individuals. By doing this, we aim to build up collective knowledge, boost productivity, ensure quality through standardisation and energise our community".

The SASPAC Archive: A Growing Ecosystem

The establishment of SASPAC (SAS Packages Archive) represents the maturation of the SAS packages ecosystem. This dedicated repository serves as the official home for SAS packages, with each package maintained as a separate repository complete with version history and documentation. Some notable packages available through SASPAC include:

- BasePlus: Extends BASE SAS with functionality that many developers find themselves wishing was built into SAS itself. With 12 stars on GitHub, it's become one of the most popular packages in the archive.

- MacroArray: Provides macro array functionality that simplifies complex macro programming tasks, addressing a long-standing gap in SAS's macro language capabilities.

- SQLinDS: Enables SQL queries within data steps, bridging the gap between SAS's powerful data step processing and SQL's intuitive query syntax.

- DFA (Dynamic Function Arrays): Offers advanced data structures that extend SAS's analytical capabilities.

- GSM (Generate Secure Macros): Provides tools for protecting proprietary code while still enabling sharing and collaboration.

Getting Started: Surprisingly Simple

Despite the capabilities, getting started with SAS packages is fairly straightforward. The framework can be deployed in multiple ways, depending on your needs. For a quick test or one-time use, you can enable the framework directly from the web:

filename packages "%sysfunc(pathname(work))";

filename SPFinit url "https://raw.githubusercontent.com/yabwon/SAS_PACKAGES/main/SPF/SPFinit.sas";

%include SPFinit;

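For permanent installation, you create a directory for your packages, point the packages fileref at it and, with the framework already enabled as above so that the %installPackage macro is available, install the framework there: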

filename packages "C:\SAS_PACKAGES";

%installPackage(SPFinit)

Once installed, using packages becomes as simple as:

%installPackage(packageName)

%helpPackage(packageName)

%loadPackage(packageName)
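Where the permanent set-up is scripted from a shell, the two SAS statements that enable the framework from a local directory could be written to a small bootstrap file. This is only a sketch under stated assumptions: the helper name write_spf_bootstrap and the default file name spf_bootstrap.sas are hypothetical, and it presumes that SPFinit.sas has already been saved into the packages directory.

```shell
# Hypothetical helper: write a small SAS bootstrap file that points the
# "packages" fileref at a local directory and includes SPFinit.sas from it.
# Assumes SPFinit.sas has already been saved into that directory.
write_spf_bootstrap() {
    pkg_dir=$1
    out_file=${2:-spf_bootstrap.sas}
    cat > "$out_file" <<EOF
filename packages "$pkg_dir";
%include packages(SPFinit.sas);
EOF
}

# Example (paths are placeholders):
#   write_spf_bootstrap "$HOME/SAS_PACKAGES" "$HOME/spf_bootstrap.sas"
```

The generated file can then be included at the start of a SAS session before any %installPackage or %loadPackage calls.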

Developer Benefits: Quality and Efficiency

For SAS developers, the framework offers numerous advantages that go beyond simple code sharing:

- Enforced organisation: The package development process naturally encourages better code organisation and documentation practices.

- Built-in testing: The framework includes testing capabilities that help ensure code quality and reliability.

- Version management: Packages include metadata such as version numbers and generation timestamps, supporting modern DevOps practices.

- Integrity verification: The framework provides tools to verify package authenticity and integrity, addressing security concerns in enterprise environments.

- Cherry-picking: Users can load only specific components from a package, reducing memory usage and namespace pollution.

The Future of SAS Code Sharing

The growing adoption of SAS packages represents more than just a new tool; it signals a fundamental shift towards a more collaborative and efficient SAS ecosystem. The framework's MIT licensing and 100% open-source nature ensure that it remains accessible to all SAS users, from individual practitioners to large enterprise installations. This democratisation of advanced code-sharing capabilities levels the playing field and enables even small teams to benefit from enterprise-grade development practices.

As the ecosystem continues to grow, with contributions from pharmaceutical companies, academic institutions and individual developers worldwide, the SAS Packages Framework is proving that the future of SAS programming lies not in isolated development, but in collaborative, community-driven innovation.

For SAS practitioners looking to modernise their development practices, improve code quality and tap into the collective knowledge of the global SAS community, exploring SAS packages isn't just an option; it's becoming an essential step towards more efficient and effective statistical programming.

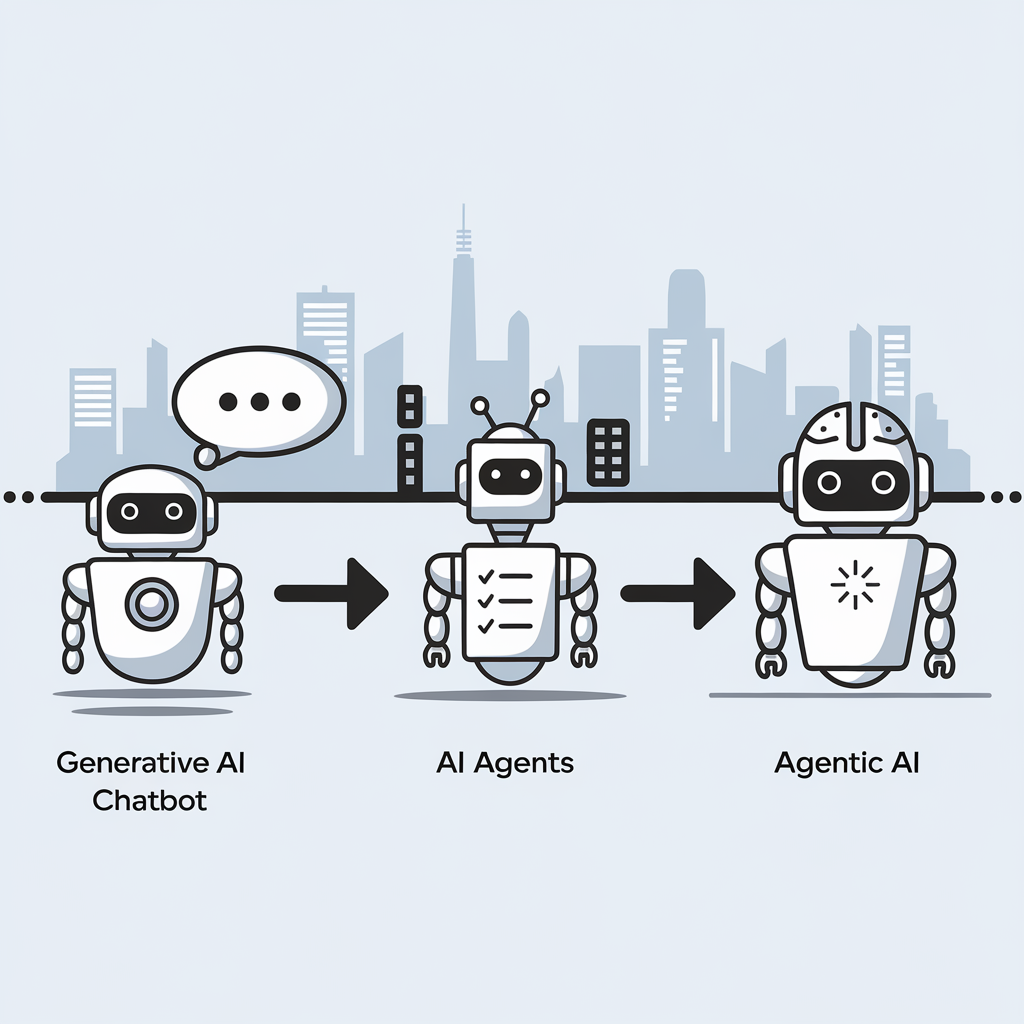

The critical differences between Generative AI, AI Agents, and Agentic Systems

9th April 2025

The distinction between three key artificial intelligence concepts can be explained without technical jargon. Here then are the descriptions:

- Generative AI functions as a responsive assistant that creates content when prompted but lacks initiative, memory or goals. Examples include ChatGPT, Claude and GitHub Copilot.

- AI Agents represent a step forward, actively completing tasks by planning, using tools, interacting with APIs and working through processes independently with minimal supervision, similar to a junior colleague.

- Agentic AI represents the most sophisticated approach, possessing goals and memory while adapting to changing circumstances; it operates as a thinking system rather than a simple chatbot, capable of collaboration, self-improvement and autonomous operation.

This evolution marks a significant shift from building applications to designing autonomous workflows, with various frameworks currently being developed in this rapidly advancing field.

Finding human balance in an age of AI code generation

12th March 2025

Recently, I was asked about how I felt about AI. Given that the other person was not an enthusiast, I picked on something that happened to me, not so long ago. It involved both Perplexity and Google Gemini when I was trying to debug something: both produced too much code. The experience almost inspired a LinkedIn post, only for some of the thinking to go online here for now. A spot of brainstorming using an LLM sounds like a useful exercise.

Going back to the original question, it happened during a meeting about potential freelance work. Thus, I tapped into experiences with code generators over several decades. The first one involved a metadata-driven tool that I developed; users reported that there was too much imperfect code to debug with the added complexity that dealing with clinical study data brings. That challenge resurfaced with another bespoke tool that someone else developed, and I opted to make things simpler: produce some boilerplate code and let users take things from there. Later, someone else again decided to have another go, seemingly with more success.

It is even more challenging when you are insufficiently familiar with the code that is being produced. That happened to me with shell scripting code from Google Gemini that was peppered with some Awk code. There was no alternative but to learn a bit more about the language from Tutorials Point and seek out an online book elsewhere. That did get me up to speed, and I will return to these when I am in need again.

Then, there was the time when I was trying to get a Julia script to deal with Google Drive needing permissions to be set. This started Google Gemini into adding more and more error checking code with try catch blocks. Since I did not have the issue at that point, I opted to halt and wait for its recurrence. When it did, I opted for a simpler approach, especially with the gdrive CLI tool starting up a web server for completing the process of reactivation. While there are times when shell scripting is better than Julia for these things, I added extra robustness and user-friendliness anyway.

During that second task, I was using VS Code with the GitHub Copilot plugin. There is a need to be careful, yet that can save time when it adds suggestions for you to include or reject. The latter may apply when it adds conditional logic that needs more checking, while simple code outputting useful text to the console can be approved. While that certainly is how I approach things for now, it brings up an increasingly relevant question for me.

How do we deal with all this code production? In an environment with myriads of unit tests and a great deal of automation, there may be more capacity for handling the output than mere human inspection and review, which can overwhelm the limitations of a human context window. A quick search revealed that there are automated tools for just this purpose, possibly with their own learning curves; otherwise, manual working could be a better option in some cases.

After all, we need to do our own thinking too. That was brought home to me during the Julia script editing. To come up with a solution, I had to step away from LLM output and think creatively to come up with something simpler. There was a tension between the two needs during the exercise, which highlighted how important it is to learn not to be distracted by all the new technology. Being an introvert in the first place, I need that solo space, only to have to step away from technology to get that when it was a refuge in the first place.

Anyone with a programming hobby has to limit all this input to avoid being overwhelmed; learning a programming language could involve stripping out AI extensions from a code editor, for instance. LLM output has its place, yet it has to be at a human scale too. That perhaps is the genius of a chat interface, and we now have Agentic AI too. It is as if the technology curve never slackens, at least not until the current boom ends, possibly when things break because they go too far beyond us. All this acceleration is fine until we need to catch up with what is happening.

Avoiding errors caused by missing Julia packages when running code on different computers

15th September 2024

As part of an ongoing move to multi-location working, I am sharing scripts and other artefacts via GitHub. This includes Julia programs that I have. That has led me to realise that a bit of added automation would help iron out any package dependencies that arise. Setting up things as projects could help, yet that feels a little too much effort for what I have. Thus, I have gone for adding extra code to check on and install any missing packages instead of having failures.

For adding those extra packages, I instate the Pkg package as follows:

import Pkg

While it is a bit hackish, I then declare a single array that lists the packages to be checked:

pkglits = ["HTTP", "JSON3", "DataFrames", "Dates", "XLSX"]

After that, there is a function that uses a try catch construct to find whether a package exists or not, using the inbuilt eval macro to try a using declaration:

# Returns true when the package loads, false when loading throws an error
tryusing(pkgsym) = try
    @eval using $pkgsym
    return true
catch e
    return false
end

The above function is called in a loop that both tests the existence of a package and, if missing, installs it:

for i in 1:length(pkglits)
    # Try loading the package by name
    rslt = tryusing(Symbol(pkglits[i]))
    if rslt == false
        # Install it when loading failed
        Pkg.add(pkglits[i])
    end
end

Once that has completed, using the following line to instate the packages required by later processing becomes error free, which is what I sought:

using HTTP, JSON3, DataFrames, Dates, XLSX

What to do when a GPG signature becomes invalid for a package repository on Linux Mint

12th September 2024

During a package update on my main Linux system, I encountered the following kind of error message:

An error occurred during the signature verification. The repository is not updated and the previous index files will be used. GPG error: https://cli.github.com/packages stable InRelease: The following signatures were invalid: EXPKEYSIG <GPG Key> GitHub CLI

The message indicated a problem with GPG signature verification for the GitHub CLI repository. The EXPKEYSIG code means that the key used to sign the repository had expired, so the signature was treated as invalid and the package manager would not update the repository's index files. The first step then was to remove the expired GPG key using the following command:

sudo apt-key del <GPG Key>

With the invalid GPG key removed, the next step is to add the new GPG key for the GitHub CLI repository by issuing the following command:

curl -fsSL https://cli.github.com/packages/githubcli-archive-keyring.gpg | sudo tee /usr/share/keyrings/githubcli-archive-keyring.gpg > /dev/null

Once I had the new GPG key, I was able to use my usual system update process without any problem. The error message was gone, and updates and upgrades proceeded as intended.
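The key ID needed for the removal step appears in the error text straight after EXPKEYSIG, so it can be picked out with sed instead of being copied by hand. The following is a minimal sketch: the function name expired_key_id is hypothetical, the key ID in the example is made up, and the sketch assumes that the ID is a run of at least eight hexadecimal digits.

```shell
# Pull the key ID that follows EXPKEYSIG out of apt's error text (read from stdin).
# Prints nothing when no such code is present.
expired_key_id() {
    sed -n 's/.*EXPKEYSIG \([0-9A-Fa-f]\{8,\}\).*/\1/p'
}

# Example with a made-up key ID:
#   echo "... were invalid: EXPKEYSIG 0123456789ABCDEF GitHub CLI" | expired_key_id
# Real use might look like this (an assumption; it needs root access and a network connection):
#   sudo apt-get update 2>&1 | expired_key_id
```

The printed ID is what would be passed to the apt-key del command shown earlier.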

Version control of large files on GitHub

27th August 2024

When you try pushing large files to a GitHub repository, you may find that you breach its 100 MB limit. When you do, you either need to buy a data pack or exclude the file from being tracked. In my case, I decided that the monthly fee for 50 GB was not overly onerous, so I added that. Excluding such files using the .gitignore functionality makes a lot of sense, too.

If you decide to proceed as I did, you will need to install git-lfs. Since that may vary by operating system, I am leaving it to you to look for those details on the website that I have linked to earlier. Activating it for your user account needs the following:

git lfs install

Following that, you need to flag the file or type of file using a command like the following:

git lfs track "[file path with name or search pattern]"

Executing the above adds the file path including the file name or the search pattern (normal operating system wildcards like * work here) to a file named .gitattributes in the root of the repository folder hierarchy. If that file does not yet exist, it will get created the first time that this is done. It will also need to be added to the repository using git add like any other file. A general command like the following will also do it anyway, since it covers everything in the relevant folder:
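To check what has ended up in .gitattributes, running git lfs track without any arguments lists the tracked patterns. Where git-lfs is not to hand, the file can be read directly, since each tracked entry carries a filter=lfs attribute. This is a small sketch with a hypothetical function name, lfs_patterns, and it assumes that the pattern is the first whitespace-separated field on each line.

```shell
# List the patterns in a .gitattributes file that are routed through Git LFS.
# Lines written by "git lfs track" look like:
#   *.zip filter=lfs diff=lfs merge=lfs -text
lfs_patterns() {
    attr_file=${1:-.gitattributes}
    # A missing file simply means that nothing is tracked yet
    [ -f "$attr_file" ] || return 0
    awk '/(^|[ \t])filter=lfs([ \t]|$)/ { print $1 }' "$attr_file"
}

# Example, run from the root of a repository:
#   lfs_patterns
```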

git add .

After making a commit, the next step is to push the contents into GitHub. At this stage, the large file or files will be recognised and sent to large file storage with only a text link in the main area. Everything else will be handled as normal.

While on this subject, I need to add a few words of warning. Pushing a large file to GitHub without doing things up front will cause the operation to fail. That may make the transition over to large file storage all the more tricky, since things will be out of order. Moving everything to a temporary folder and again cloning the repository was how I got out of this impasse when it happened to me. Then, I could get the large file handling set up before getting going again. It is better to sort things like this out at the start of the process, rather than attempting to remedy things part way through the process.
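One way to sort this out up front is to look for oversized files before the first commit. The sketch below is an assumption about how that might be done rather than anything that Git itself provides: find_large_files is a hypothetical name, the default limit of 100 is taken from the figure mentioned above, and the M size suffix relies on GNU or BSD find.

```shell
# Print files under a directory that are larger than a size limit in MiB
# (default 100), skipping anything inside .git directories.
find_large_files() {
    dir=${1:-.}
    limit_mib=${2:-100}
    find "$dir" -type f ! -path '*/.git/*' -size "+${limit_mib}M"
}

# Example, run from the root of a repository:
#   find_large_files . 100
```

Anything that this lists is a candidate for git lfs track or for an entry in .gitignore before pushing.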

Keeping a file or directory out of a Git or GitHub repository

26th August 2024

Recently, I have begun to do more version control of files with Git and GitHub. However, GitHub is not a place to keep files with login credentials. Thus, I wanted to keep these locally but avoid having them being tracked in either Git or GitHub.

Adding the names to a .gitignore file will avoid their inclusion prospectively, but what can you do if they get added in error before you do? The answer that I found is to execute a command like the following:

git rm -r --cached [path to file or directory with its name]

That removes it from Git's index, so it is no longer tracked, while leaving the copy in your working directory alone; the .gitignore functionality can then do its job. The -r switch makes the command recursive, should you be working with the contents of a directory, and the --cached flag is what limits the removal to the index.
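Since the ignore entry and the removal from the index go together, the first half can be made repeatable with a small helper that only appends to .gitignore when the entry is missing. This is a sketch with a hypothetical function name, ignore_path, and placeholder file names; the Git commands in the comments are the ones described above.

```shell
# Append an entry to a .gitignore file unless an identical line is already there.
ignore_path() {
    entry=$1
    ignore_file=${2:-.gitignore}
    # Make sure the file exists so that grep has something to read
    touch "$ignore_file"
    grep -qxF -e "$entry" "$ignore_file" || printf '%s\n' "$entry" >> "$ignore_file"
}

# Typical sequence for a file added by mistake (the file name is a placeholder):
#   ignore_path "credentials.json"
#   git rm --cached "credentials.json"
#   git commit -m "Stop tracking credentials file"
```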

While the aforementioned worked for me when I had an oversight, the following is also suggested:

git update-index --assume-unchanged [path to file or directory with its name]

That may work without a .gitignore file, which was not how I was doing things. Nevertheless, it may have its uses for someone else, so that is why I include it above.

Choosing a desktop Markdown editor for producing grammar-checked static website content

8th November 2022

Earlier this year, I changed over two websites from dynamic versions using content management systems to static ones by using Hugo to build them from Markdown files. That meant that I needed to look at the editing of Markdown, even if it is a fairly simple file format. For one thing, Grammarly can be incorporated into WordPress, so I did not want to lose something like that.

The latter point meant that I was steered away from plain text editors. Otherwise, there are online ones like StackEdit and Dillinger, but the Firefox Grammarly plugin only appears to work on the first of these, and even then, only partially in my experience. While Dillinger does offer connections to online file storage providers like Google, Dropbox and OneDrive, I wanted to store files on my desktop for upload to a web server. It also works with GitHub, but I prefer to use another web hosting provider.

There are various specialised Markdown editors for desktop usage like Typora, ReText, Formiko or Ghostwriter, yet I chose none of these. My actual choice may surprise many: it was Visual Studio Code. The availability of a Grammarly plug-in was what swayed it for me, even if it did need to be switched on for Markdown files. In many ways, it does not work as smoothly as elsewhere because it gets fooled by links and other code-like pieces of text. Also, having the added ability to add words to a custom dictionary would be ideal. Some rule overriding is available, but I am not sure that everything is covered, even if the list of options is lengthy. Some time is needed to inspect all of them before I proceed any further. Thus far, things are working well enough for me.

Some books and other forms of documentation on R

11th September 2021

The thrust of an exhortation from a computing handbook publisher comes to mind here: don't just look things up on Google, read a book so you really understand what you are doing. A form of words like that was used to sell an eBook on GitHub, but the same sentiment applies to R or any other computing language. While using a search engine will get you going or add to existing knowledge, only a book or a training course will help to embed real competence.

In the case of R, there is a myriad of blogs out there that can be consulted, as well as function and package documentation on RDocumentation or rdrr.io. For the former, R-bloggers or R Weekly can make good places to start, while ones like Stats and R, Statistics Globe, STHDA, PSI's VIS-SIG and anything from Posit (including their main blog as well as their AI one) can be worth consulting. There is also RStudio Education and the NHS-R Community, which also have a GitHub repository together with a YouTube channel. Many packages have dedicated websites as well, so there is no lack of documentation with all of these, so here is a selection:

To come to the real subject of this post, R is unusual in that books that you can buy also have companion websites that contain the same content with the same structure. Whatever funds this approach (and some appear to be supported by RStudio itself by the looks of things), there certainly are many books available freely online in HTML as you will see from the list below, while a few do not have a print counterpart as far as I know:

R Programming for Data Science

R Markdown: The Definitive Guide

bookdown: Authoring Books and Technical Documents with R Markdown

blogdown: Creating Websites with R Markdown

pagedown: Create Paged HTML Documents for Printing from R Markdown

Dynamic Documents with R and knitr

Engineering Production-Grade Shiny Apps

Outstanding User Interfaces with Shiny

Happy Git and GitHub for the useR

Many of the above have counterparts published by O'Reilly or Chapman & Hall, to name the two publishers that I have found so far. Aside from sharing these with you, there is also the personal motivation of having the collection of links somewhere so I can close tabs in my Firefox session. There are other web articles open in other tabs that I need to retain and share, but these will need to do for now, and I hope that you find them as useful as I do.