TOPIC: APT

Adding Microsoft core fonts to Debian

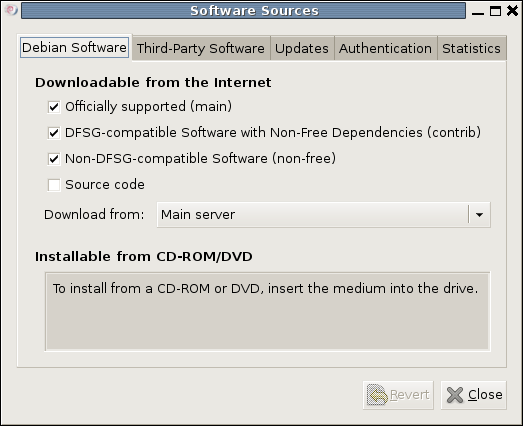

18th June 2009

When setting up Ubuntu, I usually add in Microsoft's core fonts by installing the msttcorefonts package using either Synaptic or apt-get. Though I am not sure why I didn't try doing the same thing for Debian until now, it's equally feasible. Just pop over to System > Administration > Software Sources and ensure that the check-boxes for the contrib and non-free categories are ticked, as in the screenshot above.

You could also achieve the same end by editing /etc/apt/sources.list and adding the non-free and contrib keywords to make lines look like these before issuing the command apt-get update as root:

deb http://ftp.debian.org/debian/ lenny main non-free contrib

deb-src http://ftp.debian.org/debian/ lenny main non-free contrib

All that you are doing with the manual editing route is performing the same operations that the more friendly front end would do for you anyway. After that, it's a case of going with the installation method of your choice and restarting Firefox or IceWeasel to see the results.
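For anyone who would rather script the manual edit, here is a minimal sketch using sed. The function name add_components is made up for illustration, and it assumes that the existing deb and deb-src lines list only main. It prints the amended lines rather than touching the real file, so the result can be inspected first.

```shell
# Print the contents of a sources file with "non-free contrib" appended
# to every deb/deb-src line that currently ends in "main".
# add_components is a hypothetical helper name, not part of APT.
add_components() {
    sed -E 's/^(deb(-src)?[[:space:]].*[[:space:]]main)[[:space:]]*$/\1 non-free contrib/' "$1"
}

# Demonstration on a scratch file rather than /etc/apt/sources.list.
sample=$(mktemp)
printf '%s\n' \
    'deb http://ftp.debian.org/debian/ lenny main' \
    'deb-src http://ftp.debian.org/debian/ lenny main' > "$sample"
result=$(add_components "$sample")
printf '%s\n' "$result"
rm -f "$sample"
```

Once the output looks right, the same expression could be applied to /etc/apt/sources.list as root (after taking a backup copy), followed by apt-get update and apt-get install msttcorefonts.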

Ubuntu 9.04 and Tracker

30th April 2009

Shortly after it was released, I did the upgrade shuffle very painlessly, and it didn't take up so much time either. There was only one issue: Tracker falling over, complaining about corrupted indices. That got it removed from my system using apt-get remove (apt-get purge is another option, especially if you need to rid yourself of nefarious configuration files). After having a bit of a dig around the web, I found that I wasn't the only one seeing the problem. To me, it looks as if the upgrade to 9.04 doesn't work so well when it comes to Tracker, and it needs to be removed and reinstalled to ensure that all required dependencies are correctly set in place. Since I restored it on my system, all is working without complaint.

Other than the Tracker issue, it has been a case of another uneventful upgrade. Though the evolutionary path that Ubuntu is following may disappoint anyone looking for excitement, no one would upgrade every six months if they knew that disruptive damage or upheaval might be caused. While I may do a clean installation at some point, that is well down the priority list right now.
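The remove-and-reinstall cycle described above might be sketched as follows. The package name tracker is an assumption about how the package was named on 9.04, and the run helper is hypothetical: it only prints each command unless DRY_RUN=0 is set, so nothing changes until you are ready.

```shell
# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Remove a package, then install it again so its dependencies are re-resolved.
reinstall_package() {
    pkg=$1
    # Swap "remove" for "purge" to discard configuration files as well.
    run sudo apt-get remove "$pkg" &&
        run sudo apt-get install "$pkg"
}

# "tracker" is an assumed package name.
plan=$(DRY_RUN=1 reinstall_package tracker)
printf '%s\n' "$plan"
```

Running the function with DRY_RUN=0 would execute the two commands for real, stopping if the removal fails.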

Ubuntu upgrades: do a clean installation or use Update Manager?

9th April 2009

Part of some recent "fooling" brought on by the investigation of what turned out to be a duff DVD writer was a fresh installation of Ubuntu 8.10 on my main home PC. It might have brought on a certain amount of upheaval, but it was nowhere near as severe as that following the same sort of thing with a Windows system. While a few hours was all that was needed, it did leave me wondering whether it is better to perform just an upgrade every time a new Ubuntu release is unleashed on the world or to go for a complete virgin installation instead. With Ubuntu 9.04 in the offing, that question takes on a more immediate significance than it otherwise might do.

Various tricks make the whole reinstallation idea more palatable. For instance, many years of Windows usage have taught me the benefits of separating system and user files. The result is that my home directory lives on a different disk to my operating system files. Add to that the experience of being able to reuse that home drive across different Linux distros, and even swapping from one distro to another becomes feasible. From various changes to my secondary machine, I can vouch that this works for Ubuntu, Fedora and Debian; the latter is what currently powers the said PC. Though you might have to use superuser powers to attend to ownership and access issues, the portability is certainly there, and it applies to anything kept on other disks too.
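The ownership fix mentioned above usually comes down to a recursive chown. One possible cause, and this is only a guess rather than anything diagnosed here, is that the same user name maps to different numeric user IDs on different distros. The sketch below uses a hypothetical helper name and demonstrates on a scratch directory; on a real home directory it would need sudo.

```shell
# Hand every file under a directory tree to the named user.
# reclaim_tree is a hypothetical helper name.
reclaim_tree() {
    target=$1
    owner=$2
    chown -R "$owner" "$target"
}

# Demonstration on a scratch directory owned by the current user,
# so no superuser rights are needed here.
demo=$(mktemp -d)
touch "$demo/notes.txt"
reclaim_tree "$demo" "$(id -un)"
if [ -O "$demo/notes.txt" ]; then
    status="owned"
else
    status="not owned"
fi
echo "notes.txt is $status by $(id -un)"
rm -rf "$demo"
```

Running ls -ln on the reused home directory beforehand shows the numeric owner of each file, which is a quick way to see whether any fix is needed at all.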

Naturally, there's always the possibility of losing programs that you have had installed, but losing the clutter can be liberating too. However, assembling a script made up of one or more apt-get install commands can allow you to get many things back at a stroke. For example, I have a test web server (Apache/MySQL/PHP/Perl) set up, so this would be how I'd get everything back in place before beginning further configuration. It might be no bad idea to back up your collection of software sources, either; I have yet to add all the ones that I have been using back into Synaptic.

Then there are closed source packages such as VirtualBox (yes, I know that there is an open-source edition) and Adobe Reader. After reinstating the former, all my virtual machines were available for me to use again, without further ado. Restoring the latter allowed me to grab version 9.1 (probably more secure anyway), and it now inveigles itself into Firefox too. Thanks to that Windows-like ability to see a PDF loaded in a browser tab, the number of times that I need to go through the download shuffle before seeing the contents of a PDF is much reduced, though not eliminated.

Moving from software to hardware for a moment, it looks like any bespoke actions such as my activating an Epson Perfection 4490 Photo scanner need to be repeated, but that was all that I had to do. Getting things back into order is not so bad, even if you have to allow a modicum of time for this.
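A script of apt-get install commands like the one mentioned above could be driven from a plain-text package list. What follows is a sketch only: build_install_command is a made-up helper, and the package names apache2, mysql-server and php5 are illustrative assumptions rather than a record of what was actually installed.

```shell
# Build a single apt-get install command from a package list file.
# Blank lines and lines starting with '#' are skipped.
# build_install_command is a hypothetical helper name.
build_install_command() {
    pkgs=$(grep -Ev '^[[:space:]]*(#|$)' "$1" | tr '\n' ' ')
    printf 'sudo apt-get install %s\n' "${pkgs% }"
}

# Demonstration with an illustrative list; the package names are assumptions.
list=$(mktemp)
printf '%s\n' '# test web server' 'apache2' 'mysql-server' '' 'php5' > "$list"
cmd=$(build_install_command "$list")
printf '%s\n' "$cmd"
rm -f "$list"
```

Keeping the list file on the home drive, alongside copies of /etc/apt/sources.list and anything in /etc/apt/sources.list.d/, would mean that both the packages and the software sources survive a reinstallation.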

What I have discussed so far is what might be categorised as the common or garden aspects of a clean installation, yet I have seen some behaviours that make me wonder if the usual Ubuntu upgrade path is sufficiently complete in its refresh of your system. The counterpoint to all of this is that I may not have been looking for some of these things before now. That may apply to my noticing that DSLR support seems to be better, with my Canon and Pentax cameras both being picked up and mounted for me as soon as they are connected to a PC, the caveat being that they are themselves powered on for this to happen. Another surprise that may be new is that the BBC iPlayer's Listen Again works without further work from the user, a very useful development. It obviously wasn't that way before I took the more invasive route. My previous tweaking might have prevented the in situ upgrade from doing its thing, but I do see the point of not upsetting people's systems with an overly aggressive update process, even if it means that some advances do not make themselves known.

So what's my answer regarding which way to go once Ubuntu Jaunty Jackalope appears? For the sake of avoiding initial disruption, I'd be inclined to go down the Update Manager route first, while reserving the right to do a fresh installation later on. All in all, I am left with the gut feeling that the jury is still out on this one.

A quick way to do an update

8th August 2008

Here's a quick way to get the latest updates on your PC using the command line if you are using Ubuntu or Debian:

sudo apt-get update && sudo apt-get upgrade

Of course, you can split these commands up if you prefer to look before you leap. At the very least, it's so much slicker than the GUI route.
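For the look-before-you-leap approach, apt-get has a simulation mode (-s) that reports what an upgrade would do without applying it. The two helper names below are made up for illustration; the sketch relies on simulated installations being listed on lines beginning with "Inst".

```shell
# Count the packages that a simulated upgrade would install or upgrade.
# Reads "apt-get -s upgrade" output on standard input.
count_pending() {
    grep -c '^Inst ' || true
}

# Refresh the package lists, then report how many packages are waiting.
# Nothing is installed; -s only simulates the upgrade.
preview_upgrade() {
    sudo apt-get update &&
        apt-get -s upgrade | count_pending
}
```

Calling preview_upgrade prints a single number, after which sudo apt-get upgrade can be run in the knowledge of how much is about to change.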

Cleaning up Ubuntu

1st June 2008

Uninstalling software still leaves any dependencies that came with it in place, so a quick way to get rid of any detritus is always useful. Here's a command that achieves this in a painless manner:

sudo apt-get autoremove

Just say yes to what it suggests, to allow it on its way.

A fallback installation routine?

9th November 2007

In a previous sustained spell of Linux meddling, the following installation routine was one that I encountered rather too often when RPMs didn't do what I required of them (having a SUSE distro in a world dominated by a Red Hat standard didn't make things any easier...):

tar xzvf progname.tar.gz; cd progname

The first part of the command extracts from a tarball compressed using gzip and the second one changes into the new directory created by the extraction. For files compressed with bzip2 use:

tar xjvf progname.tar.bz2; cd progname
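The two extraction variants above could be folded into one small helper that picks the tar flag from the file name. This is only a sketch, with extract_tarball being a made-up name, and the demonstration builds its own throwaway archive rather than using a real package.

```shell
# Extract a source tarball, choosing the decompression flag from the file name.
# extract_tarball is a hypothetical helper name.
extract_tarball() {
    case "$1" in
        *.tar.gz|*.tgz)   tar xzf "$1" ;;
        *.tar.bz2|*.tbz2) tar xjf "$1" ;;
        *) echo "unrecognised archive type: $1" >&2; return 1 ;;
    esac
}

# Demonstration on a throwaway archive built in a scratch directory.
work=$(mktemp -d)
cd "$work" || exit 1
mkdir progname
echo "demo" > progname/README
tar czf progname.tar.gz progname
rm -r progname
extract_tarball progname.tar.gz
contents=$(cat progname/README)
echo "README says: $contents"
cd - >/dev/null || exit 1
rm -rf "$work"
```

Anything with an unfamiliar extension is rejected with a non-zero status, so the helper can be chained with && ahead of the cd step.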

The command below configures, compiles and installs the package, with the last part run as root in its own shell. Chaining the steps with && means that a failure at any stage stops the rest from running.

./configure && make && su -c "make install"

Yes, the procedure is a bit convoluted, but it would have been fine if it always worked. My experience was that the process was a far from foolproof one. For instance, an unsatisfied dependency is all that is needed to stop you in your tracks. Attempting to install a GNOME application on a KDE-based system is as good a way to encounter this result as any. Other horrid errors also played havoc with hopeful plans from time to time.

It shouldn't surprise you to find that I will be staying away from the compilation/installation business with my main Ubuntu system. Synaptic Package Manager and its satisfactory dependency resolution fulfil my needs well and there is the Update Manager too; I'll be leaving it for Canonical to do the testing and make the decisions regarding what is ready for my PC as they maintain their software repositories. My past tinkering often created a mess, and I'll be leaving that sort of experimentation for the safe confines of a virtual machine from now on...

Setting up a test web server on Ubuntu

1st November 2007

Installing all the bits and pieces is painless enough so long as you know what's what; Synaptic does make it thus. Interestingly, Ubuntu's default installation is a lightweight affair, with any additional components needing to be downloaded as packages from the web. The whole process is all very well integrated and doesn't make you sweat every time you need to install additional software. In fact, it resolves any dependencies for you so that those packages can be put in place too; it lists them, you select them and Synaptic does the rest.

Returning to the job in hand, my shopping list included Apache, Perl, PHP and MySQL, the usual suspects in other words. Perl was already there, as it is on many UNIX systems, so installing the appropriate Apache module was all that was needed. PHP needed the base installation as well as the additional Apache module. MySQL needed the full treatment too, though its being split up into different pieces confounded things a little for my tired mind. Then, there were the MySQL modules for PHP to be set in place too.

The addition of Apache preceded all of these, but I have left it until now to describe its configuration, something that took longer than for the others; the installation itself was as easy as it was for the others. However, what surprised me were the differences in its configuration setup when compared with Windows. There are times when we get the same software but on different operating systems, which means that configuration files get set up differently. The first difference is that the main configuration file is called apache2.conf on Ubuntu rather than httpd.conf as on Windows. Like its Windows counterpart, Ubuntu's Apache does use subsidiary configuration files. However, there is an additional layer of configurability added courtesy of a standard feature of UNIX operating systems: symbolic links. Rather than having a single folder with all the configuration files stored therein, there are two pairs of folders, one pair for module configuration and another for site settings: mods-available/mods-enabled and sites-available/sites-enabled, respectively. In each pair, there is a folder with all the files and another containing symbolic links. It is the presence of a symbolic link for a given configuration file in the latter that activates it. I learned all this when trying to get mod_rewrite going and changing the web server folder from the default to somewhere less susceptible to wrecking during a re-installation or, heaven forbid, a destructive system crash. It's unusual, but it does work, even if it takes that little bit longer to get things sorted out when you first meet up with it.
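To make the symbolic link mechanism concrete, here is a minimal sketch that mimics the mods-available/mods-enabled pair in a scratch directory. The helper name enable_by_symlink is made up, and using rewrite.load as the file for mod_rewrite is an assumption about the file naming; nothing here touches a real Apache installation.

```shell
# Activate a configuration file by linking it from <kind>-available
# into <kind>-enabled, where kind is "mods" or "sites".
# enable_by_symlink is a hypothetical helper name.
enable_by_symlink() {
    conf_root=$1   # e.g. /etc/apache2 on a real system
    kind=$2        # "mods" or "sites"
    name=$3        # file name inside <kind>-available
    ln -s "../${kind}-available/${name}" "${conf_root}/${kind}-enabled/${name}"
}

# Demonstration in a scratch directory that copies the folder layout.
demo=$(mktemp -d)
mkdir "$demo/mods-available" "$demo/mods-enabled"
touch "$demo/mods-available/rewrite.load"
enable_by_symlink "$demo" mods rewrite.load
link_target=$(readlink "$demo/mods-enabled/rewrite.load")
echo "rewrite.load -> $link_target"
rm -rf "$demo"
```

On a real system, Debian and Ubuntu supply a2enmod and a2ensite (with a2dismod and a2dissite as their opposites) to manage these links, so sudo a2enmod rewrite followed by a restart of Apache should achieve the same end without any manual linking.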

Apart from the Apache set up and finding the right things to install, getting a test web server up and running was a fairly uneventful process. All's working well now, and I'll be taking things forward from here; making website Perl scripts compatible with their new world will be one of the next things that need to be done.