A pleasant surprise…

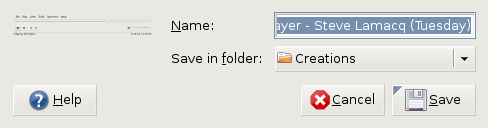

4th December 2007

Yesterday, when taking the screen grab for my post on Quanta Plus, I did the Alt + Print Screen shuffle as usual. This time, though, I was greeted with a dialogue box asking me where I wanted to store the resulting PNG file and what I wanted to call it. It was as swish as that. On Windows, the screenshot gets stuffed into the clipboard for you to extricate with your graphics editor of choice, so this was a pleasant surprise. It's the sort of thing that makes a good impression, and it is striking that Linux seems to be ahead of Windows on this one. Who said Linux was less than user-friendly?

Setting up Quanta Plus to edit files on your web server

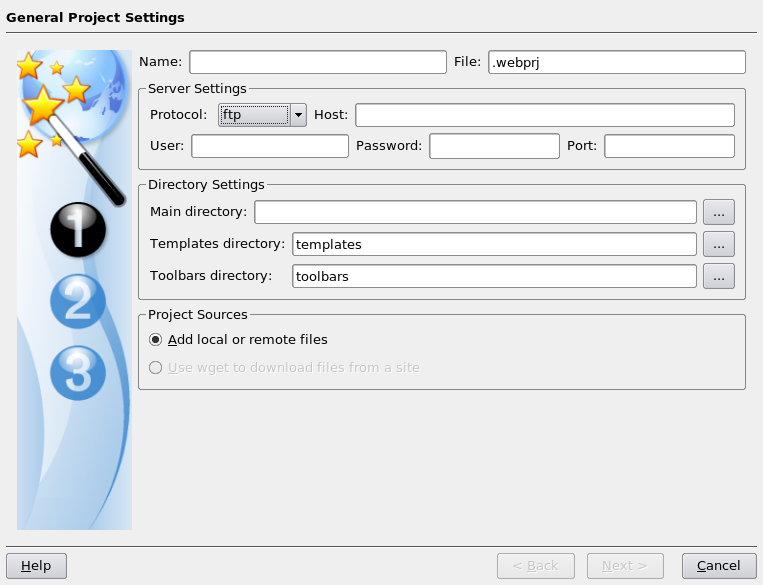

3rd December 2007

On Saturday, my hillwalking and photo gallery website suffered an outage after Fasthosts, the site's hosting provider, had a security breach and decided to change all my passwords. Without boring you with the details, I had to change the password for my MySQL database from their unmemorable suggestion, which meant updating the configuration file for the hillwalking blog too. To do this, I set up Quanta Plus to edit the requisite file on the server itself. That involved creating a new project, setting the protocol to FTP and completing the details in the wizard, all relatively straightforward stuff. Since I am in the habit of doing this from Dreamweaver, it's nice to see that an open-source alternative provides the same sort of functionality.

Why I will be keeping Windows close to hand for a while to come after a switch to Linux

2nd December 2007

Even though I have moved to Linux, and it has been fulfilling nearly all of my home computing needs, I retain, and plan to keep, access to Windows courtesy of virtualisation technology. Though keeping current with the ever-pervasive Windows is one motivation, there are others. In fact, now that Windows is more of a sideline, I may even get my hands on Vista at some point to take a more in-depth look at it, hopefully without having to suffer the consequences of my curiosity.

Talking of other reasons for hanging onto Windows, listening to music secured by DRM comes to mind. DRM is seen in a negative light by many in the open-source world, so Linux remains unencumbered by the beast. That isn't necessarily a bad thing, and the whole furore about Vista and DRM earlier this year had me wondering about a Linux future. However, I have been known to buy music from iTunes and would like to continue doing so. Though WINE might be one way to achieve this, retaining Windows seems a sounder option. That way, I am saved from having to convert my protected music files into either Ogg Vorbis or FLAC; FLAC uses lossless compression, unlike Ogg Vorbis, so its files are bigger but keep the quality that an audiophile would seek. MP3 is another option, yet there are those in the Linux world who frown upon anything patented, which makes getting MP3 support an additional task for those of us wanting it.

In my wisdom, I have succumbed to the delights of expensive web development tools like Altova's XMLSpy and Adobe's Dreamweaver. While I have found a way to get Quanta Plus to edit files on the web server directly, and code hacking is my main way of improving my websites, I will still have a bimble into Dreamweaver from time to time. I have yet to see XMLSpy's grid view replicated in the open-source world, so that should remain a key tool in my arsenal. Although I haven't been looking too hard at open-source XML editors recently, there is functionality in XMLSpy that I have yet to explore, and I really should see whether it could be harnessed.

While I have implicitly referred to this already, it needs saying that keeping Windows around also allows you to continue using familiar software. For some, this might be Microsoft Office, but OpenOffice and Evolution have usurped it in my case. Photoshop Elements is a better example for me. Digital transfers from scanners and DSLRs will stay in the world of Linux, while virtualisation allows me to process the images in whatever way I want. For now, I might just stick with the familiar before jumping ship to GIMP at some point in the future. With so much written about Photoshop, having it there for learning new things seems a very sensible idea.

While open-source software can conceivably address every possible need, there are bound to be niches that remain outside its reach. I use mapping software from Anquet when planning hillwalking excursions. It very much seems to be a Windows-only offering, and I have already downloaded a good amount of mapping, so Windows has to stay if I am to use it along with the routes that I have already plotted. Another piece of software in this bracket is my copy of SAS Learning Edition; there are times when a spot of learning at home goes a long way at work.

So, in summary, my reasons for keeping Windows around are as follows:

- Learning new things about Windows itself, since I am unlikely to escape its influence in the world of work

- Using iTunes to download new music and to continue to listen to what I have already

- Using and learning about industry standard web development tools like Dreamweaver and XMLSpy

- Easing the transition, by continuing to use Photoshop Elements, for example

- Using niche software like Anquet mapping

Though I suppose that many will relate to the above, Linux still has plenty of scope to take over some of these roles. In time, DRM may disappear from the music scene, and not before time; accountants and shareholders may need to learn to trust customers. Nvu and Quanta Plus could yet usurp Dreamweaver, and there may be an open-source alternative to XMLSpy like there is in so many other areas. The Photoshop versus GIMP choice will continue to present itself, and all that is written about the former makes it seem silly to throw it away, however good the latter is. Even with a changeover to Linux equivalents of applications that fulfil standard needs, there remain niche applications like hillwalking mapping and that, together with the need to know what Windows might offer in the enterprise space, could be the enduring reason for keeping it near to hand. That said, I can now go through whole days without firing up a Windows VM, a big change from how things were a few months ago. Still, I suppose that it's all too easy to stick with one operating system at a time, which is Linux for me these days.

Setting up automatic Firefox updates on Ubuntu with Ubuntuzilla

1st December 2007

No sooner had we received Firefox 2.0.0.10 than talk began of 2.0.0.11. Apparently, the latest update broke support for a tag that I have never used: canvas. This is the sort of thing that makes you wonder about their quality control.

Because 2.0.0.10 was a security update, Ubuntu offered it to me without any effort on my part. However, since I use Ubuntuzilla, the update didn't reach my copy of Firefox without further intervention. Launching Firefox using the gksu command allowed me to update it as I have been doing on Windows: Help > Check for Updates... Now, I have a more permanent check set up, thanks to issuing the following command:

```sh
ubuntuzilla.py -a installupdater -p firefox
```
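Any update check has to decide whether the version on offer is newer than the one installed, and dotted version numbers hide a trap: compared as plain text, 2.0.0.10 sorts before 2.0.0.9. The minimal Python sketch below compares them numerically instead. It only illustrates the general idea, assumes purely numeric dot-separated versions, and is not taken from Ubuntuzilla or Firefox's own updater; `parse_version` and `is_newer` are hypothetical names.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Split a dotted version such as '2.0.0.10' into integers: (2, 0, 0, 10).

    Assumes every component is numeric; something like '3.0b1' would raise ValueError.
    """
    return tuple(int(part) for part in version.split("."))


def is_newer(candidate: str, installed: str) -> bool:
    """Return True if candidate is a later release than installed.

    Tuples of ints compare element by element, so 10 > 9 numerically,
    whereas the strings '2.0.0.10' and '2.0.0.9' differ at '1' versus '9'.
    """
    return parse_version(candidate) > parse_version(installed)


if __name__ == "__main__":
    print("2.0.0.10" > "2.0.0.9")            # plain string comparison gets it wrong
    print(is_newer("2.0.0.10", "2.0.0.9"))   # numeric comparison gets it right
    print(is_newer("2.0.0.11", "2.0.0.10"))
```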

Controlling what the wpgm command calls in Windows SAS

30th November 2007

Recently, I was setting up a key mapping in SAS 8.1 so that the log and output windows are cleared and a SAS program is run in the most recently used program editor window. The idea was to make debugging easier, and the command was as follows:

```
log; clear; output; clear; wpgm; submit
```

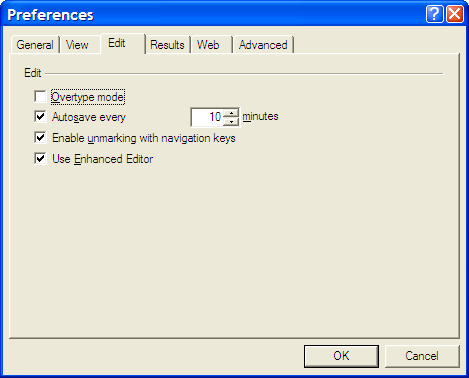

However, I was having trouble getting SAS to pick up the most recently used Enhanced Editor window, and it was opening an old-style Program Editor window in its place. If I had wanted to use that, I would have used pgm and not wpgm. What was conspiring against me was a pesky system option. Pottering over to Tools > Options > Preferences and navigating to the Edit tab brought me to the cause of the problem: the Use Enhanced Editor check box was unticked, and ticking it set me on my way. SAS 9 could also be afflicted by the same irritation, and that is where I got the screenshot above, where everything is hunky-dory.

Correcting or updating blog posts

29th November 2007

Recently, I have noticed that certain bloggers do not remove old content from a post, only striking it through using something like CSS. Though there is a place for this, it does strike me as overkill sometimes and can look untidy. Then again, that lack of neatness is sometimes a trade-off, since highlighting a correction itself conveys a message. While I have been known to tweak posts immediately after publishing without leaving the previous content on view, my changes are generally readability improvements more than anything else. Typos and spelling mistakes also get corrected like this; nobody needs them highlighted for all to see. I am not trying to fool anyone and, if I want to update the content, I either add another post or use another tactic that I have seen others use: updates at the bottom of the post that are denoted as such. It's another transparent approach that preserves the authenticity of the piece.

Using SAS FILE statements with the MOD option to append output to plain text files

25th November 2007

SAS can generate many types of output: plain text, XML, PDF, RTF, Excel, etc. With all of these and SAS procedures like PROC REPORT, PROC TABULATE and so on, it might seem surprising for me to say that I have been generating output with data step PUT and FILE statements. There was, of course, a reason for this: creating text files for loading into a new database-driven software application. At one stage, I also did some data interleaving at the output stage, and that's when I discovered that the default behaviour of a SAS FILE statement is to overwrite the file completely unless the MOD option is specified. Adding that option switches on append behaviour. The code below adds a header in one step and appends data below it in another. While I know that there are better ways to achieve this, like setting up your data as you want it or using _N_ to ensure that something only appears once, here's another way. As with Perl, there's often more than one way to do something with SAS.
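For comparison, the same overwrite-versus-append distinction shows up as file modes in most languages. In the minimal Python sketch below, which is only an analogy and not part of the SAS workflow, mode "w" truncates the file like a FILE statement without MOD, while mode "a" appends like FILE ... MOD. The function names, file name and sample row are hypothetical; the field layout mirrors the header in the SAS code that follows.

```python
import tempfile
from pathlib import Path

# Header row copied from the SAS example: six semicolon-separated fields.
HEADER = "fieldtype;datasetname;datasetlabel;datasetlayout;datasetclass;datasetstandardversion"


def write_header(path: Path) -> None:
    """Write the header, replacing any existing content (like FILE without MOD)."""
    with path.open("w") as out:  # "w" truncates the file first
        out.write(HEADER + "\n")


def append_rows(path: Path, rows: list[tuple[str, str, str]]) -> None:
    """Append one line per (memname, memlabel, memver) row (like FILE ... MOD)."""
    with path.open("a") as out:  # "a" writes after the existing content
        for memname, memlabel, memver in rows:
            # rstrip() mirrors SAS trim(), which removes trailing blanks;
            # ";;;" leaves datasetlayout and datasetclass empty, as in the SAS code.
            out.write(
                f"datasetstandard;{memname.rstrip()};{memlabel.rstrip()};;;{memver.rstrip()}\n"
            )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "ds_data.txt"  # hypothetical file name
        write_header(target)
        append_rows(target, [("DS1", "Example dataset", "1.0")])  # hypothetical row
        print(target.read_text(), end="")
```

Calling `write_header` a second time would wipe out the appended rows, just as rerunning the header step without MOD would in SAS.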

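```sas
/* Step 1: write the header row. Without MOD, FILE replaces any existing content. */
/* ds_data is a fileref, assumed to be assigned by an earlier FILENAME statement. */
data _null_;
  file ds_data;
  put "fieldtype;datasetname;datasetlabel;datasetlayout;datasetclass;datasetstandardversion";
run;

/* Step 2: append one line per observation of ds_ispec. MOD appends instead of overwriting. */
data _null_;
  set ds_ispec;
  file ds_data mod;
  line="datasetstandard;"||trim(memname)||";"||trim(memlabel)||";;;"||trim(memver);
  put line;
run;
```

The irritation of a 4 GB file size limitation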

20th November 2007

Recently, I got myself a 500 GB Western Digital My Book, an external hard drive in other words. Bizarrely, the thing is formatted using the FAT32 file system. While I appreciate that backward compatibility with Windows 9x might seem desirable, NTFS would make more sense, particularly given that the last of the 9x line, Windows ME, is now seven years old (there cannot be anybody who still uses that, can there?). The result was that, last night, cp commands issued from the terminal on my Ubuntu system to copy files larger than 4 GB failed with core dump messages. It surprised me at first, but it turns out to be a FAT32 limitation: no single file on a FAT32 volume can reach 4 GB. The idea of reformatting the drive as NTFS did occur to me, only for GParted not to oblige, at least not with my current configuration. While ext3 is an option, I have a spare PC running Windows 2000, so that would be a step too far for now, unless I take the plunge and bring that machine into the Linux universe too.
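FAT32 stores each file's size in a 32-bit field, so the largest file it can hold is 2^32 - 1 bytes, one byte short of 4 GiB. A quick scan before copying avoids surprises halfway through a long cp. The minimal Python sketch below lists the files under a directory that are too big for a FAT32 volume; the function names are hypothetical, and it skips symbolic links rather than following them.

```python
import os
from pathlib import Path

# FAT32 records file sizes in a 32-bit field, so the maximum is 2**32 - 1 bytes.
FAT32_MAX_FILE_SIZE = 2**32 - 1  # 4,294,967,295 bytes


def fits_on_fat32(size_in_bytes: int) -> bool:
    """Return True if a file of this size can be stored on a FAT32 volume."""
    return size_in_bytes <= FAT32_MAX_FILE_SIZE


def too_big_for_fat32(root: str) -> list[tuple[Path, int]]:
    """Walk root and return (path, size) for every regular file over the FAT32 limit."""
    oversized = []
    # os.walk does not descend into symlinked directories by default.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink() or not path.is_file():
                continue  # only check real files, not links or special files
            size = path.stat().st_size
            if not fits_on_fat32(size):
                oversized.append((path, size))
    return oversized
```

Pointing `too_big_for_fat32` at a source folder before copying to the external drive would flag anything that needs splitting, or keeping elsewhere, first.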

Other than the 4 GB irritation, the new drive works well and was picked up and supported by Ubuntu without any hassle beyond getting it out of the box, finding a place for it on my desk and plugging in a few cables. Though I need to be careful about file sizes, it played an important role while I converted a 320 GB internal WD drive from NTFS to ext3, and it may yet be vital if my Windows 2000 box gets migrated to Linux. In the meantime, 500 GB is a lot of space, and having an external drive that size is a bonus these days, especially when you consider that the 1 terabyte threshold is on the verge of being crossed. It certainly makes DVDs, flash drives and other multi-gigabyte media seem less impressive than they otherwise might.

Do I still need serial numbers?

19th November 2007

My spot of bad luck with Windows in August highlighted the importance of hanging on to serial numbers for software that I had purchased over the internet and downloaded. Though I could get at the ones that I needed, they were kept in a motley mix of text files and emails; one was even rediscovered by pottering back to the website of the purveyor. While the safekeeping of the installation files themselves was another matter of some concern, I was rather more organised in that regard. Both are things that need checking before Windows falls to pieces on you and needs to be reinstalled. Of course, human nature being what it is, we often find ourselves picking up the pieces after a calamity has struck when a spot of planning would have made things that bit easier.

Linux does make life easier on this front: commercial applications are anything but the dominant force that they are in the world of Windows. That means that serial numbers are few and far between, and I only need the one for VMware Workstation. The mention of VMware brings me to my retention of Windows, so knowing where serial numbers are located remains a good idea. Beyond that, I have cloned my Windows VM so that any Windows restoration following a destructive crash should be a quicker affair. Now that I am a Linux user, Windows crashes should not encroach as much on my home computing, and Linux should be more stable anyway...

A different Firefox II: cross-platform font display issues

18th November 2007

One of the things that I have been sorting out on this blog is how the fonts appear in Firefox running on Ubuntu. Even with the same fonts and the same browser, serif fonts were being displayed smaller and looked fuzzier in Linux than on Windows, and that's even with the font sharpening that comes with turning on Ubuntu's visual effects. So, there was a spot of swapping between Ubuntu and Windows (running on VMware) while I increased font sizes to improve legibility on the Linux side without things going all Blue Peter on Windows. Along the way, I added a mention of the Ubuntu font ae_AlArabiya to the CSS to spruce things up further. In my earlier web building efforts, I had to make serif fonts bigger because of those serifs. From the on-screen legibility point of view, there's a lot to be said for sans serif fonts, and I may yet alter this blog's theme to use them instead, but I'll ponder the idea a bit more before taking the plunge.