Protecting your photos with copyright metadata using ExifTool

8th July 2013

There is a bill making its way through the U.K. parliament at this time that could reduce the power of copyright when it comes to images placed on the web. The current situation is that anyone who creates an image automatically holds the copyright for it. However, the new legislation will remove that if it becomes law as it stands. As it happens, the Royal Photographic Society is doing what it can to avoid any changes to what we have now.

Though there may be the barrier of due diligence, how many of us take steps to mark our own intellectual property? For one, I have been less than attentive to this and now wonder if there is anything more that I should be doing. While others may copyleft their images instead, I don't want to find myself unable to share my own photos because another party is claiming rights over them. There's watermarking as an option, yet I also want to add something to the image metadata.

That got me wondering about adding metadata to any images that I post online that asserts my status as the copyright holder. It may not be perfect, but any action is better than doing nothing at all. Given that I don't post photos where EXIF metadata is stripped as part of the uploading process, it should be there to see for anyone who bothers to check, and there may not be many who do.

Because I also wanted to batch process images, I looked for a command line tool to do the needful and found ExifTool. Being a Perl library, it is cross-platform so you can use it on Linux, Windows and even OS X. To install it on a Debian or Ubuntu-based Linux distro, just use the following command:

sudo apt-get install libimage-exiftool-perl

The form of the command that I found useful for adding the actual copyright information is below:

exiftool -P "-copyright=(c) John ..." -ext jpg -overwrite_original

The -P switch (note the capital letter; lowercase -p is a different option for formatted printing) preserves the timestamp of the image file, while the -overwrite_original one ensures that you don't end up with unwanted backup files. The copyright message goes within the quotes along with the -copyright option, and the file or directory to be processed goes at the end of the command. With a little shell scripting, you can traverse a directory structure and change the metadata for any image files contained in different sub-folders. If you wish to do more than this, there's always the user documentation to be consulted.
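The directory traversal mentioned above could be sketched along the following lines. This is only an illustration: tag_photos, its two arguments and the DRY_RUN variable are names made up for this example, and the sketch assumes that exiftool is on the PATH and that your find supports -iname. Note the capital -P, which is the ExifTool option for preserving the file modification time. By default, the function only prints the command that it would run.

```shell
# Hypothetical helper: tag_photos DIRECTORY "NOTICE"
# Adds a copyright notice to every JPEG found below DIRECTORY.
# The body runs in a subshell (parentheses) so its variables do not leak out.
tag_photos() (
    dir=$1
    notice=$2

    # DRY_RUN defaults to 1, in which case the command is echoed, not executed.
    if [ "${DRY_RUN:-1}" = "1" ]; then
        runner="echo"
    else
        runner=""
    fi

    # find walks every sub-folder and hands the matching files to exiftool.
    # $runner is left unquoted on purpose so that it vanishes when empty.
    find "$dir" -type f \( -iname '*.jpg' -o -iname '*.jpeg' \) \
        -exec $runner exiftool -P -overwrite_original "-copyright=$notice" {} +
)
```

Running tag_photos ~/Pictures "(c) Your Name" shows what would happen, while prefixing the call with DRY_RUN=0 makes the changes for real. ExifTool also has its own -r option for recursing into sub-directories, so the find wrapper is a matter of taste rather than a necessity.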

Building and installing Microsoft Core Fonts on Fedora 19 with RPM

6th July 2013

While I have a previous posting from 2009 that discusses adding Microsoft's Core Fonts to the then current version of Fedora, it did strike me that I hadn't laid out the series of commands that were used. Instead, I referred to an external and unofficial Fedora FAQ. That's still there, yet I also felt that I was leaving things a little to chance, given how websites can disappear quite suddenly.

Even after nearly four years, it still amazes me that you cannot install Microsoft's Core Fonts in Fedora as you would on Ubuntu, Linux Mint or even Debian. Therefore, the following series of steps is as necessary now as it was then.

The first step is to add in a number of precursor applications such as wget for command line file downloading from websites, cabextract for extracting the contents of Windows CAB files, rpmbuild for creating RPM installers, ttmkfdir for indexing TrueType fonts and xfs, the X Font Server that chkfontpath needs:

sudo yum -y install rpm-build cabextract ttmkfdir wget xfs

Here, I have gone with terminal commands that use sudo, but you could become the superuser (root) for all of this and there are those who believe you should. The -y switch tells yum to go ahead without prompting you for permission before it does any installations. The next step is to download the spec file for the Microsoft fonts package with wget:

sudo wget http://corefonts.sourceforge.net/msttcorefonts-2.0-1.spec

Once that is done, you need to install the chkfontpath package because the RPM for the fonts cannot be built without it:

sudo rpm -ivh http://dl.atrpms.net/all/chkfontpath

Once that is in place, you are ready to create the RPM file using this command:

sudo rpmbuild -ba msttcorefonts-2.0-1.spec

After the RPM has been created, it is time to install it:

sudo yum install --nogpgcheck ~/rpmbuild/RPMS/noarch/msttcorefonts-2.0-1.noarch.rpm

When installation has completed, the process is done. Because I used sudo, all of this happened in my own home area, so there was a need for some housekeeping afterwards. If you did it by becoming the root user, then the files would be in root's home area instead, and that's the scenario in the online FAQ.

A look at Windows 8.1

4th July 2013

Last week, Microsoft released a preview of Windows 8.1 and some hailed the return of the Start button, yet the reality is not as simple as that. Being a Linux user, I am left wondering if ideas have been borrowed from GNOME Shell instead of putting back the Start Menu like it was in Windows 7. What we have got is a smoothing of the interface that is there for those who like to tweak settings and not available by default. GNOME Shell has been controversial too, so borrowing from it is not an uncontentious move, even if there are people like me who are at home with that kind of interface.

What you get now is more configuration options to go with the new Start button. While right-clicking on the latter does get you a menu, this is no Start Menu like we had before. Instead, we get a settings menu with a "Shut down" entry. That's better than before, which might be saying something about what was done in Windows 8, and it produces a sub-menu with options of shutting down or restarting your PC as well as putting it to sleep. Otherwise, it is a place for accessing system configuration items and not your more usual software. That is not a bad thing, but it's best to be clear about these things. Holding down the Windows key and pressing X will pop up the same menu if you prefer keyboard shortcuts, and I have a soft spot for them too.

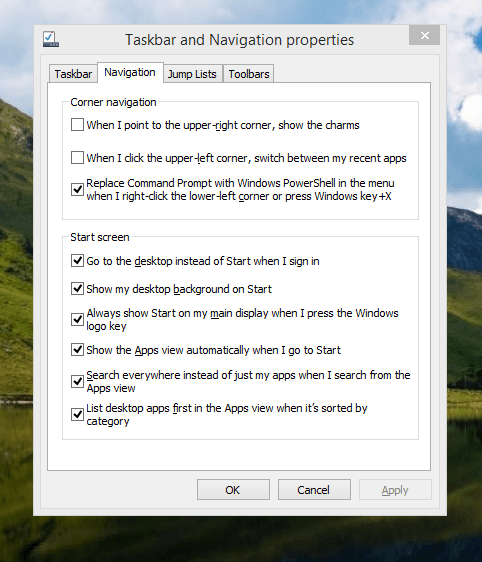

The real power is to be discovered when you right-click on the task bar and select Properties from the pop-up menu. Within the dialogue box that appears, there is the Navigation tab that contains a whole plethora of interesting options. Corner navigation can be scaled back to remove the options of switching between applications in the upper-left corner or getting what is called the Charms menu from the upper-right corner. Things get interesting in the Start Screen section. This is where you tell Windows to boot to the desktop instead of the Start Screen and adjust what the Start button gives you. For instance, you can make it use your desktop background and display the Start Screen Apps View. Both of these make the new Start interface less intrusive and make the Apps View feel not unlike the way GNOME Shell overlays your screen when you hit the Activities button or hover over the upper-left corner of the desktop.

It all seems rather more like a series of little concessions, and not the restoration that some (many?) would prefer. Classic Shell still works for all those seeking an actual Start Menu and even replaces the restored Microsoft Start button too. So, if the new improvements aren't enough for you, you still can take matters into your own hands until you start to take advantage of what's new in 8.1.

Apart from the refusal to give us back a Windows 7 style desktop experience, we now have a touchscreen keyboard button added to the taskbar. So far, it always appears there even when I try turning it off. For me, that's a bug, so it's something that I'd like to see fixed before the final release.

All in all, Windows 8.1 feels more polished than Windows 8 was and will be a free update when the production version is released. My explorations have taken place within a separate VMware virtual machine because updating a Windows 8 installation to the 8.1 preview forces a complete re-installation on you later on. Though there are talks about Windows 9 now, I am left wondering if going for point releases like 8.2, 8.3, etc. might be a better strategy for Microsoft. It still looks as if Windows 8 could do with continual polishing before it gets more acceptable to users. 8.1 is a step forward, and more like it may be needed yet.

Turning on autocompletion for the bash shell in terminal sessions

26th June 2013

At some point, I managed to lose the ability to have tab-key-based autocompletion on terminal sessions on my Ubuntu GNOME machine. Wanting it back caused me to turn to the web for an answer, and I found it on a Linux Mint forum; the bash shell is so pervasive in the UNIX and Linux worlds that you can look anywhere for a fix like this.

The problem centred around the .bashrc file in my home area. It does have quite a few handy custom aliases, and I must have done a foolish spring-clean on the file sometime. That is the only way that I can explain how the following lines got removed:

if [ -f /etc/bash_completion ]; then
    . /etc/bash_completion
fi

What they do is look to see if /etc/bash_completion can be found on your system and to use it for tab-based autocompletion. With the lines not in .bashrc, it couldn't happen. Others may replace bash_completion with bash.bashrc to get a fuller complement of features, but I'll stick with what I have for now.
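If you would rather not edit the file by hand, the check and the repair can be scripted. What follows is a minimal sketch rather than a definitive fix: ensure_completion is a name invented for this example, it takes the file to check as its only argument (defaulting to .bashrc in your home area), and it assumes that any existing mention of bash_completion in that file means the lines are already in place.

```shell
# Hypothetical helper: ensure_completion [FILE]
# Appends the bash_completion lines to FILE unless it already mentions them.
# The body runs in a subshell (parentheses) so rc_file does not leak out.
ensure_completion() (
    rc_file=${1:-"$HOME/.bashrc"}

    if [ -f "$rc_file" ] && grep -q 'bash_completion' "$rc_file"; then
        # Crude test: any mention of bash_completion counts as present.
        echo "already present"
    else
        # The quoted 'EOF' stops the shell from expanding anything in the text.
        cat >> "$rc_file" <<'EOF'

if [ -f /etc/bash_completion ]; then
    . /etc/bash_completion
fi
EOF
        echo "added"
    fi
)
```

After running ensure_completion, open a new terminal session (or source the file) for the change to take effect.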

Installing Nightingale music player on Ubuntu 13.04

25th June 2013

Ever since the Songbird project concentrated its efforts to support only Windows and OS X, the Firefox-based music player has been absent from a Linux user's world. However, the project is open source and a fork called Nightingale now fulfils the same needs. Intriguingly, it too is available for Windows and OS X users, which leaves me wondering why that overlap has happened. However, Songbird also is available as a web app and as an app on both Android and iOS, while Nightingale sticks to being a desktop application.

To add it to Ubuntu, you need to set up a new repository. That can be done using the Software Centre but issuing a command in a terminal can be so much quicker and cleaner, so here it is:

sudo add-apt-repository ppa:nightingaleteam/nightingale-release

Apart from entering your password, there will be a prompt to continue by pressing the carriage return key or cancelling with CTRL + C. For our purposes, it is the first action that's needed and once that's done the needful, you can execute the following command:

sudo apt-get update && sudo apt-get install nightingale

This is in two parts: the first updates the repositories on your system, while the second actually installs the software. When that is complete, you are ready to run Nightingale and, with the repository, staying up to date is not a chore either. In fact, using the above commands brings another advantage: they should work in any Ubuntu derivatives, such as Linux Mint.

A need to update graphics hardware

16th June 2013

As someone who doesn't play computer games, I rarely prioritise graphics card upgrades. Yet, I recently upgraded graphics cards in two of my PCs despite nothing being broken. My backup machine, built nearly four years ago, has run multiple Linux distributions. It uses an ASRock K10N78 motherboard from MicroDirect with an integrated NVIDIA graphics chip that performs adequately, if not exceptionally. The only issue was slightly poor text rendering in web browsers, but this alone wasn't enough to justify adding a dedicated graphics card.

More recently, I ran into trouble with Sabayon 13.04 with only the 2D variant of the Cinnamon desktop environment working on it and things getting totally non-functional when a full re-installation of the GNOME edition was attempted. Everything went fine until I added the latest updates to the system, when a reboot revealed that it was impossible to boot into a desktop environment. Some will relish this as a challenge, but I need to admit that I am not one of those. In fact, I tried out two Arch-based distros on the same PC and got the same results following a system update on each. So, my explorations of Antergos and Manjaro have to continue in virtual machines instead.

When I tried Linux Mint 15 Cinnamon, it worked perfectly. However, newer distributions with systemd didn't work with my onboard NVIDIA graphics. Since systemd will likely come to Linux Mint eventually, I decided to add a dedicated graphics card. Based on good past experiences with Radeon, I chose an AMD Radeon HD 6450 from PC World, confirming it had Linux driver support. Installation was simple: power off, insert card, close case, power on. Later, I configured the BIOS to prioritise PCI Express graphics, though this step wasn't necessary. I then used Linux Mint's Additional Driver applet to install the proprietary driver and restarted. To improve web browser font rendering, I selected full RGBA hinting in the Fonts applet. The improvement was obvious, though still not as good as on my main machine. Overall, the upgrade improved performance and future-proofed my system.

After upgrading my standby machine, I examined my main PC. It has both onboard Radeon graphics and an added Radeon 4650 card. Ubuntu GNOME 12.10 and 13.04 weren't providing 3D support to VMware Player, which complained when virtual machines were configured for 3D. Installing the latest fglrx driver only made things worse, leaving me with just a command line instead of a graphical interface. The only fix was to run one of the following commands and reboot:

sudo apt-get remove fglrx

sudo apt-get remove fglrx-updates

Looking at the AMD website revealed that they no longer support 2000, 3000 or 4000 series Radeon cards with their latest Catalyst driver, and the last version that did support them would not install on my machine since it was built for version 3.4.x of the Linux kernel. A new graphics card then was in order if I wanted 3D graphics in VMware VMs, and both GNOME and Cinnamon appear to need this capability. Another ASUS card, a Radeon HD 6670, duly was acquired and installed in a manner similar to the Radeon HD 6450 on the standby PC. Apart from not needing to alter the font rendering (there is a Font tab on the GNOME Tweak Tool where this can be set), the only real exception was to add the Jockey software to my main PC for installation of the proprietary Radeon driver. The following command does this:

sudo apt-get install jockey-kde

After completing installation, I ran the jockey-kde command and selected the first driver option. Upon restart, the system worked properly except for an AMD message in the bottom-right corner warning about unrecognised hardware. Since there were two identical entries in the Jockey list, I tried the second option. After restarting, the incompatibility message disappeared and everything functioned correctly. VMware even ran virtual machines with 3D support without any errors, confirming the upgrade had solved my problem.

Hearing of someone doing two PC graphics card upgrades during a single weekend may make you see them as an enthusiast, but my disinterest in computer gaming belies this. Maybe it highlights that Linux operating systems need 3D more than might be expected. The Cinnamon desktop environment now issues messages if it is operating in 2D mode with software 3D rendering and GNOME always had the tendency to fall back to classic mode, as it had been doing when Sabayon was installed on my standby PC. However, there remain cases where Linux can rejuvenate older hardware and I installed Lubuntu onto a machine with 10-year-old technology on there (an 1100 MHz Athlon CPU, 1GB of RAM and 60GB of hard drive space in a case dating from 1998) and it works surprisingly well too.

It appears that having fancier desktop environments like GNOME Shell and Cinnamon means having the hardware on which they can run. For a while, I have been tempted by the possibility of a new PC, since even my main machine is not far from four years old either. However, I also spied a CPU, motherboard and RAM bundle featuring an Intel Core i5-4670 CPU, 8GB of Corsair Vengeance Pro Blue memory and a Gigabyte Z87-HD3 ATX motherboard (with a heat sink and fan for the CPU) for around £420. Even for someone who has used AMD CPUs since 1998, that does look tempting, but I'll hold off before making any such upgrade decisions. Apart from exercising sensible spending restraint, waiting for Linux UEFI support to mature a little more may be no bad idea either.

Update 2013-06-23: The new graphics card in my main machine works well and has reduced system error messages; Ubuntu GNOME 13.04 likely had issues with my old card. On my standby machine, I found and removed a rogue .fonts.conf file in my home directory, which dramatically improved font display. If you find this file on your system, consider removing or renaming it to see if it helps. Alternatively, adjusting font rendering settings can improve display quality, even on older systems like Debian 6 with GNOME 2. I may test these improvements on Debian 7.1 in the future.

Creating empty text files and changing file timestamps using Windows Command Prompt & PowerShell

17th May 2013

Linux and UNIX have the touch command for changing the modification dates and times for files. However, it also creates empty text files for you. In fact, there are times when I feel the need to do this sort of thing on Windows too and the following command accomplishes the deed when run in a Command Prompt window:

type nul > command.bat

Essentially, null output is sent to a file that is created anew, command.bat in this case. Then, you can edit it in Notepad (or whatever is your choice of text editor) and add in what you need. This will not work in PowerShell, so you need another command for that:

New-Item command.bat -type file

This uses the New-Item command, which also can be used to create folders if you so desire. Then, the command becomes the following:

New-Item c:\commands -type directory

Note that file in the previous example has become directory and there is the -force option should you need to overwrite what already exists for some reason...

That other use of the UNIX/Linux touch command can be performed from the Command Prompt too, and here is an example command:

copy /b file.txt +,,

The /b switch switches on binary behaviour for the copy command, though that appears to be the default action anyway. The + operator triggers concatenation and ,, gets around not having a defined destination because you cannot copy a file over itself. If that were possible, then there would be no need for special syntax for changing the date and time for a file.

For doing the same thing with PowerShell, try the following:

(Get-ChildItem test.txt).LastWriteTime=Get-Date

The Get-ChildItem command has aliases of gci, dir and ls and the last two of these give away its essential purpose. Here, it is used to pick out the test.txt file so that its timestamp can be replaced with the current date and time returned by the Get-Date command. The syntax looks a little more complex, even if it achieves the same end. Somehow, that touch command is easier to explain. Are Linux and UNIX that complicated, after all?
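For comparison, here is a small sketch of the two uses of touch on Linux or UNIX that the Windows commands above are standing in for. The file names and the scratch directory are made up for illustration, and the explicit timestamp uses the POSIX -t format of [[CC]YY]MMDDhhmm.

```shell
# Work in a throwaway directory so that no real files are affected.
scratch=$(mktemp -d)

# Use 1: touch creates an empty file when the name does not exist yet.
touch "$scratch/empty.txt"

# A reference file with a fixed, old timestamp (17 May 2013, 09:00).
touch -t 201305170900 "$scratch/old.txt"

# Use 2: touch on an existing file sets its modification time to now
# and leaves the contents alone.
echo 'keep me' > "$scratch/notes.txt"
touch "$scratch/notes.txt"

# List the files with their timestamps to see the difference.
ls -l "$scratch"
```

The listing should show empty.txt with a size of zero, old.txt dated 2013 and notes.txt carrying the current date and time.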

Saving Windows Command Prompt & PowerShell command history to a file for later usage

15th May 2013

It's remarkable what ideas Linux gives that you wouldn't encounter that clearly in the world of Windows. One of these is saving command line history to a file so that a script can be created. In the Windows world, this would be called a batch file. Linux usefully has the history command, and it does the needful for taking a snapshot like so:

history > ~/commands.sh

All the commands stored in a terminal's command history get stored in the commands.sh file in the user's home area. The command for doing the same thing from the Windows command line is not as obvious because it uses the doskey command that is intended for command line macro writing and execution. Usefully, it has a history option that tells it to output all the commands issued in a command line session. Unless you create a file with them in there, there appears to be no way to store all those commands across sessions, unlike UNIX and Linux. Therefore, a command like the following is a partial solution that is more permanent than using the F7 key on your keyboard:

doskey /history > c:\commands.bat

Windows PowerShell has something similar too, and it even has aliases of history and even h. All PowerShell scripts have file extensions of ps1 and the example below follows that scheme:

get-history > c:\commands.ps1

However, I believe that even PowerShell doesn't carry over command history between sessions, though Microsoft is working on adding this useful functionality. While they could co-opt Cygwin of course, that doesn't seem to be their way of going about things.
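Returning to the Linux side for a moment, one wrinkle with the bash history command is that it prefixes each entry with a number, so the saved file needs a little cleaning before it can be run as a script. The following is a minimal sketch of that cleaning step; strip_history_numbers is a name invented for this example, and it assumes the default bash output format without timestamps (that is, with HISTTIMEFORMAT unset).

```shell
# Hypothetical helper: reads history-style output on standard input and
# removes the leading entry number (plus the * that marks edited entries).
strip_history_numbers() {
    # Optional spaces, one or more digits, a space or *, then more spaces.
    sed 's/^ *[0-9][0-9]*[* ] *//'
}
```

In an interactive session, history | strip_history_numbers > ~/commands.sh would then leave only the commands themselves in the file.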

A little look at Debian 7.0

12th May 2013

Having a virtual machine with Debian 6 on there, I was interested to hear that Debian 7.0 is out. In another VM, I decided to give it a go. Installing it on there using the Net Install CD image took a little while but proved fairly standard with my choice of the GUI-based option. GNOME was the desktop environment with which I went and all started up without any real fuss after the installation was complete; it even disconnected the CD image from the VM before rebooting, and not doing so is a common failing in many Linux operating system installations that lands you in the installation cycle again unless you kill the virtual machine.

Though the GNOME desktop looked familiar, a certain amount of conservatism reigned too, since the version was 3.4.2. That was no bad thing, since raiding the GNOME Extension site for a set of mature extensions was made easier. In fact, a certain number of these were included in the standard installation anyway and the omission of a power off entry on the user menu was corrected as a matter of course without needing any intervention from this user. Adding to what already was there made for a more friendly desktop experience in a short period of time.

Debian's variant of Firefox, IceWeasel, is version 10 so a bit of tweaking is needed to get the latest version. LibreOffice is there now too, and it's version 3.5 rather than 4. Shotwell too is the older 0.12 and not the 0.14 that is found in the likes of Ubuntu 13.04. As it happens, GIMP is about the only software with a current version and that is 2.8; a slower release cycle may be the cause of that, though. All in all, the general sense is that older versions of current software are being included for the sake of stability and that is sensible too, so I am not complaining very much about this at all.

The reason for not complaining is that the very reason for having a virtual machine with Debian 6 on there is to have Zinio and Dropbox available too. Adobe's curtailment of support for Linux means that any application needing Adobe Air may not work on a more current Linux distribution. That affects Zinio, so I'll be retaining a Debian 6 instance for a while yet, unless a bout of testing reveals that a move to the newer version is possible. As for Dropbox, I am not sure that I can recall why I moved it onto Debian, but it's working well on there so I am in no hurry to move it over either. There are times when slower software development cycles are better...

Piggybacking an Android Wi-Fi device off your Windows PC's internet connection

16th March 2013

One of the disadvantages of my Google/Asus Nexus 7 is that it needs a Wi-Fi connection to use. Most of the time, this is not a problem since I also have a Huawei mobile Wi-Fi hub from T-Mobile and this seems to work just about anywhere in the U.K. Away from the U.K. though, it won't work because roaming is not switched on for it and that may be no bad thing with the fees that could introduce. While my HTC Desire S could deputise, I need to watch costs with that too.

There's also the factor of download caps, and those apply both to the Huawei and to the HTC. Recently, I added Anquet's Outdoor Map Navigator (OMN) to my Nexus 7 through the Google Play Store for a fee of £7 and that allows access to any walking maps that I have bought from Anquet. However, those are large downloads, so the caps start to come into play. Frugality would help, but I began to look at other possibilities that make use of a laptop's Wi-Fi functionality.

Looking on the web, I found two options for this that work on Windows 7 (8 should be OK too): Connectify Hotspot and Virtual Router Manager. The first of these is commercial software, yet there is a Lite edition for those wanting to try it out. I cannot confirm that it is not a time-limited demo, though it did not seem to be one, since it looked as if the only things missing were features that you'd get if you paid for the Pro variant. The second option is an open source one that is free of charge apart from an invitation to donate to the project.

Though online tutorials show the usage of either of these to be straightforward, my experiences were not all that positive at the outset. In fact, there was something that I needed to do, which is how this post has come to exist at all. That happened even after the restart that Connectify Hotspot needed as part of its installation; it runs as a system service, which is why the restart was needed. In fact, it was Virtual Router Manager that told me what the issue was, and it needed no reboot. Neither did it cause network disconnection of a laptop like the Connectify offering did on me and that was the cause of its ejection from that system; limitations in favour of its paid edition aside, it may have the snazzier interface, but I'll take effective simplicity any day.

Using Virtual Router Manager turns out to be simple enough. It needs a network name (also known as an SSID), a password to restrict who accesses the network and the internet connection to be shared. In my case, that was Local Area Connection on the dropdown list. With all the required information entered, I was ready to start the router using the Start Network Router button. The text on this button changes to Stop Network Router when the hub is operational, or at least it should have done for me on the first time that I ran it. What I got instead was the following message:

The group or resource is not in the correct state to perform the requested operation.

While the above may not say all that much, it becomes more than ample information if you enter it into the likes of Google. Behind the scenes, Virtual Router Manager uses native Windows functionality to create a Wi-Fi hub from a PC, and it appears to be the Microsoft Virtual Wi-Fi Miniport Adapter from what I have seen. When I tried setting up an ad hoc Wi-Fi network from a laptop to the Nexus 7 using Windows' own network set up capability via its Control Panel, it didn't do what I needed, so there might be something that third party software can do. So, the interesting thing about the solution to my Virtual Router Manager problem was that it needed me to delve into the innards of Windows a little.

Firstly, there's running Command Prompt (All Programs > Accessories) from the Start Menu with Administrator privileges. It helps here if the account with which you log into Windows is in the Administrator group, since all you have to do then is right-click on the Start Menu entry and choose the Run as administrator entry in the pop-up context menu. With a command line window now open, you then need to issue the following command:

netsh wlan set hostednetwork mode=allow ssid=[network name] key=[password] keyUsage=persistent

When that had done its thing, Virtual Router Manager worked without a hitch, though it did turn itself off after a while and that may be no bad thing from the security standpoint. On the Android side, it was a matter of going into Settings > Wi-Fi and choosing the new network that was created on the laptop. This sort of thing may apply to other types of tablet (Dare I mention iPads?) so you could connect anything to the hub without needing to do any more on the Windows side.

For those wanting to know what's going on behind the scenes on Windows, there's a useful tutorial on Instructables that shows what third party software is saving you from having to do. Even if I never go down the more DIY route, I probably have saved myself having to buy a mobile Wi-Fi hub for any trips to Éire. For now, the Irish 3G dongle that I already have should be enough.