Building a sitemap in XML

24th November 2022

While there are many tools that will build XML sitemaps, there is some satisfaction to be had in creating your own. This is despite there being a multitude of search engine optimisation plugins for content management systems like WordPress, as well as what is built into static site generators like Hugo. Sometimes, building your own allows for added simplicity, an aim shared with my recent efforts in WordPress theme development.

The sitemap XML protocol is simple enough to offer a short coding project. The basis was what Hugo generates, and I used Python to create the XML files. The only libraries that I needed were configparser, SQLAlchemy and pandas. The first two of these allowed databases to be queried, and the last on the list was used for data processing. Otherwise, it was a case of using what is built into the Python language, like file writing and looping.
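To give a flavour of how little code the protocol needs, here is a minimal sketch that uses only the Python standard library. It is not the script described above: the database query and pandas steps are replaced by a hard-coded list, and the `build_sitemap` function and the example.com addresses are hypothetical names chosen for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML text for an iterable of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # lastmod is optional in the protocol
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical rows; in practice these would come from a database query.
pages = [
    ("https://www.example.com/", "2022-11-24"),
    ("https://www.example.com/about/", None),
]
sitemap_xml = build_sitemap(pages)
```

Writing `sitemap_xml` to a file with `open(...)` and `write(...)` completes the job, and that is the step a scheduled task would repeat.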

Once the scripts were ready, they could be uploaded to web servers and executed by scheduled jobs using CRON to keep things up to date. Along the way, I also uncovered a way to publicise the locations of the sitemap files to search engine bots using robots.txt. The structure of the instruction is the following:

User-agent: *

Sitemap: sitemap.xml

The Sitemap line announces the location of the sitemap file to any bot that reads robots.txt. The sitemap protocol expects the full URL of the XML file there, so that is what I always included, and it clearly varies by website.
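One way to check that a robots.txt file advertises a sitemap as intended is to parse it with Python's built-in `urllib.robotparser` module, whose `site_maps()` method is available from Python 3.8 onwards. The sketch below works on a text string rather than a live website, and the `sitemap_urls` helper and the example.com address are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def sitemap_urls(robots_text):
    """Return the Sitemap entries found in the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


# Hypothetical robots.txt content using a full URL for the sitemap.
robots_text = """User-agent: *

Sitemap: https://www.example.com/sitemap.xml
"""
found = sitemap_urls(robots_text)
```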

Fixing Windows 11 freezing issues in VirtualBox with KVM paravirtualisation

23rd November 2022

Recently, I have been trying to get Windows 11 to run smoothly within a VirtualBox virtual machine, and there has been a lot of experimentation along the way. This was to eradicate intermittent freezes that escalated CPU usage and necessitated hard restarts. If I were to use Windows 11 as a long-term replacement for Windows 10, these needed to go.

An internet search showed that others faced the same predicament, yet a range of proposed solutions did nothing for me. The suggestion of enabling 3D graphics capability did nothing but produce a black screen at startup time, so that was not a runner. It might have been the combination of underlying graphics hardware and the drivers on my Linux Mint machine that hindered me when it helped others.

In the end, a look at the bug tracker for Windows guest operating systems running on VirtualBox sent me in another direction. The Paravirtualisation interface also may have caused issues with Windows 10 virtual machines, since these were all set to KVM. Doing the same for Windows 11 seems to have stopped the freezing behaviour so far. It meant going to the virtual machine settings, navigating to System > Acceleration and changing the dropdown menu value from Default to KVM before clicking on the OK button.
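The same setting can also be changed from the command line with VBoxManage while the virtual machine is powered off. The sketch below only builds the command and leaves running it to you. The `paravirt_command` helper and the "Windows 11" machine name are hypothetical, and the option spelling is taken from the VirtualBox 7.0 manual, with older releases documenting it as `--paravirtprovider`, so check `VBoxManage modifyvm` help output for your version first.

```python
import subprocess


def paravirt_command(vm_name, provider="kvm", option="--paravirt-provider"):
    """Build a VBoxManage command that sets the paravirtualisation interface."""
    # Assumption: option spelling as documented for VirtualBox 7.0;
    # pass option="--paravirtprovider" for older releases.
    return ["VBoxManage", "modifyvm", vm_name, option, provider]


def apply_paravirt(vm_name):
    """Run the command; the virtual machine must be powered off beforehand."""
    subprocess.run(paravirt_command(vm_name), check=True)


# Hypothetical virtual machine name.
command = paravirt_command("Windows 11")
```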

Before that, I had been blaming the newness of VirtualBox 7 (it is best not to expect too much of a fresh release bringing such major changes) and even the way that I installed Windows 11 using the streamlined installation or licensing issues. Now that things are going better, it may have been a lesson from Windows 10 that I had forgotten. The EFI, Secure Boot and TPM 2.0 requirements of Windows 11 also blindsided me, especially given the long wait for VirtualBox to add such compatibility, but that is behind me at this stage.

While Windows 11 is not perfect, Start11 makes it usable, and the October 2025 expiry for Windows 10 also focuses my mind. It is time to move over for the sake of future-proofing if nothing else. In time, we may get a better operating system as Windows 11 matures and some minds surely are thinking of a "Windows 12". Depending on how things go, we may get to a point where something vintage in the nature of Windows XP, Windows 7 or Windows 10 appears. Those older versions of Windows became like old gold during their lives.

Resolving a clash between Homebrew and Python

22nd November 2022

For reasons that I cannot recall now, I installed the Hugo static website generator on my Linux system and web servers using Homebrew. The only reason that I can suggest is that it might have been a way to get the latest version at the time because Linux Mint only does major changes like that every two years, keeping it in line with long-term support editions of Ubuntu.

When Homebrew was installed, it changed the lookup path for command line executables by adding the following line to my .bashrc file:

eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

This executed the following lines:

export HOMEBREW_PREFIX="/home/linuxbrew/.linuxbrew";

export HOMEBREW_CELLAR="/home/linuxbrew/.linuxbrew/Cellar";

export HOMEBREW_REPOSITORY="/home/linuxbrew/.linuxbrew/Homebrew";

export PATH="/home/linuxbrew/.linuxbrew/bin:/home/linuxbrew/.linuxbrew/sbin${PATH+:$PATH}";

export MANPATH="/home/linuxbrew/.linuxbrew/share/man${MANPATH+:$MANPATH}:";

export INFOPATH="/home/linuxbrew/.linuxbrew/share/info:${INFOPATH:-}";

While the result suits Homebrew, it changed the setup of Python and its packages on my system. Eventually, this had undesirable consequences, like messing up how Spyder started, so I wanted to change this. There are other things that I have automated using Python and these were not working either.

One way that I have seen suggested is to execute the following command, but I cannot vouch for this:

brew unlink python

What I did was to comment out the offending line in .bashrc and replace it with the following:

export PATH="$PATH:/home/linuxbrew/.linuxbrew/bin:/home/linuxbrew/.linuxbrew/sbin"

export HOMEBREW_PREFIX="/home/linuxbrew/.linuxbrew";

export HOMEBREW_CELLAR="/home/linuxbrew/.linuxbrew/Cellar";

export HOMEBREW_REPOSITORY="/home/linuxbrew/.linuxbrew/Homebrew";

export MANPATH="/home/linuxbrew/.linuxbrew/share/man${MANPATH+:$MANPATH}:";

export INFOPATH="${INFOPATH:-}:/home/linuxbrew/.linuxbrew/share/info";

The first command adds Homebrew paths to the end of the PATH variable rather than the beginning, which was the previous arrangement. This ensures system folders are searched for executable files before Homebrew folders. It also means Python packages load from my user area instead of the Homebrew location, which happened under Homebrew's default configuration. When working with Python packages, remember not to install one version at the system level and another in your user area, as this creates conflicts.
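The effect of search order can be demonstrated without touching any real configuration. The following sketch, for POSIX systems, creates two temporary folders that each hold a dummy executable with the same name, then uses `shutil.which` to show which copy is found when the stand-in "Homebrew" folder comes first or last. The folder roles and the `demo-tool` name are made up for illustration.

```python
import os
import shutil
import tempfile


def make_fake_tool(directory, name):
    """Create a tiny executable shell script and return its full path."""
    path = os.path.join(directory, name)
    with open(path, "w") as handle:
        handle.write("#!/bin/sh\n")
    os.chmod(path, 0o755)
    return path


with tempfile.TemporaryDirectory() as system_dir, \
        tempfile.TemporaryDirectory() as brew_dir:
    system_tool = make_fake_tool(system_dir, "demo-tool")
    brew_tool = make_fake_tool(brew_dir, "demo-tool")

    # Stand-in for the default Homebrew setup: its folder is searched first.
    prepended = os.pathsep.join([brew_dir, system_dir])
    # Stand-in for the revised setup: its folder is searched last.
    appended = os.pathsep.join([system_dir, brew_dir])

    prepended_picks_brew = shutil.which("demo-tool", path=prepended) == brew_tool
    appended_picks_system = shutil.which("demo-tool", path=appended) == system_tool
```

Both flags come out true because the first matching folder in the search path wins, which is why moving the Homebrew folders to the end restores the system executables.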

So far, the result of the Homebrew changes is not unsatisfactory, and I will watch for any rough edges that need addressing. If something comes up, then I will set things up in another way.

A look at the Julia programming language

19th November 2022

Several open-source computing languages get mentioned when talking about working with data. Among these are R and Python, and Julia is another. It took a while before I got to check out Julia because I felt the need to get acquainted with R and Python beforehand. There are others like Lua to investigate too, but that can wait for now.

With the way that R is making an incursion into clinical data reporting and analysis following the passage of decades when SAS was predominant, my explorations of Julia are inspired by a certain contrariness on my part. Alongside some small personal projects, there has been some reading in (digital) book form and online. Concerning the latter of these, there are useful tutorials like Introduction to Data Science: Learn Julia Programming, Maths & Data Science from Scratch or Julia Programming: a Hands-on Tutorial. Like what happens with R, there are online versions of published books available free of charge, and they include Julia Data Science and Interactive Visualization and Plotting with Julia. Video learning can help too, and Jane Herriman has recorded and shared useful beginner's guides on YouTube that start with the basics before heading onto more advanced subjects like multiple dispatch, broadcasting and metaprogramming.

This piece of learning has been made of simple self-inspired puzzles before moving on to anything more complex. That differs from my dalliance with R and Python, where I ventured into complexity first, not least because of testing them out with public COVID data. Eventually, I got around to doing that with Julia too, though my interest was beginning to wane by then, and Julia's abilities for creating multipage PDF files were such that the PDF Toolkit was needed to help with this. Along the way, I have made use of such packages as CSV.jl, DataFrames.jl, DataFramesMeta, Plots, Gadfly.jl, XLSX.jl and JSON3.jl, among others. After that, there is PrettyTables.jl to try out, and anyone can look at the Beautiful Makie website to see what Makie can do. There are plenty of other packages creating graphs, such as SpatialGraphs.jl, PGFPlotsX and GRUtils.jl. For formatting numbers, options include Format.jl and Humanize.jl.

So far, my primary usage has been with personal financial data together with automated processing and backup of photo files. The photo file processing has taken advantage of the ability to compile Julia scripts for added speed because just-in-time compilation always means there is a lag before the real work begins.

VS Code is my chosen editor for working with Julia scripts, since it has a plugin for the language. That adds the REPL, syntax highlighting, execution and data frame viewing capabilities that once were added to the now defunct Atom editor by its own plugin. While it would be nice to have a keyboard shortcut for script execution, the whole thing works well and is regularly updated.

Naturally, there have been a load of queries as I have gone along and the Julia Documentation has been consulted as well as Julia Discourse and Stack Overflow. The latter pair have become regular landing spots on many a Google search. One example followed a glitch that I encountered after a Julia upgrade when I asked a question about this and was directed to the XLSX.jl Migration Guides where I got the information that I needed to fix my code for it to run properly.

There is more learning to do as I continue to use Julia for various things. Once compiled, it does run fast, as promised. The syntax paradigm is akin to R and Python, but there are Julia-specific features too. If you have used the others, the learning curve is lessened but not eliminated completely. This is not an object-oriented language as such, but its functional nature makes it familiar enough for getting going with it. In short, the project has come a long way since it started more than ten years ago. There is much for the scientific programmer, but only time will tell if it usurps its older competitors. For now, I will remain interested in it.

Self hosting Google fonts

14th November 2022

One thing that I have been doing across my websites is to move away from using Google web fonts, using the advice on the Switching.Software website. While I have looked at free web font directories like 1001 Free Fonts or DaFont, they do not have the full range of bolding and character sets that I desire, so I opted instead for the Google Webfonts Helper website. That not only offered copies of what Google has, but also created a portion of CSS that I could add to a stylesheet on a website, making things more streamlined. At the same time, I also took the opportunity to change some of the fonts that were being used for the sake of added variety. Open Sans is good, but there are other acceptable sans-serif options like Mulish or Nunito as well, so these got used.

Choosing a desktop Markdown editor for producing grammar-checked static website content

8th November 2022

Earlier this year, I changed over two websites from dynamic versions using content management systems to static ones by using Hugo to build them from Markdown files. That meant that I needed to look at the editing of Markdown, even if it is a fairly simple file format. For one thing, Grammarly can be incorporated into WordPress, so I did not want to lose something like that.

The latter point meant that I was steered away from plain text editors. Otherwise, there are online ones like StackEdit and Dillinger, but the Firefox Grammarly plugin only appears to work on the first of these, and even then, only partially in my experience. While Dillinger does offer connections to online file storage providers like Google, Dropbox and OneDrive, I wanted to store files on my desktop for upload to a web server. It also works with GitHub, but I prefer to use another web hosting provider.

There are various specialised Markdown editors for desktop usage like Typora, ReText, Formiko or Ghostwriter, yet I chose none of these. My actual choice may surprise many: it was Visual Studio Code. The availability of a Grammarly plug-in was what swayed it for me, even if it did need to be switched on for Markdown files. In many ways, it does not work as smoothly as elsewhere because it gets fooled by links and other code-like pieces of text. Also, having the added ability to add words to a custom dictionary would be ideal. Some rule overriding is available, but I am not sure that everything is covered, even if the list of options is lengthy. Some time is needed to inspect all of them before I proceed any further. Thus far, things are working well enough for me.

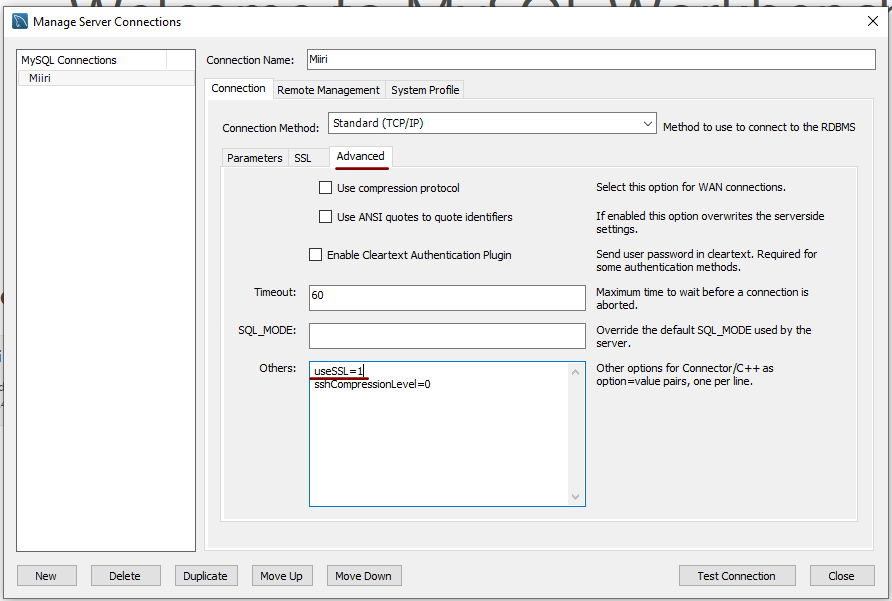

Disabling the SSL connection requirement in MySQL Workbench

7th November 2022

A while ago, I found that MySQL Workbench would only use SSL connections and that was stopping it from connecting to local databases. Thus, I looked for a way to override this: the cure was to go to Database > Manage Connections... in the menus for the application's home tab. In the dialogue box that appeared, I chose the connection of interest and went to the Advanced panel under Connection and removed the line useSSL=1 from the Others field. The screenshot below shows you what things looked like before the change was made. Naturally, the best practice would be to secure a remote database connection using SSL, so this approach is best reserved for local non-production databases. While it may be that this does not happen now, I thought I would share this in case the problem persists for anyone.

Redirecting a WordPress site to its home page when its loop finds no posts

5th November 2022

Since I created a bespoke theme for this site, I have been tweaking things as I go. The basis came from the WordPress Theme Developer Handbook, which gave me a simpler starting point shorn of all sorts of complexity that is encountered with other themes. Naturally, this means that there are little rough edges that need tidying over time.

One of these is dealing with errors on the site, like when content is not found. This could be a wrong address or a search query that finds no matching posts. When that happens, there is a redirection to the home page using some simple JavaScript within the loop fallback code enclosed within script start and end tags (including the whole code triggers the action from this post so it cannot be shown here):

location.href="[blog home page ]";

The bloginfo function can be used with the url keyword to find the home page, avoiding hard-coding. For now, this works so long as JavaScript is enabled, but a more robust approach may come in time. It is not possible to do a PHP redirect because of the nature of HTTP: when headers have been sent, it is not possible to do server redirects. At this stage, things become client side, so using JavaScript is one way to go instead.

Minimum viable product?

31st October 2022

While I have done my own styling for other websites that I have, this one has used third-party themes since its inception. This approach does have its advantages because you can benefit from the efforts of others; it can be a way to get added functionality and gain an appearance that is more contemporary in feel.

Naturally, there also are drawbacks. Getting the desired appearance can be challenging without paying for it, and your tastes may not match current fashions. Then, there are restrictions on customisation. Where user interfaces are available, these cannot be limitless. A fallback is to tweak code, but ever-increasing complexity hampers that and an automated update can erase a modification, even if child themes are a possibility on at least one content management system.

For me, the drawbacks now outweigh the advantages, so I have created my own design and that is what you now see. Behind the scenes, there is a back-to-basics approach and everything should look brighter. As the title of this post suggests, this is a start, with further tweaks coming in time. For now, I hope that what you find will be sufficient to please.

Converting QEMU disk images to VirtualBox images on Linux Mint 21

30th October 2022

Recently, VirtualBox gained fuller support for Windows 11 and I successfully set up a new Windows 11 virtual machine that, I hope, will supplant a Windows 10 counterpart in time. While the setup itself was streamlined, I ran into such stability issues that I set the new VM aside until a new version of VirtualBox got released. That has happened with the appearance of version 7.0.2, but Windows 11 remains prone to freezing on my Linux Mint machine. Thankfully, that now is much less frequent, yet the need for added stability remains outstanding.

While I was thinking about trying out VirtualBox 7.0.0, I remembered a QEMU machine that I had running Windows 11. Though QEMU proved more limited than VirtualBox when it came to having easy availability of functionality like moving data in and out of the virtual machine or support for sound, there was no problem with TPM support or system stability. Since it did contain some useful data, I wondered about converting its virtual hard disk to VirtualBox format, and it is easy to do. First, you need to install qemu-img and other utilities as follows:

sudo apt-get install qemu-utils

With that in place, executing a command like the following performs the required conversion. Here, the -O switch specifies the output format, which is vdi in this case.

qemu-img convert -O vdi [virtual hard disk].qcow2 [virtual hard disk].vdi
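If several disk images need converting, the same command can be assembled in Python and passed to `subprocess`. This is only a sketch: the `build_convert_command` and `convert_disk` helpers and the windows11.qcow2 file name are hypothetical, and the output file is assumed to sit beside the source with its extension swapped.

```python
import subprocess
from pathlib import Path


def build_convert_command(source, target_format="vdi"):
    """Build the qemu-img command that converts source to target_format."""
    source = Path(source)
    # Assumption: the converted file is written next to the original.
    target = source.with_suffix("." + target_format)
    return ["qemu-img", "convert", "-O", target_format, str(source), str(target)]


def convert_disk(source, target_format="vdi"):
    """Run the conversion; needs qemu-img from the qemu-utils package."""
    subprocess.run(build_convert_command(source, target_format), check=True)


# Hypothetical file name used to show the resulting command.
command = build_convert_command("windows11.qcow2")
```

Calling `convert_disk` for each file in a folder would then cover a batch of images, with `check=True` stopping the run if any conversion fails.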

While I have yet to mount it on the new VirtualBox Windows 11 virtual machine, it is good to have the old virtual hard disk available for doing so. The thought of using it as a boot drive in VirtualBox did enter my mind, but the required change of drivers and other incompatibilities dissuaded me from doing so.