ERROR: Can’t find the archive-keyring

10th April 2014

When I recently did my usual system update for the stable version of Ubuntu GNOME, there were some updates pertaining to apt and the process failed when I executed the following command:

sudo apt-get upgrade

Usefully, some messages were issued and here’s a flavour:

Setting up apt (0.9.9.1~ubuntu3.1) …

ERROR: Can’t find the archive-keyring

Is the ubuntu-keyring package installed?

dpkg: error processing apt (--configure):

subprocess installed post-installation script returned error exit status 1

Errors were encountered while processing:

apt

E: Sub-process /usr/bin/dpkg returned an error code (1)

Some searching on the web revealed that the problem was that there were no files in /usr/share/keyrings when there should have been. I had not removed them myself, so I have no idea how they disappeared. Various remedies were tried, and any that needed software installed were non-starters because apt was disabled by the lack of keyring files. The workaround that restored things for me was to take a copy of the files in /usr/share/keyrings from an Ubuntu GNOME 14.04 installation in a VirtualBox VM and copy them into the same location on its Ubuntu GNOME 13.10 host. For those without such resources, I have packaged them in a zip file below. Other remedies like Y PPA Manager were also suggested where I was reading, but that software package needed installing beforehand, so it was of little use to me when the likes of Synaptic were disabled. If there are other remedies that do not involve an operating system re-installation, I would like to know about them too, as well as possible causes for the file loss in the first place and how to avoid these.
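As a rough sketch of the copy-across workaround, the following helpers check a keyring directory and refill it from a donor copy. The function names and the donor path are my own illustrative assumptions, and the sketch only looks for files ending in .gpg, such as ubuntu-archive-keyring.gpg.

```shell
#!/bin/sh
# Sketch only: function names and the donor path are illustrative assumptions.

# Prints "present" if the directory holds at least one *.gpg file, else "missing".
keyring_status() {
    dir=$1
    for f in "$dir"/*.gpg; do
        if [ -e "$f" ]; then
            echo "present"
            return 0
        fi
    done
    echo "missing"
}

# Copies every *.gpg file from a donor directory into the target directory.
restore_keyrings() {
    donor=$1
    target=$2
    mkdir -p "$target" && cp -p "$donor"/*.gpg "$target"/
}

# Possible use on the broken host (run as root; the donor path is an example):
#   keyring_status /usr/share/keyrings
#   restore_keyrings /path/to/donor/keyrings /usr/share/keyrings
#   dpkg --configure -a    # lets the interrupted configuration of apt finish
```

Nothing runs until the functions are called, so the check can be tried first without changing anything.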

Setting up a WD My Book Live NAS on Ubuntu GNOME 13.10

1st December 2013

The official line from Western Digital is that they do not support the use of their My Book Live NAS drives with Linux or UNIX. However, what that means is that they only develop tools for accessing their products for Windows and maybe OS X. It doesn’t mean that you cannot access the drive’s configuration settings by pointing your web browser at http://mybooklive.local/. In fact, not having those extra tools is no drawback at all since the drive can be accessed through your file manager of choice under the Network section, and the default name is MyBookLive too, so you can easily find the thing once it is connected to a router or switch.

Once you are in the server’s web configuration area, you can do things like changing its name, updating its firmware, finding out what network address has been assigned to it, creating and deleting file shares, password protecting file shares and other things. These are the kinds of things that come in handy if you are going to have a more permanent connection to the NAS from a PC that runs Linux. The steps that I describe have worked on Ubuntu 12.04 and 13.10 with the GNOME desktop environment.

What I was surprised to discover is that you cannot just set up a symbolic link that points to a file share. Instead, it needs to be mounted and this can be done from the command line using mount or at start-up with /etc/fstab. For this to happen, you need the Common Internet File System utilities and these are added as follows if you need them (check in the Software Centre or in Synaptic):

sudo apt-get install cifs-utils

Once these are added, you can add a line like the following to /etc/fstab:

//[NAS IP address]/[file share name] /[file system mount point] cifs

credentials=[full file location]/.creds,

iocharset=utf8,

sec=ntlm,

gid=1000,

uid=1000,

file_mode=0775,

dir_mode=0775

0 0

Though I have broken it over several lines above, this is one unwrapped line in /etc/fstab with all the fields in square brackets populated for your system and with no brackets around these. Though there are other ways to specify the server, using its IP address is what has given me the most success and this is found under Settings > Network on the web console. Next up is the actual file share name on the NAS and I have used a custom one instead of the default of Public. The NAS file share needs to be mounted to an actual directory in your file system like /media/nas or whatever you like and you will need to create this beforehand. After that, you have to specify the file system and it is cifs instead of more conventional alternatives like ext4 or swap. After this and before the final two space-delimited zeroes in the line comes the chunk that deals with the security of the mount point.
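To show how the pieces join into one unwrapped line, here is a small sketch that assembles the entry from its parts. The helper name cifs_fstab_line and the sample values in the usage comment are hypothetical placeholders, not settings from my own system.

```shell
#!/bin/sh
# Sketch only: cifs_fstab_line is a hypothetical helper and the sample values
# in the usage comment are placeholders.

# Prints one /etc/fstab entry for a CIFS share using the options listed earlier.
cifs_fstab_line() {
    nas_ip=$1        # IP address of the NAS
    share=$2         # file share name on the NAS
    mount_point=$3   # local directory that already exists
    creds=$4         # full path to the credentials file
    opts="credentials=$creds,iocharset=utf8,sec=ntlm,gid=1000,uid=1000,file_mode=0775,dir_mode=0775"
    printf '//%s/%s %s cifs %s 0 0\n' "$nas_ip" "$share" "$mount_point" "$opts"
}

# Example: cifs_fstab_line 192.168.1.50 Photos /media/nas /home/me/.creds
```

The printed line can be reviewed before it is added to /etc/fstab, after which sudo mount -a will attempt the mount without needing a reboot.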

What I have done in my case is to have a password protected file share and the user ID and password have been placed in a file in my home area with only the owner having read and write permissions for it (600 in chmod-speak). Preceding the filename with a “.” also affords extra invisibility. That file then is populated with the user ID and password like the following. Of course, the bracketed values have to be replaced with what you have in your case.

username=[NAS file share user ID]

password=[NAS file share password]
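Creating that file with the right permissions from the start could be sketched as follows. The function name write_cifs_creds is my own invention, and the user ID and password in the usage comment are placeholders.

```shell
#!/bin/sh
# Sketch only: write_cifs_creds is a hypothetical helper name.

# Writes a two-line credentials file that only its owner can read or write.
write_cifs_creds() {
    file=$1
    user=$2
    pass=$3
    (
        umask 077    # a newly created file gets no group or other permissions
        printf 'username=%s\npassword=%s\n' "$user" "$pass" > "$file"
    )
    chmod 600 "$file"    # also tightens a file that existed beforehand
}

# Example: write_cifs_creds "$HOME/.creds" nasuser 'nas-password'
```

Bear in mind that a password typed on the command line may end up in your shell history, so editing the file by hand afterwards is just as valid.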

With the credentials file created, the remaining mount options have to be set. First, there is the character set (usually UTF-8 and I got error code 79 when I mistyped this) and the security that is to be applied to the credentials (ntlm in this case). To avoid having no write access to the mounted file share, the uid and gid for your user need specifying, with 1000 being the value for the first non-root user created on a Linux system. After that, it does no harm to set the file and directory permissions because they can only be set at mount time; using chmod, chown and chgrp later on has no effect whatsoever. Here, I have set permissions to read, write and execute for the owner and the user group while only allowing read and execute access for everyone else (that’s 775 in the world of chmod).

All of what I have described here worked for me and had to be gleaned from disparate sources like Mount Windows Shares Permanently from the Ubuntu Wiki, another blog entry regarding the permissions settings for a CIFS mount point and an Ubuntu forum posting on mounting CIFS with UTF-8 support. Because of the scattering of information, I just felt that it needed to be all together in one place for others to use and I hope that it fulfils someone else’s needs in a similar way to mine.
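The 775 and 600 figures are octal shorthand, with one digit each for owner, group and everyone else. A small sketch of the mapping follows; the helper name mode_to_rwx is hypothetical and special bits such as setuid are deliberately ignored.

```shell
#!/bin/sh
# Sketch only: mode_to_rwx is a hypothetical helper; setuid, setgid and sticky
# bits are not handled.

# Converts an octal mode such as 775 or 0775 into its rwx form.
mode_to_rwx() {
    mode=$1
    # Drop the leading digit of a four-digit mode such as 0775.
    case $mode in
        ????) mode=${mode#?} ;;
    esac
    out=""
    while [ -n "$mode" ]; do
        rest=${mode#?}            # everything after the first digit
        digit=${mode%"$rest"}     # the first digit on its own
        case $digit in
            0) out="${out}---" ;;
            1) out="${out}--x" ;;
            2) out="${out}-w-" ;;
            3) out="${out}-wx" ;;
            4) out="${out}r--" ;;
            5) out="${out}r-x" ;;
            6) out="${out}rw-" ;;
            7) out="${out}rwx" ;;
            *) echo "not an octal digit: $digit" >&2; return 1 ;;
        esac
        mode=$rest
    done
    printf '%s\n' "$out"
}

# mode_to_rwx 775   prints rwxrwxr-x
# mode_to_rwx 600   prints rw-------
```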

An in situ upgrade to Linux Mint 12

4th December 2011

Though it isn’t the recommended approach, I have ended up upgrading to Linux Mint 12 from Linux Mint 11 using an in situ route. Having attempted this before with a VirtualBox hosted installation, I am well aware of the possibility of things going wrong. Then, a full re-installation was needed to remedy the situation. With that in mind, I made a number of backups in case of an emergency fresh installation of the latest release of Linux Mint. Apache and VirtualBox configuration files together with MySQL backups were put where they could be retrieved should that be required. The same applied to the list of installed packages on my system. So far, I haven’t needed to use these, but there is no point in taking too many chances.

The first step in an in-situ Linux Mint upgrade is to edit /etc/apt/sources.list. In the repository location definitions, any reference to katya (11) was changed to lisa (for 12) and the same applied to any appearance of natty (Ubuntu 11.04) which needed to become oneiric (Ubuntu 11.10). With that done, it was time to issue the following command (all one line even if it is broken here):

sudo apt-get update && sudo apt-get upgrade && sudo apt-get dist-upgrade
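The sources.list edit that precedes this command could be scripted instead of being done by hand. This is only a sketch: the function name retarget_sources is hypothetical and it keeps a .bak copy so that the change can be undone.

```shell
#!/bin/sh
# Sketch only: retarget_sources is a hypothetical helper name.

# Swaps the Linux Mint 11 and Ubuntu 11.04 codenames for their successors,
# keeping the original file alongside with a .bak suffix.
retarget_sources() {
    file=$1
    cp -p "$file" "$file.bak" || return 1
    sed -e 's/katya/lisa/g' -e 's/natty/oneiric/g' "$file.bak" > "$file"
}

# Example (as root): retarget_sources /etc/apt/sources.list
```

Files under /etc/apt/sources.list.d/ may mention the same codenames, so it is worth checking those too.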

Once that had completed, it was time to add the new additions that come with Linux Mint 12 to my system using a combination of apt-get, aptitude and Synaptic; the process took a few cycles. GNOME already was in place from prior experimentation, so there was no need to add this anew. However, I needed to install MGSE to gain the default Linux Mint customisations of GNOME 3. Along with that, I decided to add MATE, the fork of GNOME 2. That necessitated the removal of two old libraries (libgcr0 and libgpp11, if I recall correctly, but Synaptic will tell you what is causing any conflict) using Synaptic. With MGSE and MATE in place, it was time to install LightDM and its Unity greeter to get the Linux Mint login screen. Using GDM wasn’t giving a very smooth visual experience and Ubuntu, the basis of Linux Mint, uses LightDM anyway. Even using the GTK greeter with LightDM produced a clunky login box in front of a garish screen. Configuration tweaks could have improved on this but it seems that using LightDM and Unity greeter is what gives the intended setup and experience.

With all of this complete, the system seemed to be running fine until the occasional desktop freeze occurred with Banshee running. Blaming that, I changed to Rhythmbox instead, though that helped only marginally. While this might be blamed on how I upgraded my system, things seemed to have steadied themselves in the week since then. As a test, I had the music player going for a few hours and there was no problem. With the call for testing of an update to MATE a few days ago, it now looks as if there may have been bugs in the original release of Linux Mint 12. Daily updates have added new versions of MGSE and MATE so that may have something to do with the increase in stability. Even so, I haven’t discounted the possibility of needing to do a fresh installation of Linux Mint 12 just yet. However, if things continue as they are, then it won’t be needed and that’s an upheaval avoided should things go that way. That’s why in situ upgrades are attractive though rolling distros like Arch Linux (these words are being written on a system running this) and LMDE are more so.

Extending ASUS Eee PC Battery Life Without Changing From Ubuntu 11.04

25th May 2011

It might just be my experience of the things but I do tend to take claims about laptop or netbook battery life with a pinch of salt. After all, I have a Toshiba laptop that only lasts an hour or two away from the mains and that runs Windows 7. For a long time, my ASUS Eee PC netbook was looking like that too but a spot of investigation revealed that there was something that I could do to extend the length of time before the battery ran out of charge. For now, the solution would seem to be installing eee-control and here’s what I needed to do for that on Ubuntu 11.04, which has gained a reputation for being a bit of a power hog on netbooks if various tests are to be believed.

Because eee-control is not in the standard Ubuntu repositories, you need to add an extra one before installing it in the usual way. To make this happen, launch Synaptic and find the Repositories entry on the Settings menu and click on it. If there’s no sign of it, then Software Sources (this was missing on my ASUS) needs to be installed using the following command:

sudo apt-get install software-properties-gtk

Once Software Sources opens up after you enter your password, go to the Other Software tab. The next step is to click on the Add button and enter the following into the APT Line box before clicking on the Add Source button:

ppa:eee-control/eee-control

With that done, all that’s needed is to issue the following command before rebooting the machine on completion of the installation:

sudo apt-get install eee-control
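For those who would rather stay in a terminal, the same three steps can be sketched with add-apt-repository, assuming that tool is present on your installation (I used the graphical route myself). The run and install_eee_control names are hypothetical, and by default the commands are only printed.

```shell
#!/bin/sh
# Sketch only: run and install_eee_control are hypothetical helper names, and
# add-apt-repository is assumed to be installed.

# Prints a command by default; set DRY_RUN=0 to execute it for real.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Adds the PPA, refreshes the package lists and installs eee-control.
install_eee_control() {
    run sudo add-apt-repository ppa:eee-control/eee-control &&
    run sudo apt-get update &&
    run sudo apt-get install eee-control
}

# Preview: install_eee_control
# For real: DRY_RUN=0; install_eee_control
```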

When you are logged back in and have your desktop, you’ll notice a new icon in your top panel with the Eee logo, and clicking on this reveals a menu with a number of useful options. Among these is the ability to turn off a number of devices such as the camera, WiFi or card reader. After that, there’s the Preferences entry in the Advanced submenu for turning on such things as setting performance to Powersave for battery-powered operation or smart fan control. The notifications issued to you can be controlled too, as can a number of customisable keyboard shortcuts useful for quickly starting a few applications.

So far, I have seen a largely untended machine last around four hours and that’s around double what I have been getting until now. Of course, what really is needed is a test with constant use to see how it gets on. Even if I see lifetimes of around 3 hours, this still will be an improvement. Nevertheless, being of a sceptical nature, I will not scotch the idea of getting a spare battery just yet.

Adding Microsoft core fonts to Debian

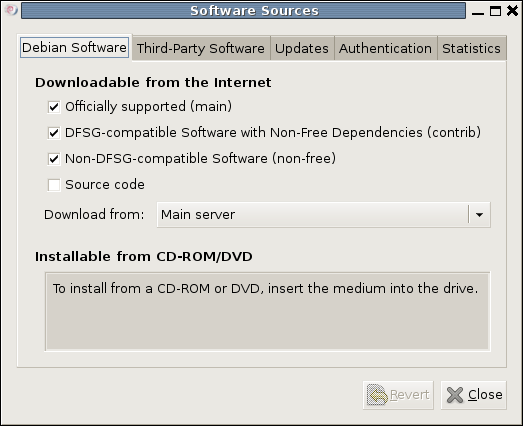

18th June 2009When setting up Ubuntu, I usually add in Microsoft’s core fonts by installing the msttcorefonts package using either Synaptic or apt-get. I am not sure why I didn’t try doing the same thing for Debian until now but it’s equally as feasible. Just pop over to System > Administration > Software Sources and ensure that the check-boxes for the contrib and non-free categories are checked like you see below.

You could also achieve the same end by editing /etc/apt/sources.list and adding the non-free and contrib keywords to make lines look like these before issuing the command apt-get update as root:

deb http://ftp.debian.org/debian/ lenny main non-free contrib

deb-src http://ftp.debian.org/debian/ lenny main non-free contrib

All that you are doing with the manual editing route is performing the same operations that the more friendly front end would do for you anyway. After that, it’s a case of going with the installation method of your choice and restarting Firefox or Iceweasel to see the results.
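The manual edit can also be sketched as a one-line sed substitution. The function name enable_contrib_nonfree is hypothetical, and the pattern assumes that the lines to change end in main, as they do on a default installation.

```shell
#!/bin/sh
# Sketch only: enable_contrib_nonfree is a hypothetical helper name. Only deb
# and deb-src lines that end in "main" are touched.

# Appends "non-free contrib" to matching lines, leaving a .bak copy behind.
enable_contrib_nonfree() {
    file=$1
    sed -i.bak -E 's/^(deb(-src)?[[:space:]].*[[:space:]]main)[[:space:]]*$/\1 non-free contrib/' "$file"
}

# Example (as root):
#   enable_contrib_nonfree /etc/apt/sources.list
#   apt-get update
#   apt-get install msttcorefonts
```

Lines that already list the extra categories no longer end in main, so running the function a second time changes nothing.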

Getting Fedora working in VirtualBox

12th May 2009

After a hiatus induced by disk errors seen on start up, I have gone back to having a go with Fedora again. In the world of real PCs, its place has been taken by Debian so virtualisation was brought into play for my most recent explorations. I could have gone with 10, the current stable version, but curiosity got the better of me and I downloaded a pre-release version of 11 instead.

On my way to getting that instated, I encountered two issues. The first of these was boot failure with a message like this:

FATAL: INT18: BOOT FAILURE

As it turned out, that was easily sorted. I was performing the installation from a DVD image mounted as if it were a real DVD and laziness or some other similar reason had me rebooting with it still mounted. There is an option to load the hard disk variant but it wasn’t happening, resulting in the message that’s above. A complete shut down and replacement of the virtual DVD with a real one set matters to rights.

The next trick was to get Guest Additions added but Fedora’s 2.6.29 kernel was not what VirtualBox was expecting and it demanded the same ransom as Debian: gcc, make and kernel header files. Unfamiliarity had me firing up Fedora’s software installation software only to find that Synaptic seems to beat it hands down in the search department. Turning to Google dredged up the following command to be executed and that got me further:

yum install binutils gcc make patch libgomp glibc-headers glibc-devel kernel-headers kernel-devel

However, the installed kernel headers didn’t match the kernel but a reboot fixed that once the kernel was updated. Then, the Guest Additions installed themselves as intended with necessary compilations to match the installed kernel.
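A quick way to spot the mismatch before running the Guest Additions installer is to see whether the running kernel has a build tree under /lib/modules. This is a sketch with a hypothetical function name, kernel_build_tree; the second argument exists only so that the check can be tried against a test directory.

```shell
#!/bin/sh
# Sketch only: kernel_build_tree is a hypothetical helper name.

# Prints "ready" when /lib/modules/<release>/build resolves to a directory,
# which is where module builds look for the kernel headers, else "missing".
kernel_build_tree() {
    release=${1:-$(uname -r)}
    root=${2:-}
    if [ -d "$root/lib/modules/$release/build" ]; then
        echo "ready"
    else
        echo "missing"
    fi
}

# Example: kernel_build_tree
```

A result of missing after installing the development packages usually points to the situation described here, where the headers belong to a newer kernel than the one that is running.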

The procedures that I have described here would, it seems, work for Fedora 10 and they certainly have bequeathed me a working system. I have had a little poke and a beta of Firefox 3.5 is included and I saw signs of OpenOffice 3.1 too. So, it looks very cutting edge, easily so in comparison with Ubuntu and Debian. Apart from one or two niggles, it seems to run smoothly too. Firstly, don’t use the command shutdown -h now to close the thing down or you’ll cause VirtualBox to choke. Using the usual means ensures that all goes well, though. The other irritation is that it doesn’t connect to the network without a poke from me. Whether SELinux is to blame for this or not, I cannot tell but it might be something for consideration by the powers that be. That these are the sorts of things that I have noticed should itself be telling you that I have no major cause for complaint. I have mulled over a move to Fedora in the past and that option remains as strong as ever but Ubuntu is not forcing me to look at an alternative and the fact that I know how to achieve what I need is resulting in inertia anyway.

Ubuntu upgrades: do a clean installation or use Update Manager?

9th April 2009

Part of some recent “fooling” brought on by the investigation of what turned out to be a duff DVD writer was a fresh installation of Ubuntu 8.10 on my main home PC. It might have brought on a certain amount of upheaval but it was nowhere near as severe as that following the same sort of thing with a Windows system. A few hours was all that was needed, but the question remains as to whether it is better to do an upgrade every time a new Ubuntu release is unleashed on the world or to go for a complete virgin installation instead. With Ubuntu 9.04 in the offing, that question takes on a more immediate significance than it otherwise might do.

Various tricks make the whole reinstallation idea more palatable. For instance, many years of Windows usage have taught me the benefits of separating system and user files. The result is that my home directory lives on a different disk to my operating system files. Add to that the experience of being able to reuse that home drive across different Linux distros and even swapping from one distro to another becomes feasible. From various changes to my secondary machine, I can vouch that this works for Ubuntu, Fedora and Debian; the latter is what currently powers the said PC. You might have to use superuser powers to attend to ownership and access issues but the portability is certainly there and it applies to anything kept on other disks too.

Naturally, there’s always the possibility of losing programs that you have had installed but losing the clutter can be liberating too. However, assembling a script made up of one or more apt-get install commands can allow you to get many things back at a stroke. For example, I have a test web server (Apache/MySQL/PHP/Perl) set up so this would be how I’d get everything back in place before beginning further configuration. It might be no bad idea to back up your collection of software sources either; I have yet to add all of the ones that I have been using back into Synaptic. Then there are closed source packages such as VirtualBox (yes, I know that there is an open source edition) and Adobe Reader. After reinstating the former, all my virtual machines were available for me to use again without further ado. Restoring the latter allowed me to grab version 9.1 (probably more secure anyway) and it inveigles itself into Firefox now too, so the number of times that I need to go through the download shuffle before seeing the contents of a PDF is much reduced, though not completely eliminated, by the Windows-like ability to see a PDF loaded in a browser tab. Moving from software to hardware for a moment, it looks like any bespoke actions such as my activating an Epson Perfection 4490 Photo scanner need to be repeated but that was all that I needed to do. Getting things back into order is not so bad but you need to allow a modicum of time for this.
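Such a script need not be long. As a sketch, the following turns a plain text list of package names into a single apt-get install command; the function name install_command and the idea of keeping the names in a file with one per line are my own assumptions.

```shell
#!/bin/sh
# Sketch only: install_command is a hypothetical helper, and the package list
# is assumed to hold one package name per line.

# Prints an apt-get install command for every package named in the list,
# skipping blank lines and lines that start with #.
install_command() {
    list=$1
    pkgs=$(grep -v -E '^[[:space:]]*(#|$)' "$list" | tr '\n' ' ')
    pkgs=${pkgs% }    # drop the trailing space left by tr
    [ -n "$pkgs" ] || return 1
    printf 'sudo apt-get install %s\n' "$pkgs"
}

# Example: install_command ~/packages.txt
```

Printing the command instead of running it leaves a chance to check the list before anything gets installed.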

What I have discussed so far are what might be categorised as the common or garden aspects of a clean installation but I have seen some behaviours that make me wonder if the usual Ubuntu upgrade path is sufficiently complete in its refresh of your system. The counterpoint to all of this is that I may not have been looking for some of these things before now. That may apply to my noticing that DSLR support seems to be better with my Canon and Pentax cameras both being picked up and mounted for me as soon as they are connected to a PC, the caveat being that they are themselves powered on for this to happen. Another surprise that may be new is that the BBC iPlayer’s Listen Again works without further work from the user, a very useful development. It very clearly wasn’t that way before I carried out the invasive means. My previous tweaking might have prevented the in situ upgrade from doing its thing but I do see the point of not upsetting people’s systems with an overly aggressive update process, even if it means that some advances do not make themselves known.

So what’s my answer regarding which way to go once Ubuntu Jaunty Jackalope appears? For the sake of avoiding initial disruption, I’d be inclined to go down the Update Manager route first while reserving the right to do a fresh installation later on. All in all, I am left with the gut feeling that the jury is still out on this one.

Getting VirtualBox working on Ubuntu after a kernel upgrade

27th July 2008

In previous posts, I have talked about getting VMware Workstation back on its feet again after a kernel upgrade. It also seems that VirtualBox is prone to the same sort of affliction. However, while VMware Workstation fails to start at all, VirtualBox at least starts itself even if it cannot get a virtual machine going and generates errors instead.

My usual course of action is to fire up Synaptic and install the drivers for the relevant kernel. Looking for virtualbox-ose-modules-[kernel version and type] and installing that usually resolves the problem. For example, at the time of writing, the latest file available for my system would be virtualbox-ose-modules-2.6.24-19-generic. If you are a command line fan, the command for this would be:

sudo apt-get install virtualbox-ose-modules-2.6.24-19-generic
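Since the package name ends with the kernel version and type, it can be derived from the running kernel instead of being typed out. A minimal sketch, with vbox_modules_pkg as a hypothetical function name:

```shell
#!/bin/sh
# Sketch only: vbox_modules_pkg is a hypothetical helper name.

# Prints the modules package name for a kernel release, defaulting to the
# release reported by uname -r for the running kernel.
vbox_modules_pkg() {
    release=${1:-$(uname -r)}
    printf 'virtualbox-ose-modules-%s\n' "$release"
}

# Example: sudo apt-get install "$(vbox_modules_pkg)"
```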

The next thing to do would be to issue the command to start the vboxdrv service and you’d be all set:

sudo /etc/init.d/vboxdrv start

There is one point of weakness (an Achilles heel, if you like) with all of this: the relevant modules need to be available in the first place and I hit a glitch after updating the kernel to 2.6.24-20 when they weren’t; I do wonder why Canonical fail to keep both in step with one another and why the new kernel modules don’t come through the updates automatically either. However, there is a way around this too. That means installing virtualbox-ose-source via either Synaptic or the command line:

sudo apt-get install virtualbox-ose-source

The subsequent steps involve issuing more commands to perform a reinstallation from the source code:

sudo m-a prepare

sudo m-a auto-install virtualbox-ose

Once these are complete, the next step is to start the vboxdrv service as described earlier and to add yourself to the vboxusers group if you’re still having trouble:

sudo adduser [your username] vboxusers
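Before reaching for adduser, it is possible to check whether the membership is already in place. The following is a sketch with a hypothetical function name, user_in_group, built on the standard id command.

```shell
#!/bin/sh
# Sketch only: user_in_group is a hypothetical helper name.

# Prints "yes" if the user belongs to the named group, otherwise "no".
user_in_group() {
    user=$1
    group=$2
    for g in $(id -nG "$user"); do
        if [ "$g" = "$group" ]; then
            echo "yes"
            return 0
        fi
    done
    echo "no"
}

# Example: user_in_group "$(id -un)" vboxusers
```

A newly added group membership only takes effect for sessions started afterwards, so log out and back in before trying VirtualBox again.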

The source code installation option certainly got me up and running again and I’ll be keeping it on hand for use should the situation raise its head again.

Another alpha release of Ubuntu 8.10 is out

26th July 2008

It’s probably about time that I drew attention to Ubuntu’s The Fridge. While the strap line says “News for Human Beings”, it seems to be the place to find out about development releases of the said Linux distribution. Today, there’s a new alpha release of Intrepid Ibex (8.10) out and they have the details. As for me, I’ll stick to updating my 8.10 installation using Synaptic rather than going through the whole risky process of a complete installation following a download of the CD image. Saying that, it would be nice to see the System Monitor indicating which alpha release I have. I didn’t notice anything very dramatic after I did the update, apart maybe from the hiding away of boot messages at system startup and shutdown or the appearance of a button for changing display settings in the panel atop the desktop.

A fallback installation routine?

9th November 2007

In a previous sustained spell of Linux meddling, the following installation routine was one that I encountered rather too often when RPMs didn’t do what I required of them (having a SUSE distro in a world dominated by a Red Hat standard didn’t make things any easier…):

tar xzvf progname.tar.gz

cd progname

The first line extracts from a gzipped tarball and the second one changes into the new directory created by the extraction. For bzipped files use:

tar xjvf progname.tar.bz2
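The only difference between the two commands is the z or j letter, which can be picked from the file name. A small sketch, with tar_flags as a hypothetical helper name:

```shell
#!/bin/sh
# Sketch only: tar_flags is a hypothetical helper name.

# Prints the tar option letters that suit the archive's file extension.
tar_flags() {
    case $1 in
        *.tar.gz|*.tgz)   echo xzvf ;;
        *.tar.bz2|*.tbz2) echo xjvf ;;
        *) echo "unrecognised archive type: $1" >&2; return 1 ;;
    esac
}

# Example: tar "$(tar_flags progname.tar.gz)" progname.tar.gz
```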

The next three lines configure, compile and install the package, with the last one running its command as root in its own shell.

./configure

make

su -c "make install"
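Because each step depends on the one before it, the three commands can be wrapped so that a failure stops the sequence and says where it happened. This is a sketch only: the function name build_and_install is hypothetical, and the install command is quoted so that su receives it as a single string.

```shell
#!/bin/sh
# Sketch only: build_and_install is a hypothetical helper name. It is meant to
# be run from inside the extracted source directory.

# Runs the three steps in order, stopping at the first failure. The return
# code says which step failed: 1 for configure, 2 for make, 3 for install.
build_and_install() {
    ./configure || { echo "configure failed" >&2; return 1; }
    make || { echo "make failed" >&2; return 2; }
    su -c "make install" || { echo "install failed" >&2; return 3; }
}

# Example: cd progname && build_and_install
```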

Yes, the procedure is a bit convoluted but it would have been fine if it always worked. My experience was that the process was a far from foolproof one. For instance, an unsatisfied dependency is all that is needed to stop you in your tracks. Attempting to install a GNOME application on a KDE-based system is as good a way to encounter this result as any. Other horrid errors also played havoc with hopeful plans from time to time.

It shouldn’t surprise you to find that I will be staying away from the compilation/installation business with my main Ubuntu system. Synaptic Package Manager and its satisfactory dependency resolution fulfill my needs well and there is the Update Manager too; I’ll be leaving it to Canonical to do the testing and make the decisions regarding what is ready for my PC as they maintain their software repositories. My past tinkering often created a mess and I’ll be leaving that sort of experimentation for the safe confines of a virtual machine from now on…