A look at Ubuntu GNOME 13.10

12th October 2013

With its final release being near at hand, I decided to have a look at the beta release of Ubuntu GNOME 13.10 to get a sense of what might be coming. A misstep along the way had me inadvertently download and install the 64-bit edition of 13.04 into a VirtualBox virtual machine. The intention to update that to its soon to be released successor was scuppered by instability so I never did get to try out an in situ upgrade to 13.10. What I had in mind was to issue the following command:

gksu update-manager -d

However, I found another one when considering how Ubuntu Server might be upgraded without the GUI application that is the Update Manager. To update to a development version, the following command is what you need:

sudo do-release-upgrade -d

To upgrade to a final release of a new version of Ubuntu, drop the -d switch from the above to use the following:

sudo do-release-upgrade

There is one further option that isn’t recommended for moving between Ubuntu versions but I use it to get updates such as new kernel subversions that are released:

sudo apt-get dist-upgrade

Rather than trying out the above, I downloaded the latest ISO image for the beta release of Ubuntu GNOME 13.10 and installed that onto a VM instead. Though it is the 32 bit version of the distro that is installed on my main home PC, it has been the 64 bit version that I have been trying. So far, that seems to be behaving itself even if it feels a little sluggish but that could be down to the four year old PC that hosts the virtual machine. For a while, I have been playing with the possibility of an upgrade involving an Intel Core i5 4670K CPU and 16 GB of RAM (useful for running multiple virtual machines at a time) along with any motherboard that supports those so looking at a 64 bit operating system has its uses.

The Linux kernel may be 3.11 but that is not my biggest concern. Neither is the fact that LibreOffice 4.1.2.3 was included and GIMP wasn’t, especially when that could be added easily anyway and it is version 2.8.6 that you get. The move to GNOME Shell 3.8 was what drew me to seeing what was coming because I have been depending on a number of extensions. As with WordPress and plugins, GNOME Shell seems to have a tempestuous relationship with some of its extensions and I wanted to see which ones still worked. There also has been a change to the backstage application view in that you either get all installed applications displayed when you browse them or you have to start typing the name of the one you want to select it. Losing the categorical view that has been there until GNOME Shell 3.6 is a step backwards and I hope that version 3.10 has seen some sort of a reinstatement. There is a way to add these categories and the result is not as it once was either; also, it shouldn’t be necessary for anyone to dive into a system’s innards to address things like this. With all the constant change, it is little wonder that Cinnamon has become a standalone entity with the release of its version 2.0 and that Debian’s toyed with not going with GNOME for its latest version (7.1 at the time of writing and it picked a good GNOME Shell version in 3.4).

Having had a look at other distributions that already have GNOME Shell 3.8, I knew that a few of my extensions worked with it. The list includes Frippery Bottom Panel, Frippery Move Clock, Places Status Indicator, Removable Drive Menu, Remove Rounded Corners (not really needed with the GNOME Shell theme that I use, Elementary Luna 3.4, but I retain it anyway), Show Desktop Button, User Themes and Ignore_Request_Hide_Titlebar. Because of the changes to the backstage view, I added Frippery Applications Menu in preference to Applications Menu because I have found that to be unstable. Useful new discoveries have included Curtains Up and GNOME Shell Open Terminal while Shell Restart User Menu Entry has made a return and found a use this time around too.

There have been some extensions that were not updated to work with GNOME Shell 3.8 that I have got working. In some cases, it was as simple as updating the metadata.json file for an extension by adding the new version numbers of 3.8 and 3.8.4 to the list associated with the shell version property. All extensions are to be found in the .local/share/gnome-shell/extensions location in your home directory and each has a dedicated folder containing the aforementioned file.
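To find candidates for that metadata.json edit, something like the following might help. It is only a rough sketch: the function name and its arguments are my own invention for illustration, and it does a plain text match for the quoted version string rather than parsing the JSON properly.

```shell
#!/bin/sh
# Hypothetical helper: list extension folders whose metadata.json does not
# mention the given GNOME Shell version string. This is a crude fixed-string
# match on the quoted version (e.g. "3.8"), not a real JSON parse.
# $1 = version to look for, $2 = extensions directory (optional).
list_unported_extensions() {
    version="$1"
    ext_dir="${2:-$HOME/.local/share/gnome-shell/extensions}"
    for meta in "$ext_dir"/*/metadata.json; do
        # Skip the unexpanded glob when no extension is installed.
        [ -f "$meta" ] || continue
        if ! grep -qF "\"$version\"" "$meta"; then
            # Print the folder name, which is the extension's identifier.
            basename "$(dirname "$meta")"
        fi
    done
}
```

Running `list_unported_extensions 3.8` should then name the folders whose metadata.json may need the version added by hand.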

With others, it was a matter of looking in the Looking Glass (execute lg in the box that ALT + F2 brings up on your screen to access this) and seeing what error messages were to be found in there before attempting to correct these in either the extensions’ extension.js files or whatever JavaScript (*.js) file was causing the problem. With either or both of these remedies, I managed to port the four extensions below to GNOME Shell 3.8. In fact, you can download these zip files and install them yourself to see how you get on with them.

Advanced Settings in User Menu

There is a Remove Panel App Menu that works with GNOME Shell 3.8 but I found that it got rid of the Places menu instead of the panel’s App Menu so I tried porting the older extension to see if it behaved itself and it does. With these in place, I have bent Ubuntu GNOME 13.10 to my will ahead of its final release next week and that includes customising Nautilus too. Other than a new version of GNOME Shell, it looks as if it will come with less in the way of drama and a breather like that is no bad thing given that personal computing continues to remain in a state of flux these days.

Upgrading from Windows 7 to Windows 8 in a VMware Virtual Machine

1st November 2012

Though my main home PC runs Linux Mint, I do like to have the facility to use Windows software from time to time and virtualisation has allowed me to continue doing that. For a good while, it was a Windows 7 guest within a VirtualBox virtual machine and, before that, one running Windows XP fulfilled the same role. However, it did feel as if things were running slower in VirtualBox than once might have been the case and I jumped ship to VMware Player. It may be proprietary and closed source but it is free of charge and has been doing what was needed. A subsequent recent upgrade of video driver on the host operating system allowed the enabling of a better graphical environment in the Windows 7 guest.

Instability

However, there were issues with stability and I lost the ability to flit from the VM window to the Linux desktop at will with the system freezing on me and needing a reboot. Working in Windows 7 using full screen mode avoided this but it did feel as if I was constrained to working in a Windows machine whenever I did so. The graphics performance was imperfect too with screen refreshing being very blocky with some momentary scrambling whenever I opened the Start menu. Others would not have been as patient with that as I was though there was the matter of an expensive Photoshop licence to be guarded too.

In hindsight, a bit of pruning could have helped. An example would have been driver housekeeping in the form of removing VirtualBox Guest Additions because they could have been conflicting with their VMware counterparts. For some reason, those thoughts never entered my mind and I was pondering another more expensive option instead.

Considering NAS & Windows/Linux Networking

That would have taken the form of setting aside a PC for running Windows 7 and having a NAS for sharing files between it and my Linux system. In fact, I did get to exploring what a four bay QNAP TS-412 would offer me and realised that you cannot put normal desktop hard drives into devices like that. For a while, it looked as if it would be a matter of getting drives bundled with the device or acquiring enterprise grade disks so as to maintain the required continuity of operation. The final edition of PC Plus highlighted another option though: the Western Digital Red range. These are part way between desktop and enterprise classifications and have been developed in association with NAS makers too.

Looking at the NAS option certainly became an education but it has exited any sort of wish list that I have. After all, there is the cost of such a setup and it’s enough to get me asking if I really need such a thing. The purchase of a Netgear FS 605 ethernet switch would have helped incorporate it but there has been no trouble sorting alternative uses for it since it bumps up the number of networked devices that I can have, never a bad capability to have. As I was to find, there was a less expensive alternative that became sufficient for my needs.

In-situ Windows 8 Upgrade

Microsoft have been making available evaluation copies of Windows 8 Enterprise that last for 90 days before expiring. One that is in my hands has been running faultlessly in a VMware virtual machine for the past few weeks. That made me wonder if upgrading from Windows 7 to Windows 8 would help with my main Windows VM problems. Being a curious risk-taking type, I decided to answer the question for myself using the £24.99 Windows Pro upgrade offer that Microsoft have been running for those not needing a disk up front. Those who do need one pay £49.99, but you can get a disk afterwards for an extra £12.99 and £3.49 postage if you wish, a slightly cheaper option. There also was a time cost in that it occupied a lot of a weekend on me but it seems to have done what was needed so it was worth the outlay.

Given the element of risk, Photoshop was deactivated to be on the safe side. That wasn’t the only pre-upgrade action that was needed because the Windows 8 Pro 32-bit upgrade needs at least 16 GB of free disk space before it will proceed. Of course, there was the matter of downloading the installer from the Microsoft website too. This took care of system evaluation and paying for the software as well as the actual upgrade itself.

The installation took a few hours with virtual machine reboots along the way. Naturally, the licence key was needed too as well as the selection of a few options though there weren’t many of these. Being able to carry over settings from the pre-existing Windows 7 instance certainly helped with this and with making the process smoother too. No software needed reinstatement and it doesn’t feel as if the system has forgotten very much at all, a successful outcome.

Post-upgrade Actions

Just because I had a working Windows 8 instance didn’t mean that there wasn’t more to be done. In fact, it was the post-upgrade sorting that took up more time than the actual installation. For one thing, my digital mapping software wouldn’t work without .Net Framework 3.5 and turning on the operating system feature from the Control Panel fell over at the point where it was being downloaded from the Microsoft Update website. Even removing Avira Internet Security after updating it to the latest version had no effect, and Avira had been flagged during the Windows 8 system evaluation process. The solution was to mount the Windows 8 Enterprise ISO installation image that I had and issue the following command from a command prompt running with administrative privileges (it’s all one line though that’s wrapped here):

dism.exe /online /enable-feature /featurename:NetFX3 /Source:d:\sources\sxs /LimitAccess

For the sake of assurance regarding compatibility, Avira has been replaced with Trend Micro Titanium Internet Security. The Avira licence won’t go to waste since I have another home in mind for it. Removing Avira without crashing Windows 8 proved impossible though and necessitated booting Windows 8 into Safe Mode. Because of much faster startup times, that cannot be achieved with a key press at the appropriate moment because the time window is too short now. One solution is to set the Safe Boot tickbox in the Boot tab of Msconfig (or System Configuration as it otherwise calls itself) before the machine is restarted. There may be others but this was the one that I used. With Avira removed, clearing the same setting and rebooting restored normal service.

Dealing with a Dual Personality

One observer has stated that Windows 8 gives you two operating systems for the price of one: the one in the Start screen and the one on the desktop. Having got to wanting to work with one at a time, I decided to make some adjustments. Adding Classic Shell got me back a Start menu and I left out the Windows Explorer (or File Explorer as it is known in Windows 8) and Internet Explorer components. Though Classic Shell will present a desktop like what we have been getting from Windows 7 by sweeping the Start screen out of the way for you, I found that this wasn’t quick enough for my liking so I added Skip Metro Suite to do this and it seemed to do that a little faster. The tool does more than sweeping the Start screen out of the way but I have switched off these functions. Classic Shell also has been configured so the Start screen can be accessed with a press of the Windows key but you can have it as you wish. It has been updated too so that booting into the desktop should be faster now. As for me, I’ll leave things as they are for now. Even the possibility of using Windows’ own functionality to go directly to the traditional desktop will be left untested while things are left to settle. Tinkering can need a break.

Outcome

After all that effort, I now have a seemingly more stable Windows virtual machine running Windows 8. Flitting between it and other Linux desktop applications has not caused a system freeze so far and that was the result that I wanted. There now is no need to consider having separate Windows and Linux PC’s with a NAS for sharing files between them so that option is well off my wish-list. There are better uses for my money.

Not everyone has had my experience though because I saw a report that one user failed to update a physical machine to Windows 8 and installed Ubuntu instead; they were a Linux user anyway even if they used Fedora more than Ubuntu. It is possible to roll back from Windows 8 to the previous version of Windows because there is a windows.old directory left primarily for that purpose. However, that may not help you if you have a partially operating system that doesn’t allow you to do just that. In time, I’ll remove it using the Disk Clean-up utility by asking it to remove previous Windows installations or running File Explorer with administrator privileges. Somehow, the former approach sounds the safer.

What About Installing Afresh?

While there was a time when I went solely for upgrades when moving from one version of Windows to the next, the annoyance of the process got to me. If I had known that installing the upgrade twice onto a computer with a clean disk would suffice, it would have saved me a lot. Starting from Windows 95 (from the days when you got a full installation disk with a PC and not the rescue media that we get now) and moving through a sequence of successors not only was time consuming but it also revealed the limitations of the first in the series when it came to supporting more recent hardware. It was enough to have me buying the full retail editions of Windows XP and Windows 7 when they were released; the latter got downloaded directly from Microsoft. These were retail versions that you could move from one computer to another but Windows 8 will not be like that. In fact, you will need to get its System Builder edition from a reseller and that can only be used on one machine. It is the merging of the former retail and OEM product offerings.

What I have been reading is that the market for full retail versions of Windows was not a big one anyway. However, it was how I used to work as you have read above and it does give you a fresh system. Most probably get Windows with a new PC and don’t go building them from scratch like I have done for more than a decade. Maybe the System Builder version would apply to me anyway and it appears to be intended for virtual machine use as well as on physical ones. More care will be needed with those licences by the looks of things and I wonder what needs not to be changed so as not to invalidate a licence. After all, making a mistake might cost between £75 and £120 depending on the edition.

Final Thoughts

So far Windows 8 is treating me well and I have managed to bend it to my will too, always a good thing to be able to say. In time, it might be that a System Builder copy could need buying yet but I’ll leave well alone for now. Though I needed new security software, the upgrade still saved me money over a hardware solution to my home computing needs and I have a backup disk on order from Microsoft too. That I have had to spend some time settling things was a means of learning new things for me but others may not be so patient and, with Windows 7 working well enough for most, you have to ask if it’s only curious folk like me who are taking the plunge. Still, the dramatic change has re-energised the PC world in an era when smartphones and tablets have made so much of the running recently. That too is no bad thing because an unchanging technology is one that dies and there are times when big changes are needed, as much as they upset some folk. For Microsoft, this looks like one of them and it’ll be interesting to see where things go from here for PC technology.

An in situ upgrade to Linux Mint 12

4th December 2011

Though it isn’t the recommended approach, I have ended up upgrading to Linux Mint 12 from Linux Mint 11 using an in situ route. Having attempted this before with a VirtualBox hosted installation, I am well aware of the possibility of things going wrong. Then, a full re-installation was needed to remedy the situation. With that in mind, I made a number of backups in the case of an emergency fresh installation of the latest release of Linux Mint. Apache and VirtualBox configuration files together with MySQL backups were put where they could be retrieved should that be required. The same applied to the list of installed packages on my system. So far, I haven’t needed to use these, but there is no point in taking too many chances.
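For what it’s worth, the configuration file part of that backup could be scripted along the following lines. This is a sketch under assumptions of my own: the function name, the archive naming and the choice of tar are illustrative and not a record of what was actually done.

```shell
#!/bin/sh
# Hypothetical helper: archive the given paths into
# DEST_DIR/configs-YYYYMMDD.tar.gz and print the archive's name.
# $1 = destination directory, remaining arguments = paths to save.
backup_configs() {
    dest_dir="$1"
    shift
    archive="$dest_dir/configs-$(date +%Y%m%d).tar.gz"
    # tar strips the leading "/" from absolute paths and says so on stderr.
    tar -czf "$archive" "$@" && printf '%s\n' "$archive"
}
```

A call such as `backup_configs /media/backup /etc/apache2` would cover Apache on a Debian-style layout (both paths are examples only). The package list and databases need their own commands, for instance `dpkg --get-selections > packages.list` and `mysqldump --all-databases > all.sql`.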

The first step in an in-situ Linux Mint upgrade is to edit /etc/apt/sources.list. In the repository location definitions, any reference to katya (11) was changed to lisa (for 12) and the same applied to any appearance of natty (Ubuntu 11.04) which needed to become oneiric (Ubuntu 11.10). With that done, it was time to issue the following command (all one line even if it is broken here):

sudo apt-get update && sudo apt-get upgrade && sudo apt-get dist-upgrade
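The code name changes in /etc/apt/sources.list described above can be made by hand in any editor, but a sed-based sketch of the same substitution follows for anyone who prefers that. The function name is hypothetical and the backup suffix is my own choice; it needs running as root when pointed at the real file.

```shell
#!/bin/sh
# Hypothetical helper: swap the Linux Mint 11 / Ubuntu 11.04 code names
# for their successors in an APT sources file.
# $1 = file to rewrite; the original is kept alongside it as FILE.bak.
retarget_sources() {
    file="$1"
    cp "$file" "$file.bak"
    # katya -> lisa (Linux Mint), natty -> oneiric (Ubuntu); suffixed
    # suites such as natty-updates are covered by the same substitution.
    sed -e 's/katya/lisa/g' -e 's/natty/oneiric/g' "$file.bak" > "$file"
}
```

It is worth comparing the result with the .bak copy before running apt-get update, since a blunt text substitution like this will also change any comment that happens to contain either name.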

Once that had completed, it was time to add the new additions that come with Linux Mint 12 to my system using a combination of apt-get, aptitude and Synaptic; the process took a few cycles. GNOME already was in place from prior experimentation, so there was no need to add this anew. However, I needed to instate MGSE to gain the default Linux Mint customisations of GNOME 3. Along with that, I decided to add MATE, the fork of GNOME 2. That necessitated the removal of two old libraries (libgcr0 and libgpp11, if I recall correctly but it will tell you what is causing any conflict) using Synaptic. With MGSE and MATE in place, it was time to install LightDM and its Unity greeter to get the Linux Mint login screen. Using GDM wasn’t giving a very smooth visual experience and Ubuntu, the basis of Linux Mint, uses LightDM anyway. Even using the GTK greeter with LightDM produced a clunky login box in front of a garish screen. Configuration tweaks could have improved on this but it seems that using LightDM and Unity greeter is what gives the intended setup and experience.

With all of this complete, the system seemed to be running fine until the occasional desktop freeze occurred with Banshee running. Blaming that, I changed to Rhythmbox instead, though that helped only marginally. While this might be blamed on how I upgraded my system, things seemed to have steadied themselves in the week since then. As a test, I had the music player going for a few hours and there was no problem. With the call for testing of an update to MATE a few days ago, it now looks as if there may have been bugs in the original release of Linux Mint 12. Daily updates have added new versions of MGSE and MATE so that may have something to do with the increase in stability. Even so, I haven’t discounted the possibility of needing to do a fresh installation of Linux Mint 12 just yet. However, if things continue as they are, then it won’t be needed and that’s an upheaval avoided should things go that way. That’s why in situ upgrades are attractive though rolling distros like Arch Linux (these words are being written on a system running this) and LMDE are more so.

On Upgrading to Linux Mint 11

31st May 2011

For a Linux distribution that focuses on user-friendliness, it does surprise me that Linux Mint offers no seamless upgrade path. In fact, the underlying philosophy is that upgrading an operating system is a risky business. However, I have been doing in-situ upgrades with both Ubuntu and Fedora for a few years without any real calamities. A mishap with a hard drive that resulted in lost data in the days when I mainly was a Windows user places this into sharp relief. These days, I am far more careful but thought nothing of sticking a Fedora DVD into a drive to move my Fedora machine from 14 to 15 recently. Apart from a few rough edges and the need to get used to GNOME 3 together with making a better fit for me, there was no problem to report. The same sort of outcome used to apply to those online Ubuntu upgrades that I was accustomed to doing.

The recommended approach for Linux Mint is to back up your package lists and your data before the upgrade. Doing the former is a boon because it automates adding the extras that a standard CD or DVD installation doesn’t do. While I did do a little backing up of data, it wasn’t total because I know how to identify my drives and take my time over things. Apache settings and the contents of MySQL databases were my main concern because of where these are stored.

When I was ready to do so, I popped a DVD in the drive and carried out a fresh installation into the partition where my operating system files are kept. Being a Live DVD, I was able to set up any drive and partition mappings with reference to Mint’s Disk Utility. What didn’t go so well was the GRUB installation, and it was due to the choice that I made on one of the installation screens. Despite doing an installation of version 10 just over a month ago, I had overlooked an intricacy of the task and placed GRUB on the operating system files partition rather than at the top level for the disk where it is located. Instead of trying to address this manually, I took the easier and more time-consuming step of repeating the installation like I did the last time. If there was a graphical tool for addressing GRUB problems, I might have gone for that instead, but am left wondering at why there isn’t one included at all. Maybe it’s something that the people behind GRUB should consider creating unless there is one out there already about which I know nothing.

With the booting problem sorted, I tried logging in only to find a problem with my desktop that made the system next to unusable. It was back to the DVD and I moved many of the configuration files and folders (the ones with names beginning with a “.”) from my home directory in the belief that there might have been an incompatibility. That action gained me a fully usable desktop environment but I now think that the cause of my problem may have been different to what I initially suspected. Later I discovered that ownership of files in my home area elsewhere wasn’t associated with my user ID though there was no change to it during the installation. As it happened, a few minutes with the chown command were enough to sort out the permissions issue.
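Before reaching for chown, it can help to see what is affected. The following is a minimal sketch of such a check; the function name is hypothetical and the default owner is simply whoever runs it.

```shell
#!/bin/sh
# Hypothetical helper: print every entry under a directory that is not
# owned by the given user.
# $1 = directory to scan, $2 = expected owner (defaults to current user).
foreign_files() {
    dir="$1"
    owner="${2:-$(id -un)}"
    find "$dir" ! -user "$owner"
}
```

If `foreign_files "$HOME"` prints anything unexpected, a command like `sudo chown -R "$(id -un)" /path/to/folder` on the affected folder (the path being a placeholder) is the kind of fix that worked here.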

The restoration of the extra software that I had added beyond what standardly gets installed took its share of time but the use of a previously prepared list made things so much easier. It didn’t work smoothly because some packages couldn’t be found the first time around, so another pass was needed. Nevertheless, that is nothing compared to the effort needed to do the same thing by issuing one installation command at a time. Once the usual distribution software updates were in place, all that was left was to update VirtualBox to the latest version, install a Citrix client and add a PHP plugin to NetBeans. Then, next to everything was in place for me.
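As an illustration of working from a prepared package list, here is a small sketch that assumes the list was saved in the format produced by `dpkg --get-selections`; that format is my assumption, since a list made with Mint’s own backup tool may look different. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the names of packages marked "install" in a
# selections file (one "name<whitespace>state" pair per line).
# $1 = path to the saved list.
selected_packages() {
    awk '$2 == "install" { print $1 }' "$1"
}
```

The output can be piped to the installer, for example `selected_packages packages.list | xargs sudo apt-get install -y`. Note that apt-get stops when it cannot find a package, so any missing names need removing from the list before a second pass.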

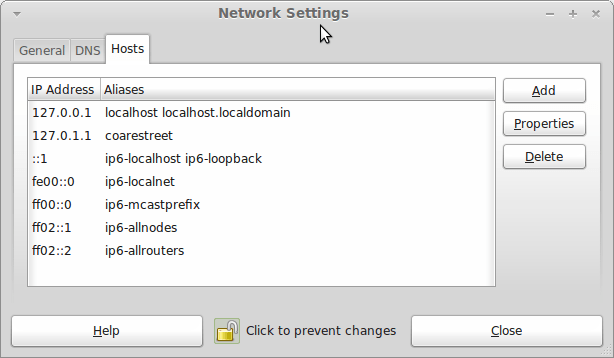

Next, Apache settings were restored as were the databases that I used for offline web development. That nearly was all that was needed to get offline websites working but for the need to add an alias for localhost.localdomain. That required installation of the Network Settings tool so that I could add the alias in its Hosts tab. With that out of the way, the system had been settled in and was ready for real work.
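A quick way to check whether such an alias is already present is to look in the hosts file, which is where this kind of setting normally ends up. The sketch below makes that assumption, and the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if NAME appears as a host name or alias on
# any non-comment line of a hosts file.
# $1 = name to look for, $2 = hosts file (defaults to /etc/hosts).
has_host_alias() {
    name="$1"
    hosts_file="${2:-/etc/hosts}"
    awk -v n="$name" '
        /^[ \t]*#/ { next }              # skip whole-line comments
        {
            for (i = 2; i <= NF; i++) {  # field 1 is the IP address
                if ($i ~ /^#/) break     # stop at a trailing comment
                if ($i == n) found = 1
            }
        }
        END { exit found ? 0 : 1 }
    ' "$hosts_file"
}
```

For example, `has_host_alias localhost.localdomain && echo present` reports whether the alias is in place.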

In light of some of the glitches that I saw, I can understand the level of caution regarding a more automated upgrade process on the part of the Linux Mint team. Even so, I still wonder if the more manual alternative that they have pursued brings its own problems in the form of those that I met. The fact that the whole process took a few hours in comparison to the single hour taken by the in-situ upgrades that I mentioned earlier is another consideration that makes you wonder if it is all worth it every six months or so. Saying that, there is something to letting a user decide when to upgrade rather than luring one along to a new version, a point that is more than pertinent in light of the recent changes made to Ubuntu and Fedora. Whichever approach you care to choose, there are arguments in favour as well as counterarguments too.

A lot of work ahead

6th December 2010

Curiosity recently got the better of me and I decided to have a look at the first alpha release of Ubuntu 11.04, both in a VirtualBox virtual machine and on a spare PC that I have. They always warn you about alpha releases of software and the first sight of Ubuntu was in keeping with this. The move to using Unity as a desktop environment is in train and it didn’t work perfectly on either of the systems on which I tried it, not a huge surprise really. There wasn’t any sign of a top panel or one at the side and no application had its top bar, either. It looks as if others may have got on better but it may have been down to my doing an in situ upgrade rather than a fresh installation. Doing the latter might be an idea but I may wait for the next alpha release first. Still, it looks as if we’ll be getting Firefox 4 so the change of desktop isn’t going to be the only alteration. All in all, it looks as if Natty Narwhal will be an interesting Ubuntu release with more change than is usually the case. In the meantime, I’ll keep tabs on how development goes so as to be informed before the time to think about upgrading comes around. So far, it’s early days and there are a few months to go yet.

On upgrading from Fedora 13 to Fedora 14

7th November 2010

My Fedora box recently got upgraded to the latest version of the distribution (14) and I stuck to a method that I have used successfully before and one that isn’t that common with variants of Linux either. What I did was to go to the Fedora website and download a full DVD image, burn it to a disk and boot from that. Then, I chose the upgrade option from the menus and all went smoothly with only commonplace options needing selection from the menus and no data was lost either. Apparently, this way of going about things is only offered by the DVD option because the equivalent Live CD versions only do full installations.

However, there was another option that I fancied trying but was stymied by messages about a troublesome Dropbox repository. As I later discovered, that would have been easily sorted but I went for a tried and tested method instead. This was a pity because only two commands would have needed to be issued when logged in as root and it would have been good to have had a go with them:

yum update yum

yum --releasever=14 update --skip-broken

These may have done what I habitually do with Ubuntu upgrades but trying them out either will have to await the release of the next version or my getting around to setting up a Fedora virtual machine to see what happens. The latter course of action might be sensible anyway to see if all works without any problem before doing it for a real PC installation.

Upgrading to Fedora 13

1st June 2010

After having a spin of Fedora’s latest in a VirtualBox virtual machine on my main home PC, I decided to upgrade my Fedora box. First, I needed to battle imperfect Internet speeds to get an ISO image that I could burn to a DVD. Once that was in place, I rebooted the Fedora machine using the DVD and chose the upgrade option to avoid bringing a major upheaval upon myself. You need the full DVD for this because only a full installation is available from Live ISO images and CD’s.

All was graphical easiness and I got back into Fedora again without a hitch. Along with other bits and pieces, MySQL, PHP and Apache are working as before. If there was any glitch, it was with NetBeans 6.8 because the upgrade from the previous version didn’t seem to be as complete as hoped. However, it was nothing that an update of the open source variant of Java and NetBeans itself couldn’t resolve. There may have been untidy poking around before the solution was found but all has been well since then.

Solving an upgrade hitch en route to Ubuntu 10.04

4th May 2010

After waiting until after a weekend in the Isle of Man, I got to upgrading my main home PC to Ubuntu 10.04. Before the weekend away, I had been updating a 10.04 installation on an old spare PC and that worked fine so the prospects were good for a similar changeover on the main box. That may have been so but breaking a computer hardly is the perfect complement to a getaway.

So as to keep the level of disruption to a minimum, I opted for an in-situ upgrade. The download was left to complete in its own good time and I returned to attend to installation messages asking me if I wished to retain old log files for the likes of Apache. When the system asked for a reboot at the end of the sequence of package downloading, installation and removal, I was ready to let it do the needful.

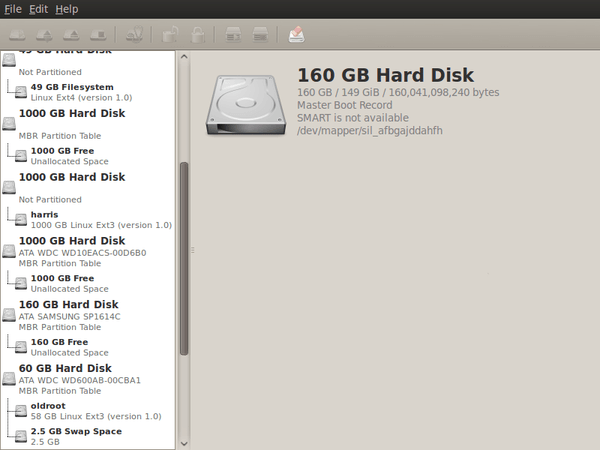

However, I met with a hitch when the machine restarted: it couldn’t find the root drive. Live CD’s were pressed into service to shed light on what had happened. First up was an old disc for 9.10 before one for 10.04 Beta 1 was used. That identified a difference between the two that was to prove to be the cause of what I was seeing. 10.04 uses /dev/hd*# (/dev/hda1 is an example) nomenclature for everything including software RAID arrays (“fakeraid”). 9.10 used the /dev/mapper/sil_**************# convention for two of my drives and I get the impression that the names differ according to the chipset that is used.

During the upgrade process, the one thing that was missed was the changeover from /dev/mapper/sil_**************# to /dev/hd*# in the appropriate places in /boot/grub/menu.lst; look for the lines starting with the word kernel. When I did what the operating system forgot, I was greeted by a screen telling of the progress of checks on one of the system’s disks. That process took a while but a login screen followed and I had my desktop much as before. The only other thing that I had to do was run gconf-editor from the terminal to send the title bar buttons to the right where I am accustomed to having them. Since then, I have been working away as before.
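To see which lines in menu.lst are the ones to inspect, a grep along the following lines does the job. It is a small sketch only: the function name is hypothetical, and it lists the lines without changing anything.

```shell
#!/bin/sh
# Hypothetical helper: print the kernel lines of a GRUB legacy menu file,
# each prefixed with its line number, so the root= device can be checked.
# $1 = menu file (defaults to /boot/grub/menu.lst).
kernel_lines() {
    grep -n '^[[:space:]]*kernel' "${1:-/boot/grub/menu.lst}"
}
```

Each line printed carries the root= setting that the upgrade left untouched here; any correction still has to be made in an editor running with root privileges.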

Some may decry the lack of change (ImageMagick and UFRaw could do with working together much faster, though) but I’m not complaining; the rough of 9.10 drilled that into me. Nevertheless, I am left wondering how many are getting tripped up by what I encountered, even if it means that Palimpsest (what Ubuntu calls Disk Utility) looks much tidier than it did. Could the same thing be affecting /etc/fstab too? The reason that I don’t know the answer to that question is that I changed all hard disk drive references to UUID a while ago but it’s another place to look if the GRUB change isn’t fixing things for you. If my memory isn’t failing me, I seem to remember seeing /dev/mapper/sil_**************# drive names in there too.

Command Line Software Management

2nd December 2009

One of the nice things about a Debian-based Linux distribution is that it is easy to pull a piece of software onto your system from a repository using either apt-get or aptitude. Some may prefer to have a GUI but I find that the command line offers a certain extra transparency that stops the “what’s it doing?” type of question. That’s not to say that the GUI-based approach hasn’t a place but I only use it when seeking out a piece of software without knowing its aptitude-ready name. Interestingly, there are signs that Canonical may be playing with the idea of making Ubuntu’s Software Centre a full application management tool with updates and upgrades getting added to the current searching, installation and removal facilities. That may well be but it’s going to take a lot of effort to get me away from the command line altogether.

Fedora and openSUSE have their software management commands too in the shape of yum and zypper, respectively. The recent flurry of new operating system releases has had me experimenting with both of those distros on a real test machine. As might be expected, the usual battery of installation, removal and update activities is well served and I have been playing with software searching using yum too. What has yet to mature is in-situ distribution upgrading à la Ubuntu. In principle, it is possible but I got a black screen when I tried moving from openSUSE 11.1 to 11.2 within VirtualBox using instructions on the openSUSE website. Not wanting to wait, I reached for a Live CD instead and that worked a treat on both virtual and real machines. Being in an experimental turn of mind, I attempted the same to get from Fedora 11 to the beta release of its version 12. A spot of repository trouble got me using a Live CD in its place. You can perform an in-situ upgrade from a full Fedora DVD but the only option is system replacement when you have a Live CD. Once installation is out of the way, YaST can be ignored in favour of zypper and yum is good enough that Fedora’s GUI-using alternative can be ignored. It’s nice to see good transparent ideas taking hold elsewhere and may make migration between distros much easier too.
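To show how closely the three families mirror one another, the following sketch prints the command that would be used for a given tool and action without running anything. The function name pkg_command and its argument scheme are purely illustrative; the commands it prints are the standard apt, yum and zypper subcommands for searching, installing, removing and updating.

```shell
# Hypothetical cheat sheet: print (not run) the command for a given
# package tool and action. Usage: pkg_command TOOL ACTION [PACKAGE]
pkg_command() {
    tool=$1
    action=$2
    name=$3
    case "$tool:$action" in
        apt:search)     echo "apt-cache search $name" ;;
        apt:install)    echo "sudo apt-get install $name" ;;
        apt:remove)     echo "sudo apt-get remove $name" ;;
        apt:update)     echo "sudo apt-get upgrade" ;;   # updates installed packages
        yum:search)     echo "yum search $name" ;;
        yum:install)    echo "sudo yum install $name" ;;
        yum:remove)     echo "sudo yum remove $name" ;;
        yum:update)     echo "sudo yum update" ;;
        zypper:search)  echo "zypper search $name" ;;
        zypper:install) echo "sudo zypper install $name" ;;
        zypper:remove)  echo "sudo zypper remove $name" ;;
        zypper:update)  echo "sudo zypper update" ;;
        *) echo "unsupported: $tool $action" >&2; return 1 ;;
    esac
}
```

For example, pkg_command zypper install gimp prints sudo zypper install gimp, which can then be copied to the terminal on an openSUSE machine.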

A case of double vision?

4th November 2009

One of the early signs that I noticed after upgrading my main PC to Ubuntu 9.10 was a warning regarding the health of one of my hard disks. Others have reported that this can be triggered by the least bit of roughness in a SMART profile but that’s not how it was for me. The PATA disk that has hosted my Ubuntu installation since the move away from Windows had a few bad sectors but no adverse warning. It was a 320 GB Western Digital SATA drive that was raising alarm bells with its 200 bad sectors.

The conveyor of this news was Palimpsest (not sure how it got that name even when I read the Wikipedia entry) and that is part of the subject of this post. Some have been irritated by its disk health warnings but it’s easy to make them go away by turning off Disk Notifications in the dialogue that going to System > Preferences > Startup Applications will bring up for you. To fire up Palimpsest itself, there’s always the command line but you’ll find it at System > Administration > Disk Utility too. My complaint about it is that I see the same hard drive listed in there more than once and it takes some finding to separate the real entries from the “bogus” ones. Whether this is because Ubuntu has seen my SATA drives with SIL RAID mappings (for the record, I have no array set up) or not is an open question but it’s one that needs continued investigation and I already have had a go with the dmraid command. Even GParted shows both the original /dev/sd* type addressing and the /dev/mapper/sil_* equivalent with the latter being the one with which you need to work (Ubuntu now lives on a partition on one of the SATA drives which is why I noticed this). All in all, it looks less than tidy so additional interrogation is in order, especially when I have no recollection of 9.04 doing anything of the sort.
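As a starting point for that interrogation, a sketch like the one below lists whatever sil_ entries exist under /dev/mapper so that they can be compared with the /dev/sd* names. The function name list_sil_mappings is made up for illustration and the sil_ prefix is taken from the names seen above; other chipsets would presumably need a different pattern.

```shell
# Hypothetical helper: list device-mapper entries whose names start
# with "sil_". DIR defaults to /dev/mapper.
list_sil_mappings() {
    dir=${1:-/dev/mapper}
    for entry in "$dir"/sil_*; do
        # Skip the unexpanded pattern when nothing matches.
        [ -e "$entry" ] && basename "$entry"
    done
    return 0
}
```

Alongside this, sudo dmraid -r reports the disks that dmraid regards as RAID members and sudo dmraid -s shows any sets that it has discovered, which may help to explain where the extra entries come from.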