Another look at Drupal

20th January 2010

Early on in the first year of this blog, I got to investigating the use of Drupal for creating an article-based subsite. In the end, the complexities of its HTML and CSS thwarted my attempts to harmonise the appearance of its web pages with other parts of the same site, and I discontinued my efforts. It was Textpattern that suited my needs, and I have stuck with that for the aforementioned subsite. However, I recently spotted someone very obviously using Drupal in its out-of-the-box state for a sort of blog (there is even an extension for importing WXR files containing content from a WordPress blog); they hadn’t even removed the Drupal logo. With my interest rekindled, I took another look to see where things have gone in the last few years. First impressions are that it now looks like a blogging tool with greater menu control and the facility to define custom content types. There are plenty of nice themes around too, though that highlights an idiosyncrasy: content editing is not fully integrated into the administration area where I’d expect it to be. The consequence is that pages, posts (story is what that content type is called) and any content types that you have defined yourself are created and edited with the front page theme controlling the appearance of the user interface. This is made even more striking when you use a different theme for the administration screens. That oddity aside, there is a lot to recommend Drupal, though I’d try setting up a standalone site with it rather than attempting to shoehorn it into part of an existing one, as I was trying to do when I last looked.

Why the manual step?

18th January 2010

One of the consequences of buying a new camera is that your current photo processing software may not be fully equipped to handle the images that it creates. This is a particular issue with raw image files: Adobe Photoshop Elements 5 was unable to handle DNG files made with my Pentax K10D completely until I upgraded to version 7. Yes, I do realise that an upgrade should have been in order sooner, but I only lost the white balance adjustments, so I could live with things as they were until there was a more compelling case for upgrading.

As things stood, Elements 7 was unable to import CR2 files from my Canon PowerShot G11 into the Organiser, so it was off to the appropriate page on the Adobe website for a Camera Raw updater. I picked up the latest release of Camera Raw (5.6 at the time of writing) even though it was found in the Elements 8 category (don’t be put off by this, because the release notes address the version compatibility question more extensively). Strangely, the updater doesn’t complete everything, because you still need to back up the original Camera Raw.8bi and copy the new one from the zip archive. Quite why this couldn’t have been more automated, even with user prompts for file names and locations, is beyond me, but that is how it is. However, once all was in place, CR2 files were handled by Elements without missing a beat.
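Since the updater leaves this step to you, here is a rough sketch of how it might be scripted. It assumes a Unix-like shell on Windows such as Cygwin and assumes that Camera Raw.8bi has already been extracted from the zip archive. It also assumes you know where your Elements installation keeps its plug-ins. The function name install_plugin and all paths are hypothetical and are not anything Adobe supplies.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the manual Camera Raw step: back up the existing
# plug-in, then copy in the one extracted from the updater's zip archive.
# Assumes a Unix-like shell on Windows (e.g. Cygwin); the plug-in folder
# path varies by installation and must be supplied by you.

install_plugin() {
  local new="$1" plugin_dir="$2" backup_dir="$3"
  local name
  name=$(basename "$new")

  [ -f "$new" ] || { echo "install_plugin: $new not found" >&2; return 1; }
  [ -d "$plugin_dir" ] || { echo "install_plugin: $plugin_dir is not a directory" >&2; return 1; }

  # Keep a copy of the original plug-in outside the plug-in folder.
  if [ -f "$plugin_dir/$name" ]; then
    mkdir -p "$backup_dir" || return 1
    cp -p "$plugin_dir/$name" "$backup_dir/$name" || return 1
  fi

  # Put the new plug-in in place.
  cp "$new" "$plugin_dir/$name"
}
```

For example, `install_plugin "Camera Raw.8bi" "/path/to/plug-in/folder" ~/camera-raw-backup` would keep the old plug-in in a backup folder before copying the new one in. The backup goes outside the plug-in folder so that the only Camera Raw.8bi left in the plug-in folder is the new one.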

Outlook rule size limitation

12th January 2010

A move from Outlook 2000 to Outlook 2007 at work before Christmas resulted in deactivated Outlook rules and messages like the following when I tried reactivating them:

One or more rules could not be uploaded to Exchange server and have been deactivated. This could be because some of the parameters are not supported or there is insufficient space to store all your rules.

The cause is a 32 KB size limit on all rules associated with your Exchange server account in versions prior to Exchange 2007. With the latter, the default rises to 64 KB and can be increased further to 256 KB by manual intervention. This wouldn’t be a big issue if you had the option to store rules locally on your own PC, but that was removed after Outlook 2000, which explains why I first encountered it when I did. Microsoft has a useful article on its support website containing suggested remedies, and they aren’t all as extreme as deleting some rules either. Consolidating rules and shortening their names are other suggestions, and you should never discount how much space the “run on this machine only” parameter takes up either. Still, it’s an odd design decision that caused this, but I suppose that it wouldn’t be the first made by Microsoft and it may not be the last either.

Investigating the real-time web

10th January 2010

Admittedly, I have been keeping away from Twitter and its kind for a while now, but the current run of cold weather in Britain and Ireland has alerted me to its usefulness and I have given the thing a go. With public transport operators’ websites heaving over the last week, the advantages of microblogging became more than apparent, thanks in no small part to the efforts of Centrebus, National Rail Enquiries and the U.K. Met Office. The pithy nature of the messages saves the effort needed to compile a longer blog post and to read it afterwards. This makes it invaluable for those times when all that needs to be communicated is short and sweet. Anything that cuts down on the information tide that hits all of us every day can only be a good thing.

Along with Twitter, there is a whole suite of tools available for various bits and pieces. First off, there’s integration with WordPress courtesy of plugins like Alex King’s Twitter Tools. After that, there are numerous web applications for taming the beast. Though I can only say that I have scratched the surface of what’s available, I have come across HootSuite and Twitterfeed. The former is a console for managing more than one Twitter account at once, while also offering the facility to do the same for Facebook, LinkedIn, WordPress.com and others too. Twitterfeed may be more limited in scope, offering to turn RSS feeds into tweets, but it has its place too. HootSuite might have something similar for WordPress, but Twitterfeed is a good deal more universal in its sweep. Naturally, there’s more out there than these two, but I am not trying to be exhaustive here. If I make use of any other such services, I might even get inspired to mention them on here.

Converting from CGM to Postscript

24th November 2009

One thing that I recently had to investigate was the possibility of converting CGM vector graphics files into Postscript and from there into PDF. Having used ImageMagick for converting images before, that was an obvious option. However, it cannot process CGM files on its own and needs a delegate or helper application as well. This is the case with raw digital camera files too, with UFRaw being the program chosen there. For CGM images, the more obscure RALCGM is what’s needed, and tracking it down is a bit of an art. The history is that it was developed at the U.K.’s Rutherford Appleton Laboratory, but it seems that it was left to go off into the wilderness rather than someone keeping an eye on things. With that in mind, here are the installation packages for Windows and Linux (RPM):

RALCGM is a handy command line tool that can convert from CGM to Postscript on its own without any need for ImageMagick at all. From what I have seen, fonts in its output may look greyer than black, but it otherwise does its job well, and considering that it is a freely available tool, one cannot complain too much. There are other packages for doing vector to raster conversion, and the ones that I have seen do have GUIs, but the freedom to look at paid-for software wasn’t mine to have. The required command looks something like the following:

ralcgm -d PS -oL test.cgm test.ps

The switch -d PS uses the software’s Postscript driver and -oL specifies landscape orientation. If you want to find out more, here’s a PDF rendition of the help file that comes with the thing:
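To handle more than one file and to carry on from Postscript to PDF, the ralcgm command above could be wrapped in a small shell function. This is only a sketch. It reuses the -d PS and -oL switches described above. The PDF step uses ps2pdf from Ghostscript, which is my assumption because no particular Postscript-to-PDF tool is named here. The function name cgm_to_pdf is made up for illustration.

```bash
#!/usr/bin/env bash
# Sketch: convert CGM files to PDF via Postscript.
# Stage 1 uses RALCGM's Postscript driver (-d PS) in landscape (-oL).
# Stage 2 uses Ghostscript's ps2pdf (an assumption; any PS-to-PDF tool would do).

cgm_to_pdf() {
  local tool cgm ps pdf

  # Fail early if either helper is missing from PATH.
  for tool in ralcgm ps2pdf; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "cgm_to_pdf: $tool not found on PATH" >&2
      return 1
    fi
  done

  for cgm in "$@"; do
    ps="${cgm%.*}.ps"     # test.cgm -> test.ps
    pdf="${cgm%.*}.pdf"   # test.cgm -> test.pdf
    ralcgm -d PS -oL "$cgm" "$ps" || return 1
    ps2pdf "$ps" "$pdf" || return 1
  done
}
```

Running `cgm_to_pdf *.cgm` in a folder of drawings would leave a .ps and a .pdf file alongside each original. Keeping the intermediate Postscript file makes it easy to check RALCGM's output separately if the PDF looks wrong.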

So you just need a web browser?

21st November 2009

When Google announced that it was working on an operating system, it was bound to result in a frisson of excitement. However, a peek at the preview edition that has been doing the rounds confirms that Chrome OS is a very different beast from those operating systems to which we are accustomed. The first thing that you notice is that it only starts up the Chrome web browser. In this, it is like a Windows terminal server session that opens just one application. Of course, in Google’s case, that one piece of software is the gateway to its usual collection of productivity software like GMail, Calendar, Docs & Spreadsheets and more. Then, there are offerings from others too, with Microsoft just beginning to come into the fray to join Adobe and many more. As far as I can tell, all files are stored remotely, and I reckon that adding the possibility of local storage and management of those local files would be a useful enhancement.

With Chrome OS, Google’s general strategy starts to make sense. First create a raft of web applications, follow them up with a browser and then knock up an operating system. It just goes to show that Google Labs doesn’t just churn out stuff for fun but that there is a serious point to its endeavours. In fact, you could say that they have sucked us in up to a point along the way. Speaking for myself, I may not entrust all of my files to storage in the cloud, but I am perfectly happy to entrust all of my personal email activity to GMail. It’s the widespread availability and platform independence that has done it for me. For others spread between one place and another, the attractions of Google’s other web apps cannot be overstated. Maybe that’s why Google is not the only player in the field either.

With the rise of mobile computing, portability is the opportunity that Google is trying to use to its advantage. For example, mobile phones are now being used for things that would have been unthinkable a few years back. Then, there’s the netbook revolution started by Asus with its Eee PC. All of this is creating an ever more internet-connected population, so having devices that connect straight to the web, as they would with Chrome OS, has to be a smart move. Some may decry the idea that Chrome OS is going to be available only on dedicated devices, but I suppose Google has to make money from this too; search can only pay for so much, and they have experience with Android as well.

There have been some who wondered about Google’s activities killing off Linux and giving Windows a good run for its money; Chrome OS seems to be a very different animal from either of these. It looks as if it is a tool for those on the move, an appliance rather than the pure multipurpose tool that an operating system usually is. If there is a symbol of what an operating system usually means for me, it’s the ability to start with a bare desktop and decide what to do next. Transparency is another plus point, and the Linux command line has that in spades. For those who view PCs purely as a means to get things done, such interests are peripheral, and it is for them that the likes of Chrome OS have been created. In other words, the Linux community need to keep an eye on what Google is doing but should not take fright, because there are other things that Linux always will have as unique selling points. The same sort of thing applies to Windows too, but Microsoft’s near stranglehold on the enterprise market will take a lot of loosening, perhaps keeping Chrome OS in the consumer arena. Counterpoints to that include the use of GMail for enterprise email by some companies and the increasing footprint of web-based applications, even bespoke ones, in business computing. In fact, it’s the latter that can be blamed for any tardiness in Internet Explorer development. In summary, Chrome OS is a new type of thing rather than a replacement for what’s already there. We may find that co-existence is how things turn out, but what it means for Linux in the netbook market is another matter. Only time will tell on that one.

Consolidation

19th November 2009

For a while, the Windows computing side of my life has been spread across far too many versions of the pervasive operating system, with the list including 2000 (desktop and server), XP, 2003 Server, Vista and 7; 9x hasn’t been part of my life for what feels like an age. At home, XP has been the mainstay for my Windows computing needs, with Vista Home Premium loaded on my Toshiba laptop. The latter came in for more use during that period of home computing “homelessness” and, despite a cacophony of complaints from some, it seemed to work well enough. Since the start of the year, 7 has also been in my sights, with beta and release candidate instances in virtual machines leaving me impressed enough to pop the final version onto both the laptop and a VM on my main PC. Microsoft finally have got around to checking product keys over the net, so that meant a licence purchase for each installation, with both using the same downloaded 32-bit ISO image. 7 is still doing well by me, so I am beginning to wonder whether having an XP VM is becoming pointless, since 7 is becoming the only version that I really need for anything that takes me into the world of Windows.

Work is a different matter, with a recent move from Windows 2000 to Vista heavily reducing my exposure to the venerable old stager (businesses usually take longer to migrate, and any good IT manager usually delays a migration by a year anyway). 2000 is sufficiently outmoded by now that even my brother was considering a move to 7 for his work because of all the Office 2007 files that have been coming his way. He may be no technical user, but the bad press gained by Vista hasn’t passed him by, so a certain wariness is understandable. That said, my experiences with Vista haven’t been unpleasant: it always worked well on the laptop, and the same can be said for its corporate desktop counterpart. Much of the noise centred on issues of hardware and software compatibility, and that certainly is apparent at work, with my having some creases left to straighten.

With all of this general forward heaving, you might think that IE6 would be shuffling off its mortal coil by now, but a recent check on visitor statistics for this website places it at about 13% share, tantalisingly close to oblivion but still too large to ignore completely. All in all, it is lingering like that earlier blight of web design, Netscape 4.x. If I was planning a big change to the site design, setting up a Win2K VM would be in order so as not to put off those labouring with the old curmudgeon. For smaller changes, the temptation is not to bother checking, but that is questionable when XP is set to live on for a while yet. XP came with IE6, so there must still be users stuck with it, and that’s ironic with IE8 having been available for XP SP2 since its launch a while back. Where all this is leading me is towards the idea of waiting for IE6’s share to decrease further before tackling any major site changes. After all, I can afford to wait, given the general downward trend in its market share; there has to be a point when its awkwardness makes it no longer viable to support. That would be a happy day.

When buttons stop working…

16th November 2009

One of the things that stopped working as it should after my recent Ubuntu 9.10 upgrade was my Eclipse PDT installation. Editing files went a bit haywire, and creating projects had me pushing buttons with nothing happening. Whether this was a Java or GNOME issue, I don’t know, but I found it happening on openSUSE 11.2 too (there should be more on that distro in a later entry). That was enough to get me looking again at Netbeans.

In both openSUSE (NB version 6.5) and Ubuntu (NB version 6.7.1), I plucked the default offering of Netbeans from the respective software repositories and added the PHP plugin in both cases. Unlike when I last gave the platform a go, things seemed to go smoothly, and it looks to have replaced Eclipse for PHP development duties. Project scanning may take a little while, but it’s far from annoying, whereas my earlier dalliance with using Netbeans as a PHP editor was stymied by performance so sluggish as to make the thing a pain to use. Netbeans has never been light on PC resources, so I am wondering if dual-core and quad-core CPUs, along with a copious supply of RAM, are helping. Only time will tell if these initial positive impressions stay the course, and I’ll be keeping an open mind for now.

A use for choice

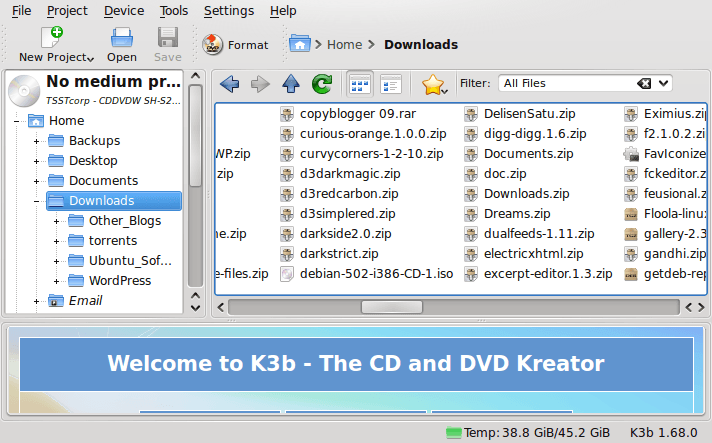

8th November 2009

After moving to Ubuntu 9.10, Brasero stopped behaving as well as it did in Ubuntu 9.04. Every bootable disk that I created with it had its glitches. After a recent update, things got better, with a live CD actually booting up a PC rather than failing to find a file system like those created with the version before the update. While unsure if the observed imperfections stemmed from my using the RC for the upgrades and installations, I got to looking for a solution and gave K3b a go. It certainly behaves as I’d expect it to, and a live CD created with it worked without fault. The end result is that Brasero has been booted off my main home system for now. That may mean that all of the in-built GNOME convenience is lost to me, but I can live without the extras; after all, it’s the quality of the created disks that matters.

A certain lack of speed

2nd November 2009

A little while ago, I encountered a problem with ImageMagick processing DNG files in Ubuntu 9.04. Not realising that I could solve my own problem by editing a file named delegates.xml, I took to getting a Debian VM to do the legwork for me. That file is where you’ll find all the commands used when ImageMagick calls on helper software to help it on its way. On its own, ImageMagick cannot deal with DNG files, so the command line variant of UFRaw (itself a front end for DCRaw) is used to create a PNM file that ImageMagick can handle. The problem a few months back was that the command in delegates.xml wasn’t appropriate for a newer version of UFRaw, and I got it into my head that things like this were hard-wired into ImageMagick. Now, I know better and admit my error.
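To see what the delegate is doing, the same two steps can be run by hand. The following is a sketch and not a copy of any delegates.xml entry. The ufraw-batch options shown are assumptions, and these options have changed between UFRaw releases, which is exactly the sort of thing that broke the delegate before. Check ufraw-batch --help for your version. The function name dng_to_jpeg is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: do by hand what the DNG delegate does for ImageMagick.
# UFRaw's command-line tool writes a PNM file, then ImageMagick takes over.
# The ufraw-batch flags are assumptions; they vary between UFRaw releases.

dng_to_jpeg() {
  local dng="$1"
  local pnm="${dng%.*}.pnm"
  local jpg="${dng%.*}.jpg"

  # Step 1: raw conversion by UFRaw into an intermediate PNM file.
  ufraw-batch --silent --overwrite --out-type=ppm --output="$pnm" "$dng" || return 1

  # Step 2: ImageMagick handles the PNM like any other bitmap.
  convert "$pnm" -quality 90 "$jpg" || return 1

  # Tidy up the intermediate file.
  rm -f "$pnm"
}
```

Prefixing a call with bash’s time keyword is one way to compare how long different UFRaw releases take over the same file. An example is time dng_to_jpeg IMGP0001.dng, where the file name is hypothetical.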

With 9.10, it seems that the command in delegates.xml has been corrected, but another issue has raised its head. UFRaw 0.15, it seems, isn’t the speediest when it comes to creating PNM files and, while my raw file processing script works after a spot of modification to deal with changes in output from the identify command that it uses, it takes far too long to run. GIMP also uses UFRaw, so I wonder if the same problem has surfaced there too. Either way, the issue has been noticed by the Debian team, and they have a package for version 0.16 of the software in their unstable branch that looks as if it has sorted the speed issue. However, I am seeing that 0.15 is in the testing branch, so I’d be tempted to stick with Lenny (5.x) if any successor turns out to have slower DNG file handling with ImageMagick and UFRaw. In my estimation, 0.13 does what I need, so why go for a newer release if it turns out to be slower?
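On the identify side, a script can be made less sensitive to changes in that command’s output. One way is to ask identify for only the fields needed through its -format option, rather than parsing its default summary line. The sketch below shows the idea with a hypothetical image_orientation function. It is not the script mentioned above, whose details aren’t given here.

```bash
#!/usr/bin/env bash
# Hypothetical example: ask identify for exactly the fields needed
# (%w = width, %h = height) instead of parsing its default summary line,
# whose layout can change between ImageMagick releases.

image_orientation() {
  local dims w h
  # "[0]" restricts identify to the first frame/page of the file.
  dims=$(identify -format '%w %h' "$1[0]") || return 1
  w=${dims% *}
  h=${dims#* }
  # Square images are reported as landscape.
  if [ "$w" -ge "$h" ]; then
    echo landscape
  else
    echo portrait
  fi
}
```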