A little bit of abstraction

21st August 2021

Data science has remained in my awareness since 2017 though my work is more on its fringes in clinical research. In fact, I have been involved more in the standardisation and automation of more traditional data reporting than in the needs of data modelling such as data engineering or other similar disciplines. Much of this effort has meant the use of SAS, with which I have programmed since 2000 and for which I have a licence (an expensive commodity, it has to be said), but other technologies are being explored with R, Python and Julia being among them.

The change in technological scope does bring an element of excitement and new interest but there is also some sadness when tried and trusted technologies meet with newer competition and valued skills are no longer as career securing as they once were. Still, there is plenty of online training out there and I already have collected some of my thoughts on this. The learning continues and the need for repositioning is also clear.

The journey also has brought some curios to my notice. One of these is This Person Does Not Exist, a website building photos of non-existent faces using machine learning. Recently, I learned of others like it such as This Artwork Does Not Exist, This Cat Does Not Exist, This Horse Does Not Exist, and This Chemical Does Not Exist. The last of these probably should be entitled “This Molecule Does Not Exist (Yet)” since it is a fictitious molecular structure that has been created and what you get is an actual moving image that spins it around in three-dimensional space. The one with dynamically generated abstract art is the main inspiration for this piece and is of more interest to me while the other two are more explanatory though the horse website is not so successful in its execution and one can ask why we need more cat pictures.

To some, the idea of creating fake pictures may feel a little foreboding and that especially applies to photos of people and the livelihoods of any content creators. Nevertheless, these sources of imagery have their legitimate uses such as decorating websites or brochures and that is where my interest is piqued. After all, there are some subjects where pictures can be scarce so any form of decoration that enlivens an article has to have some use. Technology websites like this one can feature images too with screenshots and device photos being commonplace but they can all look like each other, hence the need for a little more variety and having pictures often increases the choice of website themes as well since so many need images to make them work or stand out. As ever, being sparing with any new innovations remains in order so that is how I approach this matter as well.

Rethinking photo editing

17th April 2018

Photo editing has been something that I have been doing since my first-ever photo scan in 1998 (I believe it was in June of that year but cannot be completely sure nearly twenty years later). Since then, I have been using a variety of tools for the job and wondered how other photos can look better than my own. What cannot be excluded is my preference for being active in the middle of the day when light is at its bluest as well as a penchant for using a higher ISO of 400. In other words, what I do when making photos affects how they look afterwards as much as the weather that I had encountered.

My reason for mentioning the above aspects of photographic craft is that they affect what you can do in photo editing afterwards, even with the benefits of technological advancement. My tastes have changed over time, so the appeal of re-editing old photos fades when you realise that you only are going around in circles and there always are new ones to share, so that may be a better way to improve.

When I started, I was a user of Paint Shop Pro but have gone over to Adobe since then. First, it was Photoshop Elements, but an offer in 2011 lured me into having Lightroom and the full version of Photoshop. Nowadays, I am a Creative Cloud photography plan subscriber so I get to see new developments much sooner than once was the case.

Even though I have had Lightroom for all that time, I never really made full use of it and preferred a Photoshop-based workflow. Lightroom was used to select photos for Photoshop editing, mainly using adjustments for such things as tones, exposure, levels, hue and saturation. Removal of dust spots, resizing and sharpening were other parts of a still minimalist approach.

What changed all this was a day spent pottering about the 2018 Photography Show at the Birmingham NEC during a cold snap in March. That was followed by my checking out the Adobe YouTube Channel afterwards where there were videos of the talks featured every day of the four-day event. Here are some shortcuts if you want to do some catching up yourself: Day 1, Day 2, Day 3, and Day 4. Be warned though that these videos are long in that they feature the whole day and there are enough gaps that you may wish to fast-forward through them. Even so, there is quite a bit of variety of things to see.

Of particular interest were the talks given by the landscape photographer David Noton who sensibly has a philosophy of doing as little to his images as possible. It helps that his starting points are so good that adjusting black and white points with a little tonal adjustment does most of what he needs. Vibrancy, clarity and sharpening adjustments are kept to a minimum while some work with graduated filters evens out exposure differences between skies and landscapes. It helps that all this can be done in Lightroom, so that set me thinking about trying it out for size and the trick of using the backslash (\) key to switch between raw and processed views is a bonus granted by non-destructive editing. Others may have demonstrated the creation of composite imagery, but simplicity is more like my way of working.

Confusingly, we now have the cloud-based Lightroom CC while the previous desktop counterpart is known as Lightroom Classic CC. Though the former may allow for easy dust spot removal among other things, it is the latter that I prefer because the idea of wholesale image library upload does not appeal to me for now and I already have other places for off-site image backup like Google Drive and Dropbox. The mobile app does look interesting since it allows capturing images on such a device in Adobe’s raw image format DNG. Still, my workflow is set to be more Lightroom-based than it once was and I quite fancy what new technology offers, especially since Adobe is progressing its Sensei artificial intelligence engine. The fact that it has access to many images on its systems due to Lightroom CC and its own stock library (Adobe Stock, formerly Fotolia) must mean that it has plenty of data for training this AI engine.

A look at Google’s Pixel C

26th December 2016

Since my last thoughts on trips away without a laptop, I have come by Google’s Pixel C. It is a 10″ tablet so it may not raise hackles on an aircraft like the 12.9″ screen of the large Apple iPad Pro might. The one that I have tried comes with 64 GB of storage space and its companion keyboard cover (there is a folio version). Together, they can be bought for £448, a saving of £150 on the full price.

The Pixel C keyboard cover uses strong magnets to hold the tablet onto it and that does mean some extra effort when changing between the various modes. These include covering the tablet screen as well as piggybacking onto it with the screen side showing or attached in such a way that allows typing. The latter usefully allows you to vary the screen angle as you see fit instead of having to stick with whatever is selected for you by a manufacturer. Unlike the physical connection offered by an iPad Pro, Bluetooth is the means offered by the Pixel C and it works just as well from my experiences so far. Because of the smaller size, the keyboard feels a little cramped in comparison with a full size keyboard or even that with a 12.9″ iPad Pro. The keys also are of the scrabble variety though they work well otherwise.

The tablet itself is impressively fast compared to a HTC One A9 phone or even a Google Nexus 9 and that became very clear when it came to installing or updating apps. The speed is just as well since an upgrade to Android 7 (Nougat) was needed on the one that I tried. You can turn on adaptive brightness too, which is a bonus. Audio quality is nowhere near as good as a 12.9″ iPad Pro but that of the screen easily is good enough for assessing photos stored on a WD My Passport Wireless portable hard drive using the WD My Cloud app.

All in all, it may offer that bit more flexibility for overseas trips compared to the bigger iPad Pro so I am tempted to bring one with me instead. The possibility of seeing newly captured photos in slideshow mode is a big selling point and it also functions well for tasks like writing emails or blog posts like this one, which started life on there. Otherwise, this is a well made device.

Pondering travel device consolidation using an Apple iPad Pro 12.9″

18th September 2016

It was a change of job in 2010 that got me interested in using devices with internet connectivity on the go. Until then, the attraction of smartphones had not been strong, but I got myself a BlackBerry on a pay as you go contract. The entry device was painfully slow and the connectivity was 2G. It was a very sluggish start.

It was supplemented by an Asus Eee PC that I connected to the internet using broadband dongles and a Wi-Fi hub. This cumbersome arrangement did not work well on short journeys and the variability of mobile network reception even meant that longer journeys were not all that successful either. Usage in hotels and guest houses though went better and that has meant that the miniature laptop came with me on many a journey.

In time, I moved away from broadband dongles to using smartphones as Wi-Fi hubs and that largely is how I work with laptops and tablets away from home unless there is hotel Wi-Fi available. Even trips overseas have seen me operate in much the same manner.

One feature is that we seem to carry quite a number of different gadgets with us at a time and that can cause inconvenience when going through airport security since they want to screen each device separately. When you are carrying a laptop, a tablet, a phone and a camera, it does take time to organise yourself and you can meet impatient staff, as I found recently when returning from Oslo. Checking in whatever you can as hold luggage helps to get around at least some of the nuisance and it might be time for the use of better machinery to cut down on having to screen everything separately.

When you come away after an embarrassing episode as I once did, the attractions of consolidating devices start to become plain. In fact, most probably could get by with having just their phone. It is when you take activities like photography more seriously that the gadget count increases. After all, the main reason a laptop comes on trips beyond Britain and Ireland at all is to back up photos from my camera in case an SD card fails.

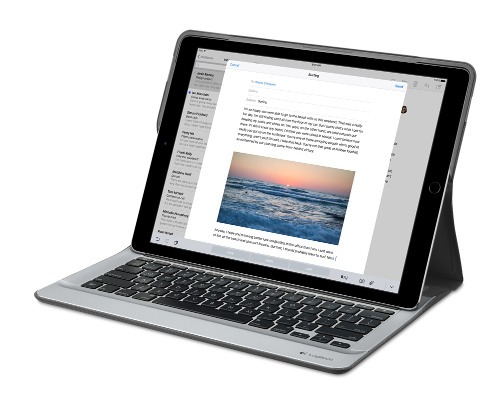

Parking that thought for a while, let’s go back to March this year when temptation overcame what should have been a period of personal restraint. The result was that a 32 GB 12.9″ Apple iPad Pro came into my possession along with an Apple Pencil and a Logitech CREATE Backlit Keyboard Case. It should have done so, but the size of the screen did not strike me until I got it home from the Apple Store. That was one of the main attractions because maps can be shown with a greater field of view in a variety of apps, a big selling point for a hiker with a liking for maps, who wants more than what is on offer from Apple, Google or even Bing. The precision of the Pencil is another boon that makes surfing the web so much easier and the solid connection between the case and the iPad means that keyboard usage is less fiddly than it would be if it used Bluetooth. Having tried them with the BBC iPlayer app, I can confirm that the sound from the speakers is better than any other mobile device that I have used.

Already, it has come with me on trips around England and Scotland. These weekend trips saw me leave the Asus Eee PC at home when it normally might have come with me and taking just a single device along with a camera or two had its uses too. The screen is large for reading on a train but I find that it works just as well so long as you have enough space. Otherwise, combining use of a suite of apps with recourse to the web does much of the information seeking needed while on a trip away and I was not found wanting. Battery life is good too, which helps.

Those trips allowed for a little light hotel room blog post editing too and the iPad Pro did what was needed, though the ergonomics of reaching for the screen with the Pencil meant that my arm was held aloft more than was ideal. Another thing that raised questions in my mind is the appearance of word suggestions at the bottom of the screen as if this were a mobile phone since I wondered if these were more of a hindrance than a help given that I just fancied typing and not pointing at the screen to complete words. Copying and pasting works too but I have found the screen-based version a little clunky so I must see if the keyboard one works just as well, though the keyboard set up is typical of a Mac so that affects word selection. You need to use the OPTION key in the keyboard shortcut that you use for this and not COMMAND or CONTROL as you might do on a PC.

Even with these eccentricities, I was left wondering if it had any utility when it came to backing up photos from digital cameras and there is an SD card adapter that makes this possible. A failure of foresight on my part meant that the 32 GB capacity now is an obvious limitation but I think I might have hit on a possible solution that does not need to upload to an iCloud account. It involves clearing off the photos onto a 128 GB Transcend JetDrive Go 300 so they do not clog up the iPad Pro’s storage. That the device has both Lightning and USB connectivity means that you can plug it into a laptop or desktop PC afterwards too. If that were to work as I would hope, then the laptop/tablet combination that I have been using for all overseas trips could be replaced to allow a weight reduction as well as cutting the hassle at airport security.

Trips to Ireland still may see my sticking with a tried and tested combination though because I often have needed to do some printing while over there. While I have been able to print a test document from an iPad Mini on my home network-connected printer, not every model supports this and support for NFC or AirPrint is not universal either. If this were not an obstacle, apps like Pages, Numbers and Keynote could have their uses for business-related work and there are web-based offerings from Google, Microsoft and others too.

In conclusion, I have found that my iPad Pro does so much of what I need on a trip away that retiring the laptop/tablet combination for most of these is not as outrageous as it once would have seemed. In some ways, iOS has a way to go yet before it could take over from macOS but it remains in development so it will be interesting to see what happens next. All the while, hybrid devices running Windows 10 are becoming more pervasive and that might provide Apple with the encouragement that it needs.

Batch conversion of DNG files to other file types with the Linux command line

8th June 2016

At the time of writing, Google Drive is unable to accept DNG files, the Adobe file type for RAW images from digital cameras. The uploads themselves work fine but the additional processing at the end that I believe is needed for Google Photos appears to be failing. Because of this, I thought of other possibilities like uploading them to Dropbox or enclosing them in ZIP archives instead; of these, it is the first that I have been doing and with nothing but success so far. Another idea is to convert the files into an image format that Google Drive can handle and TIFF came to mind because it keeps all the detail from the original image. In contrast, JPEG files lose some information because of the nature of the compression.

Handily, a one line command does the conversion for all files in a directory once you have all the required software installed:

find -type f | grep -i "DNG" | parallel mogrify -format tiff {}

The find and grep commands are standard with the first getting you a list of all the files in the current directory and sending (piping) these to the grep command so the list only retains the names of all DNG files. The last part uses two commands for which I found installation was needed on my Linux Mint machine. The parallel package is the first of these and distributes the heavy workload across all the cores in your processor and this command will add it to your system:

sudo apt-get install parallel

The mogrify command is part of the ImageMagick suite along with others like convert and this is how you add that to your system:

sudo apt-get install imagemagick

In the command at the top, the parallel command works through all the files in the list provided to it and feeds them to mogrify for conversion. Without the use of parallel, the basic command is like this:

mogrify -format tiff *.DNG

In both cases, the -format switch specifies the output file type with tiff triggering the creation of TIFF files. The *.DNG portion itself captures all DNG files in a directory but {} does this in the main command at the top of this post. If you wanted JPEG ones, you would replace tiff with jpg. Should you ever need them, a full list of what file types are supported is produced using the identify command (also part of ImageMagick) as follows:

identify -list format
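
One weakness of filtering with grep is that it matches "dng" anywhere in a path, so a folder called dng-exports would drag its other contents into the conversion. What follows is only a sketch of a stricter variant: the function names list_dng and convert_dng are my own inventions, and it assumes a find that supports -iname and -print0 (as the GNU and BSD versions do), GNU parallel rather than the similarly named moreutils tool, and an ImageMagick installation that is able to read DNG files.

```shell
#!/bin/sh
# Hypothetical helpers (the names are illustrative, not from any package).

# List files whose names end in .dng, whatever the letter case.
# -iname is an extension found in GNU and BSD find.
list_dng() {
    find "${1:-.}" -type f -iname '*.dng'
}

# Convert every DNG file below a directory; the format defaults to tiff.
convert_dng() {
    dir=${1:-.}
    format=${2:-tiff}
    if command -v parallel >/dev/null 2>&1; then
        # NUL-separated names survive spaces and other awkward characters
        find "$dir" -type f -iname '*.dng' -print0 |
            parallel -0 mogrify -format "$format" {}
    else
        # Fall back to serial processing when parallel is not installed
        find "$dir" -type f -iname '*.dng' -exec mogrify -format "$format" {} +
    fi
}
```

Running list_dng first shows what would be processed, after which something like convert_dng ~/photos jpg would do the conversion, with that directory name being only an example.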

A display of brand loyalty

12th July 2013

Since 2007, my main camera has been a Pentax K10D DSLR and it has gone on many journeys with me. In fact, more than 15,000 images have been captured with it and I have classed it as an unfailing servant. The autofocus may not be the fastest but my subjects tend to be stationary: landscapes, architecture, flora and transport. Even any bus and train photos have included parked vehicles rather than moving ones so there never have been issues. The hint of underexposure in any photos always can be sorted because DNG files are what I create, with all the raw capture information that is possible to retain. In fact, it has been hard to justify buying another SLR because the K10D has done so well for me.

In recent months, I have been looking at processed photos and asking myself if time has moved along for what is not far from being a six year old camera. At various times, I have been looking at higher members of the Pentax range while wondering if an upgrade would be a good idea. First, there was the K7 and then the K5 before the K5 II got launched. Even though its predecessor is still to be found on sale, it was the newer model that became my choice.

My move to Pentax in 2007 was a case of brand disloyalty since I had been a Canon user from when I acquired my first SLR, an EOS 300. Even now, I still have a Powershot G11 that finds itself slipped into a pocket on many a time. Nevertheless, I find that Canon images feel a little washed out prior to post processing and that hasn’t been the case with the K10D. In fact, I have been hearing good things about Nikon cameras delivering punchy results so one of them would be a contender were it not for how well the Pentax performed.

So, what has my new K5 II body gained me that I didn’t have before? For one thing, the autofocus is a major improvement on that in the K10D. It may not stop me persevering with manual focusing for most of the time but there are occasions when the option of solid autofocus is good to have. Other advances include a 16.3 megapixel sensor with a much larger ISO range. The advances in sensor technology since the K10D appeared may give me better quality photos and noise is something that my eyes may have begun to detect in K10D photos even at my usual ISO of 400.

There have been innovations that I don’t need too. Live View is something that I use heavily with the Powershot G11 because it has such a pitiful optical viewfinder. The K5 II has a very bright and sharp one so that function lies dormant, especially when I witnessed dodgy autofocus performance with it in use; manual focusing should be OK, I reckon. By default too, the screen stays on all the time and that’s a nuisance for an optical viewfinder user like me so I looked through the manual and the menus to switch off the thing. My brief flirtation with the image level display met an end for much the same reason though it’s good that it’s there. There is some horizon auto-correction available as a feature and this is left on to see what it offers since there have been a multitude of times when I needed to sort out crooked horizons caused by my handholding the camera.

The K5 II may have a 3″ screen on its back but it has done nothing to increase the size of the camera. If anything, it is smaller than the K10D and that usefully means that I am not on the lookout for a new camera holster. Not having a bigger body also means there is little change in how the camera feels in the hand compared with the older one.

In many ways, the K5 II works very like the K10D once I took control over settings that didn’t suit me. Both have Shake Reduction in their camera bodies though the setting has been moved into the settings menu in the new camera when the older one had a separate switch on its body. Since I’d be inclined to leave it on all the time and prefer not to have it knocked off accidentally, this is not an issue. Otherwise, many of the various switches are in the same places so it’s not that hard to find my way around them.

That’s not to say that there aren’t other changes like the addition of a lock to the mode dial but I have used Canon EOS camera bodies with that feature so I do not consider it a step backwards. The exposure compensation button has been moved to the top of the camera where I found it very easily and have been using perhaps more than on the K10D; it’s also something that I use on the G11 so the experimentation is being brought across to the K5 II now as well. Beside it, there’s a new ISO button so further experimentation can be attempted with that to see how it does.

If I have any criticism, it’s about the clutter of the menus on the K5 II. The long lists through which you scrolled on the K10D have been replaced with a series of extra tabs so that on-screen scrolling is not needed as before. However, I reckon that this breaks up things too much and makes working through the settings look more foreboding to anyone who is not so technical in mindset. Nevertheless, settings such as the type of file to capture are there and I continue to use RAW DNG files as is usual for me though JPEG and Pentax’s own RAW format also are there. For a while, I forgot to set the date, soon found out what I did and the situation was remedied. The same sort of thing applied to storing files in different folders according to the capture date. For my own reasons, I turned this off to put everything into a single PENTX directory to suit my own workflow. My latest discovery among the menus was the ability to add photographer and copyright holder information to the EXIF metadata attached to the image files created by the camera. With legislative proposals that dilute the automatic rights of copyright holders going through the U.K. parliament, this seems a very timely inclusion even if most would prefer that there was no change to copyright law.

Of course, the worth of any camera is in the images that it produces and I have been happy with what I have been getting so far. The bigger files mean fewer images fit on a memory card than before. Thankfully, SDHC card capacities have grown even if I don’t wish to machine gun my photography altogether. While out and about, I was surprised to see apertures like F/14 and F/18 when I was more accustomed to a progression like F/11, F/13, F/16, F/19, F/22, etc. Most of those older values still are there though so there hasn’t been a complete break with convention. The same comment applies to shutter speeds where ones like 1/100 and 1/160 made their appearance where I might have expected just ones like 1/90, 1/125, 1/250 and so on. The extra possibilities, and that is what they are, do allow more flexibility I suppose and may even make it easier to make correct exposures though any judgement of correctness has to be in the eye of a photographer and not what a computer algorithm in a camera determines. For much of the time until now, I have stuck with an ISO of 400 apart from a little testing in a woodland area of an evening soon after the camera arrived.
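
The unfamiliar aperture values make more sense once the arithmetic is laid out. As a rough sketch, and assuming the usual convention that each full stop multiplies the f-number by the square root of two, the shell function below (f_numbers is a name of my own choosing) prints the unrounded values between F/11 and F/22 for a chosen number of steps per stop. Cameras display rounded versions of these, so a computed 13.5 is what appears as F/13 in a half-stop series.

```shell
#!/bin/sh
# Print f-numbers between f/11 and f/22 for a given number of steps per stop.
# Assumption: an f-number that is n stops above f/1 equals 2^(n/2).
f_numbers() {
    steps=$1    # 2 for half stops, 3 for third stops
    awk -v s="$steps" 'BEGIN {
        # f/11 sits 7 stops above f/1 and f/22 sits 9 stops above it
        for (i = 7 * s; i <= 9 * s; i++)
            printf "f/%.1f\n", 2 ^ (i / (2 * s))
    }'
}
```

Calling f_numbers 2 lists the half-stop series that I was used to while f_numbers 3 lists the third-stop series, which is where values close to 14 and 18 come from.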

Since the K5 II came my way a few months ago, I have been meaning to collect my thoughts on here and there has been a delay while I brought my thinking to a sensible close. At one point, it felt like there was so much to say that the piece became larger in my mind than even what you have been reading now. After all, there are other things that I can adjust to see how the resulting images look and white balance is but one of these. The K10D isn’t beyond experimentation either, especially since I discovered that shake reduction was switched off and it has me asking if that lack of quality that I mentioned earlier has another explanation. Of course, actually making use of my tripod would be another good suggestion so it’s safe to say that yet more photographic explorations await.

Command Line Processing of EXIF Image Metadata

8th July 2013

There is a bill making its way through the U.K. parliament at the moment that could reduce the power of copyright when it comes to images placed on the web. The current situation is that anyone who creates an image automatically holds the copyright for it. However, the new legislation will remove that if it becomes law as it stands. As it happens, the Royal Photographic Society is doing what it can to avoid any changes to what we have now. There may be the barrier of due diligence but how many of us take steps to mark our own intellectual property? For one, I have been less than attentive to this and now wonder if there is anything more that I should be doing. Others may copyleft their images but I don’t want to find myself unable to share my own photos because another party is claiming rights over them. There’s watermarking them but I want to add something to the image metadata too.

That got me wondering about adding metadata to any images that I post online that assert my status as the copyright holder. It may not be perfect but any action is better than doing nothing at all. Given that I don’t post photos where EXIF metadata is stripped as part of the uploading process, it should be there to see for anyone who bothers to check and there may not be many who do.

Because I also wanted to batch process images, I looked for a command line tool to do the needful and found ExifTool. Being a Perl library, it is cross-platform so you can use it on Linux, Windows and even OS X. To install it on a Debian or Ubuntu based Linux distro, just use the following command:

sudo apt-get install libimage-exiftool-perl

The form of the command that I found useful for adding the actual copyright information is below:

exiftool -P "-copyright=(c) John …" -ext jpg -overwrite_original .

The -P switch (a capital letter is needed since the lowercase -p sets a print format instead) preserves the modification timestamp of the image file while the -overwrite_original one ensures that you don’t end up with unwanted backup files. The copyright message goes within the quotes along with the -copyright option, and the dot at the end tells ExifTool to work on the current directory with -ext jpg limiting it to JPEG files. With a little shell scripting, you can traverse a directory structure and change the metadata for any image files contained in different sub-folders. If you wish to do more than this, there’s always the user documentation to be consulted.
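
As a sketch of the directory traversal mentioned above, ExifTool can do the walking itself through its -r (recurse) option, so very little scripting is needed. The wrapper below is hypothetical: the function name stamp_copyright and the EXIFTOOL variable are my own, with the latter there so that the command can be swapped for echo as a dry run. It uses the capital -P option, which is the one that ExifTool documents for preserving file modification times.

```shell
#!/bin/sh
# Hypothetical wrapper: stamp a copyright notice on every JPEG below a directory.
# EXIFTOOL may be overridden (for example with "echo") to preview the command.
EXIFTOOL=${EXIFTOOL:-exiftool}

stamp_copyright() {
    notice=$1          # text for the Copyright tag
    dir=${2:-.}        # directory to process; defaults to the current one
    # -r recurses into sub-folders, -P keeps each file's modification time
    "$EXIFTOOL" -P -r -ext jpg -overwrite_original "-copyright=$notice" "$dir"
}
```

A call would look like stamp_copyright "(c) Example Name" ~/photos, where both the name and the directory are placeholders. Afterwards, running exiftool -copyright on one of the files should show whether the tag was written.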

Sometimes, a firmware update is in order

28th February 2011

After a recent trip to Oxford, I have started to mull over adding a longer lens (could make more distant architectural detail photos a possibility) to complement my trusty Sigma 18-125mm f/3.8-5.6 DC HSM zoom lens that now is approaching its third year in my hands. While I have made no decision about the acquisition of another lens, there are some tempting bargains out there, it seems. However, the real draw on my attention is the lack of autofocus with the aforementioned Sigma and I now find it hard to believe that I was blaming the manufacturer for not keeping up with Pentax when it really was the other way around. A bit of poking around on the web revealed that all that I needed to do was download a firmware update from the Pentax website. While being slowed down by the lack of autofocus cannot have done bad things for my photography, I still wonder at why I didn’t try updating the camera for as long as I have.

In the file for updating my K10D, there was a README file containing the instructions for carrying out the update with the included binary file that was set to take the camera from version 1.00 to 1.30 (hold down the Menu button while starting the camera to see what you have). In summary, both files were copied onto an SD card that was inserted into the camera while it was turned off. The next step was to power up the camera with the menu button held down to start the update. To stop erroneous updates, there is an “Are you sure?” style Yes/No menu popped up before anything else happens. Selecting Yes sets things into motion and you have to wait until the word “COMPLETE” appears in the bottom left corner before turning off the camera and removing the card. Now that I think of it, I should have checked the battery before doing anything because the consequences of losing power in the middle of what I was doing would have been annoying, especially with my liking the photographic results produced by the camera.

Risk taking aside, the process was worth its while with HSM now working as it should have done all this time. It seems quiet and responsive too from my limited tests to date. Even better, the autofocus doesn’t hunt anywhere near as much as the 18-55 mm Pentax kit lens that came with the camera. The next decision is whether to stick with my manual focussing ways or lapse into trusting autofocus from now on though my better reason is to stick with the slower approach unless the subjects are fast. Now that I think of it, train and bus photos for my transport website have become a whole lot easier as have any wildlife photos that I care to capture. Speaking of the latter brings me back to that telephoto quandary that I mentioned at the beginning. Well, there’s a tempting Sigma 50-200 mm that has caught my eye…

An avalanche of innovation?

23rd September 2010

Almost in spite of the uncertain times, or maybe because of them, it feels like an era of change on the technology front. Computing is the domain of many of the postings on this blog and a hell of a lot seems to be going mobile at the moment. For a good while, I managed to stay clear of the attractions of smartphones until a change of job convinced me that having a BlackBerry was a good idea. Though the small size of the thing really places limitations on the sort of web surfing experience that you can have with it, you can keep an eye on the weather, news, traffic, bus and train times so long as the website in question is built for mobile browsing. Otherwise, it’s more of a nuisance than a patchy phone network (in the U.K., T-Mobile could do better on this score as I have discovered for myself; thankfully, a merger with the Orange network is coming next month).

Speaking of mobile websites, it almost feels as if a free for all has recurred for web designers. Just when the desktop or laptop computing situation had more or less stabilised, along come a whole pile of mobile phone platforms to make things interesting again. Familiar names like Opera, Safari, Firefox and even Internet Explorer are to be found popping up on handheld devices these days along with less familiar ones like Web ‘n’ Walk or BOLT. The operating system choices vary too with iOS, Android, Symbian, Windows and others all competing for attention. It is the sort of flowering of innovation that makes one wonder if a time will come when things begin to consolidate but it doesn’t look like that at the moment.

The transformation of mobile phones into handheld computers isn’t the only big change in computing with the traditional formats of desktop and laptop PCs being flexed in all sorts of ways. First, there’s the appearance of netbooks and I have succumbed to the idea of owning an Asus Eee. Though you realise that these are not full size laptops, it still didn’t hit me how small these were until I owned one. They are undeniably portable and tablets look even more interesting in the aftermath of Apple’s iPad. You may call them over-sized mobile phones but the idea of making a touchscreen do the work for you has made the concept fly for many. Even so, I cannot say that I’m overly tempted though I have said that before about other things.

Another area of interest for me is photography and it is around this time of year that all sorts of innovations are revealed to the public. It’s a long way from what we thought was the digital photography revolution when digital imaging sensors started to take the place of camera film in otherwise conventional compact and SLR cameras, making the former far more versatile than they used to be. Now, we have SLD cameras from Olympus, Panasonic, Samsung and Sony that eschew the reflex mirror and prism arrangement of an SLR, using digital sensors and electronic viewfinders while offering the possibility of lens interchangeability and better quality than might be expected from such small cameras. In recent months, Sony has offered SLR-style cameras with translucent mirror technology instead of the conventional mirror that is flipped out of the way when a photographic image is captured. Change doesn’t end there with movie making capabilities being part of the toolset of many a newly launched compact, SLD and SLR camera. The pixel race also seems to have ended though increases still happen as with the Pentax K-5 and Canon EOS 60D (both otherwise conventional offerings that have caught my eye though so much comes on the market at this time of year that waiting is better for the bank balance).

The mention of digital photography brings to mind the subject of digital image processing and Adobe Photoshop Elements 9 has just been announced after Photoshop CS5 appeared earlier this year. It almost feels as if a new version of Photoshop or its consumer cousin is released every year, causing me to skip releases when I don’t see the point. Elements 6 and 8 were such versions for me and I’ll be in no hurry to upgrade to 9 yet either though the prospect of using content aware filling to eradicate unwanted objects from images is tempting. Nevertheless, that shouldn’t stop anyone trying to exclude them in the first place. In fact, I may need to reduce the overall number of images that I collect in favour of bringing away only good ones. The outstanding question on this is whether I can slow down and calm my eagerness to bring at least one good image away from an outing by capturing anything that seems promising at the time. Some experimentation is fine but being a little more choosy can save work later on.

While back on the subject of software, I’ll voyage into the world of the web before bringing these meanderings to a close. It almost feels as if there is web-based application following web-based application these days when Twitter and Facebook nearly have become household names and cloud computing is a phrase that turns up all over the place. In fact, the former seems to have encouraged a whole swathe of applications all by itself. Applications written using technologies well used on the web must stuff many a mobile phone app store too and that brings me full circle for it is these that put so much functionality on our handsets with Java seemingly powering those I use on my BlackBerry. Then there’s the spat between Apple and Adobe regarding the former’s support for Flash.

To close this mental amble, there may be technologies that didn’t come to mind while I was pondering this piece but they doubtless enliven the technological landscape too. However, what I have described is enough to take me back more than ten years to when desktop computing and the world of the web were a lot more nascent than is the case today. Then, the changes that were ongoing felt a little exciting now that I look back on them and it does feel as if the same sort of thing is recurring though with things like phones creating the interest in place of new developments in desktop computing such as a new version of Windows (though 7 was anticipated after Vista). Web designers may complain about a lack of standardisation and they’re not wrong but this may be an era of technological change that in time may be remembered with its own fondness too.

A bigger screen?

23rd February 2010

A recent bit of thinking has caused me to cast my mind back over all the screens that have sat in front of me while working with computers over the years. Well, things have come a long way from the spare television that I used with a Commodore 64 on the occasions when I got to exploring the thing. Needless to say, a variety of dedicated CRT screens ensued as I started to make use of Apple and IBM compatible PCs provided in computing labs and other such places before I bought an example of the latter as my first ever PC of my own. That sported a 15″ display that stood out a little in times when 14″ ones were mainstream but a 17″ Iiyama followed it when its operational quality deteriorated. That Iiyama came south with me from Edinburgh as I moved to where the work was and offered sterling service before it too started to succumb to aging.

During the time that the Iiyama CRT screen was my mainstay at home, there were changes afoot in the world of computer displays. A weighty 21″ Philips screen was what greeted me on a first day at work but 21″ Eizo LCD displays were set to replace those behemoths and remain in use as if to prove the longevity of LCD panels and the validity of using what had been sufficient for laptops for a decade or so. In fact, the same comment regarding reliability applies to the screen that now is what I use at home, a 17″ Iiyama LCD panel (yes, I stuck with the same brand when I changed technologies longer ago than I like to remember).

However, that hasn’t stopped me wondering about my display needs and it’s screen size that is making me think rather than the reliability of the current panel. That is a reflection on how my home computing needs have changed over time and they show how my non-computing interests have evolved too. Photography is but one of these and the move to digital capture has brought with it a greater deal of image processing, so much that I wonder if I need to make fewer photos rather than bringing home so many that it can be hard to pick out the ones that are deserving of a wider viewing. That is but one area where a bigger screen would help but there is another and it arises from my interest in exploring countryside on foot or on my bike: digital mapping. When planning outings, it would be nice to have a wider field of view to be able to see more at a larger scale.

None of the above is the showstopper that it would be if the screen itself were unreliable so I am going to take my time on this one. The prospect of sharing desktops across two screens is another idea but that needs some thought about where it all would fit; the room that I have set aside for working at my computer isn’t the largest but it’ll need to do. After the space side of things, then there’s the matter of setting up the hardware. Quite how a dual display is going to work with a KVM setup is something to explore as is the adding of extra video cards to existing machines. After the hardware fiddling, the software side of things is not a concern for me because I used a laptop as my main machine for a while last year. That confirmed that Windows (Vista but it has been possible since 2000 anyway…) and Ubuntu (other modern Linux distributions should work too…) can cope with desktop sharing out of the box.

Apart from the nice thoughts of having more desktop space, the other tempting side to all of this is what you can get for not much outlay. It isn’t impossible to get a 22″ display for less than £200 and the prices for 24″ ones are tempting too. That’s a far cry from paying close to £300 (if my memory serves me correctly) for that 17″ Iiyama and I’d hope that the quality is as good as ever.

It’s all very well talking about pricing but you need to sit down and choose a make and model when you get to deciding on a purchase. There is plenty of choice so that would take a while but magazine reviews will come in handy here. Saying that, last year’s computing misadventures have me questioning the sense of going for what a magazine places on its A-list. They also have me minded to go to a nearby computer shop to make a purchase rather than choosing a supplier on the web; it is easier to take back a faulty unit if you don’t have far to go. Speaking of faulty units, last year has left me contemplating waiting until the year is older before making any acquisitions of computer kit. All of that has put the idea of buying a new screen on the low priority list, nice to have but not essential. For now, that is where it stays but you never know what the attractions of a shiny new thing can do…