When a hard drive is unrecognised by the Linux hddtemp command

15th August 2021One should not do a new PC build in the middle of a heatwave if you do not want to be concerned about how fast fans are spinning and how hot things are getting. Yet, that is what I did last month after delaying the act for numerous months.

My efforts mean that I have a system built around an AMD Ryzen 9 5950X CPU and a Gigabyte X570 Aorus Pro with 64 GB of memory and things are settling down after the initial upheaval. That also meant some adjustments to the CPU fan profile in the BIOS for quieter running while the the use of Be Quiet! Dark Rock 4 cooler also helps as does a Be Quiet! Silent Wings 3 case fan. All are components from trusted brands though I wonder how much abuse they got during their installation and subsequent running in.

Fan noise is a non-quantitative indicator of heat levels as much as touch so more quantitative means are in order. Aside from using a thermocouple device, there are in-built sensors too. My using Linux Mint means that I have the sensors command from the lm-sensors package for checking on CPU and other temperatures though hddtemp is what you need for checking on the same for hard drives. The latter can be used as follows:

sudo hddtemp /dev/sda /dev/sdb

This has to happen using administrator access and a list of drives needs to be provided because it cannot find them by itself. In my case, I have no mechanical hard drives installed in non-NAS systems and I even got to replacing a 6 TB Western Digital Green disk with an 8 TB SSD but I got the following when I tried checking on things with hddtemp:

WARNING: Drive /dev/sda doesn't seem to have a temperature sensor.

WARNING: This doesn't mean it hasn't got one.

WARNING: If you are sure it has one, please contact me ([email protected]).

WARNING: See --help, --debug and --drivebase options.

/dev/sda: Samsung SSD 870 QVO 8TB: no sensor

The cause of the message for me was that there is no entry for Samsung SSD 870 QVO 8TB in /etc/hddtemp.db so that needed to be added there. Before that could be rectified, I needed to get some additional information using smartmontools and these needed to be installed using the following command:

sudo apt-get install smartmontools

What I needed to do was check the drive’s SMART data output for extra information and that was achieved using the following command:

sudo smartctl /dev/sda -a | grep -i Temp

What this does is to look for the temperature information from smartctl output using the grep command with output from the first being passed to the second through a pipe. This yielded the following:

190 Airflow_Temperature_Cel 0x0032 072 050 000 Old_age Always - 28

The first number in the above (190) is the thermal sensor’s attribute identifier and that was needed in what got added to /etc/hddtemp.db. The following command added the necessary data to the aforementioned file:

echo \"Samsung SSD 870 QVO 8TB\" 190 C \"Samsung SSD 870 QVO 8TB\" | sudo tee -a /etc/hddtemp.db

Here, the output of the echo command was passed to the tee command for adding to the end of the file. In the echo command output, the first part is the name of the drive, the second is the heat sensor identifier, the third is the temperature scale (C for Celsius or F for Fahrenheit) and the last part is the label (it can be anything that you like but I kept it the same as the name). On re-running the hddtemp command, I got output like the following so all was as I needed it to be.

/dev/sda: Samsung SSD 870 QVO 8TB: 28°C

Since then, temperatures may have cooled and the weather become more like what we usually get but I am still keeping an eye on things, especially when the system is put under load using Perl, R, Python or SAS. There may be further modifications such as changing the case or even adding water cooling, not least to have a cooler power supply unit, but nothing is being rushed as I monitor things to my satisfaction.

Carrying out a hard reset of a home KVM switch

20th March 2017During a recent upgrade from Linux Mint 18 to Linux Mint 18.1 on a secondary machine, I ran into bother with my Startech KVM (keyboard, video, mouse and audio sharing) switch. The PC failed to recognise the attachment of my keyboard and mouse so an internet search began.

Nothing promising came from it apart from resetting the KVM switch. In other words, the solution was to turn it off and back on again. That was something that I did try without success. What I had overlooked was that there USB connections to PC’s that fed the device with a certain amount of power and that was enough to keep it on.

Unplugging those USB cables as well as the power cable was needed to completely switch off the device. That provided the reset that I needed and all was well again. Otherwise, I would have been baffled enough to resort to buying a replacement KVM switch so the extra information avoided a purchase that could have cost in the region of £100. In other words, a little research had saved me money.

Pondering travel device consolidation using an Apple iPad Pro 12.9″

18th September 2016It was a change of job in 2010 that got me interested in using devices with internet connectivity on the go. Until then, the attraction of smartphones had not been strong, but I got myself a Blackberry on a pay as you go contract, but the entry device was painfully slow, and the connectivity was 2G. It was a very sluggish start.

It was supplemented by an Asus Eee PC that I connected to the internet using broadband dongles and a Wi-Fi hub. This cumbersome arrangement did not work well on short journeys and the variability of mobile network reception even meant that longer journeys were not all that successful either. Usage in hotels and guest houses though went better and that has meant that the miniature laptop came with me on many a journey.

In time, I moved away from broadband dongles to using smartphones as Wi-Fi hubs and that largely is how I work with laptops and tablets away from home unless there is hotel Wi-Fi available. Even trips overseas have seen me operate in much the same manner.

One feature is that we seem to carry quite a number of different gadgets with us at a time and that can cause inconvenience when going through airport security since they want to screen each device separately. When you are carrying a laptop, a tablet, a phone and a camera, it does take time to organise yourself and you can meet impatient staff, as I found recently when returning from Oslo. Checking in whatever you can as hold luggage helps to get around at least some of the nuisance and it might be time for the use of better machinery to cut down on having to screen everything separately.

When you come away after an embarrassing episode as I once did, the attractions of consolidating devices start to become plain. In fact, most probably could get with having just their phone. It is when you take activities like photography more seriously that the gadget count increases. After all, the main reason a laptop comes on trips beyond Britain and Ireland at all is to back up photos from my camera in case an SD card fails.

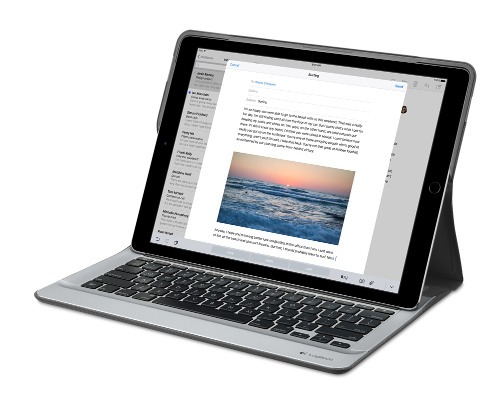

Parking that thought for a while, let’s go back to March this year when temptation overcame what should have been a period of personal restraint. The result was that a 32 GB 12.9″ Apple iPad Pro came into my possession along with an Apple Pencil and a Logitech CREATE Backlit Keyboard Case. It should have done so, but the size of the screen did not strike me until I got it home from the Apple Store. That was one of the main attractions because maps can be shown with a greater field of view in a variety of apps, a big selling point for a hiker with a liking for maps, who wants more than what is on offer from Apple, Google or even Bing. The precision of the Pencil is another boon that makes surfing the website so much easier and the solid connection between the case and the iPad means that keyboard usage is less fiddly than it would if it used Bluetooth. Having tried them with the BBC iPlayer app, I can confirm that the sound from the speakers is better than any other mobile device that I have used.

Already, it has come with me on trips around England and Scotland. These weekend trips saw me leave the Asus Eee PC stay at home when it normally might have come with me and taking just a single device along with a camera or two had its uses too. The screen is large for reading on a train but I find that it works just as well so long as you have enough space. Otherwise, combining use of a suite of apps with recourse to the web does much of the information seeking needed while on a trip away and I was not found wanting. Battery life is good too, which helps.

Those trips allowed for a little light hotel room blog post editing too and the iPad Pro did what was needed, though the ergonomics of reaching for the screen with the Pencil meant that my arm was held aloft more than was ideal. Another thing that raised questions in my mind is the appearance of word suggestions at the bottom of the screen as if this were a mobile phone since I wondered if these were more of a hindrance than a help given that I just fancied typing and not pointing at the screen to complete words. Copying and pasting works too but I have found the screen-based version a little clunky so I must see if the keyboard one works just as well, though the keyboard set up is typical of a Mac so that affects word selection. You need to use the OPTION key in the keyboard shortcut that you use for this and not COMMAND or CONTROL as you might do on a PC.

Even with these eccentricities, I was left wondering if it had any utility when it came to backing up photos from digital cameras and there is an SD card adapter that makes this possible. A failure of foresight on my part meant that the 32 GB capacity now is an obvious limitation but I think I might have hit on a possible solution that does not need to upload to an iCloud account. It involves clearing off the photos onto a 128 GB Transcend JetDrive Go 300 so they do not clog up the iPad Pro’s storage. That the device has both Lightning and USB connectivity means that you can plug it into a laptop or desktop PC afterwards too. If that were to work as I would hope, then the laptop/tablet combination that I have been using for all overseas trips could be replaced to allow a weight reduction as well as cutting the hassle at airport security.

Trips to Ireland still may see my sticking with a tried and tested combination though because I often have needed to do some printing while over there. While I have been able to print a test document from an iPad Mini on my home network-connected printer, not every model supports this and that for NFC or Air Print is not universal either. If this were not an obstacle, apps like Pages, Numbers and Keynote could have their uses for business-related work and there are web-based offerings from Google, Microsoft and others too.

In conclusion, I have found that my iPad Pro does so much of what I need on a trip away that retiring the laptop/tablet combination for most of these is not as outrageous as it once would have seemed. In some ways, iOS has a way to go yet before it could take over from macOS but it remains in development so it will be interesting to see what happens next. All the while, hybrid devices running Windows 10 are becoming more pervasive and that might provide Apple with the encouragement that it needs.

Migrating to Windows 10

10th August 2015While I have had preview builds of Windows 10 in various virtual machines for the most of twelve months, actually upgrading physical and virtual devices that you use for more critical work is a very different matter. Also, Windows 10 is set to be a rolling release with enhancements coming on an occasional basis so I would like to see what comes before it hits the actual machines that I need to use. That means that a VirtualBox instance of the preview build is being retained to see what happens to that over time.

Some might call it incautious but I have taken the plunge and completely moved from Windows 8.1 to Windows 10. The first machine that I upgraded was more expendable and success with that encouraged me to move onto others before even including a Windows 7 machine to see how that went. The 30-day restoration period allows an added degree of comfort when doing all this. The list of machines that I upgraded were a VMware VM with 32-bit Windows 8.1 Pro (itself part of a 32-bit upgrade cascade involving Windows 7 Home and Windows 8 Pro), a VirtualBox VM with 64-bit Windows 8.1, a physical PC that dual booted Linux Mint 17.2 and 64-bit Windows 8.1 and an HP Pavilion dm4 laptop (Intel Core i3 with 8 GB RAM and a 1 TB SSHD) with Windows 7.

The main issue that I uncovered with the virtual machines is that the Windows 10 update tool that is downloaded onto Windows 7 and 8.x does not accept the graphics capability on there. This is a bug because the functionality works fine on the Windows Insider builds. The solution was to download the appropriate Windows 10 ISO image for use in the ensuing upgrade. There are 32-bit and 64-bit disk images with Windows 10 and Windows 10 Pro installation files on each. My own actions used both disk images.

During the virtual machine upgrades, most of the applications that considered important were carried over from Windows 8.1 to Windows without a bother. Anyone would expect Microsoft’s own software like Word, Excel and others to make the transition, but others like Adobe’s Photoshop and Lightroom made it too, as did Mozilla’s Firefox, albeit requiring a trip to Settings to set it as the default option for opening web pages. Less well-known desktop applications like Zinio (digital magazines) or Mapyx Quo (maps for cycling, walking and the like) were the same. Classic Shell was an exception but the Windows 10 Start Menu suffices for now anyway. Also, there was a need to reinstate Bitdefender Antivirus Plus using its new Windows 10 compatible installation file. Still, the experience was a big change from the way things used to be in the days when you used to have to reinstall nearly all your software following a Windows upgrade.

The Windows 10 update tool worked well for the Windows 8.1 PC, so no installation disks were needed. Neither was the bootloader overwritten so the Windows option needed selecting from GRUB every time there was a system reboot as part of the installation process, a temporary nuisance that was tolerated since booting into Linux Mint was preserved. Again, no critical software was lost in the process apart from Kaspersky Internet Security, which needed the Windows 10 compatible version installed, much like Bitdefender, or Epson scanning software that I found was easy to reinstall anyway. Usefully, Anquet’s Outdoor Map Navigator (again used for working with walking and cycling maps) continued to function properly after the changeover.

For the Windows 7 laptop, it was much the same story, albeit with the upgrade being delivered using Windows Update. Then, the main Windows account could be connected to my Outlook account to get everything tied up with the other machines for the first time. Before the obligatory change of background picture, the browns in the one that I was using were causing interface items to appear in red, not exactly my favourite colour for application menus and the like. Now they are in blue and all the upheaval surrounding the operating system upgrade had no effect on the Dropbox or Kaspersky installations that I had in place before it all started. If there is any irritation, it is that unpinning of application tiles from the Start Menu or turning off of live tiles is not always as instantaneous as I would have liked and that is all done now anyway.

While writing the above, I could not help thinking that more observations on Windows 10 may follow, but these will do for now. Microsoft had to get this upgrade process right and it does appear that they have, so credit is due to them for that. So far, I have Windows 10 to be stable and will be seeing how things develop from here, especially when those new features arrive occasionally as is the promise that has been made to us users. Hopefully, that will be as painless as it needs to be to ensure trust is retained.

Dropping back to a full screen terminal session from a desktop one in Linux

29th May 2014There are times when you might need to drop back to a full screen terminal session from a graphical desktop environment when running Linux. One example that I have encountered is the installation of Nvidia’s own graphics drivers on either Ubuntu or Linux Mint. Another happened to me on Arch Linux when a Cinnamon desktop environment update left me without the ability to open up a terminal window. Then, the full screen command allowed me to add in an alternative terminal emulator and Tech Drive-in’s list came in handy for this. there might have been something to sort on a FreeBSD installation that needed the same treatment. This latter pair happened to me on sessions running within VirtualBox and that has its own needs when it comes to dropping into a full screen command line session and I will come to that later.

When running Linux on a physical PC, the keyboard shortcuts that you are CTRL + ALT + F1 for entering a full screen terminal session and CTRL + ALT + F7 for returning to the graphical desktop again. When you are running a Linux guest in VirtualBox and the host operating system also is Linux, then the aforementioned shortcuts do not work within the virtual machine but instead affect the host. To get the guest operating system to drop into a full screen terminal session, the keyboard shortcut you need is [Host Key] + F1 and the default host key is the right hand CTRL key on your keyboard unless you have assigned to something else as I have. The exit the full screen terminal session and return to the graphical desktop again, the keyboard shortcut is [Host Key] + F7.

All of this works with GNOME and Cinnamon desktop environments running in X sessions and I cannot vouch for anything else unless alternatives like Wayland come my way. Hopefully, this useful functionality applies to those other set-ups too because there are times when a terminal session is needed to recover from a mishap, rare though those thankfully are and they need to be very rare if not non-existent for most users.

Wiping of hard drives with Linux

2nd December 2013More than a decade of computer upgrades and rebuilds can leave obsolete kit in your hands and the arrival of legislation controlling the dumping of electronic goods during this time can leave one wondering how anyone can dispose of them. Thankfully, I discovered that the local council refuse site only a few miles away from me accepts such things for recycling and saw me a good few times over the last summer with obsolete and non-working gadgets that has stayed with me far too long. Some were as bulky as a computer monitor and a printer but others were relatively diminutive.

Disposing of non-working and utterly obsolete equipment is an easy choice but I find this is harder when a device still works as intended and even might have a use yet. When you realise that computer motherboards still come with PS/2, floppy and IDE ports, things get trickier. My Gigabyte Z87-HD3 mainboard just has one PS/2 when predecessors would have had two and the same applies to IDE sockets and there still is a floppy drive socket on there too, a surprising sight for anyone used to thinking that such things are utterly outmoded these days. So, PC technology isn’t relinquishing backwards compatibility just yet since that mainboard is part of a system with an Intel Core i5-4670K CPU and 24 GB of RAM on there.

Even with that presence of an IDE port, I was not tempted to use leftover 10 GB and 20GB hard drives that I have had for just over a decade. Ten years ago, that sort of capacity would been respectable were it not for our voracious appetite for data storage thanks to photography, video and music. Apart from the size constraints, the speed of those drives cannot compare well with what we have today either and I quickly saw that when I replaced a Samsung 160 HD of a similar age with a Samsung SSD.

The result of this line of thought was that I was minded to recycle the drives so I started to think about wiping and Linux has a good tool for this in the form of the dd command. It can overwrite data on the disks so as to render the information virtually irretrievable. Also, Linux has a number of dummy devices that can supply junk data for overwriting purposes. They are like /dev/null which is used to suppress the issuing of output to the command. The first is /dev/zero which supplies octal zeros and I have used this. However, there also is /dev/random and /dev/urandom for those wanting a more random element to the overwriting.

To overwrite data on a disk with zeroes while having feedback on progress, the following command achieves the required result:

sudo dd if=/dev/zero | pv | sudo dd of=/dev/sdd bs=16M

The whole operation needs to be executed with root privileges and the if parameter of dd specifies the input data and this is sent to a pv command that shows a progress bar that dd would not produce by itself while sending the output on to another dd command with the disk to be overwritten specified using the of parameter. The bs parameter in that second dd command specifies the block size for the disk writing job. Unfortunately, pv is not installed by default so you need to add it yourself. On a Debian, Ubuntu or Linux Mint system, the command is the following:

sudo apt-get install pv

That pv sandwich also is invaluable for those times when dd is needed to copy partitions between different physical or virtual (in a virtual machine) disks. Without it, you might wonder what exactly is happening in the silence and that especially is concerning when you are retrying an operation that failed previously and it takes a while to complete each time.

Setting up a WD My Book Live NAS on Ubuntu GNOME 13.10

1st December 2013The official line from Western Digital is that they do not support the use of their My Book Live NAS drives with Linux or UNIX. However, what that means is that they only develop tools for accessing their products for Windows and maybe OS X. It still doesn’t mean that you cannot access the drive’s configuration settings by pointing your web browser at http://mybooklive.local/. In fact, not having those extra tools is no drawback at all since the drive can be accessed through your file manager of choice under the Network section and the default name is MyBookLive too so you easily can find the thing once it is connected to a router or switch anyway.

Once you are in the servers web configuration area, you can do things like changing its name, updating its firmware, finding out what network has been assigned to it, creating and deleting file shares, password protecting file shares and other things. These are the kinds of things that come in handy if you are going to have a more permanent connection to the NAS from a PC that runs Linux. The steps that I describe have worked on Ubuntu 12.04 and 13.10 with the GNOME desktop environment.

What I was surprised to discover that you cannot just set up a symbolic link that points to a file share. Instead, it needs to be mounted and this can be done from the command line using mount or at start-up with /etc/fstab. For this to happen, you need the Common Internet File System utilities and these are added as follows if you need them (check on the Software Software or in Synaptic):

sudo apt-get install cifs-utils

Once these are added, you can add a line like the following to /etc/fstab:

//[NAS IP address]/[file share name] /[file system mount point] cifs

credentials=[full file location]/.creds,

iocharset=utf8,

sec=ntlm,

gid=1000,

uid=1000,

file_mode=0775,

dir_mode=0775

0 0

Though I have broken it over several lines above, this is one unwrapped line in /etc/fstab with all the fields in square brackets populated for your system and with no brackets around these. Though there are other ways to specify the server, using its IP address is what has given me the most success and this is found under Settings > Network on the web console. Next up is the actual file share name on the NAS and I have used a custom them instead of the default of Public. The NAS file share needs to be mounted to an actual directory in your file system like /media/nas or whatever you like and you will need to create this beforehand. After that, you have to specify the file system and it is cifs instead of more conventional alternatives like ext4 or swap. After this and before the final two space delimited zeroes in the line comes the chunk that deals with the security of the mount point.

What I have done in my case is to have a password protected file share and the user ID and password have been placed in a file in my home area with only the owner having read and write permissions for it (600 in chmod-speak). Preceding the filename with a “.” also affords extra invisibility. That file then is populated with the user ID and password like the following. Of course, the bracketed values have to be replaced with what you have in your case.

username=[NAS file share user ID]

password=[NAS file share password]

With the credentials file created, its options have to be set. First, there is the character set of the file (usually UTF-8 and I got error code 79 when I mistyped this) and the security that is to be applied to the credentials (ntlm in this case). To save having no write access to the mounted file share, the uid and gid for your user needs specification with 1000 being the values for the first non-root user created on a Linux system. After that, it does no harm to set the file and directory permissions because they only can be set at mount time; using chmod, chown and chgrp later on has no effect whatsoever. Here, I have set permissions to read, write and execute for the owner and the user group while only allowing read and execute access for everyone else (that’s 775 in the world of chmod).

All of what I have described here worked for me and had to gleaned from disparate sources like Mount Windows Shares Permanently from the Ubuntu Wiki, another blog entry regarding the permissions settings for a CIFS mount point and an Ubuntu forum posting on mounting CIFS with UTF-8 support. Because of the scattering of information, I just felt that it needed to all together in one place for others to use and I hope that fulfils someone else’s needs in a similar way to mine.

Creating a test web server using Ubuntu Server 13.04 and VirtualBox

1st September 2013Having seen Linux Format cover tools like Vagrant and Puppet that manage virtual machines, I have been attracted by the prospect of a virtual web server running on my own PC. Certainly, having the LAMP software stack in a VM means that the corresponding tools don’t need to be added to a host system should its operating system need a fresh installation.

As intriguing as tools like Vagrant may be, I decided that I needed to learn a bit more about getting server instances set up in VirtualBox anyway. Thus, I went and downloaded the latest version of Ubuntu Server and gave that a go. One lesson that I learned was that Bridged Networking needs to be added to the VM before installation of the operating system unless you fancy overcoming the challenge of getting Ubuntu Server to recognise an altered or additional network interface. In my case, I added an extra adapter for the Bridged Networking and left the original in place as NAT. The reason for having Bridged Networking set up is that it allows access to the virtual web server from the host once you know the IP address and that information can be obtained by executing the ifconfig command on the virtual machine.

With the networking sorted, the next step was to install the 64-bit edition of Ubuntu Server. Unlike its desktop counterpart, this is all driven by text menus but remains fairly intuitive and there is hardly anything there that you wouldn’t see with another Linux distribution. A useful addition is the addition of a menu to select the type of server services that you’d like to see installed. From this, I chose the web server and SSH options and I seem to remember that there was a database server option too. If there was an FTP server option, I would have chosen that too but it was no ordeal to add ProFTPd later on anyway.

All of this set was done through the VirtualBox GUI just to keep life more straightforward. Even so, I only selected 12 MB of video memory and was tempted to cut the overall memory back from 512 MB but leaving things be for now. However, what I have begun to do is start and stop the virtual machine from the command line since servers are headless operations anyway. With SSH enabled, there is little need to have the VirtualBox GUI going. The command for starting the server is below:

VBoxManage startvm "Ubuntu Server" --type=headless

There is a VBoxHeadless command for the same end too but VBoxManage does what I need. The startvm option is what tells VBoxManage to start the server and the virtual machine’s name is enclosed in quotes. The --type=headless ensures that no window pops up. To stop the virtual web server cleanly, a command like the following is needed:

VBoxManage controlvm "Ubuntu Server" acpipowerbutton

Again, the VBoxManage command gets used and the acpipowerbutton option ensures that a clean shutdown is performed. Not doing so results in the server not fully starting up according to my experiences thus far. Getting the virtual web server to start and stop with the host machine itself starting and stopping but this looks more complex so I plan to leave things a while before trying that experiment.

A look at Windows 8.1

4th July 2013Last week, Microsoft released a preview of Windows 8.1 and some hailed the return of the Start button but the reality is not as simple as that. Being a Linux user, I am left wondering if ideas have been borrowed from GNOME Shell instead of putting back the Start Menu like it was in Windows 7. What we have got is a smoothing of the interface that is there for those who like to tweak settings and not available be default. GNOME Shell has been controversial too so borrowing from it is not an uncontentious move even if there are people like me who are at home in that kind of interface.

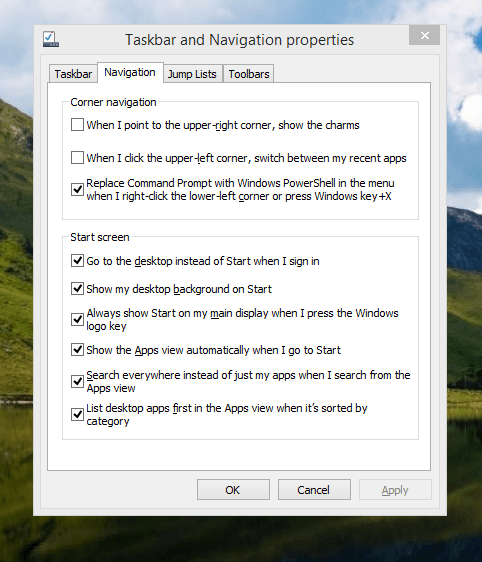

What you get now is more configuration options to go with the new Start button. Right clicking on the latter does get you a menu but this is no Start Menu like we had before. Instead, we get a settings menu with a “Shut down” entry. That’s better than before, which might be saying something about what was done in Windows 8, and it produces a sub-menu with options of shutting down or restarting your PC as well as putting it to sleep. Otherwise, it is place for accessing system configuration items and not your more usual software, not a bad thing but it’s best to be clear about these things. Holding down the Windows key and pressing X will pop up the same menu if you prefer keyboard shortcuts and I have a soft spot for them too.

The real power is to be discovered when you right click on the task bar and select the Properties entry from the pop-up menu. Within the dialogue box box that appears, there is the Navigation tab that contains a whole plethora of interesting options. Corner navigation can be scaled back to remove the options of switching between applications at the upper left corner or getting the charms menu from the upper right corner. Things are interesting in the Start Screen section. This where you tell Windows to boot to the desktop instead of the Start Screen and adjust what the Start button gives you. For instance, you can make it use your desktop background and display the Start Screen Apps View. Both of these make the new Start interface less intrusive and make the Apps View feel not unlike the way GNOME Shell overlays your screen when you hit the Activities button or hover over the upper left corner of the desktop.

It all seems rather more like a series of little concessions and not the restoration that some (many?) would prefer. Classic Shell still works for all those seeking an actual Start Menu and even replaces the restored Microsoft Start button too. So, if the new improvements aren’t enough for you, you still can take matters into your own hands until you start to take advantage of what’s new in 8.1.

Apart from the refusal to give us back a Windows 7 style desktop experience, we now have a touchscreen keyboard button added to the taskbar.So far, it always appears there even when I try turning it off. For me, that’s a bug and it’s something that I’d like to see fixed before the final release.

All in all, Windows 8.1 feels more polished than Windows 8 was and will be a free update when the production version is released. My explorations have taken place within a separate VMware virtual machine because updating a Windows 8 installation to the 8.1 preview is forcing a complete re-installation on yourself later on. There are talks about Windows 9 now but I am left wondering if going for point releases like 8.2, 8.3, etc. might be a better strategy for Microsoft. It still looks as if Windows 8 could do with continual polishing before it gets more acceptable to users. 8.1 is a step forward and more like it may be needed yet.

A need to update graphics hardware

16th June 2013Not being a gaming enthusiast, having to upgrade graphics cards in PC’s is not something that I do very often or even rate as a priority. However, two PC’s in my possession have had that very piece of hardware upgraded on them and it’s not because anything was broken either. My backup machine has seen quite a few Linux distros on there since I built it nearly four years ago. The motherboard is an ASRock K10N78 that sourced from MicroDirect and it has onboard an NVIDIA graphics chip that has performed well if not spectacularly. One glitch that always existed was a less than optimal text rendering in web browsers but that never was enough to get me to add a graphics card to the machine.

More recently, I ran into trouble with Sabayon 13.04 with only the 2D variant of the Cinnamon desktop environment working on it and things getting totally non-functional when a full re-installation of the GNOME edition was attempted. Everything went fine until I added the latest updates to the system when a reboot revealed that it was impossible to boot into a desktop environment. Some will relish this as a challenge but I need to admit that I am not one of those. In fact, I tried out two Arch-based distros on the same PC and got the same results following a system update on each. So, my explorations of Antergos and Manjaro have to continue in virtual machines instead.

To get a working system, I gave Linux Mint 15 Cinnamon a go and that worked a treat. However, I couldn’t ignore that the cutting edge distros that I tried before it all took exception to the onboard NVIDIA graphics. systemd has been implemented in all of these and it seems reasonable to think that it is coming to Linux Mint at some stage in the future so I went about getting a graphics card to add into the machine. Having had good experiences with ATi’s Radeon in the past, I stuck with it even though it now is in the hands of AMD. Not being that fussed so long there was Linux driver support, I picked up a Radeon HD 6450 card from PC World. Adding it into the PC was a simpler of switching off the machine, slotting in the card, closing it up and powering it on again. Only later on did I set the BIOS to look for PCI Express graphics before anything else and I could have got away without doing that. Then, I made use of the Linux Mint Additional Driver applet in its setting panel to add in the proprietary driver before restarting the machine to see if there were any visual benefits. To sort out the web browser font rendering, I used the Fonts applet in the same settings panel and selected full RGBA hinting. The improvement was unmissable if not still like the appearance of fonts on my main machine. Overall, there had been an improvement and a spot of future proofing too.

That tinkering with the standby machine got me wondering about what I had on my main PC. As well as onboard Radeon graphics, it also gained a Radeon 4650 card for which 3D support wasn’t being made available by Ubuntu GNOME 12.10 or 13.04 to VMware Player and it wasn’t happy about this when a virtual machine was set to have 3D support. Adding the latest fglrx driver only ensured that I got a command line instead of a graphical interface. Issuing one of the following commands and rebooting was the only remedy:

sudo apt-get remove fglrx

sudo apt-get remove fglrx-updates

Looking at the AMD website revealed that they no longer support 2000, 3000 or 4000 series Radeon cards with their latest Catalyst driver the last version that did not install on my machine since it was built for version 3.4.x of the Linux kernel. A new graphics card then was in order if I wanted 3D graphics in VWware VM’s and both GNOME and Cinnamon appear to need this capability. Another ASUS card, a Radeon HD 6670, duly was acquired and installed in a manner similar to the Radeon HD 6450 on the standby PC. Apart from not needing to alter the font rendering (there is a Font tab on Gnome Tweak Tool where this can be set), the only real exception was to add the Jockey software to my main PC for installation of the proprietary Radeon driver. The following command does this:

sudo apt-get install jockey-kde

When that was done I issue the jockey-kde command and selected the first entry on the list. The machine worked as it should on restarting apart from an AMD message at the bottom right hand corner bemoaning unrecognised hardware. There had been two entries on that Jockey list with exactly the same name so it was time to select the second of these and see how it went. On restarting, the incompatibility message had gone and all was well. VMware even started virtual machines with 3D support without any messages so the upgrade did the needful there.

Hearing of someone doing two PC graphics card upgrades in a weekend may make you see them as an enthusiast but my disinterest in computer gaming belies this. Maybe it highlights that Linux operating systems need 3D more than might be expected. The Cinnamon desktop environment now issues messages if it is operating in 2D mode with software 3D rendering and GNOME always had the tendency to fall back to classic mode, as it had been doing when Sabayon was installed on my standby PC. However, there remain cases where Linux can rejuvenate older hardware and I installed Lubuntu onto a machine with 10 year old technology on there (an 1100 MHz Athlon CPU, 1GB of RAM and 60GB of hard drive space in case dating from 1998) and it works surprisingly well too.

It seems that having fancier desktop environments like GNOME Shell and Cinnamon means having the hardware on which it could run. For a while, I have been tempted by the possibility of a new PC since even my main machine is not far from four years old either. However, I also spied a CPU, motherboard and RAM bundle featuring an Intel Core i5-4670 CPU, 8GB of Corsair Vengence Pro Blue memory and a Gigabyte Z87-HD3 ATX motherboard included as part of a pre-built bundle (with a heatsink and fan for the CPU) for around £420. Even for someone who has used AMD CPU’s since 1998, that does look tempting but I’ll hold off before making any such upgrade decisions. Apart from exercising sensible spending restraint, waiting for Linux UEFI support to mature a little more may be no bad idea either.

Update 2013-06-23: The new graphics card in my main machine is working as it should and has reduced the number of system error report messages turning up too; maybe Ubuntu GNOME 13.04 didn’t fancy the old graphics card all that much. A rogue .fonts.conf file was found in my home area on the standby machine and removing it has improved how fonts are displayed on there immeasurably. If you find one on your system, it’s worth doing the same or renaming it to see if it helps. Otherwise, tinkering with the font rendering settings is another beneficial act and it even helps on Debian 6 too and that uses GNOME 2! Seeing what happens on Debian 7.1 could be something that I go testing sometime.