Still able to build PC systems

25th October 2009

This weekend has been something of a success for me on the PC hardware front. Earlier this year, a series of mishaps rendered my former main home PC unusable; it was a power failure that finished it off for good. My remedy was a rebuild using my then usual recipe of a Gigabyte motherboard, AMD CPU and Crucial memory. However, assembling the said pieces never returned the thing to life and I ended up in no man’s land for a while, dependent on my backup machine and laptop. That wouldn’t have been so bad but for the need to access data from the old behemoth’s hard drives, though an external drive housing set that in order. Nevertheless, there is something unfinished about working with machines that have a series of external drives hanging off them. That appearance of disarray was set to rights by the arrival of a bare-bones system from Novatech in July, with any assembly work restricted to the kitchen table. There was a certain pleasure in seeing a system come to life after I had developed a fear that I had lost all of my PC building prowess.

That restoration of order still left me to find out why those components bought earlier in the year didn’t work together well enough to give me a screen display on start-up. Having electronics testing equipment and the knowledge of its correct use would make any troubleshooting far easier but I haven’t got these. There is a place near to me where I could go for this but you are left wondering what might be said about a PC build gone wrong. Of course, the last thing that you want to be doing is embarking on a series of purchases that do not fix the problem, especially in the current economic climate.

One thing to suspect when all doesn’t turn out as hoped is the motherboard and, for whatever reason, I always suspect it last. It now looks as if that needs to change after I discovered that it was the Gigabyte motherboard that was at fault. Whether it was faulty from the outset, came a cropper with a rogue power supply or fell victim to my carelessness with static protection is something that I’ll never know. An Asus motherboard did go rogue on me in the past and it might be that it ruined CPUs and even a hard drive before I laid it to rest. Its eventual replacement put a stop to a year of computing misfortune and kick-started my reliance on Gigabyte. That faith is under question now but the 2009 computing hardware mishap seems to be behind me. Any future PC rebuilds will be done on tables, and motherboards will be suspected earlier when anything goes awry.

Returning to the present, my acquisition of an ASRock K10N78 and subsequent building activities have brought a new system using an AMD Phenom X4 CPU and 4 GB of memory into use. In fact, I am writing these very words using the thing. It’s all in a new TrendSonic case too (placing an elderly behemoth into retirement) and with a SATA hard drive and DVD writer. The new motherboard has onboard audio and graphics so external cards are not needed unless you are an audiophile and/or a gamer; for the record, I am neither. Those additional facilities make for easier building and fault-finding should the undesirable happen.

The new box is running the release candidate of Ubuntu 9.10 and it seems to be working without a hitch too. Earlier builds of 9.10 broke in their VirtualBox VM so you should understand the level of concern that this aroused in my mind; the last thing that you want to be doing is reinstalling an operating system because its booting capability breaks every other day. Thankfully, the RC seems to have none of these rough edges so I can upgrade the Novatech box, still my main machine and likely to remain so for now, with peace of mind when the time comes.

Booting from external drives

16th September 2009

Sticking with older hardware may mean that you miss out on the possibilities offered by later kit, and being able to boot from external optical and hard disk drives was something of which I learned only recently. Like many things, it needs a compatible motherboard, and my enforced summer upgrade means that I have one with the requisite capabilities.

There is usually an external DVD drive attached to my main PC so that allowed the prospect of a test. A bit of poking around in the BIOS settings for the Foxconn motherboard was sufficient to get it looking at the external drive at boot time. Popping in a CrunchBang Linux live DVD was all that was needed to prove that booting from a USB drive was a goer. That CrunchBang is a minimalist variant of Ubuntu made for acceptable speed at system startup and afterwards.

Having lived off them while in home PC limbo, the temptation to test out the idea of installing an operating system on an external HD and booting from that is definitely there, though I think that I’ll be keeping mine as backup drives for now. Still, there’s nothing to stop me installing an operating system onto one of them and giving that a whirl sometime. Of course, speed constraints mean that any use of such an arrangement would be occasional but, in the event of an emergency, such a setup could have its uses and tide you over for longer than a Live CD or DVD. Having the chance to poke around with an alternative operating system as it might exist on a real PC has its appeal too and avoids the need for any partitioning and other chores that dual booting would require. After all, there’s only so much testing that can be done in a virtual machine.

Anquet and VirtualBox Shared Folders

18th July 2009

For a while now, I have had Anquet installed in a virtual machine instance of Windows XP but it has been throwing errors continuously on start-up. Perhaps surprisingly, it only dawned upon me recently what might have been the cause. A bit of fiddling revealed that my storing the mapping data Linux side and sharing it into the VM wasn’t helping, and copying it to a VM hard drive set things to rights. This type of thing can also cause problems when it comes to getting Photoshop to save files using VirtualBox’s Shared Folders feature. Untangling the situation is a multi-layered exercise. On the Linux side, permissions need to be in order and that involves some work with chmod (775 is my usual remedy) and chgrp to open things up to the vboxusers group. Adding in Windows’ foibles when it comes to networked drives and their mapping to drive letters brings extra complexity; shared folders are made visible to Windows as \\vboxsvr\shared_folder_name\. The solution is either a lot of rebooting, extensive use of the net use command or both. It induces the sort of toing and froing that makes copying things over and back as needed look less involved and more sensible, if a little more manual than might be liked.
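The Linux side of that permissions work can be sketched as a small shell helper. This is only an illustration under stated assumptions: open_to_group is a hypothetical name, the directory path is a placeholder, and applying 775 recursively marks every file as executable, which may be more permissive than you want.

```shell
# Hypothetical helper: hand a directory tree to a group and apply mode 775,
# mirroring the chgrp and chmod steps described in the post.
# $1 = directory (placeholder path), $2 = group name (vboxusers in the post).
open_to_group() {
    chgrp -R "$2" "$1" && chmod -R 775 "$1"
}

# Example call, assuming sufficient privileges and a real shared directory:
#   open_to_group /path/to/shared_folder vboxusers
```

On the Windows side, the share would then typically be mapped with something like net use x: \\vboxsvr\shared_folder_name, where the drive letter is an arbitrary choice.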

A restoration of order

12th July 2009

This weekend, I finally put my home computing displacement behind me. My laptop had become my main PC with a combination of external hard drives and an Octigen external hard drive enclosure keeping me motoring in laptop limbo. Having had no joy in the realm of PC building, I decided to go down the partially built route and order a bare-bones system from Novatech. That gave me a Foxconn case and motherboard loaded up with an AMD 7850 dual-core CPU and 2 GB of RAM. With the motherboard offering onboard sound and video capability, all that was needed was to add drives. I added no floppy drive but instead installed a SATA DVD Writer (not sure that it was a successful purchase, though, but that can be resolved at my leisure) and the hard drives from the old behemoth that had been serving me until its demise. A session of work on the kitchen table and some toing and froing ensued as I inched my way towards a working system.

Once I had set all the expected hard disks into place, Ubuntu was capable of being summoned to life with the only impediment being an insistence on scanning the 1 TB Western Digital and getting stuck along the way. Not having the patience, I skipped this at start-up and later unmounted the drive to let fsck do its thing while I got on with other tasks; the hold-up had been the presence of VirtualBox disk images on the drive. Speaking of VirtualBox, I needed to scale back the capabilities of Compiz so that things would work as they should. Otherwise, it was a matter of updating various directories with files that had appeared on external drives without making it into their usual storage areas. Windows would never have been so tolerant and, as if to prove the point, I needed to repair an XP installation in one of my virtual machines.
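Running fsck on an unmounted drive, as described above, might look something like the following sketch. The function names is_mounted and check_partition are hypothetical, /dev/sdX1 is a placeholder for the real partition, and the mount check relies on /proc/mounts, so it assumes Linux.

```shell
# Hypothetical helper: succeed if the device is listed as a mounted source
# in /proc/mounts (Linux only).
is_mounted() {
    awk -v dev="$1" '$1 == dev { found = 1 } END { exit !found }' /proc/mounts
}

# Hypothetical helper: run fsck on a partition only when it is not mounted.
check_partition() {
    if is_mounted "$1"; then
        echo "$1 is mounted; unmount it before running fsck" >&2
        return 1
    fi
    fsck "$1"
}

# Example (needs root; /dev/sdX1 is a placeholder):
#   sudo umount /dev/sdX1
#   then run check_partition /dev/sdX1 from a root shell
```

The guard is there because checking a mounted file system risks damaging it; the fsck call itself is left with its default behaviour.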

In the instructions that came with the new box, Novatech stated that time was a vital ingredient for a build and they weren’t wrong. The delivery arrived at 09:30 and I later got a shock when I saw the time to be 15:15! However, it was time well spent and I noticed the speed increase when putting ImageMagick through its paces with a Perl script. In time, I might get brave and be tempted to add more memory to get up to 4 GB; the motherboard may only have two slots, but that’s not such a problem with my planning on sticking with 32-bit Linux for a while to come. My brief brush with its 64-bit counterpart revealed some roughness that warded me off for a little while longer. For now, I’ll leave well alone and allow things to settle down again. Lessons for the future remain and I may even mull over them in another post…

Adding a new hard drive to Ubuntu

19th January 2009

This is a subject that I thought that I had discussed on this blog before but I can’t seem to find any reference to it now. I discussed the subject of adding hard drives to Windows machines a while back so that might explain why I was under the impression that I had. Of course, there’s always the possibility that I can’t find things on my own blog but I’ll go through the process anyway.

What has brought all of this about was the rate at which digital images were filling my hard disks. Even with some housekeeping, I could only foresee the collection growing so I went and ordered a 1TB Western Digital Caviar Green Power from Misco. City Link did the honours with the delivery and I can credit their customer service with regard to organising delivery without my needing to get to the depot to collect the thing; it was a refreshing experience that left me pleasantly surprised.

For most of the time, the hard drives that I have had generally got on with the job but there was one experience that has left me wary. Assured by good reviews, I went and got myself an IBM DeskStar; its reliability didn’t fill me with confidence and I will not touch their Hitachi equivalents because of it (IBM sold their hard drive business to Hitachi). This was a period in time when I had hardware faltering on me, with an Asus motherboard putting me off that brand around the same time as well (I now blame it for going through a succession of AMD Athlon CPUs). The result is that I have a tendency to go for brands that I can trust from personal experience and Western Digital falls into this category (as does Gigabyte for motherboards), hence my going for a WD this time around. That’s not to say that other hard drive makers wouldn’t satisfy my needs since I have had no problems with disks from Maxtor or Samsung but I’ll stick with those makers that I know until they let me down, something that I hope never happens.

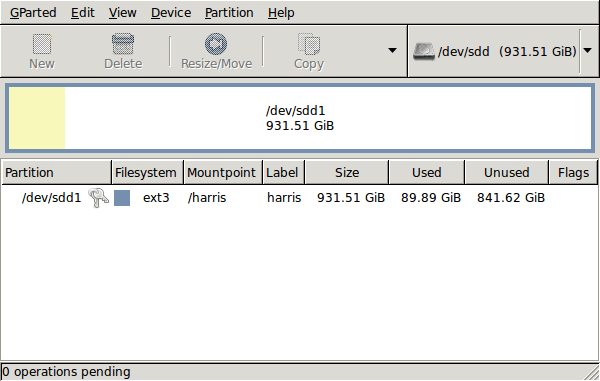

GParted running on Ubuntu

Anyway, let’s get back to installing the hard drive. The physical side of the business was the usual shuffle within the PC to add the SATA drive before starting up Ubuntu. From there, it was a matter of firing up GParted (System -> Administration -> Partition Editor on the menus if you already have it installed). The next step was to find the new empty drive and create a partition table on it. At this point, I selected msdos from the menu before proceeding to set up a single ext3 partition on the drive. You need to select Edit -> Apply All Operations from the menus to set things in motion before sitting back and waiting for GParted to do its thing.

After the GParted activities, the next task is to set up automounting for the drive so that it is available every time that Ubuntu starts up. The first thing to be done is to create the folder that will be the mount point for your new drive, /newdrive in this example. The next involves editing /etc/fstab with superuser access to add a line like the following with the correct UUID for your situation:

UUID=32cf775f-9d3d-4c66-b943-bad96049da53 /newdrive ext3 defaults,noatime,errors=remount-ro

You can also add a comment like “# /dev/sdd1” above that so that you know what’s what in the future. To get the actual UUID that you need to add to fstab, issue a command like one of those below, changing /dev/sdd1 to what is right for you:

sudo vol_id /dev/sdd1 | grep "UUID=" # Older Ubuntu versions

sudo blkid /dev/sdd1 | grep "UUID=" # Newer Ubuntu versions

This is the sort of thing that you get back from vol_id and the part beyond the “=” is what you need (blkid reports the same value in its UUID="..." field):

ID_FS_UUID=32cf775f-9d3d-4c66-b943-bad96049da53
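The UUID lookup and the fstab line can also be stitched together in a short sketch. The helper names fstab_entry and partition_uuid are hypothetical, /dev/sdd1 and /newdrive are the example values from above, and nothing here writes to /etc/fstab; the idea is only to print a line that you can check and paste in yourself. It uses blkid’s -s and -o value options to print the bare UUID, which avoids the need for grep.

```shell
# Hypothetical helper: print an fstab entry for an ext3 partition using the
# mount options shown above. $1 = UUID, $2 = mount point.
fstab_entry() {
    printf 'UUID=%s %s ext3 defaults,noatime,errors=remount-ro\n' "$1" "$2"
}

# Hypothetical helper: print only the UUID of a partition (normally needs root).
partition_uuid() {
    blkid -s UUID -o value "$1"
}

# Example, with /dev/sdd1 and /newdrive standing in for your own values:
#   sudo mkdir -p /newdrive
#   fstab_entry "$(sudo blkid -s UUID -o value /dev/sdd1)" /newdrive
```

Printing the line rather than appending it leaves room to review it first, which seems wise given that a malformed fstab can stop a system from booting cleanly.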

Once all of this has been done, a reboot is in order and you then need to set up folder permissions as required before you can use the drive. This part gets me firing up Nautilus using gksu and adding myself to the user group in the Permissions tab of the Properties dialogue for the mount point (/newdrive, for example). After that, I issued something akin to the following command to set global permissions:

chmod 775 /newdrive

With that, I had completed what I needed to do to get the WD drive going under Ubuntu. After that IBM DeskStar experience, the new drive remains on probation but moving some non-essential things on there has allowed me to free some space elsewhere and carry out a reorganisation. Further consolidation will follow but I hope that the new 931.51 GiB (binary gigabytes of 1024*1024*1024 bytes rather than the decimal gigabytes of 1,000,000,000 bytes preferred by hard disk manufacturers) will keep me going for a good while before I need to add extra space again.
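The gap between the two kinds of gigabyte is simple arithmetic, sketched below with a hypothetical bytes_to_gib helper. The first figure is a nominal decimal terabyte; the second is a byte count often reported for 1 TB drives and is purely an assumption here, so check your own drive (sudo blockdev --getsize64 on the device should report it).

```shell
# Hypothetical helper: convert a byte count to binary gigabytes (GiB),
# rounded to two decimal places.
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

bytes_to_gib 1000000000000   # nominal decimal terabyte: prints 931.32
bytes_to_gib 1000204886016   # assumed actual capacity: prints 931.51
```

The second result matches the figure quoted above, which suggests that the drive holds slightly more than a round 10^12 bytes.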

Whither Fedora?

10th January 2009

There is a reason why things have got a little quieter on this blog: my main inspiration for many posts that make their way on here, Ubuntu, is just working away without much complaint. I have to say that BBC iPlayer isn’t working so well for me at the moment so I need to take a look at my setup. Otherwise, everything is continuing quietly. In some respects, that’s no bad thing and allows me to spend my time doing other things like engaging in hill walking, photography and other such things. I suppose that the calm is also a reflection of the fact that Ubuntu has matured but there is a sense that some changes may be on the horizon. For one thing, there are the opinions of a certain Mark Shuttleworth but the competition is progressing too.

That latter point brings me to Linux Format’s recently published verdict that Fedora has overtaken Ubuntu. I do have a machine with Fedora on there and it performs what I ask of it without any trouble. However, I have never been on it trying all of the sorts of things that I ask of Ubuntu so my impressions are not in-depth ones. Going deeper into the subject mightn’t be such a bad use of a few hours. What I am not planning to do is convert my main Ubuntu machine to Fedora. I moved from Windows because of constant upheavals and I have no intention to bring those upon me without good reason and that’s just not there at the moment.

Speaking of upheavals, one thought that is entering my mind is that of upgrading that main machine. Its last rebuild was over three years ago and computer technology has moved on a bit since then with dual and quad core CPUs from Intel and AMD coming into the fray. Of course, the cost of all of this needs to be considered too and that is never more true than in these troubled economic times. If you had asked me about the prospect of a system upgrade a few weeks ago, I would have ruled it out of hand. What has got me wondering is my continued use of virtualisation and the resources that it needs. I am getting mad notions like the idea of running more than one VM at once and I do need to admit that it has its uses, even if it puts CPUs and memory through their paces. Another attractive idea would be getting a new and bigger screen, particularly with what you can get for around £100 these days. However, my 17″ Iiyama is doing very well so this is one for the wish list more than anything else. None of the changes that I have described are imminent but I have noticed how fast I am filling disks up with digital images so an expansion of hard disk capacity has come much higher up the to do list.

If I ever get to doing a full system rebuild with a new CPU, memory and motherboard (I am not so sure about graphics since I am no gamer), the idea of moving into the world of 64-bit computing comes about. The maximum amount of memory usable by 32-bit software is 4 GB so 64-bit is a must if I decide to go beyond this limit. That all sounds very fine but for the possibility of problems arising with support for legacy hardware. It sounds like another bridge to be assessed before its crossing, even if two upheavals can be made into one.

Aside from system breakages, the sort of hardware and software changes over which I have been musing here are optional and can be done in my own time. That’s probably just as well for a very good reason that I mentioned earlier. Being careful with money becomes more important at times like these and it’s good that free software not only offers freedom of choice and usage but also a way to leave the closed commercial software acquisition treadmill with all of its cost implications, leaving money for much more important things.

The peril of /tmp

19th July 2008

By default, I think that Windows plants its temporary files in c:\windows\temp. In Linux, or in Ubuntu at least, the equivalent area is /tmp. However, not realising that /tmp is emptied when you shut down and start your PC caused the silly blunder that I made today. I was doing a spot of reorganisation on my spare PC when I dumped some files in /tmp from a hard drive that I had added. I was reformatting the drive as ext3 following its NTFS former life. As part of this, I was editing fstab to automount the thing and a system restart ensued. I ended up losing whatever I put into /tmp, a very silly blunder. Luckily, I had the good sense not to put anything critical in there so nothing of consequence has been lost. Nevertheless, a lesson has been learnt: Windows allows its temporary area to pick up all kinds of clutter until you clear it while Linux clears the thing regularly. It’s amazing how Windows thinking can cause a howler when you have a lapse of concentration using a *NIX operating system, even for someone who uses the latter every day.

Windows Home Server: an interesting proposition?

29th January 2008

If I was still running Windows as my main OS, the idea of storing my files on a separate computer acting as a server would appeal to me. After all, I very quickly developed the habit of partitioning my hard disk so that my data files were separated from the rough and tumble lives of operating system and software files. Later, I took it further by placing system files and data files on separate hard drives, an arrangement that smoothed my move to Linux. Separation of computers would further secure things and that’s why Windows Home Server caught my eye when it was released. The recent spate of glitches with the thing might have changed my mind but my move to Ubuntu makes that irrelevant now. In any event, I suppose that I could have gone with Network-Attached Storage or an external hard drive. I do possess the latter, and a backup is being stored on it as I write this, and the former still remains an option but for the fact that I am happy with how things stand. In any event, the conventional networking model would be yet another potential choice. I was going to say more about Windows Home Server but I think I’ll leave that to others so here’s a library of links for your perusal.

Windows Home Server

Windows Home Server Blog

The irritation of a 4 GB file size limitation

20th November 2007

I recently got myself a 500GB Western Digital My Book, an external hard drive in other words. Bizarrely, the thing is formatted using the FAT32 file system. I appreciate that backward compatibility for Windows 9x might seem desirable but using NTFS would be more understandable, particularly given that the last of the 9x line, Windows ME, is now eight years old (there cannot be anybody who still uses that, can there?). The result is that I got core dump messages from cp commands issued from the terminal on my Ubuntu system to copy files of size in excess of 4GB last night. It surprised me at first but it now seems to be a FAT32 limitation. The idea of formatting the drive as NTFS did occur to me but GParted would not do that, at least not with my current configuration. The ext3 file system is an option but I have a spare PC with Windows 2000 so that will be a step too far for now, unless I take the plunge and bring that into the Linux universe too.
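One way to avoid the surprise is to look for oversized files before copying anything to a FAT32 drive. The sketch below is an illustration rather than anything from the original setup: files_over and too_big_for_fat32 are hypothetical names, and the limit used is the FAT32 maximum file size of 4 GiB less one byte.

```shell
FAT32_MAX_BYTES=4294967295   # 4 GiB minus one byte

# Hypothetical helper: list regular files under a directory ($1) that are
# strictly larger than a given number of bytes ($2).
files_over() {
    find "$1" -type f -size +"$2"c
}

# Hypothetical helper: list files that would not fit on a FAT32 volume.
too_big_for_fat32() {
    files_over "$1" "$FAT32_MAX_BYTES"
}

# Example, with a placeholder path:
#   too_big_for_fat32 /path/to/files_to_copy
```

Anything that this lists would need splitting, compressing or a different destination file system.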

Other than the 4GB irritation, the new drive works well and was picked up and supported by Ubuntu without any hassle beyond getting it out of the box, finding a place for it on my desk and plugging in a few cables. While needing judiciousness about file sizes, it played an important role while I converted a 320 GB internal WD drive from NTFS to ext3 and may yet be vital if my Windows 2000 box gets a migration to Linux. In the interim, 500 GB is a lot of space and having an external drive that size is a bonus these days. That is especially the case when you consider that the 1 terabyte threshold is on the verge of getting crossed. It certainly makes DVDs, flash drives and other multi-gigabyte media less impressive than they otherwise might appear.

Want attention? Just mention Ubuntu…

5th November 2007

According to Google Analytics, visitor numbers for this blog hit their highest level one day last week. I suspect that it might have been down to a mention of two of my posts on tuxmachines.org. Thanks guys. Feedburner activity has been strong too.

That brings me to another thought: the web seems a good place for Ubuntu users to find solutions to problems that they might encounter. I certainly found recipes that resolved issues that I was having: scanner set up and using another hard drive to host my home directory, all very useful stuff. When I last played with Linux to the same extent that I am now doing, the web was still a resource but it wouldn’t have been as helpful as I found it recently. I suppose that there are people like me posting tips and tricks for computing on blogs and that makes them easier to find. That’s no bad thing and I hope that it continues. Saying that, I might still get my mitts on an Ubuntu book yet…