Upgrading to Fedora 13

1st June 2010

After taking Fedora’s latest release for a spin in a VirtualBox virtual machine on my main home PC, I decided to upgrade my Fedora box. First, I needed to battle imperfect Internet speeds to download an ISO image that I could burn to a DVD. Once that was done, I booted the Fedora machine from the DVD and chose the upgrade option to avoid bringing a major upheaval upon myself. You need the full DVD for this because the Live ISO images and CDs offer only a fresh installation, not an upgrade.

All was graphical easiness and I got back into Fedora again without a hitch. Along with other bits and pieces, MySQL, PHP and Apache are working as before. If there was any glitch, it was with NetBeans 6.8 because the upgrade from the previous version didn’t seem to be as complete as hoped. However, it was nothing that an update of the open source variant of Java and of NetBeans itself couldn’t resolve. There may have been some untidy poking around before the solution was found but all has been well since then.

Solving an upgrade hitch en route to Ubuntu 10.04

4th May 2010

I waited until after a weekend in the Isle of Man before upgrading my main home PC to Ubuntu 10.04. Before the weekend away, I had been updating a 10.04 installation on an old spare PC and that worked fine, so the prospects were good for a similar changeover on the main box. That may have been so, but breaking a computer is hardly the perfect complement to a getaway.

So as to keep disruption to a minimum, I opted for an in-situ upgrade. The download was left to complete in its own good time and I returned to answer installation prompts asking whether I wished to retain old log files for the likes of Apache. When the system asked for a reboot at the end of the sequence of package downloading, installation and removal, I was ready to let it do the needful.

However, I met with a hitch when the machine restarted: it couldn’t find the root drive. Live CDs were pressed into service to shed light on what had happened. First up was an old disc for 9.10, followed by one for 10.04 Beta 1. Comparing the two revealed a difference that proved to be the cause of what I was seeing. 10.04 uses /dev/hd*# nomenclature (/dev/hda1 is an example) for everything, including software RAID arrays (“fakeraid”). 9.10 used the /dev/mapper/sil_**************# convention for two of my drives, and I get the impression that these names vary according to the chipset in use.

The one thing that the upgrade process missed was the changeover from /dev/mapper/sil_**************# to /dev/hd*# in the appropriate places in /boot/grub/menu.lst; look for the lines starting with the word kernel. When I made the change that the upgrade had missed, I was greeted by a screen showing the progress of checks on one of the system’s disks. That took a while, but a login screen followed and I had my desktop much as before. The only other thing that I had to do was run gconf-editor from the terminal to move the title bar buttons back to the right, where I am accustomed to having them. Since then, I have been working away as before.
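Where several kernel lines need the same change, the edit can be scripted. What follows is a minimal sketch, assuming GRUB legacy's /boot/grub/menu.lst and GNU or BSD sed; the function name fix_kernel_root and the device names in the example are hypothetical placeholders rather than real drive names. It only touches lines that begin with kernel and leaves a .bak copy of the original file.

```shell
# Hypothetical helper: replace a device name on GRUB legacy "kernel" lines only.
# Usage: fix_kernel_root FILE OLD_DEVICE NEW_DEVICE
fix_kernel_root() {
  local file="$1" old="$2" new="$3"
  # -i.bak edits in place but keeps the original as FILE.bak.
  # The address /^[[:space:]]*kernel/ limits the substitution to kernel lines,
  # and "|" is the delimiter because device names contain "/".
  sed -i.bak "/^[[:space:]]*kernel/ s|${old}|${new}|g" "$file"
}

# Example (run as root; both device names are placeholders):
# fix_kernel_root /boot/grub/menu.lst /dev/mapper/sil_example1 /dev/hda1
```

On Debian and Ubuntu, GRUB legacy's update-grub rebuilds the kernel lines from the commented # kopt= line when it runs (after a kernel update, for instance), so it may be worth making the same change there.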

Some may decry the lack of change (ImageMagick and UFRaw could do with working together much faster, though) but I’m not complaining; the rough ride with 9.10 taught me to appreciate that. Nevertheless, I am left wondering how many people are getting tripped up by what I encountered, even if the change means that Palimpsest (what Ubuntu calls Disk Utility) looks much tidier than it did. Could the same thing be affecting /etc/fstab too? I don’t know the answer because I changed all hard disk drive references there to UUIDs a while ago, but it’s another place to look if the GRUB change isn’t fixing things for you. If memory serves, I saw /dev/mapper/sil_**************# drive names in there too.
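To check /etc/fstab for leftover names of that kind, a quick search is enough. This minimal sketch assumes a POSIX shell and grep; find_mapper_refs is a hypothetical name, and the sil_ prefix matches the names seen here, so other chipsets may need a different pattern. To switch an entry to a UUID, sudo blkid lists the UUID of each partition, which can then go in the first field of the fstab line as UUID=...

```shell
# Hypothetical helper: list non-comment lines in an fstab-style file that still
# refer to /dev/mapper/sil_ device names. Defaults to /etc/fstab.
find_mapper_refs() {
  # ^[^#]* skips lines where the name only appears after a comment marker;
  # -n prefixes each match with its line number.
  grep -n '^[^#]*/dev/mapper/sil_' "${1:-/etc/fstab}"
}

# Example: find_mapper_refs
```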

Relocating the Apache web server document root directory in Fedora 12

9th April 2010

So as not to deface anything that is available on the web, I tend to set up an offline Apache server on a home PC so that I can tinker away from the eyes of the unsuspecting public. Though Ubuntu is my mainstay for home computing, I do have a PC with Fedora installed, and for a few months I have been trying without success to get an Apache instance to start automatically on it. While I can start it by running the following command as root, I’d rather not have more manual steps than are necessary.

httpd -k start

The command used by the system at startup is different and, even when run manually as root, it failed with messages saying that it couldn’t find the directory where the web server files are stored. Here it is:

service httpd start

The default document root location on every Linux distribution that I have seen is under /var/www. There’s nothing wrong with that, but it isn’t a safe place to leave things if a re-installation is ever needed. Having once had to wipe /var during an installation, even though it was on a separate disk or partition, I don’t regard it as particularly persistent. In contrast, /home can be safeguarded by putting it on another disk or in a dedicated partition, and it can be retained even when you change the distro that you’re using. Thus, I have got into the habit of keeping the web server document root in my home area, and that is where I have been seeing the problem.
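Moving the document root out of /var/www also means pointing Apache at the new location. This is a minimal sketch, assuming Fedora's main configuration file at /etc/httpd/conf/httpd.conf and a sed that supports -E and -i (as GNU sed does); set_docroot is a hypothetical name. Only the DocumentRoot directive is changed, so the matching Directory section for the old path still needs updating by hand.

```shell
# Hypothetical helper: point an Apache configuration file's DocumentRoot at a
# new directory, keeping a .bak copy of the original file.
set_docroot() {
  local conf="$1" newroot="$2"
  # Matches an uncommented (optionally indented) DocumentRoot directive and
  # replaces whatever follows it with the quoted new path.
  sed -i.bak -E "s|^([[:space:]]*DocumentRoot[[:space:]]+).*|\1\"${newroot}\"|" "$conf"
}

# Example (run as root, then restart Apache):
# set_docroot /etc/httpd/conf/httpd.conf /home/www
```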

Suspecting an access problem, I tried using chmod and chgrp but to no avail. The remedy turned out to lie in reassigning the security contexts used by SELinux. In Fedora, Apache will not serve content with the user_home_t context that is usually associated with home directories; it needs httpd_sys_content_t instead. To find out which contexts are associated with particular folders, issue the following command:

ls -Z

The final solution was to create a user account, called www in my case, whose home directory hosts the web server document root. Then, I executed the following command as root to get things going:

chcon -R -h -t httpd_sys_content_t /home/www

It seems that even the root of the home directory has to have an appropriate security context (/home has home_root_t, so that might do the needful too). Without that, nothing will work, even if all is well at the next level down. The switches for the chcon command translate as follows:

-R : recursive; applies changes to all files and folders within a directory.

-h : affects symbolic links themselves rather than the files to which they refer.

-t : alters context type.
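Before and after running chcon, it helps to see which files still carry the wrong type. The following minimal sketch assumes GNU coreutils (whose stat supports %C for the SELinux context) on a system with SELinux enabled; context_type and check_httpd_context are hypothetical names.

```shell
# Hypothetical helper: print the type field (third colon-separated field) of an
# SELinux context such as "unconfined_u:object_r:user_home_t:s0".
context_type() {
  printf '%s\n' "$1" | cut -d: -f3
}

# Hypothetical helper: list paths under a directory (including the directory
# itself) whose SELinux type is not httpd_sys_content_t. Uses GNU stat's %C
# format; paths whose context cannot be read are skipped.
check_httpd_context() {
  find "$1" | while IFS= read -r path; do
    ctx=$(stat -c %C "$path" 2>/dev/null) || continue
    if [ "$(context_type "$ctx")" != "httpd_sys_content_t" ]; then
      printf '%s\t%s\n' "$path" "$ctx"
    fi
  done
}

# Example: check_httpd_context /home/www
```

Bear in mind that chcon changes are not recorded in the SELinux policy, so a filesystem relabel or restorecon can put the old context back. Recording the rule with semanage fcontext -a -t httpd_sys_content_t '/home/www(/.*)?' and then applying it with restorecon -R /home/www should make it persist.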

It took a while for all of this stuff about SELinux security contexts to percolate through to the point where I was able to solve the problem. A spot of further inspiration was needed too and even guided my search for the information that I needed. It’s well worth trying Linux Home Networking if you need more information. There are references to an earlier release of Fedora but the content still applies to later versions of Fedora right up to the current release if my experience is typical.

Service management in Ubuntu 9.10

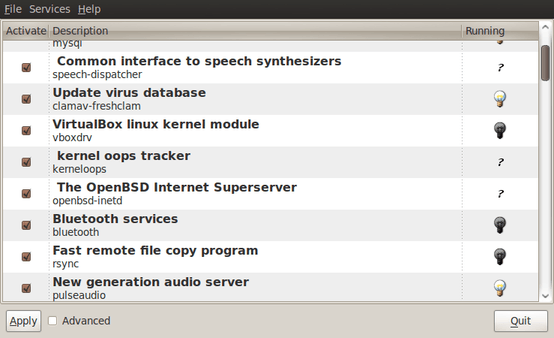

29th October 2009

The final release of Ubuntu 9.10 is due out today but there is a minor item that seems to have disappeared from the System > Administration menu, in the release candidate at least: Services. While a reader may put me right, I can’t find it anywhere else. Luckily, there is a solution in the form of the GNOME Boot-Up Manager, or BUM as it is sometimes known. It is always handy to have a graphical means of restarting services and BUM suffices for the purpose. Restarting Apache from the command line is all well and good but the GUI approach has its place too.
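For comparison, the command-line route can be wrapped up as follows. This is a minimal sketch, assuming the service wrapper is available (falling back to the script in /etc/init.d otherwise) and that you have sudo rights; restart_service and the DRY_RUN variable are hypothetical.

```shell
# Hypothetical helper: restart a service from the command line, using the
# "service" wrapper where it exists and the init script otherwise.
# Set DRY_RUN to any non-empty value to print the command instead of running it.
restart_service() {
  local name="$1"
  if command -v service >/dev/null 2>&1; then
    set -- service "$name" restart
  else
    set -- "/etc/init.d/$name" restart
  fi
  if [ -n "${DRY_RUN:-}" ]; then
    echo "sudo $*"
  else
    sudo "$@"
  fi
}

# Example: restart_service apache2
```

Running DRY_RUN=1 restart_service apache2 shows the command that would be used without touching the running server.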

Ubuntu upgrades: do a clean installation or use Update Manager?

9th April 2009

Part of some recent “fooling” brought on by the investigation of what turned out to be a duff DVD writer was a fresh installation of Ubuntu 8.10 on my main home PC. It might have brought on a certain amount of upheaval, but nowhere near as much as the same sort of thing on a Windows system. A few hours was all that was needed, but it raised the question of whether it is better to upgrade every time a new Ubuntu release is unleashed on the world or to go for a complete virgin installation instead. With Ubuntu 9.04 in the offing, that question takes on a more immediate significance than it otherwise might.

Various tricks make the whole reinstallation idea more palatable. For instance, many years of Windows usage have taught me the benefits of separating system and user files. The result is that my home directory lives on a different disk from my operating system files. Add to that the experience of being able to reuse that home drive across different Linux distros, and even swapping from one distro to another becomes feasible. From various changes to my secondary machine, I can vouch that this works for Ubuntu, Fedora and Debian; the last of these is what currently powers that PC. You might have to use superuser powers to sort out ownership and access issues, but the portability is certainly there and it applies to anything kept on other disks too.
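File ownership is stored as numeric user and group IDs, so a home directory carried over from another distro can appear to belong to someone else if the same user name has a different ID on the new system. The following minimal sketch, with the hypothetical name reclaim_home, resets ownership recursively; it is meant to be run as root from a root shell, since sudo cannot call a shell function directly.

```shell
# Hypothetical helper: give a user ownership of everything in a directory that
# has been carried over from another installation. Run it as root.
# Usage: reclaim_home DIR [USER]   (USER defaults to the current user)
reclaim_home() {
  local dir="$1" user="${2:-$(id -un)}"
  # The group is set to the user's primary group on this system
  chown -R "$user:$(id -gn "$user")" "$dir"
}

# Example (as root): reclaim_home /home/jdoe jdoe
```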

Naturally, there’s always the possibility of losing programs that you had installed, but losing the clutter can be liberating too. However, assembling a script made up of one or more apt-get install commands can allow you to get many things back at a stroke. For example, I have a test web server (Apache/MySQL/PHP/Perl) set up, so this would be how I’d get everything back in place before beginning further configuration. It would be no bad idea to back up your list of software sources too; I have yet to add all of the ones that I have been using back into Synaptic. Then there are closed source packages such as VirtualBox (yes, I know that there is an open source edition) and Adobe Reader. After reinstating the former, all my virtual machines were available for me to use again without further ado. Restoring the latter allowed me to grab version 9.1 (probably more secure anyway), and it now inveigles itself into Firefox too; thanks to this Windows-like ability to show a PDF in a browser tab, I need to go through the download shuffle before seeing the contents of a PDF much less often, though not never. Moving from software to hardware for a moment, it looks as if any bespoke actions, such as my activating an Epson Perfection 4490 Photo scanner, need to be repeated, but that was all that I needed to do. Getting things back into order is not so bad, but you need to allow a modicum of time for it.
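A script of the kind described above might look like this minimal sketch. The package names are assumptions based on Ubuntu releases of this period (Apache, MySQL, PHP 5 and mod_perl for a test web server) and may differ on other releases; restore_packages and DRY_RUN are hypothetical names.

```shell
# Hypothetical package list for a test web server (Apache/MySQL/PHP/Perl),
# using Ubuntu package names of this period; adjust for other releases.
PACKAGES="apache2 mysql-server php5 libapache2-mod-php5 php5-mysql libapache2-mod-perl2"

restore_packages() {
  if [ -n "${DRY_RUN:-}" ]; then
    # Print the command instead of running it
    echo "sudo apt-get install $PACKAGES"
  else
    # $PACKAGES is left unquoted on purpose so each name becomes one argument
    sudo apt-get install $PACKAGES
  fi
}

# Example: restore_packages
```

An alternative that captures everything at once is to save the output of dpkg --get-selections on the old system and feed it to dpkg --set-selections on the new one, followed by apt-get dselect-upgrade. The software sources themselves live in /etc/apt/sources.list and the files under /etc/apt/sources.list.d/, so copying those is one way of backing them up.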

What I have discussed so far might be categorised as the common or garden aspects of a clean installation, but I have seen some behaviours that make me wonder whether the usual Ubuntu upgrade path is sufficiently thorough in its refresh of your system. The counterpoint to all of this is that I may not have been looking for some of these things before now. That may apply to my noticing that DSLR support seems to be better, with my Canon and Pentax cameras both being picked up and mounted as soon as they are connected to a PC, provided that the cameras themselves are switched on. Another surprise that may be new is that BBC iPlayer’s Listen Again works without further effort from the user, a very useful development. It very clearly wasn’t that way before I took the more invasive route. My previous tweaking might have prevented the in-situ upgrade from doing its thing, but I do see the point of not upsetting people’s systems with an overly aggressive update process, even if it means that some advances do not make themselves known.

So what’s my answer regarding which way to go once Ubuntu Jaunty Jackalope appears? For the sake of avoiding initial disruption, I’d be inclined to go down the Update Manager route first while reserving the right to do a fresh installation later on. All in all, my gut feeling is that the jury is still out on this one.

Work locally, update remotely

4th December 2008

Here’s a trick that might have its uses: using a local WordPress instance to update your online blog. (Yes, there are plenty of applications that promise to edit your online blog, but these need file permissions on the likes of xmlrpc.php to be opened up.) Along with the right database access credentials and the ability to connect to the online database remotely, adding the following two lines to wp-config.php does the trick:

define('WP_SITEURL', 'http://localhost/blog');

define('WP_HOME', 'http://localhost/blog');

These two constants override the site addresses stored in the database and allow you to update the online database from your own PC using WordPress running on a local web server (Apache or otherwise). One thing to remember here is that the online and offline directory structures need to match. For example, if your online WordPress files are in a blog directory in the root of the online web server file system (typically htdocs for Linux), then they need to be in the same directory in the root of the offline server too. Otherwise, things could get confusing and perhaps messy. Another thing to consider is that you are modifying your online blog, so the usual rules about care and attention apply, particularly with respect to using the same version of WordPress both locally and remotely. This is especially a concern if, like me, you run development versions of WordPress to see if there are any upheavals ahead, like the overhaul that is coming with WordPress 2.7.

An alternative use of this same trick is to keep a local copy of your online database in case of any problems, while using a local WordPress instance to work with it. I used to have to edit the database backup directly (on my main Ubuntu system), first with GEdit and then using a sed command like the following:

sed -e 's/www\.onlinewebsite\.com/localhost/g' backup.sql > backup_l.sql

The -e switch passes the script that follows it to sed; here that script is a substitution (s/old/new/g) applied to every match on each line, with the output redirected to a new file. The single quotes stop the shell from stripping the backslashes, so the escaped dots match only literal dots. This is slicker than the interactive GEdit route but has been made redundant by defining constants for a local WordPress installation as described above.
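For anyone who still prefers editing a copy of the dump, the substitution can be wrapped so that the host name need not be escaped by hand. This is a minimal sketch assuming a POSIX shell and sed; localise_dump is a hypothetical name.

```shell
# Hypothetical helper: copy a database dump, replacing a host name with
# localhost. The host's dots are escaped first so that they match literally.
# Usage: localise_dump HOST INPUT OUTPUT
localise_dump() {
  local host="$1" src="$2" dest="$3" pattern
  # Turn "www.example.com" into "www\.example\.com"
  pattern=$(printf '%s' "$host" | sed 's/\./\\./g')
  sed -e "s/${pattern}/localhost/g" "$src" > "$dest"
}

# Example: localise_dump www.onlinewebsite.com backup.sql backup_l.sql
```

One caution: WordPress stores some settings as serialized PHP strings that record their own byte lengths, so replacing a host name with one of a different length can corrupt those entries.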

Hosting more than one WordPress blog on your website

12th March 2008

An idea recently popped into my head for my hillwalking website: collecting a listing of bus services of use and interest to hillwalkers. Being rural, these services may not get the publicity that they deserve. In addition, they are generally subsidised so any increase in their patronage can only help maintain their survival.

Currently, the list lives on a page in the blog, but another thought has come to mind: using WordPress to host the list as a series of log entries, a sort of blog if you like. Effectively, that would involve having two blogs on the same website, and it can be done. One way is to set up two instances of WordPress, and they could work from the same database; this is possible because each installation can use a different table prefix, so there are no collisions. There’s nothing to stop you having two databases, but your hosting provider may charge extra for this. This setup will work but there is a caveat: you now have two blogs to maintain and, with regular WordPress releases, that means an extra overhead. Apart from that, it’s a workable approach.

Another option is to use WordPress MU. That would cut down on the maintenance but there are costs here too. Its need for virtual hosts is a big one. If my experience is any guide, you probably need a dedicated server to go down this route, and they aren’t that cheap. I needed to do a spot of Apache configuration and some editing of my hosts file to get my own installation off the ground; I don’t reckon that would be an option with shared hosting. Once I had sorted out the hosts with a something.something.else address, setup was quick and easy.
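The hosts file editing can be scripted so that repeated runs don't add duplicate lines. This minimal sketch assumes the usual hosts file layout (an address followed by one or more names) and awk; add_host_entry and blog1.mu.test are hypothetical names. The hosts file doesn't support wildcards, so each blog's host name in a subdomain setup needs a line of its own.

```shell
# Hypothetical helper: append "IP<TAB>NAME" to a hosts file unless an
# uncommented line already lists NAME. Usage: add_host_entry FILE IP NAME
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 if NAME appears as a host name field on a non-comment line
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s\t%s\n' "$ip" "$name" >> "$file"
}

# Example (as root): add_host_entry /etc/hosts 127.0.0.1 blog1.mu.test
```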

Apart from a tab named Site Admin, the administration dashboard isn’t all that different from a standard WordPress 2.3.x arrangement. In the extra tab, you can create blogs and users, control blogs and themes, and upgrade everything in a single step. Themes and plugins largely work as usual from an administration point of view. With plugins, you just have to try them and see what happens; one adding FCKEditor threw an error while the editor window was loading but otherwise worked OK. I had no trouble at all with themes, so all looks very well on that front.

Importing and editing posts worked as usual but for two perhaps irritating behaviours: HTML tags are, not unreasonably, removed from titles, and inline style and class declarations are removed from tags in the body of a blog entry. Both could be resolved by post-processing in the blog’s theme, but the Sniplets plugin offers a better way out for the latter and I have been putting it to good use.

In summary, WordPress MU worked well and looks a very good option for multi-blog sites. However, the need for a dedicated server and the quirks that I have seen when it comes to handling post contents keep me away from using it for production blogs for now. Even so, I’ll be retaining it as a test system. As regards the country bus log, I think that I’ll be sticking with the blog page for the moment.

Setting up a test web server on Ubuntu

1st November 2007

Installing all of the bits and pieces is painless enough so long as you know what’s what; Synaptic makes it so. Interestingly, Ubuntu’s default installation is a lightweight affair, with any additional components being downloaded from the web as needed. The whole process is very well integrated and doesn’t make you sweat every time you want to install additional software. In fact, Synaptic resolves any dependencies for you so that those packages can be put in place too; it lists them, you select them and Synaptic does the rest.

Returning to the job in hand, my shopping list included Apache, Perl, PHP and MySQL, the usual suspects in other words. Perl was already there as it is on many UNIX systems so installing the appropriate Apache module was all that was needed. PHP needed the base installation as well as the additional Apache module. MySQL needed the full treatment too, though its being split up into different pieces confounded things a little for my tired mind. Then, there were the MySQL modules for PHP to be set in place too.

The addition of Apache preceded all of these, but I have left it until now to describe its configuration, which took longer than for the others; the installation itself was as easy as it was for the rest. However, what surprised me were the differences in its configuration setup compared with Windows. Same software, different operating system, and they have set up the configuration files differently. I have no idea why they did this and it makes no sense at all to me; we are only talking about text files, after all. The first difference is that the main configuration file is called apache2.conf in Ubuntu rather than httpd.conf as in Windows. Like its Windows counterpart, Ubuntu’s Apache does use subsidiary configuration files. However, there is an additional layer of configurability courtesy of a standard feature of UNIX operating systems: symbolic links. Rather than having a single folder with all the configuration files stored therein, there are two pairs of folders, one pair for module configuration and another for site settings: mods-available/mods-enabled and sites-available/sites-enabled, respectively. In each pair, one folder holds all of the files and the other contains symbolic links to them. It is the presence of a symbolic link for a given configuration file in the latter that activates it. I learned all this when trying to get mod_rewrite going and when changing the web server folder from the default to somewhere less susceptible to wrecking during a re-installation or, heaven forbid, a destructive system crash. It’s unusual but it does work, even if it takes that little bit longer to get things sorted out when you first meet up with it.
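On Ubuntu, the a2enmod and a2ensite commands (with a2dismod and a2dissite to reverse them) create and remove these links for you; sudo a2enmod rewrite, followed by reloading Apache, is how mod_rewrite gets switched on. To show the mechanism itself, here is a minimal sketch that mimics them in plain shell; enable_conf and disable_conf are hypothetical names, and real module configurations can be more involved than a .load and .conf pair.

```shell
# Hypothetical helper mimicking what a2ensite/a2enmod do: create a relative
# symbolic link in KIND-enabled for a file in KIND-available.
# Usage: enable_conf BASE KIND NAME   (KIND is "mods" or "sites")
enable_conf() {
  local base="$1" kind="$2" name="$3" f found=0
  # Sites are single files; modules usually come as NAME.load plus NAME.conf
  for f in "$base/$kind-available/$name" \
           "$base/$kind-available/$name.load" \
           "$base/$kind-available/$name.conf"; do
    [ -f "$f" ] || continue
    ln -sf "../$kind-available/${f##*/}" "$base/$kind-enabled/${f##*/}"
    found=1
  done
  # Return non-zero if nothing matched NAME
  [ "$found" -eq 1 ]
}

# Hypothetical counterpart: remove the links again.
disable_conf() {
  local base="$1" kind="$2" name="$3"
  rm -f "$base/$kind-enabled/$name" "$base/$kind-enabled/$name.load" \
        "$base/$kind-enabled/$name.conf"
}

# Example (as root): enable_conf /etc/apache2 mods rewrite
```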

Apart from the Apache set up and finding the right things to install, getting a test web server up and running was a fairly uneventful process. All’s working well now and I’ll be taking things forward from here; making website Perl scripts compatible with their new world will be one of the next things that need to be done.

An unexpected side effect

29th June 2007

I recently posted about using mod_rewrite to block access to your images from all but the websites that you choose. Having done so, I discovered that my favicon had disappeared from Firefox’s address bar. As it turned out, it was easy to fix, and that is covered in another recent post.

More on mod_rewrite

25th June 2007

Today, I caught sight of an article on anti-plagiarism tools at The Blog Herald, and among the tricks was using mod_rewrite to stop people “borrowing” both your images and your bandwidth. The gist is that you set up one or more conditions that exclude websites from a rule forbidding access to images; if the website referencing an image is not one of those listed in the conditions, then it doesn’t get to display any of your images.

RewriteEngine On

RewriteCond %{HTTP_REFERER} !^http://(www\.)?awebsite\.com(/)?.*$ [NC]

RewriteRule .*\.(gif|jpe?g|png|bmp)$ - [F,NC]
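To see which requests the rules would refuse, their logic can be mirrored outside Apache. This minimal sketch uses grep -E, whose extended regular expressions behave the same as Apache's for this particular pattern (with the dot in the domain escaped); is_blocked is a hypothetical name and awebsite.com is the placeholder from the rules.

```shell
# Hypothetical helper: reproduce the decision made by the RewriteCond and
# RewriteRule lines for a given referer and request path.
# Returns 0 if the request would be forbidden, 1 if it would be served.
is_blocked() {
  local referer="$1" path="$2"
  # The rule only applies to image files; -i mirrors the [NC] flag
  printf '%s\n' "$path" | grep -Eqi '\.(gif|jpe?g|png|bmp)$' || return 1
  # The condition is negated: a referer from the allowed site is let through
  if printf '%s\n' "$referer" | grep -Eqi '^http://(www\.)?awebsite\.com(/)?.*$'; then
    return 1
  fi
  return 0
}

# Example: is_blocked "http://elsewhere.example/" "/images/photo.jpg" && echo forbidden
```

Two consequences show up when trying it. Requests with no referer at all, such as an image opened directly, are refused; a further RewriteCond %{HTTP_REFERER} !^$ line is commonly added to allow them. And a referer such as http://awebsite.com.example.org/ gets through, because (/)?.*$ allows anything after the domain; ending the pattern with (/.*)?$ instead closes that gap.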