More Linux Distributions

21st September 2012

If a certain Richard Stallman had his way, Linux would be called GNU/Linux because he wants GNU to have some of the credit, but we’re lazy creatures and we all call it Linux instead. What still amazes me is the number of Linux distributions that are out there. This list captures those that do not fit into other lists that you can find in the sidebar, so do look at the others as well.

Many fit into the desktop and server computing paradigms while a minority are very distinctive. It is easier to write about the latter than the former, though personal experiences do add to any narrative. It is tempting to think that everything has become static after more than thirty years, yet that may be foolish given the ongoing flux in the world of technology. Only change is ever a constant presence.

More in the Way of Privacy

The controversy about security agencies eavesdropping on internet communications has upset some and here are some distros offering anonymity and privacy. Of course, none of these should be used for unlawful purposes since there are those in less liberal countries who need invisibility to speak their minds.

It is harder and harder to create a Linux distro that is very different from the rest, but this one uses application virtualisation for added security. You can organise your software into different domains so that you work more securely when moving data between applications from different domains.

There is more than a hint of privacy-mindedness in this distro when you look long enough at what it offers. Cinnamon, MATE and Xfce desktop environments are part of the offer and there is added software for extra privacy and security.

This is an option for those who are worried about being tracked online. All internet connections are sent via the Tor network and it is run exclusively as a live distro from CD, DVD or USB stick drive too, so no trace is left on any PC. The basis is Debian and the distro’s name is an acronym: The Amnesiac Incognito Live System. For us living in a democratic country, the effort may seem excessive but that changes in other places where folk are not so fortunate. The use of Tor may not be perfect but it should help in combination with the use of different sessions for different tasks and encrypting any files. There even is an option to make the desktop appear like that of Windows XP for extra discreteness of use.

Most Linux distros that have enhanced security and anonymity as a feature are not installable on a PC, but that exactly is what’s unique about Whonix. It’s based on Debian but all internet connections go via the Tor network. The latter is called Whonix-Gateway with Whonix-Workstation being what you use to work on your system. It may sound like being overly careful but it has me intrigued.

Entertainment

In many ways, these are appliance distros for anyone who just wants an install-it-and-go approach to things. That works better with dedicated devices than with multipurpose machines, so that is one thing that needs to be kept in mind.

The idea behind this offering is what it offers console gamers. Legacy games and peripherals will work and there even is support for Raspberry Pi as well.

The main purpose of this distro is to offer a home for the KODI entertainment centre on PC and Raspberry Pi devices. It follows from the now defunct OpenELEC project, which ran into trouble when developers’ voices were not given a hearing.

The acronym stands for Open-Source Media Centre and there is KODI here too. Though the distro also is based on Debian, one is tempted to wonder why anyone would not just install that and install KODI on top of it. The answer possibly has something got to do with added user-friendliness for those who do not need to deal with such things.

Mandriva Offshoots

Mandrake once was a spin of Red Hat with a more user-friendly focus. In the days before the appearance of Ubuntu, it would have been a choice for those not wanting to overcome obstacles such as a level of hardware support that was much less than what we have today. Later, Mandrake became Mandriva following litigation and the acquisition of Conectiva in 2005. The organisation has declined since those heady days and it became defunct during 2015. Its legacy continues though in the form of two spin-off projects, so all the work of forebears has not been lost.

It was the uncertainty surrounding the future of Mandriva that originally caused this project to be started. Beginnings have been promising, so this is a one to watch, though you have to wonder if the now community-based OpenMandriva is stealing some of its limelight.

Of the pair that is listed here, it is OpenMandriva which is a continuation of the now-defunct Mandriva. Seeing how things progress for a project with user-friendliness at its heart will be of interest in these days when Debian, Ubuntu and Linux Mint are so pervasive. Even with those, there are KDE options, so there is a challenge in place.

Anything Russian may not be everyone’s choice given the state of world affairs at the time of writing, yet this still is an offshoot of Mandriva so it gets a mention in this list. Desktop environment options include KDE, XFCE and LXQt and there are various use cases covered by a range of solutions.

Others

Not every distro falls in the above categories, and some that you find here may surprise you. There are some better-known names like openSUSE that go their way.

Aside from the founder’s dislike of ISO disk images for whatever reason, this distro has its own eccentricities. For example, it is container-friendly, runs in memory as root and much more. This is branded as an experimental distro, and it is that in many ways.

This project creates respins of openSUSE for the sake of a more refined experience. For instance, there are live booting ISO images as well as inclusion of media codecs. There is plenty of choice too when it comes to desktop environments.

From what I have seen, this project seems to be supporting the same needs as Arch, albeit with all software needing to be compiled, so there’s more of a DIY approach. The wiki also comes in handy for those users.

Billing itself as a lean independent distribution focussing on QT and KDE, this is built from the ground up without any dependence on other distros. Some tools, like pacman, naturally come from elsewhere in this otherwise standalone offering.

Here is another distro apart from Ubuntu that has an African name, the Zulu for big chief this time around. It came to my notice among the pages of the now defunct Micro Mart magazine and uses MATE, XFCE, Enlightenment and KDE as its desktop environment choices.

SuSE Linux was one of the first Linux distros that I started to explore and I even had it loaded on my home PC as a secondary operating system for quite a while too before my attention went elsewhere. Only for a PC Plus cover-mounted CD, it never might have discovered it and it bested Red Hat, which was as prominent then, as Fedora is today. When SuSE fell into Novell’s hands, it became both openSUSE and SuSE Linux Enterprise Edition. The former is the community and the latter is what Novell, now itself an Attachmate Group company, offers to business customers. As it happens, I continue to keep an eye on openSUSE and even had it on a secondary PC before font resolution deficiencies had me looking elsewhere. While it’s best known for its KDE variant, there is a GNOME one too and it is this that I have been examining.

There was a time when this was being touted as an Ubuntu killer but it never seems to have made good on that promise. Recent troubles within the project haven’t helped either, especially with a long wait between releases.

This Turkish distro recently got reviewed in Linux Format and they were not satisfied with its documentation. It does not help that the website is not in English, so you need a translation tool of your choosing for this one.

Though there also is a spin using the MATE desktop environment, this distro is perhaps better known as the home for the Budgie desktop environment. All of this is for computing and not its business or enterprise counterpart. There is nothing to say against that and may make it feel a little more friendly.

The name sounded similar for some reason and I reckon that’s because Samsung has smartphones running Tizen on sale. The whole point of the project is to power mobile computing platforms with only the mention of netbooks sullying an otherwise non-PC target market that includes tablets and TV’s. It’s overseen by the Linux Foundation too.

UNIX

21st September 2012The world of open UNIX variants may not be as vibrant as the Linux one, but UNIX predates Linux by decades so it might be put down to its much greater maturity. BSD seems to predominate here, but the reason may be because of Sun keeping a tight hold on Solaris for so long. Now that Oracle has gone and been more restrictive again, it is the breakaway projects to which we have to look for OpenSolaris successors now. However, the partially free availability of Solaris 10 & 11 may draw some away from the open-source community of the alternative.

BSD

In the world of BSD UNIX, it often is difficult to see what is different between the various projects and some are based on technical excellence using the sort of reasoning that would be inaccessible to many computer users. Though many see the operating system as being one for servers alone, there are PC-focussed versions with PC-BSD being the most notable. The existence of those projects is in start contrast to a mantra that keeps BSD for servers and Linux for desktop systems.

This was a fork of FreeBSD and it seems to have been done for very technical reasons, such as handling of cluster computing and larger disc drives. If the reasons make sense to you, then it could be an option, but it doesn’t sound like one for the masses, though BSD UNIX hardly is at the best of times.

When someone turns to creating a desktop variant of BSD, FreeBSD seems to be a starting point for so much of the time. Even Debian, itself the foundation of so many Linux distributions, bases its own BSD variant on FreeBSD and Gentoo apparently has been looking at doing something similar. FreeBSD does give away a bias towards servers in that the default installation does not include a desktop environment. However, if you do the work, you can get one like GNOME 2 or XFCE on there and the process does remind me of the thinking behind Arch Linux. Until recently, I had FreeBSD 10 installed in a VirtualBox virtual machine until a software update broke it and that does sit well with the BSD culture of stability. Of course, it could be another sign of a focus on server computing too. Nevertheless, it ran well until then and fared no worse than the aforementioned Arch Linux, though it probably should have done better.

Apparently, this is FreeBSD with a choice of MATE (a fork of GNOME 2 for those not fancying the idea of using GNOME 3 and its GNOME Shell), XFCE, LXDE or OpenBox desktop environments. A recent look demonstrated that the desktop environments are turned out very nicely too. All in all, it looks like an interesting counterpart to what you would find with a Linux distro.

Given the troubled state of the online world because of cybercrime and cyberwarfare, it hardly comes as a surprise that computer security has a higher profile than it ever has. It then is hardly surprising that someone decided to create a more secure spin of FreeBSD. For added context, here is what the project had to say about its goals:

HardenedBSD aims to implement innovative exploit mitigation and security solutions for the FreeBSD community. Security is like an onion--it’s made up of layers. To be successful, attackers must peel back each layer. HardenedBSD takes a holistic approach to security by hardening the system and implementing exploit mitigation technologies. We will work with FreeBSD and any other FreeBSD-based project to include our innovations. Our primary goal is to provide a clean-room reimplementation of the publicly documented parts of the grsecurity patchset for Linux.

According to the website, this is a derivative of NetBSD developed with desktop users in mind. At first, it had a feel that would have been more widely available with UNIX and Linux systems in the middle of the 1990’s. Since then, XFCE was chosen as a desktop environment and that has modernised the feel.

Since I last had a look, the focus of this project has become portability. What they mean by portability is have versions of NetBSD that run on all sorts of hardware and I even thought I saw a mention of Sony PlayStation (PS2) if my eyes did not deceive me and ARM-based systems also appeared, hardly a surprise with the rise of tablet computing. Other more conventional computing platforms are served too, but the others make NetBSD stand out from the others more than I once thought it did.

To some, portability is about running software under different hardware architectures. That is not what is meant here since we are talking about the ability to run an installation off a USB drive plugged in to any computer, more likely with Intel and AMD processors. The underlying basis is FreeBSD with OpenBox being the chosen desktop environment, assuring a friendly user interface as well.

With a strap line like “Only two remote holes in the default install, in a heck of a long time!”, you’d have to suspect that security and stability are the key attributes of this operating system. The security aspect certainly crops up a lot so I think that a spot of exploration is in order, especially when various system types (x86 and SPARC are just two of them) are supported anyway. The ongoing furore about intelligence service monitoring and increasing numbers of attacks on different systems over the web do make the whole subject more relevant now than it ever was and it never was irrelevant.

When m0n0wall was discontinued in 2015, OPNsense was forked from pfSense, a move that has left tension between the two projects. The newcomer gave the following reasons for its actions: code quality, regular releases, security issues related to the web UI being run as root, source code for the pfSense build tools is no longer publicly available, concern regarding transparency, new ownership of the pfSense brand, using the brand name to fence off the competition and several licence changes for no apparent reason. These have been contested by the pfSense while OPNsense now uses HardenedBSD as its basis and has stuck with a frequent release model.

This was started in 2004 as a fork of the now defunct m0n0wall with the first public release coming in 2006. It is based on FreeBSD and can be installed on physical or virtual appliances for added network security. It seems to add a BSD installation for a firewall and other security functions, but there clearly is a place for this in the enterprise market by all accounts.

Network-assisted Storage (NAS) has blossomed in recent years for home users and anyone with a DIY mindset might be tempted to go and build things themselves using PC parts and it is for those that this FreeBSD-based distro would be an asset. When I went looking at the possibility, the inability to boot the installation disk that I was using put paid to the attempt. Then, I was left wondering if my use of AMD’s CPU’s was part of the problem, though I since have realised that building a low-power system might be a better option than reusing a full PC. There has been an incursion into the world of NAS drives in the form of a 3 GB Western Digital My Book Live, so any return to DIY ways could be a better informed.

Like TrueNAS, this another BSD for use when making an old PC into a NAS file server. In fact, this came into being when part of the FreeNAS community took exception to the direction in which iXsystems were starting to take it after 2011. It also is based on FreeBSD and has a different web interface. That makes it an alternative if TrueNAS does not do the deed for you.

Solaris

One of the casualties of Oracle’s takeover of Sun Microsystems was the community-based OpenSolaris project. The more proprietary Solaris 11 Express became Oracle’s answer to the need that OpenSolaris fulfilled back then. Since, Solaris 10 & 11 became available without charge with support contracts becoming the revenue earner.

The demise of OpenSolaris saw a major new project emerge. Its basis is Illumos, itself a fork of the now defunct OpenSolaris, and a recent look revealed that it is maturing rather nicely. MATE is the chosen desktop environment so it should not be that unfamiliar to those coming from the Linux world. Initially, there is not so much software installed, but Firefox does get included and there is a graphical package manager, so there is little point in complaining.

The enterprise focus of this offering is plain on the website since virtualisation and the storage platform get a strong showing. Discussion of desktop environments and such like are conspicuous by their absence. Seemingly, this is infrastructural software above all else and there are support contracts available too.

The website for this Illumos distro has a retro, so it is easy to believe that the operating system could be similar. Since MATE, XFCE and Enlightenment are the available desktop environments, anyone coming from Linux should be thrown off very much once they figure out how to get things started.

With a moniker like “Converged Container and Virtual Machine Hypervisor”, this clearly is not a desktop computing offering. There is more than a hint of cloud computing about it and that hardly is a surprise given the age in which we work.

Adding a new hard drive to Ubuntu

19th January 2009This is a subject that I thought that I had discussed on this blog before but I can’t seem to find any reference to it now. I have discussed the subject of adding hard drives to Windows machines a while back so that might explain what I was under the impression that I was. Of course, there’s always the possibility that I can’t find things on my own blog but I’ll go through the process.

What has brought all of this about was the rate at which digital images were filling my hard disks. Even with some housekeeping, I could only foresee the collection growing so I went and ordered a 1TB Western Digital Caviar Green Power from Misco. City Link did the honours with the delivery and I can credit their customer service with regard to organising delivery without my needing to get to the depot to collect the thing; it was a refreshing experience that left me pleasantly surprised.

For the most of the time, hard drives that I have had generally got on with the job there was one experience that has left me wary. Assured by good reviews, I went and got myself an IBM DeskStar and its reliability didn’t fill me with confidence and I will not touch their Hitachi equivalents because of it (IBM sold their hard drive business to Hitachi). This was a period in time when I had a hardware faltering on me with an Asus motherboard putting me off that brand around the same time as well (I now blame it for going through a succession of AMD Athlon CPU’s). The result is that I have a tendency to go for brands that I can trust from personal experience and both Western Digital falls into this category (as does Gigabyte for motherboards), hence my going for a WD this time around. That’s not to say that other hard drive makers wouldn’t satisfy my needs since I have had no problems with disks from Maxtor or Samsung but Ill stick with those makers that I know until they leave me down, something that I hope never happens.

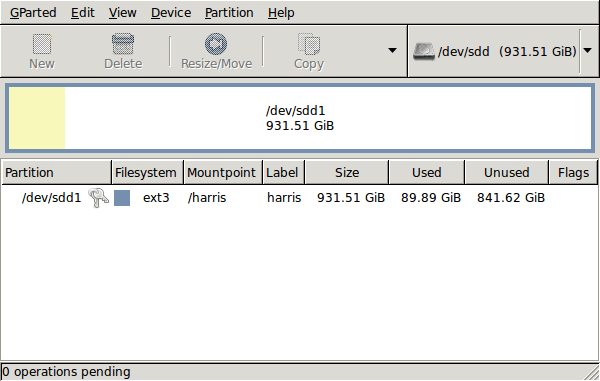

GParted running on Ubuntu

Anyway, let’s get back to installing the hard drive. The physical side of the business was the usual shuffle within the PC to add the SATA drive before starting up Ubuntu. From there, it was a matter of firing up GParted (System -> Administration -> Partition Editor on the menus if you already have it installed). The next step was to find the new empty drive and create a partition table on it. At this point, I selected msdos from the menu before proceeding to set up a single ext3 partition on the drive. You need to select Edit -> Apply All Operations from the menus set things into motion before sitting back and waiting for GParted to do its thing.

After the GParted activities, the next task is to set up automounting for the drive so that it is available every time that Ubuntu starts up. The first thing to be done is to create the folder that will be the mount point for your new drive, /newdrive in this example. This involves editing /etc/fstab with superuser access to add a line like the following with the correct UUID for your situation:

UUID=”32cf775f-9d3d-4c66-b943-bad96049da53″ /newdrive ext3 defaults,noatime,errors=remount-ro

You can can also add a comment like “# /dev/sdd1” above that so that you know what’s what in the future. To get the actual UUID that you need to add to fstab, issue a command like one of those below, changing /dev/sdd1 to what is right for you:

sudo vol_id /dev/sdd1 | grep “UUID=” /* Older Ubuntu versions */

sudo blkid /dev/sdd1 | grep “UUID=” /* Newer Ubuntu versions */

This is the sort of thing that you get back and the part beyond the “=” is what you need:

ID_FS_UUID=32cf775f-9d3d-4c66-b943-bad96049da53

Once all of this has been done, a reboot is in order and you then need to set up folder permissions as required before you can use the drive. This part gets me firing up Nautilus using gksu and adding myself to the user group in the Permissions tab of the Properties dialogue for the mount point (/newdrive, for example). After that, I issued something akin to the following command to set global permissions:

chmod 775 /newdrive

With that, I had completed what I needed to do to get the WD drive going under Ubuntu. After that IBM DeskStar experience, the new drive remains on probation but moving some non-essential things on there has allowed me to free some space elsewhere and carry out a reorganisation. Further consolidation will follow but I hope that the new 931.51 GiB (binary gigabytes or 1024*1024*1024 rather the decimal gigabytes (1,000,000,000) preferred by hard disk manufacturers) will keep me going for a good while before I need to add extra space again.

Setting up a WD My Book Live NAS on Ubuntu GNOME 13.10

1st December 2013The official line from Western Digital is that they do not support the use of their My Book Live NAS drives with Linux or UNIX. However, what that means is that they only develop tools for accessing their products for Windows and maybe OS X. It still doesn’t mean that you cannot access the drive’s configuration settings by pointing your web browser at http://mybooklive.local/. In fact, not having those extra tools is no drawback at all since the drive can be accessed through your file manager of choice under the Network section and the default name is MyBookLive too so you easily can find the thing once it is connected to a router or switch anyway.

Once you are in the servers web configuration area, you can do things like changing its name, updating its firmware, finding out what network has been assigned to it, creating and deleting file shares, password protecting file shares and other things. These are the kinds of things that come in handy if you are going to have a more permanent connection to the NAS from a PC that runs Linux. The steps that I describe have worked on Ubuntu 12.04 and 13.10 with the GNOME desktop environment.

What I was surprised to discover that you cannot just set up a symbolic link that points to a file share. Instead, it needs to be mounted and this can be done from the command line using mount or at start-up with /etc/fstab. For this to happen, you need the Common Internet File System utilities and these are added as follows if you need them (check on the Software Software or in Synaptic):

sudo apt-get install cifs-utils

Once these are added, you can add a line like the following to /etc/fstab:

//[NAS IP address]/[file share name] /[file system mount point] cifs

credentials=[full file location]/.creds,

iocharset=utf8,

sec=ntlm,

gid=1000,

uid=1000,

file_mode=0775,

dir_mode=0775

0 0

Though I have broken it over several lines above, this is one unwrapped line in /etc/fstab with all the fields in square brackets populated for your system and with no brackets around these. Though there are other ways to specify the server, using its IP address is what has given me the most success and this is found under Settings > Network on the web console. Next up is the actual file share name on the NAS and I have used a custom them instead of the default of Public. The NAS file share needs to be mounted to an actual directory in your file system like /media/nas or whatever you like and you will need to create this beforehand. After that, you have to specify the file system and it is cifs instead of more conventional alternatives like ext4 or swap. After this and before the final two space delimited zeroes in the line comes the chunk that deals with the security of the mount point.

What I have done in my case is to have a password protected file share and the user ID and password have been placed in a file in my home area with only the owner having read and write permissions for it (600 in chmod-speak). Preceding the filename with a “.” also affords extra invisibility. That file then is populated with the user ID and password like the following. Of course, the bracketed values have to be replaced with what you have in your case.

username=[NAS file share user ID]

password=[NAS file share password]

With the credentials file created, its options have to be set. First, there is the character set of the file (usually UTF-8 and I got error code 79 when I mistyped this) and the security that is to be applied to the credentials (ntlm in this case). To save having no write access to the mounted file share, the uid and gid for your user needs specification with 1000 being the values for the first non-root user created on a Linux system. After that, it does no harm to set the file and directory permissions because they only can be set at mount time; using chmod, chown and chgrp later on has no effect whatsoever. Here, I have set permissions to read, write and execute for the owner and the user group while only allowing read and execute access for everyone else (that’s 775 in the world of chmod).

All of what I have described here worked for me and had to gleaned from disparate sources like Mount Windows Shares Permanently from the Ubuntu Wiki, another blog entry regarding the permissions settings for a CIFS mount point and an Ubuntu forum posting on mounting CIFS with UTF-8 support. Because of the scattering of information, I just felt that it needed to all together in one place for others to use and I hope that fulfils someone else’s needs in a similar way to mine.

Piggybacking an Android Wi-Fi device off your Windows PC’s internet connection

16th March 2013One of the disadvantages of my Google/Asus Nexus 7 is that it needs a Wi-Fi connection to use. Most of the time this is not a problem since I also have a Huawei mobile WiFi hub from T-Mobile and this seems to work just about anywhere in the U.K. Away from the U.K. though, it won’t work because roaming is not switched on for it and that may be no bad thing with the fees that could introduce. My HTC Desire S could deputise but I need to watch costs with that too.

There’s also the factor of download caps and those apply both to the Huawei and to the HTC. Recently, I added Anquet‘s Outdoor Map Navigator (OMN) to my Nexus 7 through the Google Play store for a fee of £7 and that allows access to any walking maps that I have bought from Anquet. However, those are large downloads so the caps start to come into play. Frugality would help but I began to look at other possibilities that make use of a laptop’s Wi-Fi functionality.

Looking on the web, I found two options for this that work on Windows 7 (8 should be OK too): Connectify Hotspot and Virtual Router Manager. The first of these is commercial software but there is a Lite edition for those wanting to try it out; that it is not a time limited demo is not something that I can confirm though that did not seem to be the case since it looked as if only features were missing from it that you’d get if you paid for the Pro variant. The second option is an open source one and is free of charge apart from an invitation to donate to the project.

Though online tutorials show the usage of either of these to be straightforward, my experiences were not all that positive at the outset. In fact, there was something that I needed to do and that is why this post has come to exist at all. That happened even after the restart that Conectify Hotspot needed as part of its installation; it runs as a system service so that’s why the restart was needed. In fact, it was Virtual Router Manager that told me what the issue was and it needed no reboot. Neither did it cause network disconnection of a laptop like the Connectify offering did on me and that was the cause of its ejection from that system; limitations in favour of its paid addition aside, it may have the snazzier interface but I’ll take effective simplicity any day.

Using Virtual Router Manager turns out to be simple enough. It needs a network name (also known as an SSID), a password to restrict who accesses the network and the internet connection to be shared. In my case, the was Local Area Connection on the drop down list. With all the required information entered, I was ready to start the router using the Start Network Router button. The text on this changes to Stop Network Router when the hub is operational or at least it should have done for me on the first time that I ran it. What I got instead was the following message:

The group or resource is not in the correct state to perform the requested operation.

The above may not say all that much but it becomes more than ample information if you enter it into the likes of Google. Behind the scenes, Virtual Router Manager is using native Windows functionality is create a WiFi hub from a PC and it appears to be the Microsoft Virtual Wi-Fi Miniport Adapter from what I have seen. When I tried setting up an adhoc Wi-Fi network from a laptop to the Nexus 7 using Windows’ own network set up capability via its Control Panel, it didn’t do what I needed so there might be something that third party software can do. So, the interesting thing about the solution to my Virtual Router Manager problem was that it needed me to delve into the innards of Windows a little.

Firstly, there’s running Command Prompt (All Programs > Accessories) from the Start Menu with Administrator privileges. It helps here if the account with which you log into Windows is in the Administrators group since all you have to do then is right click on the Start Menu entry and choose Run as administrator entry in the pop-up context menu. With a command line window now open, you then need to issue the following command:

netsh wlan set hostednetwork mode=allow ssid=[network name] key=[password] keyUsage=persistent

When that had done its thing, Virtual Router Manager worked without a hitch though it did turn itself after a while and that may be no bad thing from the security standpoint. On the Android side, it was a matter of going in Settings > Wi-Fi and choose the new network that have been creating on the laptop. This sort of thing may apply to other types of tablet (Dare I mention iPads?) so you could connect anything to the hub without needing to do any more on the Windows side.

For those wanting to know what’s going on behind the scenes on Windows, there’s a useful tutorial on Instructables that shows what third party software is saving you from having to do. Even if I never go down the more DIY route, I probably have saved myself having to buy a mobile Wi-Fi hub for any trips to Éire. For now, the Irish 3G dongle that I already have should be enough.

Upgrading from Windows 7 to Windows 8 in a VMWare Virtual Machine

1st November 2012Though my main home PC runs Linux Mint, I do like to have the facility to use Windows software from time to time and virtualisation has allowed me to continue doing that. For a good while, it was a Windows 7 guest within a VirtualBox virtual machine and, before that, one running Windows XP fulfilled the same role. However, it did feel as if things were running slower in VirtualBox than once might have been the case and I jumped ship to VMware Player. It may be proprietary and closed source but it is free of charge and has been doing what was needed. A subsequent recent upgrade of video driver on the host operating system allowed the enabling of a better graphical environment in the Windows 7 guest.

Instability

However, there were issues with stability and I lost the ability to flit from the VM window to the Linux desktop at will with the system freezing on me and needing a reboot. Working in Windows 7 using full screen mode avoided this but it did feel as I was constrained to working in a Windows machine whenever I did so. The graphics performance was imperfect too with screening refreshing being very blocky with some momentary scrambling whenever I opened the Start menu. Others would not have been as patient with that as I was though there was the matter of an expensive Photoshop licence to be guarded too.

In hindsight, a bit of pruning could have helped. An example would have been driver housekeeping in the form of removing VirtualBox Guest Additions because they could have been conflicting with their VMware counterparts. For some reason, those thoughts entered my mind and I was pondering another more expensive option instead.

Considering NAS & Windows/Linux Networking

That would have taken the form of setting aside a PC for running Windows 7 and having a NAS for sharing files between it and my Linux system. In fact, I did get to exploring what a four bay QNAP TS-412 would offer me and realised that you cannot put normal desktop hard drives into devices like that. For a while, it looked as if it would be a matter of getting drives bundled with the device or acquiring enterprise grade disks so as to main the required continuity of operation. The final edition of PC Plus highlighted another one though: the Western Digital Red range. These are part way been desktop and enterprise classifications and have been developed in association with NAS makers too.

Looking at the NAS option certainly became an education but it has exited any sort of wish list that I have. After all, there is the cost of such a setup and it’s enough to get me asking if I really need such a thing. The purchase of a Netgear FS 605 ethernet switch would have helped incorporate it but there has been no trouble sorting alternative uses for it since it bumps up the number of networked devices that I can have, never a bad capability to have. As I was to find, there was a less expensive alternative that became sufficient for my needs.

In-situ Windows 8 Upgrade

Microsoft have been making available evaluation copies of Windows 8 Enterprise that last for 90 days before expiring. One is in my hands has been running faultlessly in a VMware virtual machine for the past few weeks. That made me wonder if upgrading from Windows 7 to Windows 8 help with my main Windows VM problems. Being a curious risk-taking type I decided to answer the question for myself using the £24.99 Windows Pro upgrade offer that Microsoft have been running for those not needing a disk up front; they need to pay £49.99 but you can get one afterwards for an extra £12.99 and £3.49 postage if you wish, a slightly cheaper option. There also was a time cost in that it occupied a lot of a weekend on me but it seems to have done what was needed so it was worth the outlay.

Given the element of risk, Photoshop was deactivated to be on the safe side. That wasn’t the only pre-upgrade action that was needed because the Windows 8 Pro 32-bit upgrade needs at least 16 GB before it will proceed. Of course, there was the matter of downloading the installer from the Microsoft website too. This took care of system evaluation and paying for the software as well as the actual upgrade itself.

The installation took a few hours with virtual machine reboots along the way. Naturally, the licence key was needed too as well as the selection of a few options though there weren’t many of these. Being able to carry over settings from the pre-exisiting Windows 7 instance certainly helped with this and with making the process smoother too. No software needed reinstatement and it doesn’t feel as if the system has forgotten very much at all, a successful outcome.

Post-upgrade Actions

Just because I had a working Windows 8 instance didn’t mean that there wasn’t more to be done. In fact, it was the post-upgrade sorting that took up more time than the actual installation. For one thing, my digital mapping software wouldn’t work without .Net Framework 3.5 and turning on the operating system feature form the Control Panel fell over at the point where it was being downloaded from the Microsoft Update website. Even removing Avira Internet Security after updating it to the latest version had no effect and it was a finding during the Windows 8 system evaluation process. The solution was to mount the Windows 8 Enterprise ISO installation image that I had and issue the following command from a command prompt running with administrative privileges (it’s all one line though that’s wrapped here):

dism.exe /online /enable-feature /featurename:NetFX3 /Source:d:\sources\sxs /LimitAccess

For sake of assurance regarding compatibility, Avira has been replaced with Trend Micro Titanium Internet Security. The Avira licence won’t go to waste since I have another another home in mind for it. Removing Avira without crashing Windows 8 proved impossible though and necessitating booting Windows 8 into Safe Mode. Because of much faster startup times, that cannot be achieved with a key press at the appropriate moment because the time window is too short now. One solution is to set the Safe Boot tickbox in the Boot tab of Msconfig (or System Configuration as it otherwise calls itself) before the machine is restarted. There may be others but this was the one that I used. With Avira removed, clearing the same setting and rebooting restored normal service.

Dealing with a Dual Personality

One observer has stated that Windows 8 gives you two operating systems for the price of one: the one in the Start screen and the one on the desktop. Having got to wanting to work with one at a time, I decided to make some adjustments. Adding Classic Shell got me back a Start menu and I left out the Windows Explorer (or File Explorer as it is known in Windows 8) and Internet Explorer components. Though Classic Shell will present a desktop like what we have been getting from Windows 7 by sweeping the Start screen out of the way for you, I found that this wasn’t quick enough for my liking so I added Skip Metro Suite to do this and it seemed to do that a little faster. The tool does more than sweeping the Start screen out of the way but I have switched off these functions. Classic Shell also has been configured so the Start screen can be accessed with a press of Windows key but you can have it as you wish. It has updated too so that boot into the desktop should be faster now. As for me, I’ll leave things as they are for now. Even the possibility of using Windows’ own functionality to go directly to the traditional desktop will be left untested while things are left to settle. Tinkering can need a break.

Outcome

After all that effort, I now have a seemingly more stable Windows virtual machine running Windows 8. Flitting between it and other Linux desktop applications has not caused a system freeze so far and that was the result that I wanted. There now is no need to consider having separate Windows and Linux PC’s with a NAS for sharing files between them so that option is well off my wish-list. There are better uses for my money.

Not everyone has had my experience though because I saw a report that one user failed to update a physical machine to Windows 8 and installed Ubuntu instead; they were a Linux user anyway even if they used Fedora more than Ubuntu. It is possible to roll back from Windows 8 to the previous version of Windows because there is a windows.old directory left primarily for that purpose. However, that may not help you if you have a partially operating system that doesn’t allow you to do just that. In time, I’ll remove it using the Disk Clean-up utility by asking it to remove previous Windows installations or running File Explorer with administrator privileges. Somehow, the former approach sounds the safer.

What About Installing Afresh?

While there was a time when I went solely for upgrades when moving from one version of Windows to the next, the annoyance of the process got to me. If I had known that installing the upgrade twice onto a computer with a clean disk would suffice, it would have saved me a lot. Staring from Windows 95 (from the days when you got a full installation disk with a PC and not the rescue media that we get now) and moving through a sequence of successors not only was time consuming but it also revealed the limitations of the first in the series when it came to supporting more recent hardware. It was enough to have me buying the full retailed editions of Windows XP and Windows 7 when they were released; the latter got downloaded directly from Microsoft. These were retail versions that you could move from one computer to another but Windows 8 will not be like that. In fact, you will need to get its System Builder edition from a reseller and that can only be used on one machine. It is the merging of the former retail and OEM product offerings.

What I have been reading is that the market for full retail versions of Windows was not a big one anyway. However, it was how I used to work as you have read above and it does give you a fresh system. Most probably get Windows with a new PC and don’t go building them from scratch like I have done for more than a decade. Maybe the System Builder version would apply to me anyway and it appears to be intended for virtual machine use as well as on physical ones. More care will be needed with those licences by the looks of things and I wonder what needs not to be changed so as not to invalidate a licence. After all, making a mistake might cost between £75 and £120 depending on the edition.

Final Thoughts

So far Windows 8 is treating me well and I have managed to bend to my will too, always a good thing to be able to say. In time, it might be that a System Builder copy could need buying yet but I’ll leave well alone for now. Though I needed new security software, the upgrade still saved me money over a hardware solution to my home computing needs and I have a backup disk on order from Microsoft too. That I have had to spend some time settling things was a means of learning new things for me but others may not be so patient and, with Windows 7 working well enough for most, you have to ask if it’s only curious folk like me who are taking the plunge. Still, the dramatic change has re-energised the PC world in an era when smartphones and tablets have made so much of the running recently. That too is no bad thing because an unchanging technology is one that dies and there are times when big changes are needed, as much as they upset some folk. For Microsoft, this looks like one of them and it’ll be interesting to see where things go from here for PC technology.

Automatically enabling your network connection at startup on CentOS 7

15th August 2014The release of CentOS 7 stoked my curiosity so I gave it a go in a VirtualBox virtual machine. It uses GNOME Shell in classic mode so the feel is not too far removed from that of GNOME 2. One thing to watch though is that it needs at least version 4.3.14 of VirtualBox or the Guest Additions kernel drivers will not compile at all. That might sound surprising when you learn that the kernel version is 3.10.x and that for GNOME Shell is 3.8.4. Much like Debian production releases, more established versions are chosen for the sake of stability and that fits in with the enterprise nature of the intended user base. Even with that more conservative approach, the results still please the eye though attempting to change the desktop background picture managed to freeze the machine. Other than that, most things work fine.

Even so, there are unexpected things to be encountered and one that I spotted was that network connectivity needed to switched on every time the VM was started. The default installation gives rise to this state of affairs and it is a known situation with CentOS from at least version 6 of the distribution and is not so hard to fix once you know what to do.

What you need to do is look for the relevant configuration file in /etc/sysconfig/network-scripts/ and update that. Using the ifconfig command, I found that the name of the network interface. Usually, this is something like eth0 but it was enp0s3 in my case so I had to look for a file named ifcfg-enp0s3 and edit that. The text that is sought is ONBOOT=no and that needs to become ONBOOT=yes for network connections to start automatically. To do something similar from the command line, CentOS had suggested the following:

sed -i -e ‘s@^ONBOOT=”no@ONBOOT=”yes@’ ifcfg-enp0s3

The above uses sed to do an inline (and case insensitve) edit of the file to change the offending no to a yes, once you have dropped in the /etc/sysconfig/network-scripts/ directory. My edit was done manually with Gedit so that works too. One thing to add is that any file editing needs superuser privileges so switching to root with the su command and using sudo is in order here.

Adding a Start Menu to Windows 8

16th October 2012For all the world, it looks like Microsoft has mined a concept from a not often recalled series of Windows: 3.x. Then, we had a Program Manager for starting all our applications with no sign of a Start Menu. That came with Windows 95 and I cannot anyone mourning the burying of the Program Manager interface either. It was there in Windows 95 if you knew where to look and I do remember starting an instance, possibly out of curiosity.

Every Windows user seems to have taken to the Start Menu regardless of how big they grow when you install a lot of software on your machine. It didn’t matter that Windows NT got it later than Windows 9x ones either; NT 3.51 has the Program Manager too and it was NT 4 that got the then new interface that has been developed and progressed in no less than four subsequent versions of Windows (2000, XP, Vista & 7). Maybe it was because computing was the preserve of fewer folk that the interchange brought little if any sign of a backlash. The zeitgeist of the age reflected the newness of desktop computing and its freshness probably brought an extra level of openness too.

Things are different now, though. You only have to hear of the complaints about changes to Linux desktop environments to realise how attached folk become to certain computer interfaces. Ironically, personal computing has just got exciting again after a fairly stale decade of stasis. Mobile computing devices are aplenty and it no longer is a matter of using a stationary desktop PC or laptop and those brought their own excitement in the 1990’s. In fact, reading a title like Computer Shopper reminds me of how things once were with its still sticking with PC reviews while others are not concentrating on them as much. Of course, the other gadgets get reviewed too so it is not stuck in any rut. Still, it is good to see the desktop PC getting a look in in an age when there is so much competition, especially from phones and tablets.

In this maelstrom, Microsoft has decided to do something dramatic with Windows 8. It has resurrected the Program Manager paradigm in the form of the Start screen and excised the Start Menu from the desktop altogether. For touch screen computing interfaces such as tablets, you can see the sense of this but it’s going to come as a major surprise to many. Removing what lies behind how many people interact with a PC is risky and you have to wonder how it’s going to work out for all concerned.

What reminded me of this was a piece on CNET by Mary Jo Foley. Interestingly, software is turning up that returns the Start Menu (or Button) to Windows 8. One of these is Classic Shell and I decided to give it a go on a Windows 8 Enterprise evaluation instance that I have. Installation is like any Windows program and I limited the options to the menu and updater. At the end of the operation, a button with a shell icon appeared on the desktop’s taskbar. You can make the resultant menu appear like that of Windows XP or Windows 7 if you want. There are other settings like what the Windows key does and what happens when you click on the button with a mouse. By default, both open the new Start Menu and holding down the Shift key when doing either brings up the Start screen. This is customisable so you can have things the other way around if you so desire. Another setting is to switch from the Start screen to the desktop after you log into Windows 8 (you may also have it log in for you automatically but it’s something that I believe anyone should be doing). The Start screen does flash up but things move along quickly; maybe having not appear at all would be better for many.

Classic Shell is free of charge and worked well for me apart from that small rough edge noted above. It also is open source and looks well maintained too. For that reason, it appeals to me more that Stardock’s Start8 (currently in beta release at the time of writing) or Pokki for Windows 8, which really is an App Store that adds a Start Menu. If you encounter Windows 8 on a new computer, then they might be worth trying should you want a Start Menu back. Being an open-minded type, I could get along with the standard Windows 8 interface but it’s always good to have choices too. Most of us want to own our computing experience, it seems, so these tools could have their uses for Windows 8 users.

Initial impressions of Windows 10

31st October 2014Being ever curious on the technology front, the release of the first build of a Technical Preview of Windows 10 was enough to get me having a look at what was on offer. The furore regarding Windows 8.x added to the interest so I went to the download page to get a 64-bit installation ISO image.

That got installed into a fresh VirtualBox virtual machine and the process worked smoothly to give something not so far removed from Windows 8.1. However, it took until release 4.3.18 of VirtualBox before the Guest additions had caught up with the Windows prototype so I signed up for the Windows Insider program and got a 64-bit ISO image to install the Enterprise preview of Windows 10 into a VMware virtual machine since and that supported full screen display of the preview while VirtualBox caught up with it.

Of course, the most obvious development was the return of the Start Menu and it works exactly as expected too. Initially, the apparent lack of an easy way to disable App panels had me going to Classic Shell for an acceptable Start Menu. It was only later that it dawned on me that unpinning these panels would deliver to me the undistracting result that I wanted.

Another feature that attracted my interest is the new virtual desktop functionality. Here I was expecting something like what I have used on Linux and UNIX. There, each workspace is a distinct desktop with only the applications open in a given workspace showing on a panel in there. Windows does not work that way with all applications visible on the taskbar regardless of what workspace they occupy, which causes clutter. Another deficiency is not having a desktop indicator on the taskbar instead of the Task View button. On Windows 7 and 8.x, I have been a user of VirtuaWin and this still works largely in the way that I expect of it too, except for any application windows that have some persistence associated with them; the Task Manager is an example and I include some security software in the same category too.

Even so, here are some keyboard shortcuts for anyone who wants to take advantage of the Windows 10 virtual desktop feature:

- Create a new desktop: Windows key + Ctrl + D

- Switch to previous desktop: Windows key + Ctrl + Left arrow

- Switch to next desktop: Windows key + Ctrl + Right arrow

Otherwise, stability is excellent for a preview of a version of Windows that is early on its road to final release. An upgrade to a whole new build went smoothly when initiated following a prompt from the operating system itself. All installed applications were retained and a new taskbar button for notifications made its appearance alongside the existing Action Centre icon. So far, I am unsure what this does and whether the Action Centre button will be replaced in the fullness of time but I am happy to await where things go with this.

All is polished up to now and there is nothing to suggest that Windows 10 will not be to 8.x what 7 was to Vista. The Start Screen has been dispatched after what has proved to be a misadventure on the part of Microsoft. The PC still is with us and touchscreen devices like tablets are augmenting it instead of replacing it for any tasks involving some sort of creation. If anything, we have seen the PC evolve with laptops perhaps becoming more like the Surface Pro, at least when it comes to hybrid devices. However, we are not as happy smudge our PC screens quite like those on phones and tablets so a return to a more keyboard and mouse centred approach for some devices is a welcome one.

What I have here are just a few observations and there is more elsewhere, including a useful article by Ed Bott on ZDNet. All in all, we are early in the process for Windows 10 and, though it looks favourable so far, I will continue to keep an eye on how it progresses. It needs to be less experimental than Windows 8.x and it certainly is less schizophrenic and should not be a major jump for users of Windows 7.

Ubuntu upgrades: do a clean installation or use Update Manager?

9th April 2009Part of some recent “fooling” brought on by the investigation of what turned out to be a duff DVD writer was a fresh installation of Ubuntu 8.10 on my main home PC. It might have brought on a certain amount of upheaval but it was nowhere near as severe as that following the same sort of thing with a Windows system. A few hours was all that was needed but the question as to whether it is better to do an upgrade every time a new Ubuntu release is unleashed on the world or to go for a complete virgin installation instead. With Ubuntu 9.04 in the offing, that question takes on a more immediate significance than it otherwise might do.

Various tricks make the whole reinstallation idea more palatable. For instance, many years of Windows usage have taught me the benefits of separating system and user files. The result is that my home directory lives on a different disk to my operating system files. Add to that the experience of being able to reuse that home drive across different Linux distros and even swapping from one distro to another becomes feasible. From various changes to my secondary machine, I can vouch that this works for Ubuntu, Fedora and Debian; the latter is what currently powers the said PC. You might have to user superuser powers to attend to ownership and access issues but the portability is certainly there and it applies anything kept on other disks too.

Naturally, there’s always the possibility of losing programs that you have had installed but losing the clutter can be liberating too. However, assembling a script made up up of one of more apt-get install commands can allow you to get many things back at a stroke. For example, I have a test web server (Apache/MySQL/PHP/Perl) set up so this would be how I’d get everything back in place before beginning further configuration. It might be no bad idea to back up your collection of software sources either; I have yet to add all of the ones that I have been using back into Synaptic. Then there are closed source packages such as VirtualBox (yes, I know that there is an open source edition) and Adobe Reader. After reinstating the former, all my virtual machines were available for me to use again without further ado. Restoring the latter allowed me to grab version 9.1 (probably more secure anyway) and it inveigles itself into Firefox now too so the number of times that I need to go through the download shuffle before seeing the contents of a PDF are much reduced, though not completely eliminated by the Windows-like ability to see a PDF loaded in a browser tab. Moving from software to hardware for a moment, it looks like any bespoke actions such as my activating an Epson Perfection 4490 Photo scanner need to be repeated but that was all that I needed to do. Getting things back into order is not so bad but you need to allow a modicum of time for this.

What I have discussed so far are what might be categorised as the common or garden aspects of a clean installation but I have seen some behaviours that make me wonder if the usual Ubuntu upgrade path is sufficiently complete in its refresh of your system. The counterpoint to all of this is that I may not have been looking for some of these things before now. That may apply to my noticing that DSLR support seems to be better with my Canon and Pentax cameras both being picked up and mounted for me as soon as they are connected to a PC, the caveat being that they are themselves powered on for this to happen. Another surprise that may be new is that the BBC iPlayer’s Listen Again works without further work from the user, a very useful development. It very clearly wasn’t that way before I carried out the invasive means. My previous tweaking might have prevented the in situ upgrade from doing its thing but I do see the point of not upsetting people’s systems with an overly aggressive update process, even if it means that some advances do not make themselves known.

So what’s my answer regarding which way to go once Ubuntu Jaunty Jackalope appears? For sake of avoiding initial disruption, I’d be inclined to go down the Update Manager route first while reserving the right to do a fresh installation later on. All in all, I am left with the gut feeling is that the jury is still out on this one.