Generating custom log messages in R

24th April 2023While I have been exploring the use of R on a private basis during the last few years, a recent opportunity allowed me to use this exposure at work. This took the form of creating a utility script for use by others. To keep things lightweight, I did not go down the packaging route, but that may come later, possibly for something else.

However, anything used by others needs input checking and comprehensible feedback should anything go wrong. For me, that meant looking at the message, warning and stop functions. The last of these aborts script execution when there is a critical error while the other two do not do that. The message function is for informative user input while the warning function suggests things that may need their attention.

Each function takes string input and sends this to the terminal or log. They also can combine different pieces of text in the style of the paste0 function and can take the text output of other functions as input. Used in combination with conditional logic or error handling, they can help a user track down what went wrong without their needing to ask a script developer. Anything that helps anyone else to help themselves has to be good.

Avoiding permissions, times or ownership failure messages when using rsync

22nd April 2023The rsync command is one that I use heavily for doing backups and web publishing. The latter means that it is part of how I update websites built using Hugo because new and/or updated files need uploading. The command also sees usage when uploading files onto other websites as well. During one of these operations, and I am unsure now as to which type is relevant, I encountered errors about being unable to set permissions.

The cause was the encompassing -a option. This is a shorthand for -rltpgoD, and the individual options perform the following:

-r: recursive transfer, copying all contents within a directory hierarchy

-l: symbolic links copied as symbolic links

-t: preserve times

-p: preserve permissions

-g: preserve groups

-o: preserve owners

-D: preserve device and special files

The solution is to some of the options if they are inappropriate. The minimum is to omit the option for permissions preservation, but others may not apply between different servers either, especially when operating systems differ. Removing the options for preserving permissions, groups and owners results in something like this:

rsync -rltD [rest of command]

While it can be good to have a more powerful command with the setting of a single option, it can mean trying to do too much. Another way to avoid permissions and similar errors is to have consistency between source and destination files systems, but that is not always possible.

Removing duplicate characters from strings using BASH scripting

30th March 2023Recently, I wanted to extract some text from the Linux command by word number only for multiple spaces to make things less predictable. The solution was to remove the duplicate spaces. This can be done using sed but you add the complexity of regular expressions if you opt for that solution. Instead, the tr command offers a neater approach. For removing duplicate spaces, the command takes the following form:

echo "test test" | tr -s " "

Since I was piping some text to the command, that is what I have above. The tr command is intended to replace or delete characters and the -s switch is a shorthand for --squeeze-repeats. The actual character to be deduplicated is passed in quotes at the end; here, it is a space but it could be anything that is duplicated. The resulting text in this example becomes:

test test

After the processing, there is now only one space separating the two words, which is the solution that I sought. It certainly cut out any variability that I was encountering in my usage.

Picking out a word from a string by its position using BASH scripting

28th March 2023My wanting to execute one command using the text output of another recently got me wondering about picking out a block of characters using its position in a space-delimited list. All this needed to be done from the Linux command line or in a shell script. The output text took a form like the following:

text1 text2 text3 text4

What I wanted in my case was something like the third word above. The solution was to use the cut command with the -d (for delimiter) and -f (for field number) switches. The following yields text3 as the output:

echo "text1 text2 text3 text4" | cut -d " " -f 3

Here the delimiter is the space character but it can be anything that is relevant for the string in question. Then, the “3” picks out the required block of text. For this to work, the text needs to be organised consistently and for the delimiters never to be duplicated, though there is a way of dealing with the latter as well.

Rendering Markdown into HTML using PHP

3rd December 2022One of the good things about using virtual private servers for hosting websites in preference to shared hosting or using a web application service like WordPress.com or Tumblr is that you get added control and flexibility. There was a time when HTML, CSS and client-side scripting were all that was available from the shared hosting providers that I was using. Then, static websites were my lot until it became possible to use Perl server side scripting. PHP predominates now, but Python or Ruby cannot be discounted either.

Being able to install whatever you want is a bonus as well, though it means that you also are responsible for the security of the containers that you use. There will be infrastructure security, but that of your own machine will be your own concern. Added power always means added responsibility, as many might say.

The reason that these thought emerge here is that getting PHP to render Markdown as HTML needs the installation of Composer. Without that, you cannot use the CommonMark package to do the required back-work. All the command that you see here will work on Ubuntu 22.04. First, you need to download Composer and executing the following command will accomplish this:

curl https://getcomposer.org/installer -o /tmp/composer-setup.php

Before the installation, it does no harm to ensure that all is well with the script before proceeding. That means that capturing the signature for the script using the following command is wise:

HASH=`curl https://composer.github.io/installer.sig`

Once you have the script signature, then you can check its integrity using this command:

php -r "if (hash_file('SHA384', '/tmp/composer-setup.php') === '$HASH') { echo 'Installer verified'; } else { echo 'Installer corrupt'; unlink('composer-setup.php'); } echo PHP_EOL;"

The result that you want is “Installer verified”. If not, you have some investigating to do. Otherwise, just execute the installation command:

sudo php /tmp/composer-setup.php --install-dir=/usr/local/bin --filename=composer

With Composer installed, the next step is to run the following command in the area where your web server expects files to be stored. That is important when calling the package in a PHP script.

composer require league/commonmark

Then, you can use it in a PHP script like so:

define("ROOT_LOC",$_SERVER['DOCUMENT_ROOT']);

include ROOT_LOC . '/vendor/autoload.php';

use League\CommonMark\CommonMarkConverter;

$converter = new CommonMarkConverter();

echo $converter->convertToHtml(file_get_contents(ROOT_LOC . '<location of markdown file>));

The first line finds the absolute location of your web server file directory before using it when defining the locations of the autoload script and the required markdown file. The third line then calls in the CommonMark package, while the fourth sets up a new object for the desired transformation. The last line converts the input to HTML and outputs the result.

If you need to render the output of more than one Markdown file, then repeating the last line from the preceding block with a different file location is all you need to do. The CommonMark object persists and can be used like a variable without needing the reinitialisation to be repeated every time.

The idea of building a website using PHP to render Markdown has come to mind, but I will leave it at custom web pages for now. If an opportunity comes, then I can examine the idea again. Before, I had to edit HTML, but Markdown is friendlier to edit, so that is a small advance for now.

Moves to Hugo

30th November 2022What amazes me is how things can become more complicated over time. As long as you knew HTML, CSS and JavaScript, building a website was not as onerous as long as web browsers played ball with it. Since then, things have got easier to use but more complex at the same time. One example is WordPress: in the early days, themes were much simpler than they are now. The web also has got more insecure over time, and that adds to complexity as well. It sometimes feels as if there is a choice to make between ease of use and simplicity.

It is against that background that I reassessed the technology that I was using on my public transport and Irish history websites. The former used WordPress, while the latter used Drupal. The irony was that the simpler website was using the more complex platform, so the act of going simpler probably was not before time. Alternatives to WordPress were being surveyed for the first of the pair, but none had quite the flexibility, pervasiveness and ease of use that WordPress offers.

There is another approach that has been gaining notice recently. One part of this is the use of Markdown for web publishing. This is a simple and distraction-free plain text format that can be transformed into something more readable. It sees usage in blogs hosted on GitHub, but also facilitates the generation of static websites. The clutter is absent for those who have no need of the Gutenberg Editor on WordPress.

With the content written in Markdown, it can be fed to a static website generator like Hugo. Using defined templates and fixed assets like CSS together with images and other static files, it can slot the content into HTML files very speedily since it is written in the Go programming language. Once you get acclimatised, there are no folder structures that cannot be used, so you get full flexibility in how you build out your website. Sitemaps and RSS feeds can be built at the same time, both using the same input as the HTML files.

In a nutshell, it automates what once needed manual effort used a code editor or a visual web page editor. The use of HTML snippets and layouts means that there is no necessity for hand-coding content, like there was at the start of the web. It also helps that Bootstrap can be built in using Node, so that gives a basis for any styling. Then, SCSS can take care of things, giving even more automation.

Given that there is no database involved in any of this, the required information has to be stored somewhere, and neither the Markdown content nor the layout files contain all that is needed. The main site configuration is defined in a single TOML file, and you can have a single one of these for every publishing destination; I have development and production servers, which makes this a very handy feature. Otherwise, every Markdown file needs a YAML header where titles, template references, publishing status and other similar information gets defined. The layouts then are linked to their components, and control logic and other advanced functionality can be added too.

Because static files are being created, it does mean that site searching and commenting or contact pages cannot work they would on a dynamic web platform. Often, external services are plugged in using JavaScript. One that I use for contact forms is Getform.io. Then, Zapier has had its uses in using the RSS feed to tweet site updates on Twitter when new content gets added. Though I made different choices, Disqus can be used for comments and Algolia for site searching. Generally, though, you can find yourself needing to pay, particularly if you need to remove advertising or gain advanced features.

Some comments service providers offer open source self-hosted options, but I found these difficult to set up and ended up not offering commenting at all. That was after I tried out Cactus Comments only to find that it was not discriminating between pages, so it showed the same comments everywhere. There are numerous alternatives like Remark42, Hyvor Talk, Commento, FastComments, Utterances, Isso, Mouthful, Muut and HyperComments but trying them all out was too time-consuming for what commenting was worth to me. It also explains why some static websites even send readers to Twitter if they have something to say, though I have not followed this way of working.

For searching, I added a JavaScript/JSON self-hosted component to the transport website, and it works well. However, it adds to the size of what a browser needs to download. That is not a major issue for desktop browsers, but the situation with mobile browsers is such that it has a sizeable effect. Testing with PageSpeed and Lighthouse highlighted this, even if I left things as they are. The solution works well in any case.

One thing that I have yet to work out is how to edit or add content while away from home. Editing files using an SSH connection is as much a possibility as setting up a Hugo publishing setup on a laptop. After that, there is the question of using a tablet or phone, since content management systems make everything web based. These are points that I have yet to explore.

As is natural with a code-based solution, there is a learning curve with Hugo. Reading a book provided some orientation, and looking on the web resolved many conundrums. There is good documentation on the project website, while forum discussions turn up on many a web search. Following any research, there was next to nothing that could not be done in some way.

Migration of content takes some forethought and took quite a bit of time, though there was an opportunity to carry some housekeeping as well. The history website was small, so copying and pasting sufficed. For the transport website, I used Python to convert what was on the database into Markdown files before refining the result. That provided some automation, but left a lot of work to be done afterwards.

The results were satisfactory, and I like the associated simplicity and efficiency. That Hugo works so fast means that it can handle large websites, so it is scalable. The new Markdown method for content production is not problematical so far apart from the need to make it more portable, and it helps that I found a setup that works for me. This also avoids any potential dealbreakers that continued development of publishing platforms like WordPress or Drupal could bring. For the former, I hope to remain with the Classic Editor indefinitely, but now have another option in case things go too far.

Building a sitemap in XML

24th November 2022While there are many tools that will build XML site maps, there is some satisfaction to be had in creating your own. This is in spite of there being a multitude of search engine optimisation plugins for content management systems like WordPress or what is built into static site generators like Hugo. Sometimes, building your own allows for added simplicity and that is shared with recent efforts in WordPress theme development.

The sitemap XML protocol is simple enough to offer a short coding project. The basis was what Hugo generates and I used Python to create the XML files. The only libraries that I needed were configparser, SQLAlchemy and pandas. the first two of these allowed databases to be queried and the last on the list was used for data processing. Otherwise, it was a case of using what is built into the Python language like file writing and looping.

Once the scripts were ready, they could be uploaded to web servers and executed by scheduled jobs using CRON to keep things up to date. along the way, I also uncovered a way to publicise the locations of the sitemap files to search engine bots using robots.txt. The structure of the instruction is the following:

User-agent: *

Sitemap: sitemap.xml

This means that it announces to all bots the location of the sitemap file. In my case, I always included the full URL for the XML file and that clearly varies by website location.

A look at the Julia programming language

19th November 2022Several open-source computing languages get mentioned when talking about working with data. Among these are R and Python, but there are others; Julia is another one of these. It took a while before I got to check out Julia because I felt the need to get acquainted with R and Python beforehand. There are others like Lua to investigate too, but that can wait for now.

With the way that R is making an incursion into clinical data reporting analysis following the passage of decades when SAS was predominant, my explorations of Julia are inspired by a certain contrariness on my part. Alongside some small personal projects, there has been some reading in (digital) book form and online. Concerning the latter of these, there are useful tutorials like Introduction to Data Science: Learn Julia Programming, Maths & Data Science from Scratch or Julia Programming: a Hands-on Tutorial. Like what happens with R, there are online versions of published books available free of charge, and they include Julia Data Science and Interactive Visualization and Plotting with Julia. Video learning can help too and Jane Herriman has recorded and shared useful beginner’s guides on YouTube that start with the basics before heading onto more advanced subjects like multiple dispatch, broadcasting and metaprogramming.

This piece of learning has been made of simple self-inspired puzzles before moving on to anything more complex. That differs from my dalliance with R and Python, where I ventured into complexity first, not least because of testing them out with public COVID data. Eventually, I got around to doing that with Julia too though my interest was beginning to wane by then, and Julia’s abilities for creating multipage PDF files were such that PDF Toolkit was needed to help with this. Along the way, I have made use of such packages as CSV.jl, DataFrames.jl, DataFramesMeta, Plots, Gadfly.jl, XLSX.jl and JSON3.jl, among others. After that, there is PrettyTables.jl to try out, and anyone can look at the Beautiful Makie website to see what Makie can do. There are plenty of other packages creating graphs such as SpatialGraphs.jl, PGFPlotsX and GRUtils.jl. For formatting numbers, options include Format.jl and Humanize.jl.

So far, my primary usage has been with personal financial data together with automated processing and backup of photo files. The photo file processing has taken advantage of the ability to compile Julia scripts for added speed because just-in-time compilation always means there is a lag before the real work begins.

VS Code is my chosen editor for working with Julia scripts, since it has a plugin for the language. That adds the REPL, syntax highlighting, execution and data frame viewing capabilities that once were added to the now defunct Atom editor by its own plugin. While it would be nice to have a keyboard shortcut for script execution, the whole thing works well and is regularly updated.

Naturally, there have been a load of queries as I have gone along and the Julia Documentation has been consulted as well as Julia Discourse and Stack Overflow. The latter pair have become regular landing spots on many a Google search. One example followed a glitch that I encountered after a Julia upgrade when I asked a question about this and was directed to the XLSX.jl Migration Guides where I got the information that I needed to fix my code for it to run properly.

There is more learning to do as I continue to use Julia for various things. Once compiled, it does run fast like it has been promised. The syntax paradigm is akin to R and Python, but there are Julia-specific features too. If you have used the others, the learning curve is lessened but not eliminated completely. This is not an object-oriented language as such, but its functional nature makes it familiar enough for getting going with it. In short, the project has come a long way since it started more than ten years ago. There is much for the scientific programmer, but only time will tell if it usurped its older competitors. For now, I will remain interested in it.

Self hosting Google fonts

14th November 2022One thing that I have been doing across my websites is to move away from using Google web fonts using the advice on the Switching.Software website. While I have looked at free web font directories like 1001 Free Fonts or DaFont, they do not have the full range of bolding and character sets that I desire so I opted instead for the Google Webfonts Helper website. That not only offered copies of what Google has but also created a portion of CSS that I could add to a stylesheet on a website, making things more streamlined. At the same time, I also took the opportunity to change some of the fonts that were being used for sake of added variety. Open Sans is good but there are other acceptable sans-serif options like Mulish or Nunita as well, so these got used.

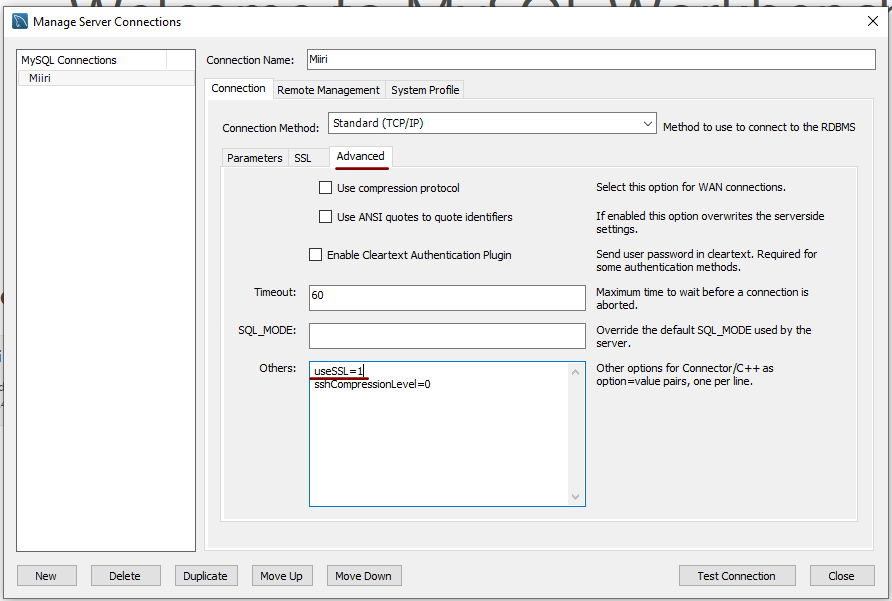

Disabling the SSL connection requirement in MySQL Workbench

7th November 2022A while ago, I found that MySQL Workbench would only use SSL connections and that was stopping it from connecting to local databases so I looked for a way to override this. The cure was to go to Database > Manage Connections… in the menus for the application’s home tab. in the dialogue box that appeared, I chose the connection of interest and went to the Advanced panel under Connection and removed the line useSSL=1 from the Others field. The screenshot below shows you what things look like before the change is made. Naturally, the best practice would be to secure a remote database connection using SSL so this approach is best reserved for remote non-production databases. However, it may be that this does not happen now but I thought I would share this in case the problem persists for anyone.